What is website architecture?

Website architecture comprises a set of parameters and standard checks that ensure a website is SEO-friendly from an architectural perspective. The key benefit of a better architecture is that link juice flows properly throughout the website. It also ensures that no hidden crawl errors disrupt SERP visibility in the long run.

The Analysis

Chapter 1: URL Structure Analysis

Type a: Navigational Page URL analysis

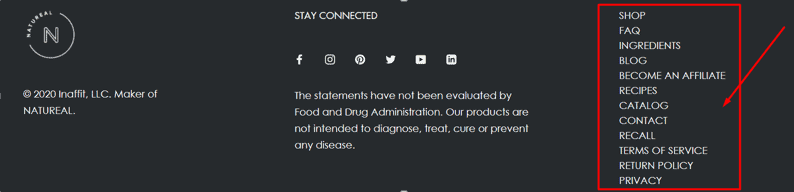

Navigational pages are the primary and important secondary pages of a given website. Examples of navigational pages for this particular campaign are given below:

Observation:

SHOP: https://www.natu-real.com/collections/weight-loss-supplements

OUR STORY: https://www.natu-real.com/pages/our-story

RECIPES: https://www.natu-real.com/pages/recipes

As we can see, every navigational page contains an extra slug, such as “/pages” or “/collections”, before the main permalink.

Recommendation:

- The pages should not contain any extra slugs such as /pages, /collections, etc. These extra slugs reduce link equity and link juice flow

- Once the URL changes are made, a 301 redirect should be set up from the old URL to the new URL. For example, suppose the new URL for the RECIPES page is https://www.natu-real.com/recipes

Then we need a 301 redirect from https://www.natu-real.com/pages/recipes to https://www.natu-real.com/recipes

This will prevent SEO juice losses!
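The old-to-new URL mapping described above can be sketched in Python. This is a minimal illustration, not a platform-specific implementation: the helper names `flatten_url` and `build_redirect_map` are hypothetical, and the script only prepares the list of 301 redirects, which would still need to be configured on the web server or CMS.

```python
from urllib.parse import urlsplit, urlunsplit

# Extra path prefixes to strip, taken from the observation above.
EXTRA_PREFIXES = ("/pages/", "/collections/")


def flatten_url(url: str) -> str:
    """Return the URL with a leading /pages/ or /collections/ segment removed."""
    parts = urlsplit(url)
    path = parts.path
    for prefix in EXTRA_PREFIXES:
        if path.startswith(prefix):
            path = "/" + path[len(prefix):]
            break
    return urlunsplit((parts.scheme, parts.netloc, path, parts.query, parts.fragment))


def build_redirect_map(old_urls):
    """Map each old URL to its flattened URL, skipping URLs that do not change."""
    redirects = {}
    for old in old_urls:
        new = flatten_url(old)
        if new != old:
            redirects[old] = new
    return redirects


old_urls = [
    "https://www.natu-real.com/collections/weight-loss-supplements",
    "https://www.natu-real.com/pages/our-story",
    "https://www.natu-real.com/pages/recipes",
]
redirect_map = build_redirect_map(old_urls)
for old, new in redirect_map.items():
    print(f"301: {old} -> {new}")
```

Keeping the mapping in one place makes it easier to review every redirect before it goes live and to confirm that no old URL is left without a target.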

Type b: BLOG Page URL analysis

Here are a few tips to keep in mind before optimizing a blog page URL:

- A blog page URL should always be free from any additional slug

- Blog page URLs should be as short as possible

- Blog page URLs must contain at least one keyword that describes the topic of the blog; this creates a relevancy signal for web crawlers

- Blog page URLs must be free from any stop words

Observation:

We opened one of the blog pages and spotted this URL: https://www.natu-real.com/blogs/news/5-super-easy-n-effective-weight-loss-exercises-you-should-start-today-for-faster-results

The TOPIC of the Page is: 5 ‘Super-Easy n Effective’ Weight Loss Exercises You Should Start Today For Faster Results

Recommendation:

The new URL should be:

https://www.natu-real.com/blogs/5-effective-weight-loss-exercises

- The above URL is short

- The above URL gets straight to the point of the topic

- The above URL is free from any stop words

- The above URL contains the main keyword
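The four checks above can be automated with a small script. The following is a rough sketch under stated assumptions: the stop-word list is a short illustrative sample, the six-word limit for "short" is an arbitrary threshold, and "weight loss exercises" is assumed to be the main keyword. The function name `check_blog_slug` is hypothetical.

```python
from urllib.parse import urlsplit

# A small illustrative stop-word list (an assumption; extend it as needed).
STOP_WORDS = {"a", "an", "and", "n", "the", "for", "of", "to", "in", "on", "with", "you", "should"}


def check_blog_slug(url: str, keyword: str, max_words: int = 6) -> dict:
    """Check the last path segment of a blog URL against the tips above."""
    slug = urlsplit(url).path.rstrip("/").split("/")[-1]
    words = slug.split("-")
    keyword_slug = keyword.lower().replace(" ", "-")
    return {
        "slug": slug,
        "is_short": len(words) <= max_words,  # max_words is an assumed threshold
        "stop_words": [w for w in words if w in STOP_WORDS],
        "has_keyword": keyword_slug in slug,
    }


old_report = check_blog_slug(
    "https://www.natu-real.com/blogs/news/5-super-easy-n-effective-weight-loss-exercises-you-should-start-today-for-faster-results",
    keyword="weight loss exercises",
)
new_report = check_blog_slug(
    "https://www.natu-real.com/blogs/5-effective-weight-loss-exercises",
    keyword="weight loss exercises",
)
print(old_report)
print(new_report)
```

With these assumptions, the original slug is flagged as too long and as containing stop words, while the recommended slug passes all three checks.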

Chapter 2: FLAT Structure Analysis / Crawl-Depth Analysis

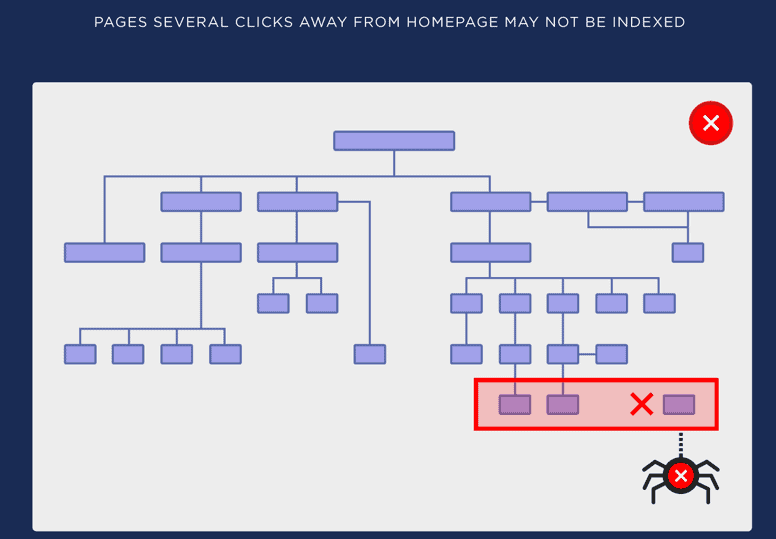

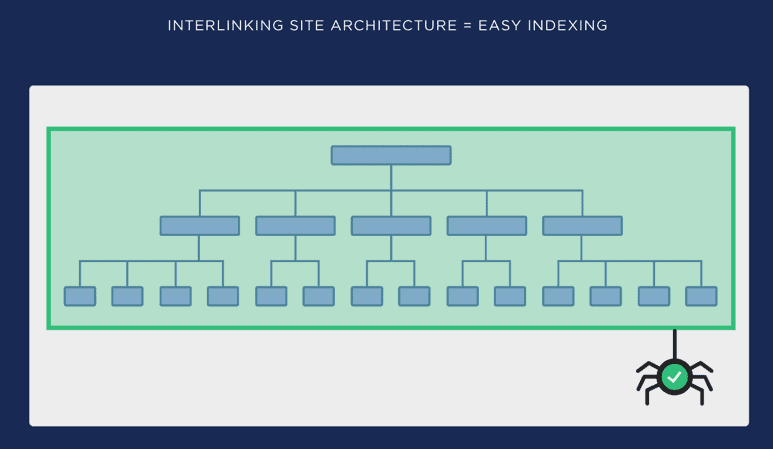

Flat architecture is simply a site structure in which a user can reach any given page in four clicks or fewer from the home page. This is assessed through crawl-depth analysis, which measures the number of clicks a user needs to reach a particular page from the home page.

Diagrammatically, it’s represented as under:

Observation:

- Open Screaming Frog Spider

- Scan your Website

- Click on “Visualizations”, then click on “Crawl Tree Graph”

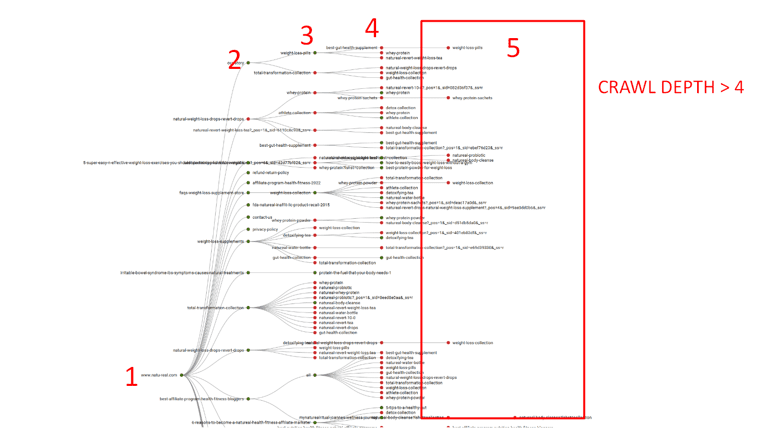

As seen in the above tree graph, we can easily check the crawl depth, marked as 1, 2, 3, 4 and 5. All the pages that fall under “5” should be optimized to reduce their depth to 4 or less.

Recommendation:

- Make a list of all the pages that fall under “Crawl Depth > 4”

- Either include these pages in an HTML sitemap linked from the home page, or connect them using pillar internal linking strategies, to reduce the crawl depth
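Crawl depth is the shortest click path from the home page, which a breadth-first search over the internal link graph can compute. The sketch below assumes the link graph has already been collected (for example, from a crawler's export of internal links); the `links` dictionary and its paths are made-up sample data, and the function names are hypothetical.

```python
from collections import deque


def crawl_depths(links: dict, home: str) -> dict:
    """Breadth-first search: minimum number of clicks from the home page to each page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def pages_too_deep(depths: dict, max_depth: int = 4) -> list:
    """List the pages whose crawl depth exceeds max_depth."""
    return sorted(url for url, depth in depths.items() if depth > max_depth)


# Hypothetical link graph: each page links only to the next one in a chain.
links = {
    "/": ["/a"],
    "/a": ["/b"],
    "/b": ["/c"],
    "/c": ["/d"],
    "/d": ["/e"],
}
before = pages_too_deep(crawl_depths(links, "/"))
print(before)  # "/e" sits at depth 5

# Simulate adding an HTML sitemap that is linked from the home page.
links["/"].append("/sitemap")
links["/sitemap"] = ["/e"]
after = pages_too_deep(crawl_depths(links, "/"))
print(after)  # "/e" is now two clicks away
```

The second run illustrates the recommendation: linking a deep page from an HTML sitemap that sits one click from the home page brings its depth down to 2.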

Chapter 3: Hierarchical Analysis

Type a: Orphan Page analysis

Orphaned pages are pages that are cut off from the rest of the pages of the website. In other words, such pages have a high crawl depth, are buried deep inside the website’s architecture, and tend to show poor user responses such as high bounce rates.

Observation:

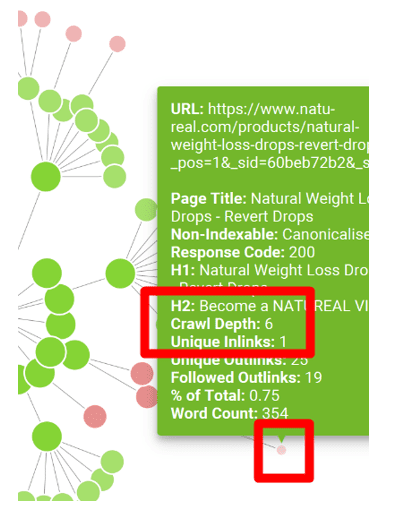

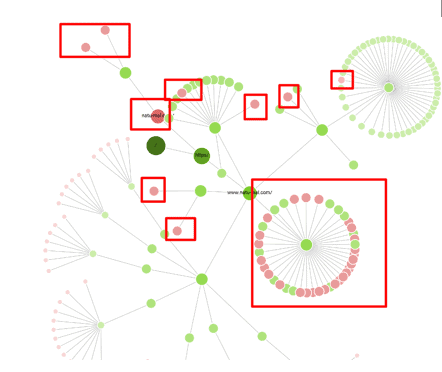

- Click on “Visualizations” and click “Force-Directed Crawl Diagram”.

You will see something as shown below:

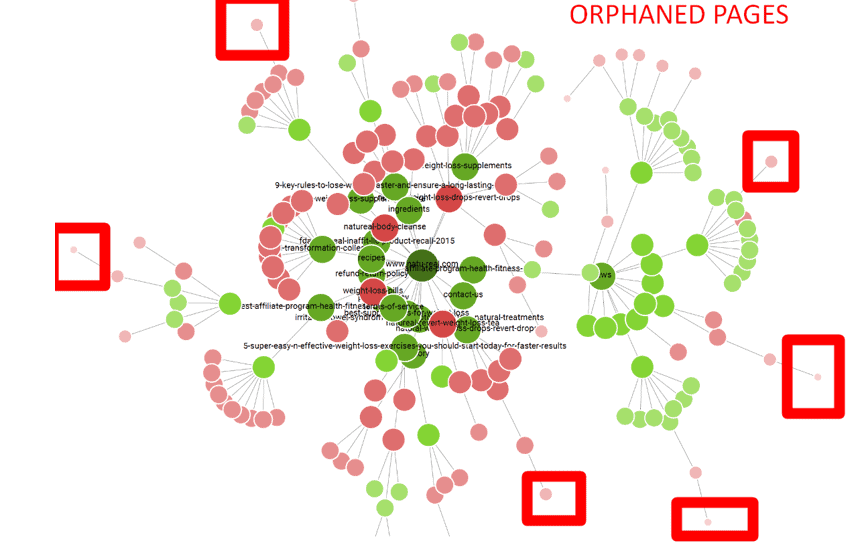

- As seen in the above screenshot, the URLs (nodes) marked with red boxes can be treated as orphaned, as they have a high crawl depth and are buried deep down.

- For example, I have chosen one node (URL). As you can see from the screenshot below, both the URL and its crawl depth can be identified.

P.S.: Please note that these pages are different from the ones you can spot using the simple crawl-tree depth view. The “Force-Directed Crawl Diagram” lets you visualize the outermost nodes, which are the pages buried deep down that do not link back to other pages.

Recommendation:

- Make a list of all end NODES

- If you find these pages are not valuable, then you can delete them

- If you find they are valuable, then you can merge them into a relevant page using the Merger technique as seen here: https://thatware.co/merge-pages-to-gain-rank/

- Lastly, you can also include them in the HTML sitemap and use a better interlinking strategy to reduce the depth.
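Listing the end nodes can also be done programmatically once the internal link graph is available. The sketch below is illustrative only: it treats an end node as a page that links out to no other page, and it additionally flags known URLs that receive no internal links at all. The sample `links` and `known_urls` data, and both function names, are hypothetical.

```python
def end_nodes(links: dict) -> list:
    """Pages that appear in the graph but link out to no other page."""
    all_pages = set(links)
    for targets in links.values():
        all_pages.update(targets)
    # A self-link does not count as linking to another page.
    return sorted(p for p in all_pages if not any(t != p for t in links.get(p, [])))


def pages_without_inlinks(links: dict, known_urls, home: str) -> list:
    """Known URLs (e.g. from the XML sitemap) that no other page links to."""
    linked = {t for src, targets in links.items() for t in targets if t != src}
    return sorted(u for u in known_urls if u not in linked and u != home)


# Hypothetical internal link graph: page -> pages it links to.
links = {
    "/": ["/shop", "/blog"],
    "/shop": ["/", "/product-1"],
    "/blog": ["/", "/post-1"],
    "/post-1": ["/post-1"],
    "/product-1": ["/shop"],
}
# Hypothetical list of all URLs known to exist on the site.
known_urls = {"/", "/shop", "/blog", "/post-1", "/product-1", "/old-landing-page"}

print(end_nodes(links))
print(pages_without_inlinks(links, known_urls, home="/"))
```

Each URL in either list is then a candidate for the delete, merge, or interlink decision described above.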

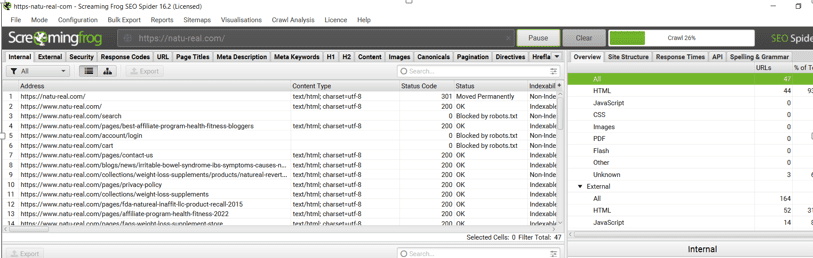

Type b: Non-index-able Page analysis

Crawlers can often run into serious problems with certain URLs, where an unexpected indexing error prevents those URLs from being indexed. With the help of Screaming Frog Spider, such pages can be tracked down, giving you a clean architecture.

Observation:

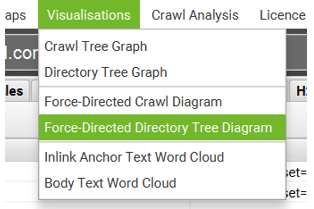

- Click on “Visualizations” and click “Force-Directed Directory Tree Diagram”.

You will see something as shown below. Please note that the deeper the red color, the more serious the indexing issue with those URLs. You can now filter and shortlist all the bright red nodes.

Recommendation:

- Make a list of all the nodes that appear bright red

- Verify whether those pages serve meaningful value for your audience. If yes, implement an HTML sitemap or internal linking to reduce the crawl depth. You can also check these URLs for mobile-friendliness issues

- If you feel that the identified URLs are not valuable to your audience, then you can delete them. This helps reduce crawl budget wastage and index bloat.
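Before deciding whether to keep or delete a flagged URL, it helps to know why it is non-indexable. The sketch below checks a few commonly cited causes (a non-200 status code, a noindex directive, a canonical pointing elsewhere, and a robots.txt block). It is an assumption-laden illustration: the dictionary keys such as `status_code` and `meta_robots` are hypothetical field names, not the column names of any particular crawler export.

```python
def indexability_issues(page: dict) -> list:
    """Return the reasons a page is likely non-indexable (empty list if none found)."""
    issues = []
    status = page.get("status_code")
    if status != 200:
        issues.append(f"status code {status}")
    robots = (page.get("meta_robots") or "").lower()
    if "noindex" in robots:
        issues.append("noindex directive")
    canonical = page.get("canonical")
    if canonical and canonical != page["url"]:
        issues.append("canonicalised to another URL")
    if page.get("blocked_by_robots_txt", False):
        issues.append("blocked by robots.txt")
    return issues


# Hypothetical crawl records.
pages = [
    {"url": "https://www.example.com/a", "status_code": 200,
     "meta_robots": "index, follow", "canonical": "https://www.example.com/a"},
    {"url": "https://www.example.com/b", "status_code": 404,
     "meta_robots": "noindex", "canonical": "https://www.example.com/a"},
]
report = {p["url"]: indexability_issues(p) for p in pages}
for url, issues in report.items():
    print(url, issues or "indexable")
```

A page with several issues at once usually needs more than an internal-linking fix, so the report can help prioritise which URLs to repair and which to remove.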

Chapter 4: Spotting Issues within the XML Sitemap

An XML sitemap is a very important element when it comes to SEO and website crawling. It is the file that web crawlers read directly to get a complete list of the URLs present within a given website. Any issues within it will hamper the overall crawling of the website and decrease the efficiency of SEO.

Observation:

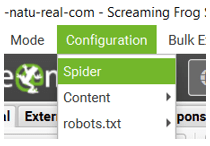

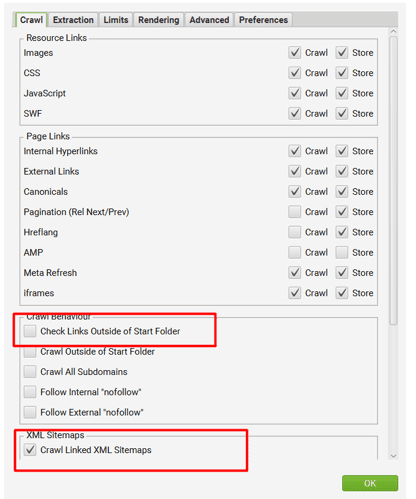

- Click on “Configuration” and select “Spider”

- Keep the settings as shown below. Basically, you need to untick all options and allow only the XML sitemap to be crawled.

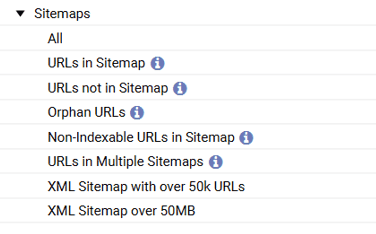

- Once done, you will be able to see all the issues pertaining to the XML sitemap, as shown in the screenshot below:

Recommendation:

- URLs not in Sitemap = If any URL is missing from the XML sitemap, please add it, as leaving a URL out of the XML sitemap does not help that URL get indexed.

- Orphan URLs = Any URLs under this segment should be immediately removed!

- Non-Indexable URLs in Sitemap = Any URLs under this segment should be immediately removed!

- URLs in Multiple Sitemaps = Any URLs under this segment should be immediately removed!

- XML Sitemap with over 50K URLs = As a general rule, a single .xml file can contain at most 50K URLs. If you have more than 50K URLs, create another XML sitemap. For example, suppose you have a website with 100K pages.

Then www.example.com/sitemap1.xml can contain 50K URLs

And www.example.com/sitemap2.xml can contain 50K URLs

- XML Sitemap over 50 MB = As a general rule, a single .xml file must not exceed 50 MB. If it does, create another sitemap to lower the load and size.
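Several of the checks above reduce to set comparisons between the crawled URLs and the URLs listed in each sitemap, and the 50K limit can be handled by chunking. The following is a minimal sketch, not a replacement for the Screaming Frog report: it assumes "Orphan URLs" means URLs listed in a sitemap but not found by the crawl, it does not check file size, and the function names and sample data are hypothetical.

```python
from collections import Counter

MAX_URLS_PER_SITEMAP = 50_000


def audit_sitemaps(crawled_urls, sitemaps: dict) -> dict:
    """Compare crawled URLs with sitemap contents (sitemap name -> list of URLs)."""
    # Count in how many different sitemaps each URL appears.
    counts = Counter(url for urls in sitemaps.values() for url in set(urls))
    in_sitemaps = set(counts)
    crawled = set(crawled_urls)
    return {
        "not_in_sitemap": sorted(crawled - in_sitemaps),
        "orphan_urls": sorted(in_sitemaps - crawled),  # assumed meaning, see above
        "in_multiple_sitemaps": sorted(u for u, n in counts.items() if n > 1),
        "oversized_sitemaps": sorted(
            name for name, urls in sitemaps.items() if len(urls) > MAX_URLS_PER_SITEMAP
        ),
    }


def split_urls(urls: list, chunk_size: int = MAX_URLS_PER_SITEMAP) -> list:
    """Split a URL list into chunks that each fit in one sitemap file."""
    return [urls[i:i + chunk_size] for i in range(0, len(urls), chunk_size)]


# Hypothetical audit data.
crawled = ["https://www.example.com/", "https://www.example.com/a", "https://www.example.com/b"]
sitemaps = {
    "sitemap1.xml": ["https://www.example.com/", "https://www.example.com/a"],
    "sitemap2.xml": ["https://www.example.com/a", "https://www.example.com/old"],
}
audit = audit_sitemaps(crawled, sitemaps)
print(audit)

# The 100K-page example from above: two files of 50K URLs each.
all_urls = [f"https://www.example.com/page-{i}" for i in range(100_000)]
chunks = split_urls(all_urls)
print([len(chunk) for chunk in chunks])
```

Each chunk would then be written to its own file, such as sitemap1.xml and sitemap2.xml in the example above.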