Why Is Advanced SEO Becoming More Important Every Day?

With over 20 billion pages indexed by Google each day (source), the competition is getting tougher every day. In response, search engines use artificial intelligence and machine learning to rank websites in the SERP. As a result, SEO service providers all over the world are finding it difficult to rank highly competitive keywords on page one.

Artificial intelligence has made search engines smarter than ever, and they get better every day at analyzing the intent behind content. Common technologies in use include semantic search, information retrieval, data mining, text mining, and natural language processing. You can read more about semantic search here.

Ever thought of taking your SEO website analysis or website ranking to a whole new level? If so, you need to follow some advanced SEO techniques that can take your business to that level. Here are some strategies and techniques that will help your online business.

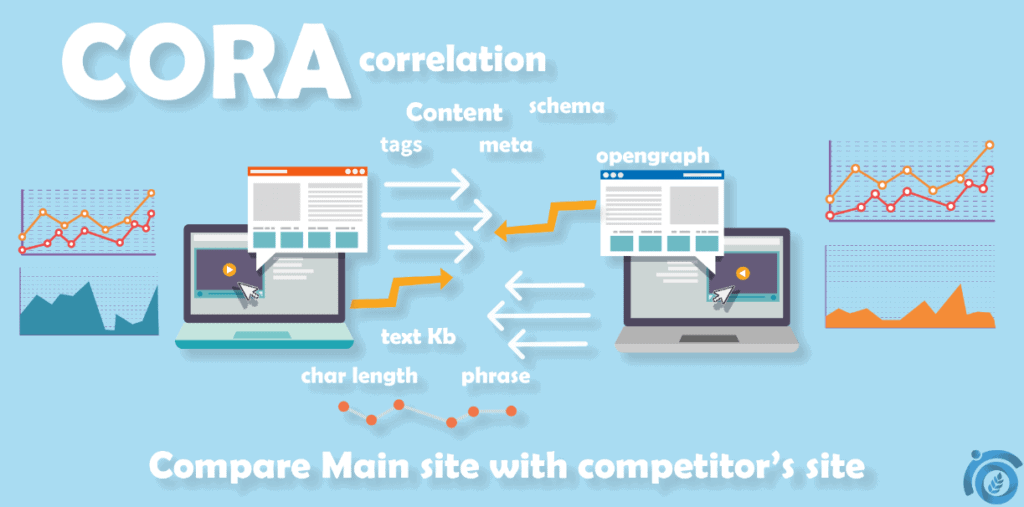

1. CORRELATION SEO (CORA): –

CORA is correlation software provided by https://seotoollab.com/ (ThatWare has also developed its own correlation code, written in the R programming language).

Now, the question is . . .

What is CORA, and How Can Correlation SEO Help?

CORA is a tool for performing correlation SEO. Correlation can be very helpful in ranking websites on the first page of the SERP. If you are struggling with website rankings, your SEO consultant should suggest running a correlation analysis on your landing pages.

Now, one might be thinking – What is correlation?

According to Wikipedia, correlation means a mutual interrelation, relationship, or connection between two or more things. Applied to SEO, specialists can correlate your website with all the other websites found in the SERP for a given location and search term.

If you want to know in detail about correlation seo, read here.

Professional SEO consultants correlate the parameters of your website with those of the other websites found in the SERP for the given search query. Here is the list of parameters (528 in total).

Two correlation coefficients are used: Pearson's and Spearman's. The process correlates the parameters one by one and produces a correlation output, which suggests whether each parameter should be added, removed, or left unchanged.

For example, if the output is a strong positive correlation, no further action is required. If, on the other hand, the output is weak or negative, you need to add to that parameter as calculated from the Pearson's and Spearman's test values.
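To make the two coefficients concrete, here is a minimal Python sketch. It is not CORA's or ThatWare's code; it simply computes Pearson's r and Spearman's rho between one on-page parameter and ranking. The data is hypothetical: word counts of the top five results for a query, paired with a made-up "ranking score" (5 for position 1 down to 1 for position 5) so that a positive value means the parameter rises as ranking improves.

```python
from math import sqrt

def pearson(xs, ys):
    """Pearson's r: covariance of xs and ys divided by the product of their spreads."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("a constant series has no defined correlation")
    return cov / (spread_x * spread_y)

def ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        for k in range(start, end + 1):
            result[order[k]] = (start + end) / 2 + 1
        start = end + 1
    return result

def spearman(xs, ys):
    """Spearman's rho: Pearson's r computed on the ranks instead of raw values."""
    return pearson(ranks(xs), ranks(ys))

# Hypothetical data for one query: word count of the top five results.
word_counts = [2400, 2100, 1800, 1500, 900]
ranking_score = [5, 4, 3, 2, 1]  # 5 = position 1 ... 1 = position 5

print(f"Pearson r:    {pearson(word_counts, ranking_score):.3f}")
print(f"Spearman rho: {spearman(word_counts, ranking_score):.3f}")
```

Spearman works on ranks, so it captures any consistently rising relationship, while Pearson measures how close the relationship is to a straight line. A real analysis would use far more than five results and check statistical significance before acting on the output.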

In short, correlation helps optimize your landing pages by correlating all the on-page factors found in the SERP. This gives greater exposure and faster ranking, so every SEO professional should keep it on their to-do list.

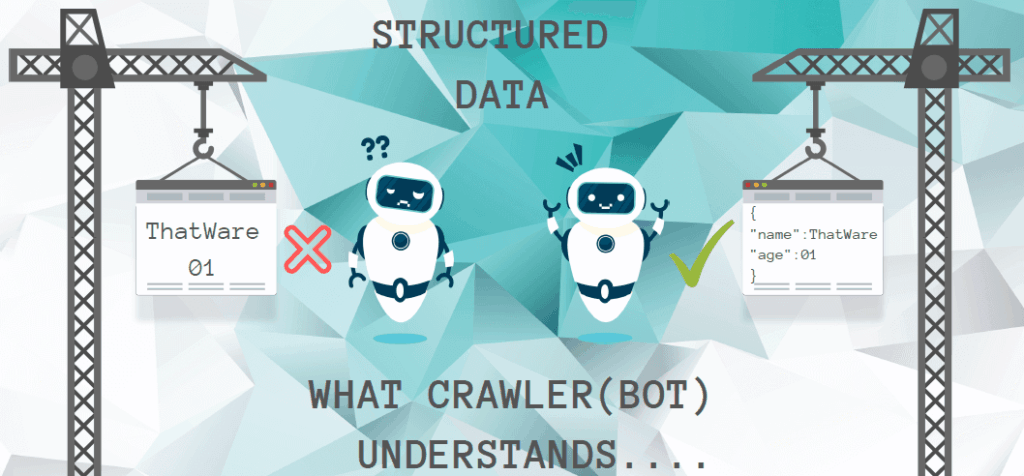

2. STRUCTURED DATA (SCHEMA MARKUP): –

Structured data is becoming more important every day. It helps search engine crawlers better understand the intent behind the content. Top SEO agencies use various structured data formats, such as Microdata, RDFa, and JSON-LD.

If you want to know in detail about structured data, read here.

In practice, SEO experts consider the JSON-LD format especially convenient: because it is linked data, very little markup is lost during site migrations. JSON-LD markup can also be injected dynamically.

Now the question is . . .

What is structured data markup?

Structured data markup is code added to the HTML of a landing page. It helps search engine crawlers better understand the content of the page, and it also helps the page appear with rich snippets in the SERP.

Here are some of the things you can include in your markup: –

● Your business address

● Your phone number

● The services which you offer

● Opening and closing hours

● Additional information about your business

● Social profiles

● Areas Served

● Author information

● Logo

● Images

● Videos

● Details about the services which you offer

● Any other specific information you would like to highlight

By including this information in your markup, you help search engines understand your intent better. The better you represent your intent, the more likely you are to rank faster.
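As an illustration, the Python sketch below builds JSON-LD markup for several items from the list above (address, phone number, opening hours, social profiles, areas served, and logo) using schema.org's LocalBusiness type. The property names come from schema.org; the business details are hypothetical placeholders, and the output should be checked with a validator such as Google's Rich Results Test before it goes live.

```python
import json

def local_business_jsonld(name, address, phone, opening_hours, social_profiles,
                          areas_served, logo_url):
    """Build a schema.org LocalBusiness object wrapped in a JSON-LD script tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "LocalBusiness",
        "name": name,
        "address": {"@type": "PostalAddress", **address},
        "telephone": phone,
        "openingHours": opening_hours,  # schema.org text format, e.g. "Mo-Fr 09:00-18:00"
        "sameAs": social_profiles,      # URLs of the business's social profiles
        "areaServed": areas_served,
        "logo": logo_url,
    }
    return ('<script type="application/ld+json">\n'
            + json.dumps(data, indent=2)
            + "\n</script>")

# Hypothetical business details.
snippet = local_business_jsonld(
    name="Example Plumbing Co.",
    address={
        "streetAddress": "123 Main Street",
        "addressLocality": "Springfield",
        "postalCode": "12345",
        "addressCountry": "US",
    },
    phone="+1-555-0100",
    opening_hours=["Mo-Fr 09:00-18:00", "Sa 10:00-14:00"],
    social_profiles=["https://www.facebook.com/example",
                     "https://www.linkedin.com/company/example"],
    areas_served=["Springfield", "Shelbyville"],
    logo_url="https://www.example.com/logo.png",
)
print(snippet)
```

Keeping the markup in a separate script block, rather than in attributes scattered through the HTML, is what makes JSON-LD easy to generate and move between templates.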

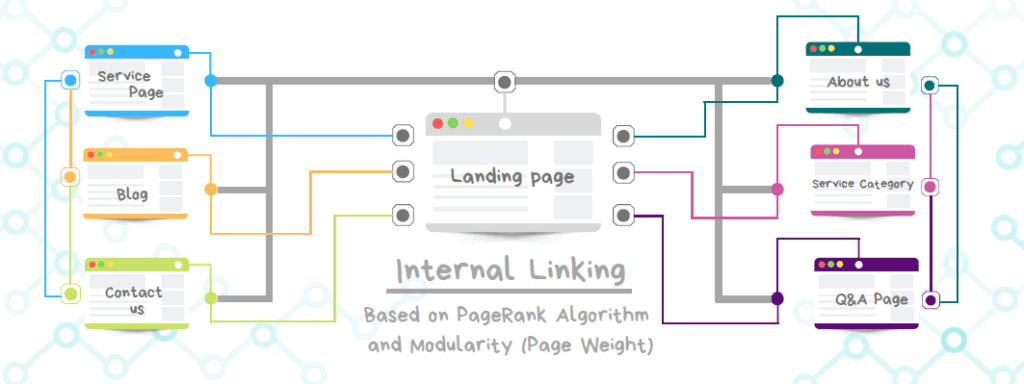

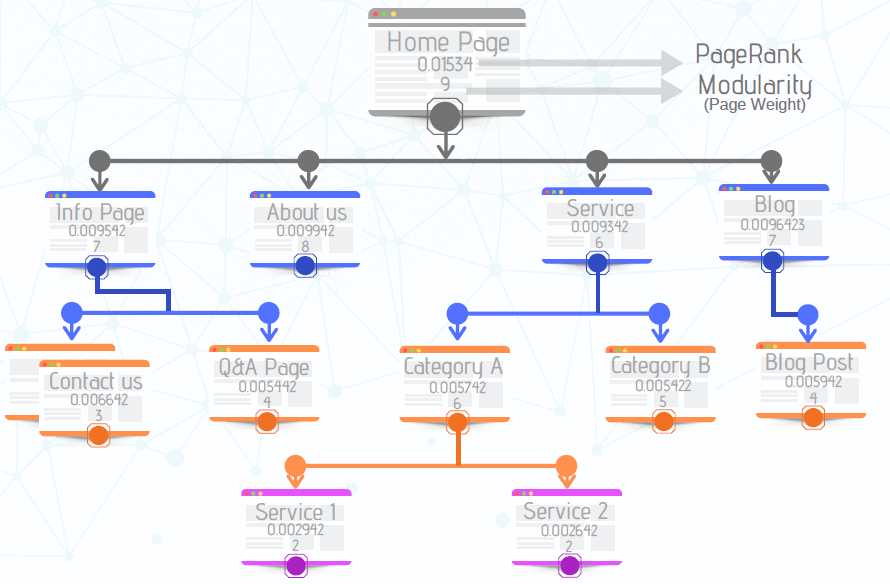

3. INTERNAL LINKING VISUALIZATION BASED ON PAGERANK AND MODULARITY (USING GEPHI): –

Everyone knows how important internal linking is. With the right internal linking architecture, you can boost a page's PageRank significantly. Conversely, a bad internal linking architecture can drain away link juice and PageRank. If you are thinking . . .

What is internal linking?

Internal linking simply means linking one page of a website to another page of the same website using meaningful anchor text.

Gephi is one of the best visualization tools for getting a clear overview of a site's internal linking architecture. Most professional SEO services include internal linking optimization, but the conventional techniques no longer make much difference. Internal linking optimization should follow a definite, somewhat technical protocol.

Theoretically speaking, an optimal landing page should have the lowest modularity and highest PageRank value. Confused…?

It works like this: suppose you have a landing page that you want to rank well in the SERP. You should aim to keep its PageRank as high as possible relative to the homepage, and keep its modularity as low as possible in absolute terms, without comparing it to any other page.

If you want to know in detail about internal linking optimization using Gephi, read here.

A high PageRank indicates good ranking potential, while low modularity means less link juice is wasted. A proper internal linking strategy is therefore essential for a healthy flow of link juice (link equity).

This not only helps your pages rank well in the SERP but also improves your organic exposure. It is especially helpful for SERP visibility in e-commerce SEO.
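Gephi computes PageRank for you, but a small sketch shows what the number means. The Python function below runs the standard power-iteration form of PageRank over a hypothetical internal link graph, using a damping factor of 0.85 (the value commonly used in PageRank examples). Modularity, which Gephi derives from community detection, is left out to keep the sketch short.

```python
def pagerank(links, damping=0.85, max_iter=100, tol=1e-10):
    """Compute PageRank by power iteration.

    links maps each page URL to the list of internal pages it links to.
    Returns a dict of scores that sum to 1.
    """
    pages = set(links)
    for targets in links.values():
        pages.update(targets)
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}

    for _ in range(max_iter):
        # Rank held by pages without outgoing links is spread evenly over all pages.
        dangling = sum(rank[p] for p in pages if not links.get(p))
        new_rank = {p: (1 - damping) / n + damping * dangling / n for p in pages}
        for page, targets in links.items():
            for target in targets:
                new_rank[target] += damping * rank[page] / len(targets)
        converged = sum(abs(new_rank[p] - rank[p]) for p in pages) < tol
        rank = new_rank
        if converged:
            break
    return rank

# Hypothetical internal link graph of a small site.
site = {
    "/": ["/services", "/blog", "/contact"],
    "/services": ["/", "/services/seo-audit"],
    "/services/seo-audit": ["/services", "/contact"],
    "/blog": ["/", "/services/seo-audit"],
    "/contact": ["/"],
}

for page, score in sorted(pagerank(site).items(), key=lambda kv: -kv[1]):
    print(f"{score:.3f}  {page}")
```

Pages that collect links from many well-linked pages rise to the top; in this toy graph the homepage does, and adding internal links toward a landing page is the lever for raising that page's score.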

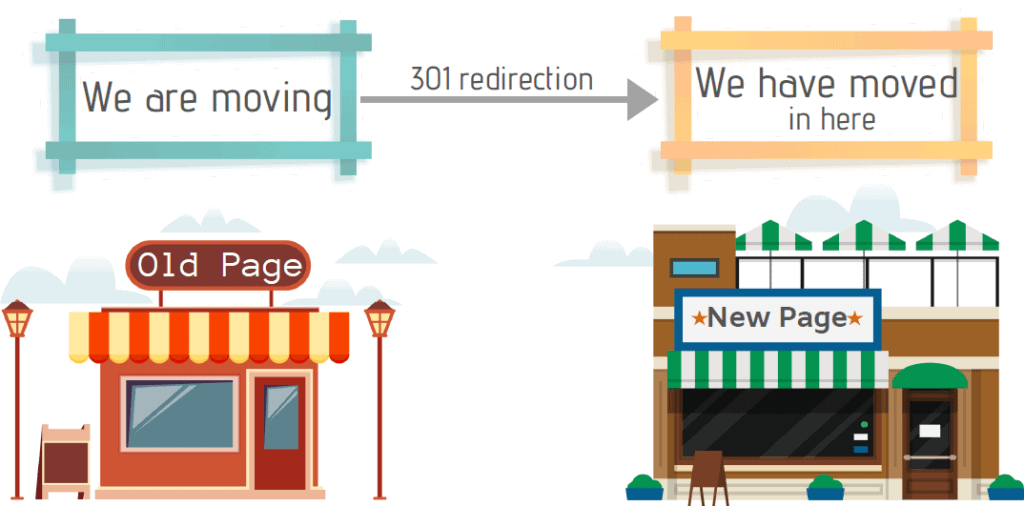

4. HANDLING REDIRECTS IN A CONTROLLED WAY: –

Redirects such as 301 and 302 are crucial and matter a lot in SEO. Using a 301 redirect to fix 4XX errors can be part of white-hat SEO, but done the wrong way it can create a lot of issues. Wondering what those issues could be…?

A professional SEO company should always use 301 redirects wisely.

In other words, if you 301 redirect a page to another page with lower topical trust flow or no relevance, your website can be penalized and your visibility scores might decrease. For example, suppose you have “page A”, which covers dogs, and you want to redirect it to “page B”, which covers pizza.

In this case, a 301 redirect will badly hurt your PageRank, because the topical trust flow (relevance) of the two pages doesn't correlate.

If you want to know in detail about 3XX redirects, read here.

In short, 301 redirects should only be applied where the topical trust and relevance of the two pages correlate well.
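One way to put this rule into practice is to score each planned redirect for topical overlap before writing it. The Python sketch below uses Jaccard similarity between hypothetical sets of topic terms as a simple stand-in for topical trust flow (which is a Majestic metric and is not computed here). It emits Apache "Redirect 301" lines for pairs above an assumed threshold of 0.3 and flags the rest for manual review.

```python
def jaccard(a, b):
    """Share of topic terms two pages have in common: 0 = nothing, 1 = identical."""
    a, b = set(a), set(b)
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def plan_redirects(redirects, topics, threshold=0.3):
    """Turn relevant old -> new pairs into Apache 'Redirect 301' lines; flag the rest."""
    rules, flagged = [], []
    for old, new in redirects.items():
        score = jaccard(topics.get(old, ()), topics.get(new, ()))
        if score >= threshold:
            rules.append(f"Redirect 301 {old} {new}")
        else:
            flagged.append((old, new, round(score, 2)))
    return rules, flagged

# Hypothetical topic terms per URL (e.g. each page's main keywords).
topics = {
    "/dog-food-guide": {"dog", "food", "nutrition", "puppy"},
    "/best-dog-food": {"dog", "food", "brands", "nutrition"},
    "/dog-training": {"dog", "training", "obedience"},
    "/pizza-recipes": {"pizza", "dough", "recipe"},
}
redirects = {
    "/dog-food-guide": "/best-dog-food",
    "/dog-training": "/pizza-recipes",
}

rules, flagged = plan_redirects(redirects, topics)
print("\n".join(rules))
print("Review before redirecting:", flagged)
```

The dog-to-pizza redirect from the example above scores 0 and lands in the review list instead of becoming a rule.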

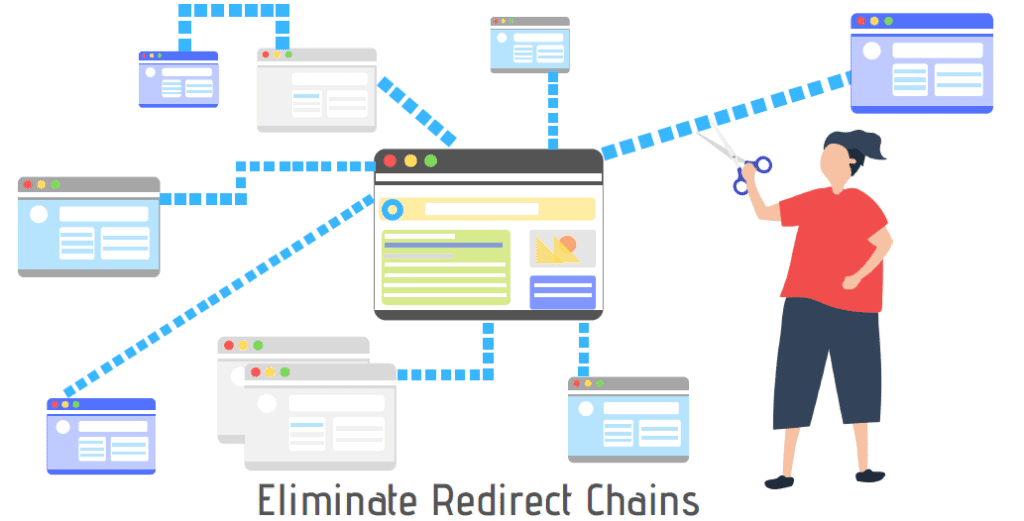

5. ELIMINATION OF REDIRECT CHAINS: –

Redirect chains are a serious threat and can cause poor website performance. A redirect chain occurs when a URL passes through several redirects before reaching its final destination (for example, page A redirects to page B, which redirects to page C). Chains can create crawl traps and badly hurt your rankings, so any that are found need to be eliminated.
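Redirect chains and loops can be found programmatically from a redirect map, for example one exported from a crawler. The Python sketch below follows each redirect to its end, reports chains (more than one hop) and loops, and returns a flattened map so every old URL can point straight at its final destination. The URLs are hypothetical.

```python
def resolve_redirects(redirects):
    """Follow every redirect to its end point.

    redirects maps a source URL to the URL it redirects to. Returns:
      flattened: source -> final destination, for sources that resolve
      chains:    full paths that take more than one hop
      loops:     sources whose redirects never reach a final page
    """
    flattened, chains, loops = {}, [], []
    for start in redirects:
        path, current = [start], start
        while current in redirects:
            current = redirects[current]
            if current in path:          # revisiting a URL means a loop
                loops.append(start)
                break
            path.append(current)
        else:                            # loop ended normally: reached a final page
            flattened[start] = current
            if len(path) > 2:            # more than one hop is a chain
                chains.append(path)
    return flattened, chains, loops

# Hypothetical redirect map.
redirect_map = {
    "/old-services": "/services-2019",
    "/services-2019": "/services",
    "/blog-old": "/blog",
    "/loop-a": "/loop-b",
    "/loop-b": "/loop-a",
}

flattened, chains, loops = resolve_redirects(redirect_map)
for path in chains:
    print("Chain:", " -> ".join(path), "| point", path[0], "straight at", path[-1])
print("Loops:", loops)
```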

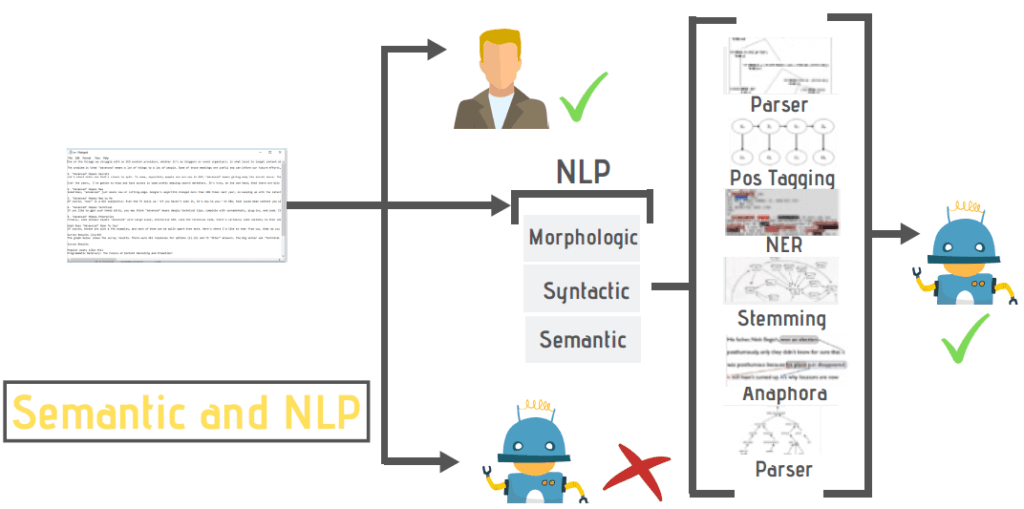

6. SEMANTIC SEARCH, NATURAL LANGUAGE PROCESSING & INFORMATION RETRIEVAL: –

Search engines use artificial intelligence to predict the intent behind content. Here are answers to some basic questions, in case you are wondering . . .

What is semantic search?

Semantic search means searching based on the intent behind a topic rather than only the literal words used. It doesn't necessarily involve prediction; rather, it is used to specify the intent behind a particular topic.

What is natural language processing?

Natural language processing (NLP) is a field of computer science and artificial intelligence concerned with the interaction between computers and human (natural) language.

What is information retrieval?

Information retrieval is a branch of computer science, often combined with artificial intelligence, that deals with extracting information from varied sources in response to either keyword-based or natural-language queries.

Semantic search engineering is a part of natural language processing whose theory and practical applications are built on modern information retrieval techniques. In other words, information retrieval can help you better specify the intent behind your content, and the better you represent that intent, the more likely your website is to gain visibility in the SERP.

This is a highly advanced topic. If you want to know about semantic search and information retrieval in detail, read here.

7. VOICE SEARCH OPTIMIZATION (VSO): –

SEO service providers, including those serving small businesses, should start optimizing web pages for voice search.

This is because more and more people use voice search on their smartphones, tablets, and other high-tech gadgets. The use of voice assistants is also rising rapidly as people increasingly depend on voice search to find information on the internet. Now one must be thinking . . .

What is voice search optimization?

Voice search optimization, popularly known as VSO, is a technique for optimizing web pages to rank higher in the SERP when someone searches for information using a voice search feature. Now the question is . . .

How do you optimize for voice search?

There are many strategies for optimizing pages for voice search. Some methods that actually work are described below.

● Make sure to prepare and prioritize your knowledge graph in order to strengthen your web page for VSO

● Leverage your entity search and entity graph

● Focus on long-tail keywords, i.e., conversational keywords. Don't make them too long; keep them to the point

● Mark up your web page with “question and answer” structured data

● Optimize your landing page for position zero (the featured snippet)

● Try creating custom FAQ pages

● Make sure authority signals are passed

Voice search is becoming the future of SEO. Therefore, this is something which one should take into serious consideration. If you want to know in detail about voice search optimization, read here.
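For the “question and answer” structured data mentioned in the list above, schema.org's FAQPage type is the usual choice for a page of frequently asked questions. The Python sketch below generates that markup; the questions reuse definitions from this article, and whether a search engine shows any rich result for the markup is at the search engine's discretion.

```python
import json

def faq_jsonld(pairs):
    """Build schema.org FAQPage markup from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2)

# Conversational questions a voice user might ask (answers taken from this article).
faqs = [
    ("What is voice search optimization?",
     "A technique for optimizing web pages to rank higher when someone "
     "searches using a voice search feature."),
    ("What is click-through rate?",
     "The number of clicks a listing receives divided by its impressions, "
     "expressed as a percentage."),
]
print('<script type="application/ld+json">')
print(faq_jsonld(faqs))
print("</script>")
```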

8. OPTIMIZE FOR CLICK THROUGH RATE: –

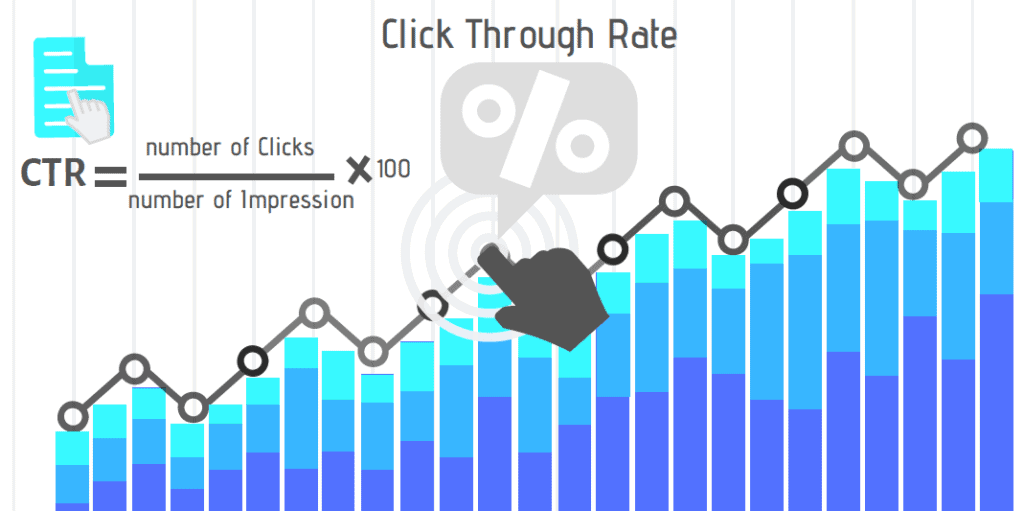

Most SEO experts know that the RankBrain algorithm increasingly shapes the SERP. Click-through rate (CTR) is one of the most important factors in RankBrain optimization.

In other words, if your CTR is good, you are more likely to rank higher in the SERP.

Now you must be thinking . . . What is click through rate?

Click-through rate (CTR) is the number of clicks your listing receives divided by the total number of impressions it obtains, expressed as a percentage.

In other words, CTR = (clicks / impressions) × 100
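The same formula as a small Python function, with a guard for listings that have no impressions yet (returning 0 in that case is an assumption, not a standard). The numbers in the usage line are hypothetical.

```python
def ctr(clicks, impressions):
    """Click-through rate as a percentage: (clicks / impressions) * 100."""
    if impressions == 0:
        return 0.0  # no impressions yet, so no meaningful CTR
    return clicks * 100 / impressions

# Hypothetical Search Console numbers for one listing.
print(f"CTR: {ctr(50, 2000):.2f}%")  # prints "CTR: 2.50%"
```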

How do you optimize your landing page for CTR?

Here are some ways to improve your CTR: –

● Make use of rich snippets

● Proper utilization of structured data

● Use power words in titles and descriptions

● Use compelling metadata

● Use sitelinks extension via markups

● Use rating schema

● Use silo architecture

If you want to know in detail about CTR optimization, read here.

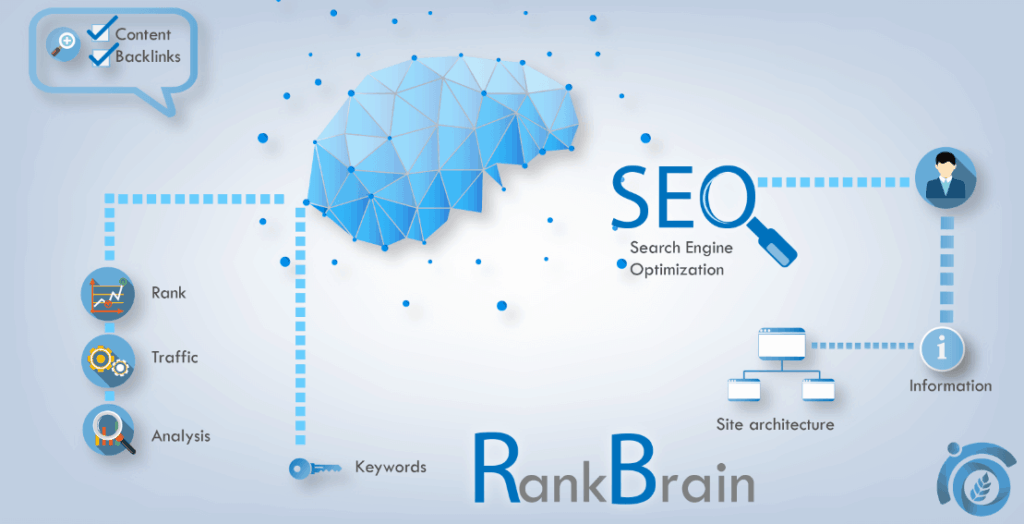

9. OPTIMIZING FOR RANKBRAIN ALGORITHM: –

SEO service companies place a lot of importance on optimizing websites for the RankBrain algorithm.

Now the question is… What is the RankBrain algorithm?

RankBrain is an algorithm confirmed by Google that uses artificial intelligence at its core. It helps identify whether a page clearly addresses the intent behind a given query, and pages whose intent is well specified are ranked accordingly.

How do you optimize for the RankBrain algorithm?

One golden rule for optimizing your pages for RankBrain is to properly specify the intent behind each landing page. Several methods can be used to do this, but one recommended way is structured data. ThatWare has developed its own RankBrain structured data to help specify the intent behind content.

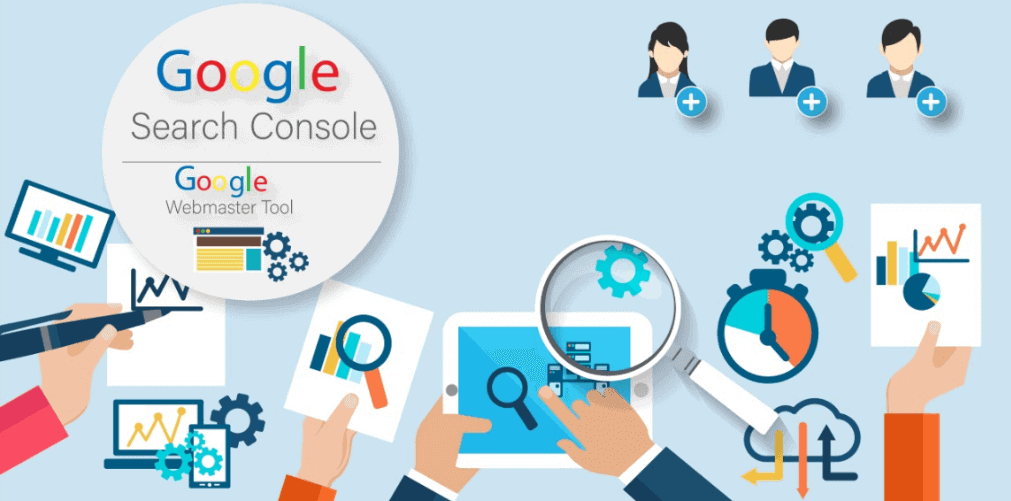

10. GOOGLE WEBMASTER TOOLS (AKA SEARCH CONSOLE) OPTIMIZATION: –

Google Webmaster Tools (GWT), also known as Google Search Console, is one of the most important tools in search engine optimization.

With GWT, you can make sure your website complies with Google's webmaster policies and guidelines. You can also get a clear picture of how your website is performing on the search engine results page.

Furthermore, it reports a wide range of information about your website, including structured data errors, AMP errors, robots.txt errors, indexing issues, sitemap issues, coverage issues, mobile issues, and much more.

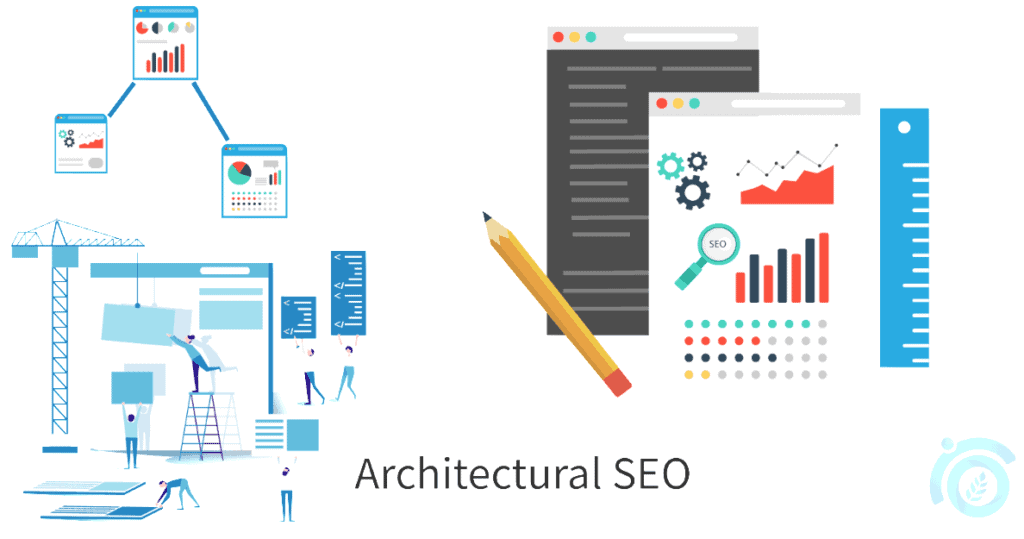

11. ARCHITECTURAL SEO: –

If you approach any top SEO firm, the first thing an expert will suggest is making sure your website's architecture is search-friendly.

In other words, a strong SEO foundation requires a strong site architecture. You might be wondering . . .

What is Architectural SEO?

Architectural SEO is the part of search engine optimization in which optimizations and fixes are made to the website's architecture. This ensures the architecture is free from crawling, indexing, and rendering issues.

If you want to know in detail about Architectural SEO, read here.

Some of the basic elements that deserve attention in architectural SEO are as follows: –

● Rendering issues – as in client-side rendering, server-side rendering or isomorphic rendering

● Silo Structure – as in structuring the linking between category and relevant product pages, especially for an e-commerce website

● Sitewide Links – as in the footer, right sidebar, and left sidebar. Important landing pages shouldn't be placed in sitewide links

● Redirect Chains – no landing page should pass through multiple redirects or redirect loops

● PageRank Division – important money pages and landing pages should receive the largest share of PageRank

● In-linking Strategy – as an ideal SEO rule, an important landing page should have the most internal inbound links. This also supports a proper silo architecture.

● Proper Lead Magnet & CTA Orientation – traffic is not the only goal. Lead generation is also important, and well-placed lead magnets and call-to-action buttons will definitely help with it.

12. TECHNICAL SEO: –

No matter how much you optimize your pages for organic visibility, technical SEO will always be one of the most important factors in a successful search engine optimization campaign.

SEO marketing companies use many methods and strategies for technical fixes, but several very important areas still get overlooked. Beyond the low-hanging-fruit fixes, the following areas should also be considered when dealing with technical SEO.

● Caching issues

● Waterfall resource fixes

● .htaccess optimization

● Leverage browser caching

● Gzip compression

● Complete SEMrush site health fix

● Complete GTmetrix fix

● Complete Sitebulb Fix

● Proper Screaming Frog fix

● Minimizing ping requests

● Reduce header request

● Canonicalization

● Crawl depth fix

● Minification of JS, HTML and CSS

● Keep-alive setting

● Google PageSpeed Insights factors

● Time to first byte (TTFB) fix

● Rendering issues fix

● Image bandwidth reduction

● Sitemap errors fix

● Optimization of asynchronous resources

● Deferring of JS

● DNS fetch time reduction

● Page performance grade improvement
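To see why the Gzip compression item matters, the Python sketch below compresses a hypothetical, repetitive HTML page with the standard gzip module and compares sizes. On a live site, compression is switched on in the web server configuration (for example via .htaccess on Apache) rather than in application code; this only demonstrates the effect.

```python
import gzip

# Hypothetical, repetitive HTML page: markup like this compresses very well.
html = (
    "<!DOCTYPE html><html><head><title>Example</title></head><body>"
    + "<div class='product'><h2>Product</h2><p>Description text.</p></div>" * 200
    + "</body></html>"
).encode("utf-8")

compressed = gzip.compress(html)
saving = 100 * (1 - len(compressed) / len(html))
print(f"original: {len(html)} bytes, gzipped: {len(compressed)} bytes ({saving:.1f}% smaller)")

# The browser reverses this transparently when the server sends Content-Encoding: gzip.
assert gzip.decompress(compressed) == html
```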

13. JAVASCRIPT SEO: –

This is one of the most talked-about topics nowadays, and SEO professionals are paying a lot of attention to it. You might be thinking . . .

What is Javascript SEO?

JavaScript SEO is the part of modern search engineering concerned with getting JavaScript-driven pages to rank. The latest SEO techniques involve frameworks and technologies such as AngularJS, React, and Node.js, along with isomorphic rendering.

Many people still think JavaScript is an enemy of search engine optimization, but when it is handled properly it can also support better SERP visibility.

14. IMAGE OPTIMIZATION, IMAGE ALT TAGS AND IMAGE SERVING: –

Image optimization and image serving are among the most crucial factors in internet marketing. Many people think that compressing images will hurt their resolution and appearance.

In practice, simply reducing the file size (bandwidth) of images is enough for image optimization: fewer bytes per image means the page loading speed is not slowed down. Image ALT tags are also very important, because they help a lot with image search and with acquiring traffic from it.

Also, serving all of your website's images from a separate subdomain, such as images.example.com or static.example.com, helps with image optimization. As long as the main site's cookies are not set for the whole domain, requests to that subdomain do not carry them, so request sizes are minimized.
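Missing ALT text is easy to audit automatically. The Python sketch below uses the standard library's HTMLParser to list every image whose alt attribute is missing or empty in some hypothetical markup. An empty alt is legitimate for purely decorative images, so the output is a list to review rather than a list of errors.

```python
from html.parser import HTMLParser

class MissingAltFinder(HTMLParser):
    """Collect the src of every <img> whose alt attribute is missing or empty."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            attributes = dict(attrs)
            # A valueless attribute comes through as None, so treat it like "".
            if not (attributes.get("alt") or "").strip():
                self.missing.append(attributes.get("src", "(no src)"))

# Hypothetical page markup.
page = """
<img src="https://static.example.com/red-running-shoes.jpg" alt="Red running shoes, side view">
<img src="https://static.example.com/banner.jpg">
<img src="https://static.example.com/logo.png" alt="">
"""

finder = MissingAltFinder()
finder.feed(page)
print("Images without alt text:", finder.missing)
```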

15. TF-IDF: –

TF-IDF is a weighted score used in text mining and is part of information retrieval systems.

What is TF-IDF?

TF-IDF stands for term frequency-inverse document frequency. It weights a term by how often it appears in a document (term frequency), scaled down by how many documents in the corpus contain it (inverse document frequency).

A TF-IDF score therefore shows how important a particular term is to a document compared with the rest of its corpus. Based on many SEO reviews, TF-IDF helps a lot with SERP visibility and ranking.
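The textbook form of the score can be sketched in a few lines of Python. This version uses raw term frequency divided by document length and idf = log(N / df); real tools often use variants such as smoothed idf or log-scaled tf, and the three-page corpus is hypothetical.

```python
import math
from collections import Counter

def tf_idf(corpus):
    """Return one {term: score} dict per document in the corpus.

    tf  = occurrences of the term / total terms in the document
    idf = log(number of documents / number of documents containing the term)
    """
    docs = [text.lower().split() for text in corpus]
    n_docs = len(docs)
    # Count each term once per document to get its document frequency.
    doc_freq = Counter(term for doc in docs for term in set(doc))
    scores = []
    for doc in docs:
        counts = Counter(doc)
        scores.append({
            term: (count / len(doc)) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return scores

# Hypothetical three-page corpus.
corpus = [
    "seo audit checklist for seo beginners",
    "pizza dough recipe",
    "seo tools for technical audit",
]
for i, doc_scores in enumerate(tf_idf(corpus)):
    top = sorted(doc_scores.items(), key=lambda kv: kv[1], reverse=True)[:3]
    print(i, [(term, round(score, 3)) for term, score in top])
```

A term that appears in every document gets an idf of log(1) = 0, which is why common words score nothing, while a term concentrated in one page scores highest there.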

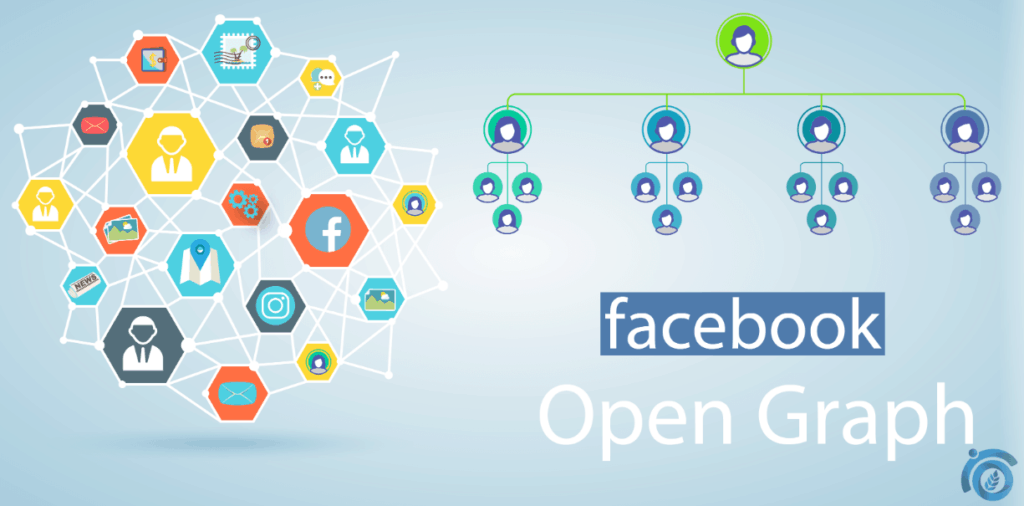

16. OPEN GRAPH PROTOCOL (OGP): –

OGP controls how your pages appear and interact with social media platforms.

What is Open Graph Protocol?

The Open Graph protocol is a set of meta properties (such as og:title and og:image) that control the rich previews shown when a page is shared on social media platforms such as Facebook, Twitter, and LinkedIn.

Social signals do matter when it comes to search engine optimization.
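The Open Graph tags themselves are plain meta elements in the page head. The Python sketch below renders the four properties the Open Graph protocol lists as required (og:title, og:type, og:url, og:image) plus an optional description, escaping values for HTML. The page details are hypothetical.

```python
from html import escape

def open_graph_tags(title, page_type, url, image, description=None):
    """Render the required Open Graph properties plus an optional description."""
    props = [
        ("og:title", title),
        ("og:type", page_type),
        ("og:url", url),
        ("og:image", image),
    ]
    if description:
        props.append(("og:description", description))
    return "\n".join(
        f'<meta property="{name}" content="{escape(value, quote=True)}" />'
        for name, value in props
    )

# Hypothetical article page.
tags = open_graph_tags(
    title="Advanced SEO & You",
    page_type="article",
    url="https://www.example.com/advanced-seo",
    image="https://www.example.com/og-image.png",
)
print(tags)
```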

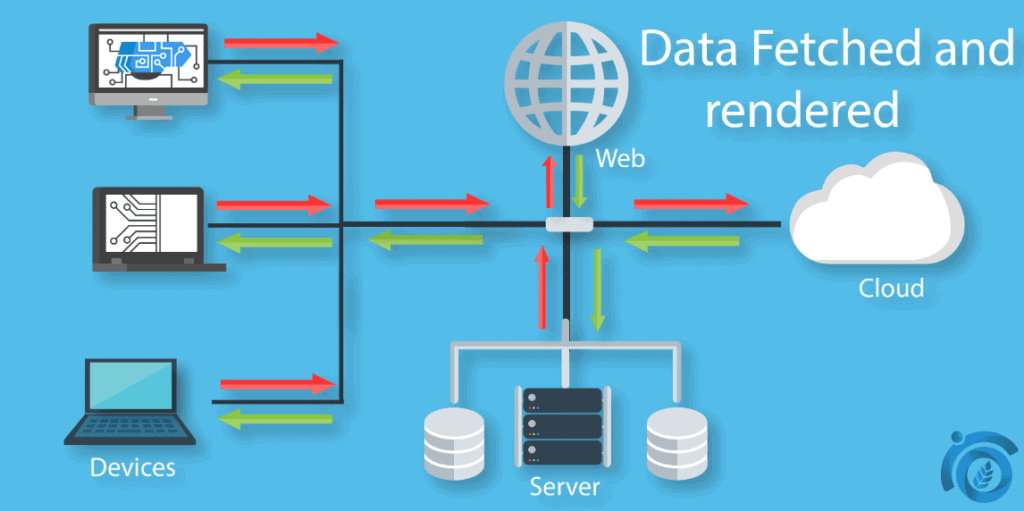

17. FETCHING AND RENDERING: –

Fetch and render is an important Google Webmaster Tools feature that shows how Googlebot sees a particular landing page compared with how visitors see it.

In practice, visitors and Googlebot should see exactly the same content. If Googlebot cannot see all of the content on a landing page, that is a serious threat to search engine optimization.

Improper or poor fetching by Googlebot can indicate crawling or rendering issues, which can badly hurt your search rankings. Any issue that prevents Googlebot from fetching a page properly should be fixed immediately.

18. ELIMINATION OR MERGING OF ZOMBIE PAGES: –

Zombie pages badly hurt website performance and can also decrease your SERP visibility.

What are Zombie Pages?

Zombie pages are pages on your website that get the least engagement and provide no value at all. The best way to fix them is to merge them into useful pages.

If zombie pages are eliminated or merged in a controlled way then this can help in improving SERP visibility. If you want to know in detail about Zombie Pages, read here.

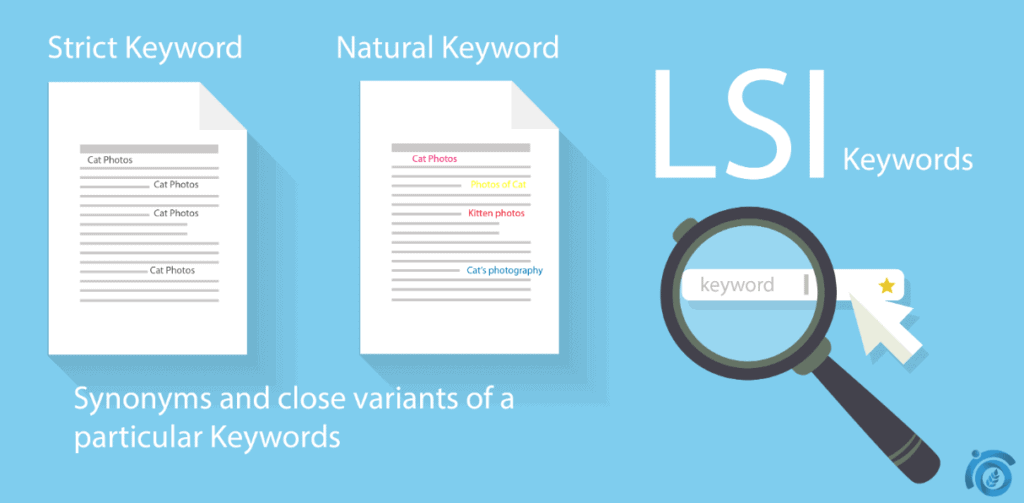

19. FIRST TIER, SECOND TIER LSI, AND CONCEPT KEYWORDS: –

First-tier and second-tier LSI keywords, along with concept keywords, can give search rankings a massive boost.

What are First-Tier LSI Keywords?

First-tier LSI keywords are synonyms of the main focus keyword. They help a lot with semantic optimization.

What are Second-Tier LSI Keywords?

Second-tier LSI keywords are the related queries shown for a particular query. They come in handy for specifying intent.

What are Concept Keywords?

Concept keywords are keywords predicted by natural language processing tools. They are produced when a set of queries is broken down to match the intent, and they are used extensively by the RankBrain algorithm.

Optimizing a page for all three types of keywords above can give it massive search appearance and SERP exposure.

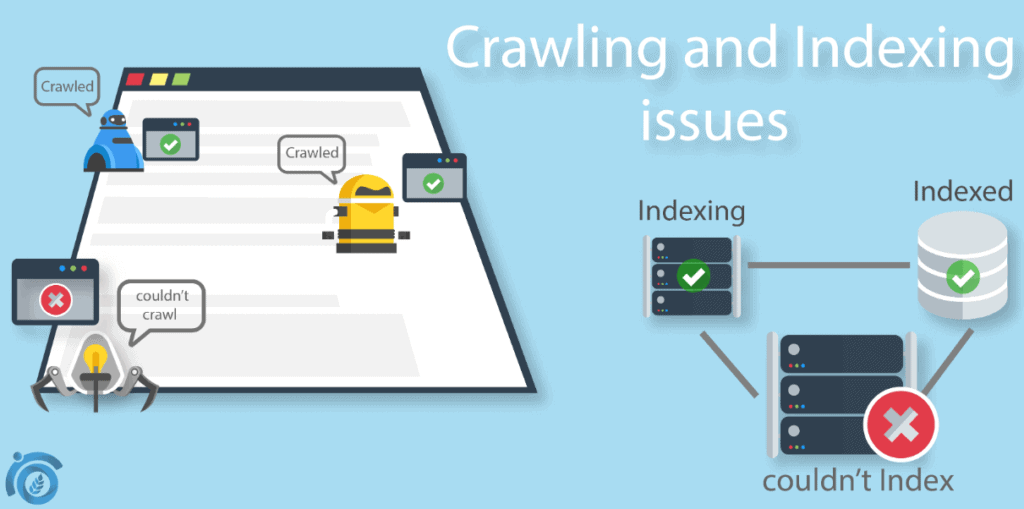

20. CRAWLING AND INDEXING ISSUES FIX (COVERAGE ISSUES): –

Crawling and indexing are two of the most basic yet important terms in search engine optimization. Before digging deeper, let us understand what both terms mean.

What is Crawling?

Crawling is the very first step: a software program known as a crawler bot analyzes the contents of a page and stores them in the search engine's database.

For instance, Google uses Googlebot, also known as a spider. The spider crawls a page and stores its information in Google's database, where it is then queued for indexing. A spider can crawl all kinds of information, such as titles, descriptions, content, images, links, and other page factors.

What is Indexing?

Once crawling is complete, a page's information is stored in Google's database. Later, depending on the query and more than 200 ranking factors, this information is shown by Google on Search Engine Result Pages (SERP).

For proper website ranking, there should be no crawling or indexing issues. Quality SEO services should treat fixing crawling and indexing issues as a top priority, because any fault here can badly hurt search rankings. Below are some of the factors that affect crawling and indexing: –

● Eliminating Index bloat

● Minimizing crawl budget wastage

● Making sure that the URLs are search friendly

● No Query strings

● Static resources as far as practical

● Slower page load

● JS issues

● Rendering issues

● Scripts blockage

● Architectural issues

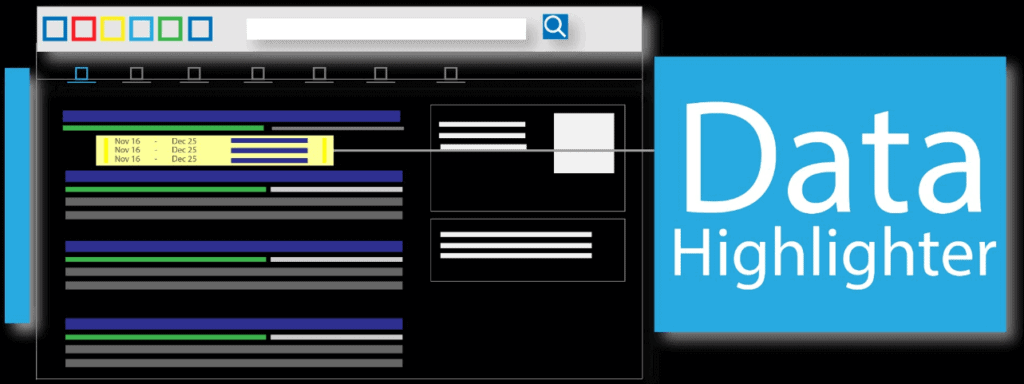

21. DATA HIGHLIGHTER (EVENTS MARKUP): –

Data Highlighter is a Google Webmaster Tools feature used to show Google the pattern of the structured data on your pages.

It can be used to highlight important details about a particular page, but most importantly, it is extremely useful for highlighting your business's events.

If your business has several upcoming events, you can highlight them with Data Highlighter. These events can then appear as rich snippets in the SERP, which can improve your CTR and give you massive SERP exposure.

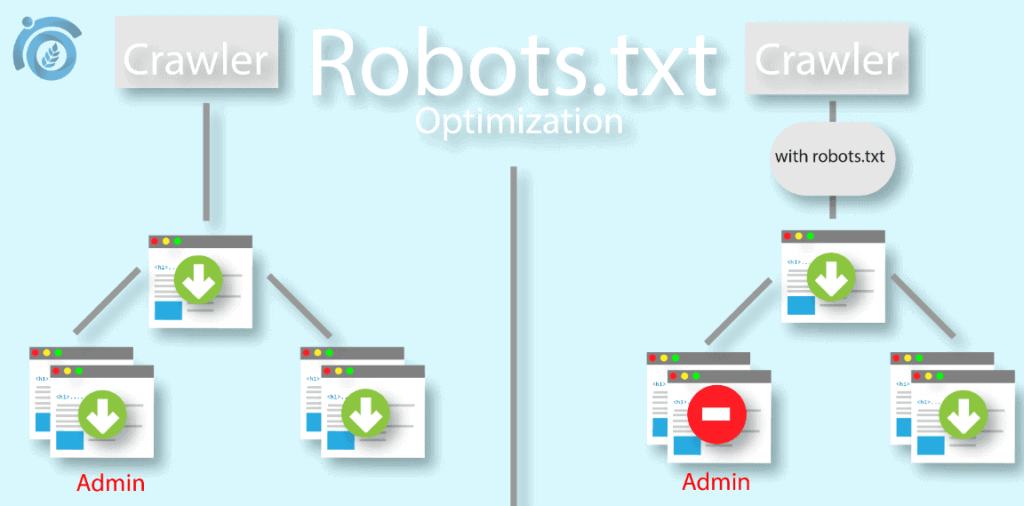

22. ROBOTS.TXT OPTIMIZATION: –

This is one of the most important steps for having a successful search campaign.

What is Robots.txt?

Robots.txt is a text file uploaded to the root directory (public_html) of your website. It controls which parts of your website web crawlers may access and crawl.

Proper robots.txt optimization ensures that you don't waste your crawl budget and that the important areas of your website are crawled and indexed well. Robots.txt can also be used to specify the host, control ping requests, declare sitemaps, block access to resources that waste crawl budget, fix mobile rendering issues, and much more.
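Before publishing a robots.txt, it helps to test which URLs it actually blocks. The Python sketch below checks a hypothetical robots.txt with the standard library's urllib.robotparser. One caveat: that parser applies the first matching rule, while Google applies the most specific (longest) matching rule and supports wildcards, so results can differ when Allow and Disallow rules overlap.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for www.example.com.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /search
Allow: /

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for url in ["https://www.example.com/blog/advanced-seo",
            "https://www.example.com/search?q=seo",
            "https://www.example.com/wp-admin/settings"]:
    verdict = "allowed" if parser.can_fetch("Googlebot", url) else "blocked"
    print(f"{verdict:8} {url}")

print("Sitemaps:", parser.site_maps())
```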

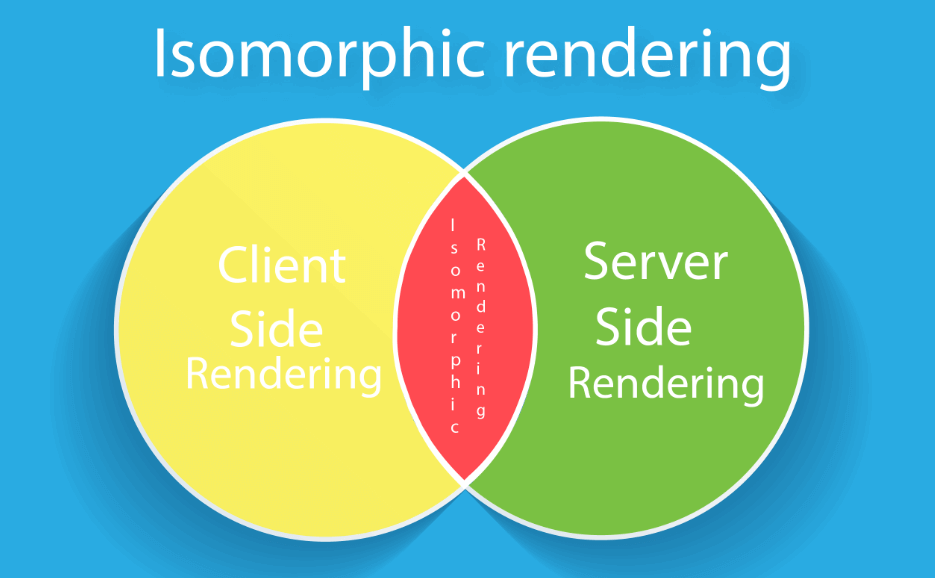

23. ISOMORPHIC RENDERING (HYBRID APPROACH): –

In the technical world, there are three types of rendering:

● Client Side Rendering (CSR): In client-side rendering, the initial request returns a page whose content depends on JavaScript that must be downloaded and executed in the browser (user end).

● Server Side Rendering (SSR): In server-side rendering, the JavaScript is executed on the server (server end), so the initial request returns the fully rendered page content.

● Isomorphic Rendering (Hybrid Approach): Isomorphic rendering is a hybrid approach. The first request is rendered on the server side, and subsequent requests are rendered on the client side.

If your website uses a CSR architecture, Googlebot may need to wait before it can access your content, especially if the JavaScript takes a long time to execute. This can hamper your website's rankings, so this type of rendering is not search-friendly.

If your website uses an SSR architecture, Googlebot receives content that has already been rendered on your server, so delays in fetching or executing JavaScript do not affect what it sees. This type of rendering is search-friendly.

If your website uses an isomorphic architecture, the initial request is handled on the server side and subsequent requests on the client side (browser). This type of rendering is very beneficial for search.

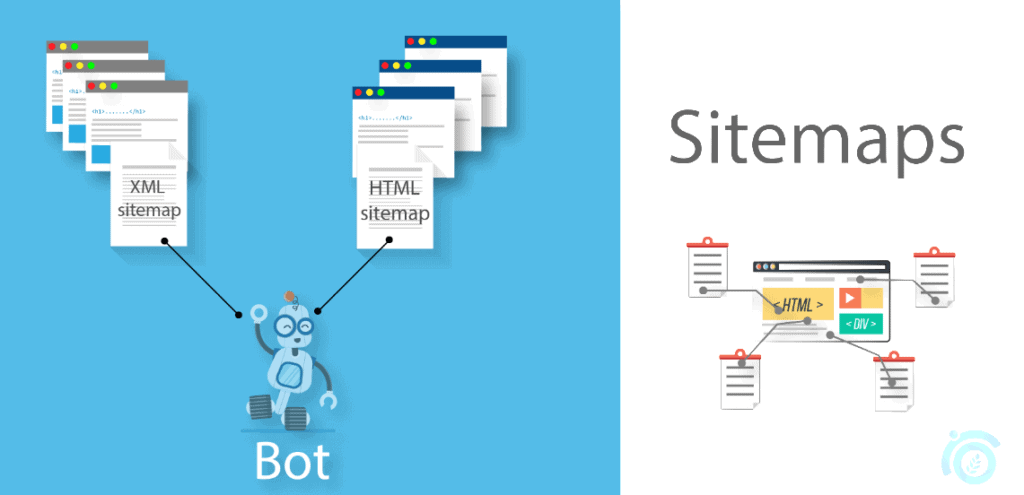

24. DIFFERENT TYPES OF SITEMAP: –

Sitemaps are an essential factor in an outstanding online campaign.

What is Sitemap?

A sitemap is an Extensible Markup Language (XML) file uploaded to the root directory (public_html). It contains information about the URLs of a website, which helps search engines learn which resources exist on the site and index them faster.

There are four common types of sitemaps a website can use: –

● Image Sitemap: This sitemap contains all the image URLs and it helps a lot with Image search.

● XML Sitemap: This sitemap contains all the landing page URLs of a particular website.

● Video Sitemap: This sitemap contains all the video URLs or video embed URLs.

● HTML Sitemap: This sitemap is meant for human visitors and contains links to all the pages on the website. An HTML sitemap also helps reduce crawl depth.
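An XML sitemap follows the sitemaps.org protocol: a urlset element in the http://www.sitemaps.org/schemas/sitemap/0.9 namespace containing one url entry per page. The Python sketch below builds one with the standard ElementTree module; the page URLs and dates are hypothetical, and in practice the list would come from your CMS or a crawl.

```python
import xml.etree.ElementTree as ET

def build_sitemap(pages):
    """Return an XML sitemap (as UTF-8 bytes) for a list of (url, lastmod) pairs."""
    urlset = ET.Element("urlset", xmlns="http://www.sitemaps.org/schemas/sitemap/0.9")
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod  # W3C date format, YYYY-MM-DD
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)

# Hypothetical landing pages.
sitemap = build_sitemap([
    ("https://www.example.com/", "2024-05-01"),
    ("https://www.example.com/services/seo-audit", "2024-04-18"),
])
print(sitemap.decode("utf-8"))
```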

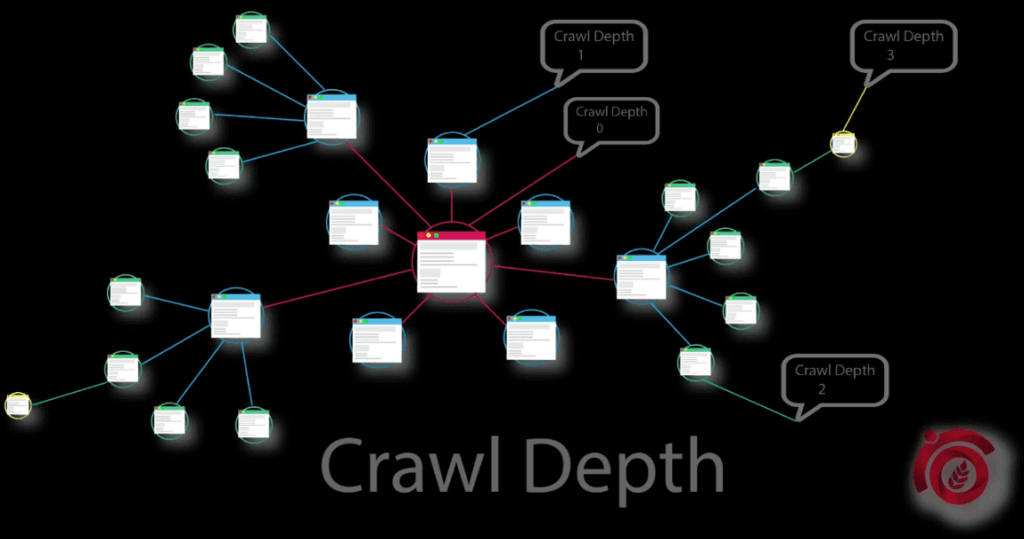

25. CRAWL DEPTH: –

This is also an important parameter in defining the advanced on-site factors of a website.

What is Crawl Depth?

Crawl depth is a number starting from zero (the homepage itself). It counts how many clicks are needed to reach a particular landing page from the homepage.

For example, suppose you need to click four times to visit a particular landing page from the homepage. If this is the case, then the crawl depth value is 4.

From a search engine optimization point of view, crawl depth should be less than three: the lower the value, the greater the ranking benefit. A low crawl depth also helps reduce crawl budget waste.
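Crawl depth can be computed with a breadth-first search from the homepage, because BFS reaches every page first along its shortest click path. The Python sketch below does this for a hypothetical internal link graph and flags pages at depth 3 or more, following the threshold above. Pages that never appear in the result are orphans with no internal links pointing to them.

```python
from collections import deque

def crawl_depths(links, home="/"):
    """Breadth-first search from the homepage: depth = fewest clicks to reach a page."""
    depth = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:  # first visit in BFS is the shortest path
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth

# Hypothetical internal link graph.
site = {
    "/": ["/services", "/blog"],
    "/services": ["/services/seo-audit"],
    "/blog": ["/blog/page/2"],
    "/blog/page/2": ["/blog/page/3"],
    "/blog/page/3": ["/blog/old-post"],
}

for page, d in sorted(crawl_depths(site).items(), key=lambda kv: kv[1]):
    flag = "  <- 3 or more clicks deep" if d >= 3 else ""
    print(f"{d}  {page}{flag}")
```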

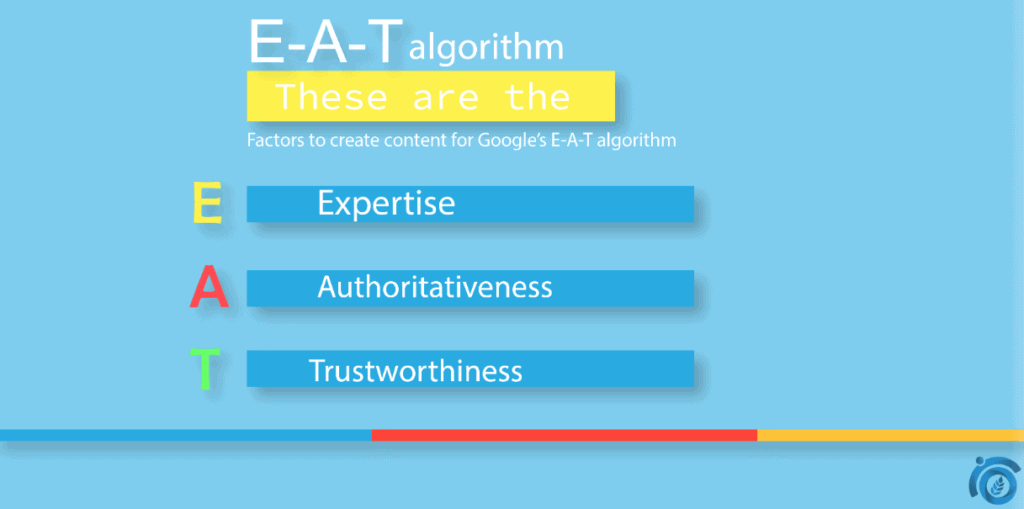

26. E.A.T SIGNALS: –

EAT is very important and is considered one of the most important ranking signals.

What is EAT in SEO?

EAT stands for Expertise, Authoritativeness, and Trustworthiness. It is part of Google's Search Quality Rater Guidelines and is also the main concept behind Google's Medic update.

If you want to optimize your website for EAT, follow the points below: –

● Make sure you have high trust flow score for your website

● Make sure you have good relevance or topical trust flow for your website

● Make sure you have authoritative referring domains pointing to your website

● Make sure you have expert authors on your website with proven experience in the same field

● Make sure you have a valid author schema markup

● Make sure you have a unique “about-us” page describing all your

● Make sure you have trusted referring IP values

● Make sure you have trusted Class C subnet IPs

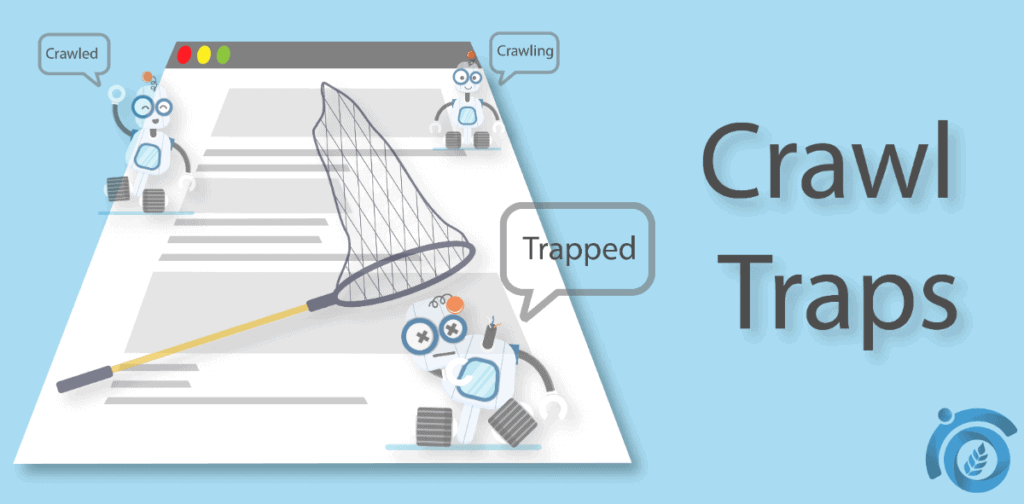

27. AVOIDING CRAWL TRAPS: –

Crawling is one of the most important steps in getting a website to rank. Fetching, indexing, rendering, correlation, and more all depend on crawling. If there is an issue with crawling, it will hamper the website's overall ranking performance.

It is therefore very important to eliminate any crawl issues that exist on a website. BUT . . .

Sometimes issues occur accidentally and affect the crawl rate or crawl budget without anyone noticing. Here is what to watch for: –

● Infinite URL structure such as example.com/11/11/11/ . . .

● Avoid dates in URLs

● Opt for static URLs as far as practical

● Avoid Zombie pages as far as practical

● Avoid Index Bloat

● Avoid auto-generated URLs as far as practical

● Avoid auto-generated pages as far as practical
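Several of these patterns can be spotted automatically in a list of crawled URLs. The Python sketch below is a simple heuristic, not a standard tool: it flags repeated path segments, very deep paths, dates in the path, and query strings. The depth limit of six segments and the date pattern are assumptions to tune for your own site.

```python
import re
from urllib.parse import urlsplit

# Matches year/month (and optional day) folders such as /2024/05/ or /2024/05/12/.
DATE_IN_PATH = re.compile(r"/(19|20)\d{2}/\d{1,2}(/\d{1,2})?/")

def crawl_trap_signals(url, max_segments=6):
    """List the warning signs a URL shows; the thresholds are illustrative only."""
    parts = urlsplit(url)
    segments = [s for s in parts.path.split("/") if s]
    signals = []
    if any(a == b for a, b in zip(segments, segments[1:])):
        signals.append("repeated path segment")
    if len(segments) > max_segments:
        signals.append("very deep path")
    if DATE_IN_PATH.search(parts.path):
        signals.append("date in URL")
    if parts.query:
        signals.append("query string")
    return signals

# Hypothetical URLs found during a crawl.
for url in [
    "https://www.example.com/11/11/11/11/",
    "https://www.example.com/2024/05/advanced-seo/",
    "https://www.example.com/shoes?color=red&sort=price",
    "https://www.example.com/services/seo-audit/",
]:
    print(url, crawl_trap_signals(url) or "OK")
```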

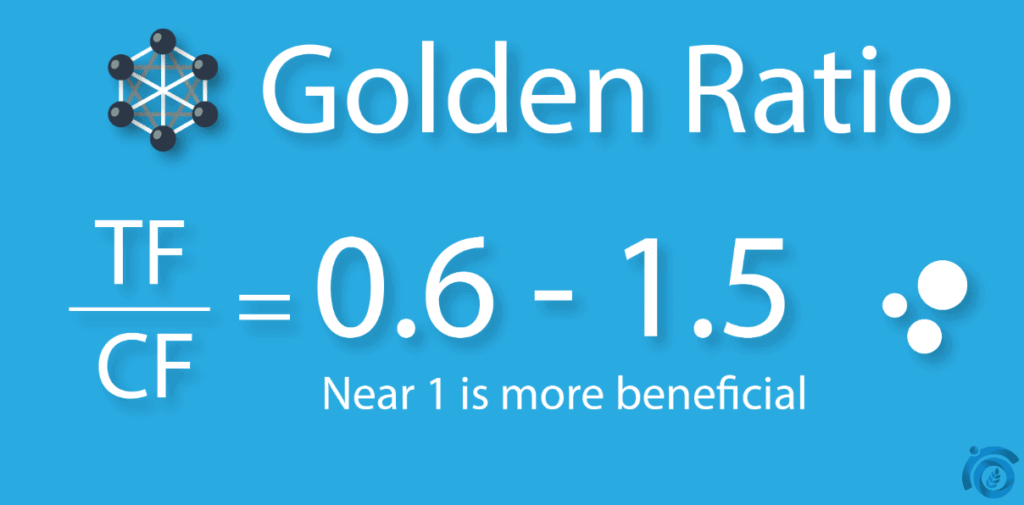

28. OPTIMIZING FOR GOLDEN RATIO: –

There are two metrics involved, both from Majestic: Trust Flow (TF) and Citation Flow (CF).

TF measures how trustworthy and authoritative the links pointing to a website are. CF measures the volume of link equity pointing to the root of a website, based on how many links point to it rather than on their quality.

Mathematically, the TF/CF ratio should be between 0.6 and 1.5.

The closer the ratio is to 1, the greater the benefit. This target is known as the golden ratio, and optimizing for it means balancing the TF and CF scores.
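The ratio check itself is simple arithmetic. The Python sketch below computes TF/CF and tests it against the 0.6 to 1.5 band described above; the scores are hypothetical, and the advice strings only say which side of the balance needs work.

```python
def golden_ratio(trust_flow, citation_flow, low=0.6, high=1.5):
    """Return the TF/CF ratio and whether it lies in the target band [low, high]."""
    if citation_flow <= 0:
        raise ValueError("Citation Flow must be positive for the ratio to be defined")
    ratio = trust_flow / citation_flow
    return ratio, low <= ratio <= high

# Hypothetical Majestic scores: (domain, Trust Flow, Citation Flow).
for domain, tf, cf in [("site-a.example", 30, 35),
                       ("site-b.example", 12, 40),
                       ("site-c.example", 45, 25)]:
    ratio, in_band = golden_ratio(tf, cf)
    if in_band:
        advice = "balanced"
    elif ratio < 1:
        advice = "work on TF"
    else:
        advice = "work on CF"
    print(f"{domain}: TF/CF = {ratio:.2f} ({advice})")
```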

TF score can be improved by the following:

● High authority Citation NAPs

● High-quality link submission

● Tiered Backlinks

● Linkback from high authority websites

● Improving the referring domains

CF score can be improved by the following:

● High DA Link Building

● Improving Referring IPs

● WEB 2.0 and WEB 3.0

● Various forms of submission

29. FRESHNESS SIGNALS: –

For optimal ranking benefit and SERP visibility, a page's freshness should be well maintained. In a correlation study conducted by ThatWare, we found that pages with fresh content, links or scripts have 63% more link juice retention capacity.

Normally, a page's link juice tends to drain away as time passes.

30. ABOVE THE FOLD OPTIMIZATION: –

The above-the-fold area of a website is very important, because it is the first point of interaction for both visitors and web crawlers.

If the first fold of a website is well optimized, it can create a good user experience and also help a lot with crawling.

Some above-the-fold optimization tips for enhancing user experience are as follows:

● Optimize Images

● Lazy loading (for images and media below the fold)

● Few or no ad snippets

● Proper minification

● CTA buttons or lead magnets

Some above the fold optimization tips for enhancing the crawling rate are as follows:

● Deferring of JS

● Asynchronous loading

● Minification of resources

● Proper caching

● Enabling gzip compression and much more

31. USING IFRAMES AND AJAX: –

Iframes and AJAX are two of the hottest topics, and many people are still confused about whether they are good for search engine optimization or not.

What is an iframe?

An iframe is an HTML element that lets you embed content from another website on your own website. With an iframe, you can show your visitors a piece of content from another website without sending them to that website.

Generally speaking, an iframe doesn't create any duplicate content issues. Furthermore, if the iframe is properly inlined, it will not create any crawling issues either.

What is AJAX?

AJAX (Asynchronous JavaScript and XML) is a technique in which JavaScript fetches data from the server asynchronously after the page has loaded and updates the page without a full reload. In other words, once the page's HTML and CSS have loaded, the JS runs and pulls in additional content.

There is a common myth that Google finds it very difficult to crawl AJAX resources, even though AJAX helps a lot with user experience. Technically speaking, Googlebot has improved a lot and can now render and index AJAX pages.

Just bear one thing in mind: AJAX pages can be crawled easily, but you need to ensure that there are no rendering issues on the page.

32. FEATURED SNIPPET OR RANK ZERO OPTIMIZATION: –

The featured snippet, popularly known as Rank Zero, is a modern concept in the semantic search industry.

What is Featured Snippet?

A featured snippet is a box at the top of the search results containing an answer to a particular query. It is known as Rank Zero because it is shown above the position 1 search result. A featured snippet is most likely to satisfy user intent by serving the right answer to the right query.

There are several ways to optimize a landing page to appear as a featured snippet. ThatWare has also created a structured markup specifically to help pages appear as featured snippets.

33. THIN CONTENT ISSUES: –

Thin content is one of the most serious threats to a website's ranking. Pages should contain at least 400-500 words of content, and preferably more than 1890 words.

If pages are found to have very little content, or scraped or copied content, the website's rankings can be severely damaged.

34. MIXED CONTENT ISSUES (HTTP AND HTTPS / WWW AND NON-WWW) : –

If your website has an SSL certificate installed, its pages might be accessible in both HTTP and HTTPS versions.

The main issue is that Google will treat the two versions as separate pages, which leads to a duplicate pages issue. (Strictly speaking, the term “mixed content” refers to an HTTPS page that loads resources such as images or scripts over HTTP.)

The same condition may also arise if a page loads in both www and non-www formats. For the best search ranking, these issues should be identified and fixed.
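Finding these duplicates amounts to mapping every protocol and host variant to one preferred form and grouping URLs that collapse together. Below is a minimal sketch, assuming HTTPS plus www is the preferred version (that choice is an assumption; pick whichever your site uses). In practice the non-preferred variants are usually consolidated with 301 redirects and canonical tags.

```python
from collections import defaultdict
from urllib.parse import urlsplit, urlunsplit


def preferred_variant(url: str, prefer_www: bool = True) -> str:
    """Map http/https and www/non-www variants of a URL to one preferred form."""
    parts = urlsplit(url)
    # .hostname is lowercased and drops any port number.
    host = (parts.hostname or "").removeprefix("www.")
    if prefer_www:
        host = "www." + host
    return urlunsplit(("https", host, parts.path or "/", parts.query, ""))


def duplicate_groups(urls):
    """Group crawled URLs that are really the same page under different variants."""
    groups = defaultdict(list)
    for url in urls:
        groups[preferred_variant(url)].append(url)
    return {key: found for key, found in groups.items() if len(found) > 1}


crawled = ["http://example.com/a", "https://www.example.com/a", "https://www.example.com/b"]
print(duplicate_groups(crawled))
```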

35. WEBSITE NAVIGATION: –

Website navigation plays an important role in search ranking. Basically, there are several types of navigation such as –

● Faceted Navigation

● Sitewide Navigation

● Breadcrumb Navigation

● Slider Navigation and much more . . .

Generally, breadcrumb navigation helps a lot not only with user experience but also helps web crawlers with faster crawling. Breadcrumbs help in reducing crawl depth.

On the other hand, faceted navigation on an e-commerce website can waste crawl budget. Faceted navigation should be handled properly, otherwise it might hamper website ranking.

36. CRAWL BUDGET AND INDEX BLOAT OPTIMIZATION: –

Optimizing your website's crawl budget is very important for its online success. Similarly, controlling index bloat is equally important for good SERP ranking. Let us first understand what these two actually mean.

What is Crawl Budget?

Crawl budget is the number of pages Google will crawl on your website each day. It is normally an average, i.e., how many pages get crawled per day on average.

Generally speaking, the crawl budget should be high, i.e., Google should crawl a large number of pages each day. Wondering why? Well, here's the reason . . .

Suppose you have an e-commerce website with around 300,000 pages and your crawl budget is 3,000 pages per day. Then only 3,000 pages will be crawled each day, which means it will take around 100 days to crawl every page of your website once.

Now suppose you update the content of any one of your pages: it remains uncertain when that page will be crawled again. So, to conclude, the better your website's crawl budget, the higher your chances of ranking on the SERP.
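The arithmetic behind the example can be written out directly. This sketch assumes a constant daily crawl budget and that each page is crawled once, ignoring recrawls of already-crawled pages; the function name is hypothetical.

```python
import math


def days_for_full_crawl(total_pages: int, pages_per_day: int) -> int:
    """Days needed to crawl every page once at a constant daily crawl budget."""
    if pages_per_day <= 0:
        raise ValueError("crawl budget must be positive")
    return math.ceil(total_pages / pages_per_day)


print(days_for_full_crawl(300_000, 3_000))  # → 100
print(days_for_full_crawl(200_000, 3_000))  # → 67, after pruning 100,000 low-value pages
```

The second call shows why reducing index bloat matters: with the same budget, fewer low-value pages means every important page gets revisited sooner.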

There are several factors which can help in improving the crawl budget, some of them are mentioned as under:

● Proper page load speed

● Search friendly URL structure

● Index Bloat Reduction

● Unique content

● Good intent specification

● Proper JS optimization and much more . . .

Now coming over to the next question –

What is an Index Bloat?

Index bloat is the condition in which Googlebot crawls and indexes large numbers of pages. It arises when low-priority pages also get indexed.

Any website might have lots of unimportant pages that shouldn't be indexed. Indexing unnecessary pages can waste crawl budget.

Here are some of the ways which can help in preventing index bloat:

● Identification of less important pages

● Blocking the less important pages from Google crawl via robots.txt

● Proper navigation optimization

● Duplicate URLs check

● Query parameters check

● Faceted URLs optimization and much more . . .
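Before deploying robots.txt rules that block low-value sections, it helps to test which URLs they actually block. The sketch below uses Python's standard `urllib.robotparser`; the rules and paths are hypothetical examples. Note that this parser does simple prefix matching and does not implement Google's `*` and `$` wildcards, and that robots.txt controls crawling: a blocked URL can still be indexed if other pages link to it, so a `noindex` directive is the usual tool for keeping a crawlable page out of the index.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules blocking internal search, cart and tag archive pages.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Disallow: /cart
Disallow: /tag/
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)
for url in [
    "https://example.com/search?q=shoes",
    "https://example.com/tag/sale",
    "https://example.com/products/red-shoes",
]:
    print(url, "allowed" if parser.can_fetch("Googlebot", url) else "blocked")
```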

37. IN-LINKING: –

In-linking is a basic yet advanced strategy that especially helps e-commerce websites rank their most important pages better in SERP.

In-linking is also known as content siloing, because its structure is very similar to a silo architecture. This strategy is all about making your Money Page, or any important landing page, rank better in Google SERP. The main principle is to link to your Money Page using important anchors from other pages within your website (also known as silo pages).

The following is the eligibility criteria for the silo pages:

● The golden ratio for the page should be good

● The page's Ahrefs URL Rating (UR) should be good

● The page should contain effective anchors which will depict the topic for the money page

● The silo page should have good value and much more . . .

38. SEARCH FRIENDLY URL STRUCTURE AND PARAMETERS: –

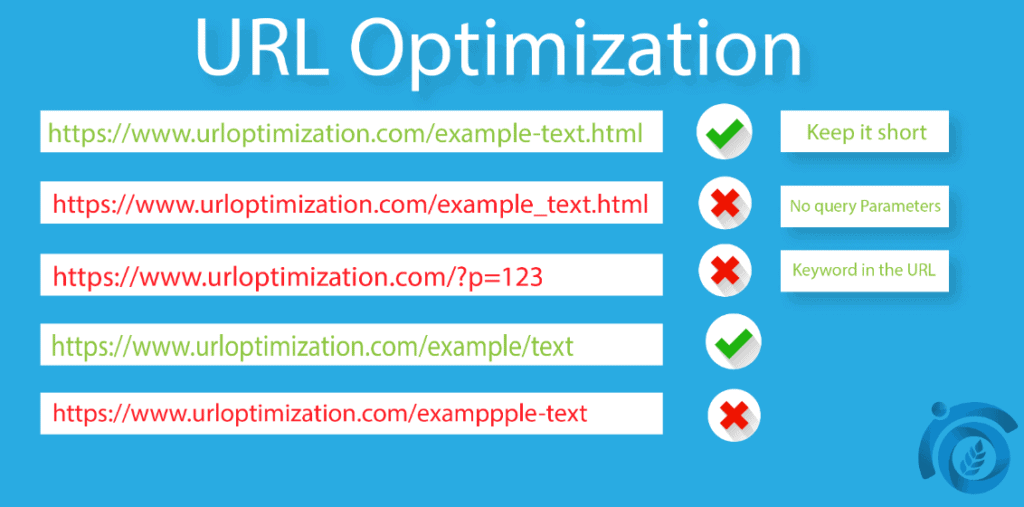

The URL carries a lot of weight as a ranking signal. For a good SEO campaign, you should ensure that your website's URLs are completely search friendly. Here are some points that will ensure the URLs are search efficient:

● URLs should be as short as possible

● URLs should be free from sub-directories as far as practical

● URLs should definitely be static in nature

● URLs should not contain any query parameters which might cause caching issues

● URLs should effectively communicate the page topic

● URLs should contain the focus keyword or semantic keyword as far as practical

● If URLs contain Google Analytics parameters such as utm_ codes, they need to be handled properly via Search Console or robots.txt

● The URLs should be cacheable at any cost
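A few of these rules can be checked automatically. The sketch below is one possible audit, not a complete one: the length and depth limits are hypothetical values, and matching the focus keyword as a hyphenated slug is an assumption about how your URLs are written.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

MAX_LENGTH = 75   # hypothetical limit for "as short as possible"
MAX_DEPTH = 3     # hypothetical limit on sub-directories


def strip_tracking_params(url: str) -> str:
    """Drop utm_* parameters (and the #fragment), keeping other query parameters."""
    parts = urlsplit(url)
    kept = [(k, v) for k, v in parse_qsl(parts.query, keep_blank_values=True)
            if not k.lower().startswith("utm_")]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


def url_issues(url: str, focus_keyword: str) -> list[str]:
    """List which of the URL guidelines above a URL breaks."""
    parts = urlsplit(url)
    issues = []
    if len(url) > MAX_LENGTH:
        issues.append("too long")
    if parts.query:
        issues.append("has query parameters")
    slug = "-".join(focus_keyword.lower().split())  # "SEO audit" -> "seo-audit"
    if slug not in parts.path.lower():
        issues.append("focus keyword missing")
    if len([s for s in parts.path.split("/") if s]) > MAX_DEPTH:
        issues.append("too many sub-directories")
    return issues


print(strip_tracking_params("https://example.com/seo-audit?utm_source=news&utm_medium=email&page=2"))
print(url_issues("https://example.com/p?id=123", "SEO audit"))
```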

39. TOPICAL TRUST FLOW, UX EXPERIENCE AND CTA: –

Topical trust flow, UX and calls-to-action (CTA) are very important when it comes to the best SEO strategies. Let us cover them one by one.

What is Topical Trust Flow?

Topical trust flow measures how relevant your website is to the topical categories of the referring domains that link to it.

In other words, topical trust flow measures the relevance of your website. The higher the topical trust flow, the better.

What is Call-to-actions (CTA)?

Calls-to-action, also known as CTAs, are buttons used on lead magnets or sales funnel pages. They encourage quick customer or visitor interaction, which can later help with lead generation or the opt-in process. In the marketing world, CTAs should be placed only in areas where visitor interaction is found to be highest.

There are several ways to optimize CTAs. Some of the most effective are listed below:

● Heat map

● A/B testing

● A/B/C testing

● Color testing

● Surveys

● Conversion rates analysis

What is UX Experience?

UX (user experience) is about designing a website to create the best user engagement. Search engines favor websites built with a good user experience for visitors in mind.

The better the UX, the better the user engagement, and better engagement generally goes hand in hand with better search rankings.

40. CACHE VERSION AND A/B TESTING: –

Google cache is very much important for an effective SEO service.

Google cache is very important for the following reasons: –

● It indicates how frequently Googlebot crawls your page. The higher the crawl rate, the better the crawl budget.

● If Google caches your page frequently, that also helps a lot with your search rankings, because faster crawling means any update to the content will be reflected on the SERP quite soon.

● Cache version of your landing page will also help in analyzing if all the contents of your page are indexed properly on Google or not.

● Proper visualization of cache version will also indicate that your landing page is free from any crawl errors.

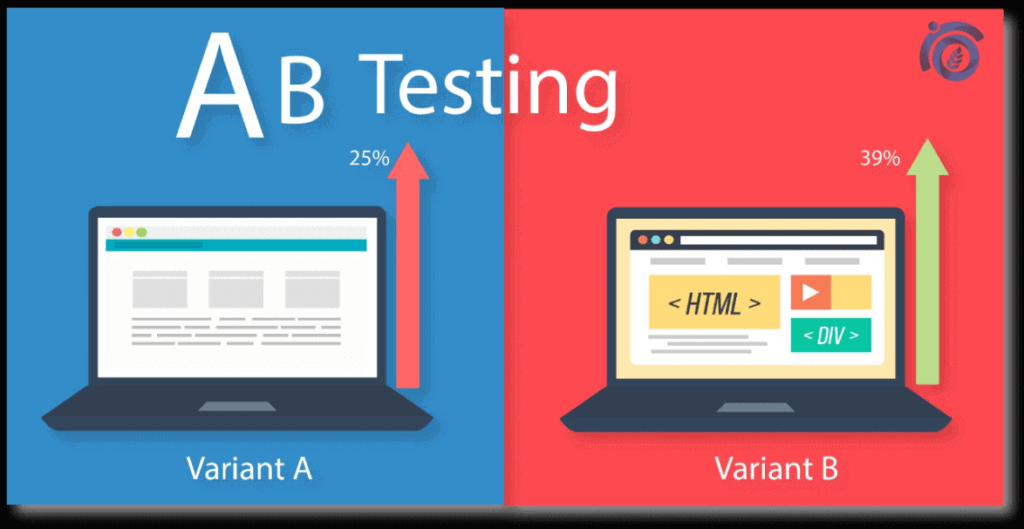

What is A/B testing?

A/B testing means testing the performance of two or more variants of your landing page. This involves splitting traffic equally across all the variants of the same landing page.

A/B testing helps with the following: –

● It helps a lot for CRO

● It helps a lot in improving user engagement

● It helps a lot in finding the best landing page variants for improving the lead generation

● It helps a lot in improving the sales funnel

● It helps a lot in improving the UX
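Splitting traffic equally usually also means a returning visitor should keep seeing the same variant. One common way to do that is hashing a visitor ID into a bucket. The sketch below assumes each visitor has a stable ID (for example from a first-party cookie); the function name and experiment name are hypothetical.

```python
import hashlib
from collections import Counter


def assign_variant(visitor_id: str, experiment: str, variants=("A", "B")) -> str:
    """Deterministically bucket a visitor: the same visitor always gets the same variant."""
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % len(variants)
    return variants[bucket]


split = Counter(assign_variant(f"visitor-{i}", "landing-page-cta") for i in range(10_000))
print(split)  # roughly 5,000 visitors per variant
```

Including the experiment name in the hash keeps assignments independent across experiments, so the same visitors are not always grouped together.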

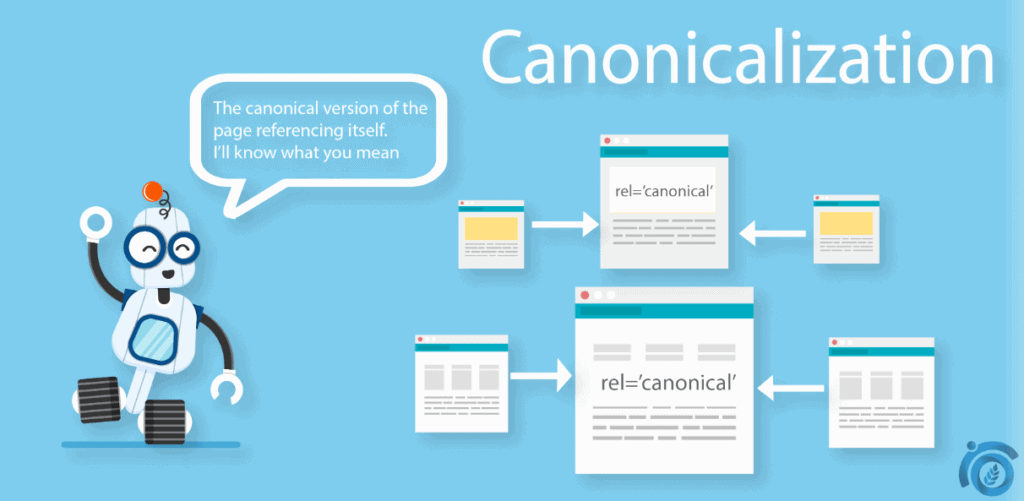

41. CANONICALIZATION: –

When it comes to website optimization, canonicalization comes in really handy.

What is Canonicalization?

Canonicalization is the practice of telling search engines which URL is the preferred version when duplicate or identical content appears on multiple URLs of the same website. Duplicate or identical content is a serious threat to a website's ranking performance.

Even www and non-www versions, HTTP and HTTPS versions, or URLs with and without trailing slashes can create duplicate issues. These issues can be fixed with a proper canonical tag.

Pagination also creates a duplicate issue which can also be overcome by canonicalization.
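When auditing canonicalization, a first step is reading which canonical URL each page declares. This sketch uses Python's standard `html.parser` to collect `<link rel="canonical">` values from a page's HTML; the class and function names are hypothetical. A page with no canonical, or with more than one, is worth a closer look.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Collects href values of <link rel="canonical"> tags."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attributes = dict(attrs)
        rel_values = (attributes.get("rel") or "").lower().split()
        if "canonical" in rel_values and attributes.get("href"):
            self.canonicals.append(attributes["href"])


def find_canonicals(html: str) -> list[str]:
    finder = CanonicalFinder()
    finder.feed(html)
    finder.close()
    return finder.canonicals


page = '<head><link rel="canonical" href="https://www.example.com/shoes/" /></head>'
print(find_canonicals(page))
```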

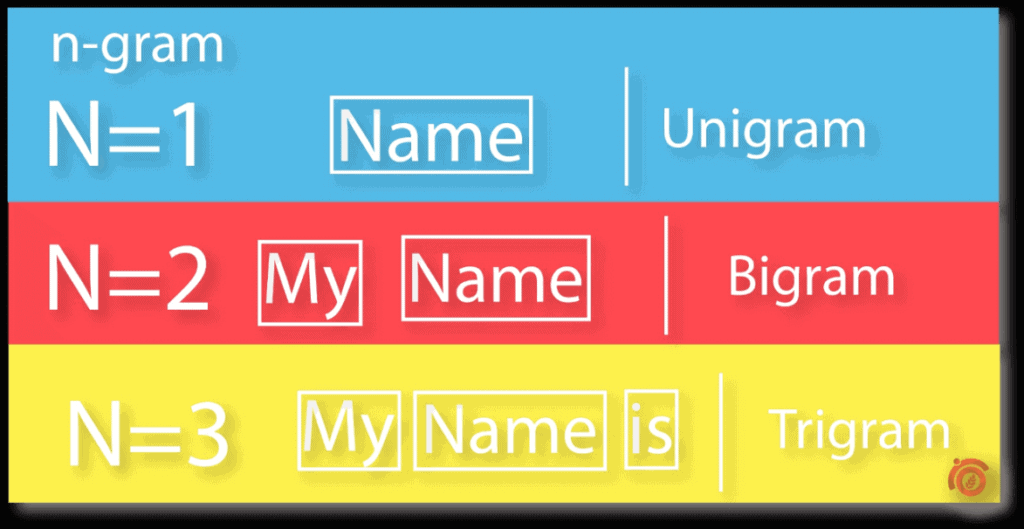

42. KEYWORD DENSITY AND N-GRAM ANALYSIS: –

Keyword density and n-gram analysis are very powerful concepts which are useful for search rankings.

What is Keyword Density?

Keyword density is the percentage of words on a landing page that are the focus keyword. The ideal range is between 1% and 1.8%.

It is calculated by the following mathematical formula:

Keyword Density = (number of occurrences of the focus keyword / total number of words on the page) × 100

In practice, the value can be between 1% and 1.8%, but the SEO provider should be careful not to over-optimize a page with an excessive keyword density. Otherwise, the page will look keyword-stuffed, and keyword stuffing can lead to a penalty and a loss of traffic and rankings.
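The formula translates directly into code. In this sketch a multi-word keyword counts as one occurrence per match, which is an assumption (some tools multiply by the keyword's word count instead), and tokenizing on letters, digits and apostrophes is a simplification.

```python
import re

WORD = re.compile(r"[a-z0-9']+")


def keyword_density(text: str, keyword: str) -> float:
    """(occurrences of the focus keyword / total words on the page) * 100."""
    words = WORD.findall(text.lower())
    target = WORD.findall(keyword.lower())
    if not words or not target:
        return 0.0
    n = len(target)
    hits = sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == target)
    return 100 * hits / len(words)


def in_target_range(density: float, low: float = 1.0, high: float = 1.8) -> bool:
    return low <= density <= high


page = "SEO audit checklist for a better SEO audit"
print(keyword_density(page, "SEO audit"))  # → 25.0, far above 1.8%: keyword stuffed
```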

What is the n-gram Analysis?

In information technology, an n-gram is a contiguous sequence of n items taken from a text or speech sample. The items can be syllables, words, letters, phonemes, base pairs, and so on.

N-gram is very useful when it comes to search and semantic search engineering. This is an advanced topic and can help a lot with proper content optimization.

A proper n-gram analysis will ensure that no index-stack pairs within close semantic proximity are stuffed or duplicated.
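Here is a minimal word-level n-gram count that flags phrases repeated too often. The `max_count` threshold is a hypothetical stand-in for "stuffed"; what counts as excessive depends on the length of the content.

```python
import re
from collections import Counter


def ngrams(tokens, n):
    """All contiguous sequences of n tokens."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def overused_ngrams(text, n=2, max_count=2):
    """Hypothetical stuffing check: n-grams that occur more than max_count times."""
    tokens = re.findall(r"[a-z0-9']+", text.lower())
    counts = Counter(ngrams(tokens, n))
    return {gram: count for gram, count in counts.items() if count > max_count}


text = "best seo tools best seo tools best seo tools for you"
print(overused_ngrams(text))
```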

43. MOBILE FIRST INDEXING: –

This is one of the hot topics these days. In fact, one survey confirms that the majority of websites were affected by this rollout.

What is Mobile First Indexing?

Mobile-first indexing is an indexing policy introduced by Google under which Google predominantly uses the mobile version of a page for indexing and ranking. In other words, the mobile version is crawled and indexed first.

With that being said, mobile versions now matter more than desktop versions. To ensure hassle-free mobile-first indexing, follow the steps below:

● Make sure you have no mobile usability issues

● Make sure none of the resources needed to load the mobile version are blocked by robots.txt

● Make sure your website is 100% responsive and has a proper viewport assigned

● Make sure mobile version canonicalization is working properly

● Make sure the mobile versions of your landing pages have no crawling issues

● Make sure your mobile versions are getting properly fetched and rendered

● Make sure AMP versions work without any errors

● Make sure mobile landing pages are properly included in the XML sitemap without any errors

44. GEO-TARGET (LATITUDINAL TARGETING) :-

If you own a local store and want to market it locally, geo-targeting comes in really handy.

Geo-targeting, or latitudinal targeting, can help make your brand exposure stronger. Geo-targeting can be done in two ways:

● Geo Metadata

● Latitudinal Structured Data (also known as GeoCoordinates)

For instance, below is the geo metadata for our website thatware.co

```html
<meta name="geo.region" content="IN-WB" />
<meta name="geo.placename" content="Howrah" />
<meta name="geo.position" content="22.590288;88.359403" />
<meta name="ICBM" content="22.590288, 88.359403" />
```

Below is the syntax for latitudinal structured data

```html
<script type="application/ld+json">
{
  "@context": "http://schema.org",
  "@type": "Place",
  "geo": {
    "@type": "GeoCoordinates",
    "latitude": "40.75",
    "longitude": "-73.98"
  },
  "name": "Empire State Building"
}
</script>
```
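Hand-typed JSON-LD is easy to break. Curly “smart” quotes make it invalid JSON, and a missing minus sign moves a western longitude to the other side of the globe (the Empire State Building is at roughly 40.75° N, 73.98° W, i.e. longitude -73.98). A small generator avoids both problems. This sketch is one way to do it; the function name is hypothetical, and it emits the coordinates as numbers, which schema.org accepts alongside text.

```python
import json


def place_jsonld(name: str, latitude: float, longitude: float) -> str:
    """Build a Place/GeoCoordinates JSON-LD script tag with straight ASCII quotes."""
    data = {
        "@context": "http://schema.org",
        "@type": "Place",
        "geo": {"@type": "GeoCoordinates", "latitude": latitude, "longitude": longitude},
        "name": name,
    }
    return '<script type="application/ld+json">\n' + json.dumps(data, indent=2) + "\n</script>"


print(place_jsonld("Empire State Building", 40.75, -73.98))
```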

45. DWELL TIME, BOUNCE RATE AND INFINITE SCROLL: –

Dwell time, bounce rate and infinite scroll are three important analytical KPIs.

What is Dwell Time?

Dwell time is the total amount of time visitors spend on your website before leaving. Dwell time is also a direct measure of website engagement. The longer the dwell time, the better it is for search rankings.

What is Bounce Rate?

Bounce rate is the percentage of visitors who leave after viewing only the current page, without visiting any other page on the website. The lower the bounce rate, the better it is for search rankings.

What is Infinite Scrolling?

Infinite scrolling means making a landing page scroll without any end or limit, loading more content as the visitor scrolls. Infinite scrolling can help increase dwell time and decrease bounce rate, and therefore it can help a lot with search rankings.
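Both KPIs are simple aggregates over session data. The sketch below follows the definitions used in this article; the `Session` record is a hypothetical shape for your analytics export, and real analytics tools compute these metrics in their own ways.

```python
from dataclasses import dataclass


@dataclass
class Session:
    pages_viewed: int
    seconds_on_site: float


def bounce_rate(sessions: list[Session]) -> float:
    """Percentage of sessions that viewed only one page."""
    if not sessions:
        return 0.0
    bounces = sum(1 for s in sessions if s.pages_viewed == 1)
    return 100 * bounces / len(sessions)


def average_dwell_time(sessions: list[Session]) -> float:
    """Mean time on site per session, in seconds."""
    if not sessions:
        return 0.0
    return sum(s.seconds_on_site for s in sessions) / len(sessions)


sessions = [Session(1, 10), Session(3, 120), Session(1, 5), Session(2, 65)]
print(bounce_rate(sessions), average_dwell_time(sessions))  # → 50.0 50.0
```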

46. PAGERANK SCULPTING, LINK JUICE, AND EXTERNAL LINK OPTIMIZATION: –

Link juice is simply the value or worthiness passed through a link.

Logically speaking, a page's link juice is divided in equal proportion among all the pages it links to.

SEO consultants used to add nofollow tags to some links to stop link juice draining away through them, a practice known as PageRank sculpting. Generally speaking, people played around with the nofollow tag to control the flow of link juice, even on pages with hundreds of links.

Google later changed how nofollow links are treated, which ended the benefit of PageRank sculpting: even if you assign nofollow tags, the link juice will not be preserved but will simply evaporate. For external link optimization, make sure your landing page has at least 3 links to authoritative external sites, with relevant anchors focused on your topic.
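The "evaporation" effect can be seen in a toy version of the textbook PageRank iteration. This is a simplified model, not Google's actual algorithm: a nofollow link still counts when a page's rank is divided among its links, but its share is passed to nobody. The damping factor of 0.85 and the three-page site are assumptions for illustration.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Textbook PageRank over a small link graph.

    links maps each page to a list of (target, is_nofollow) pairs.
    """
    pages = set(links)
    for outlinks in links.values():
        pages.update(target for target, _ in outlinks)
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}
    for _ in range(iterations):
        new_rank = {page: (1 - damping) / n for page in pages}
        for page in pages:
            outlinks = links.get(page, [])
            if not outlinks:
                # Dangling page: spread its rank evenly over every page.
                for other in pages:
                    new_rank[other] += damping * rank[page] / n
                continue
            share = damping * rank[page] / len(outlinks)
            for target, is_nofollow in outlinks:
                if not is_nofollow:
                    new_rank[target] += share  # nofollow shares simply evaporate
        rank = new_rank
    return rank


site = {
    "home": [("about", False), ("login", True)],
    "about": [("home", False)],
    "login": [("home", False)],
}
ranks = pagerank(site)
print({page: round(score, 3) for page, score in sorted(ranks.items())})
print("total:", round(sum(ranks.values()), 3))  # below 1.0: the nofollow share evaporated
```

The nofollowed "login" link does not send more rank to "about"; half of the home page's share is simply lost.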

47. AMP: –

Since Google started showing AMP pages in its mobile search results, AMP has become one of the hottest topics all around the world.

What is AMP?

AMP stands for Accelerated Mobile Pages. An AMP page is a lightweight version of your HTML page that Google can serve from its AMP cache for superfast loading in Google mobile SERP.

AMP works on the principle of serving cached copies of your pages' HTML. The JS and CSS used on an AMP page are quite different from those of the regular page, since AMP restricts custom JavaScript and limits CSS. If you build an AMP page, here are some things to bear in mind:

● AMP versions should have proper canonicalization set up pointing to the desktop (master) version of the page

● AMP versions should be free from any rendering and indexing issues

● AMP versions should have the metadata set up properly

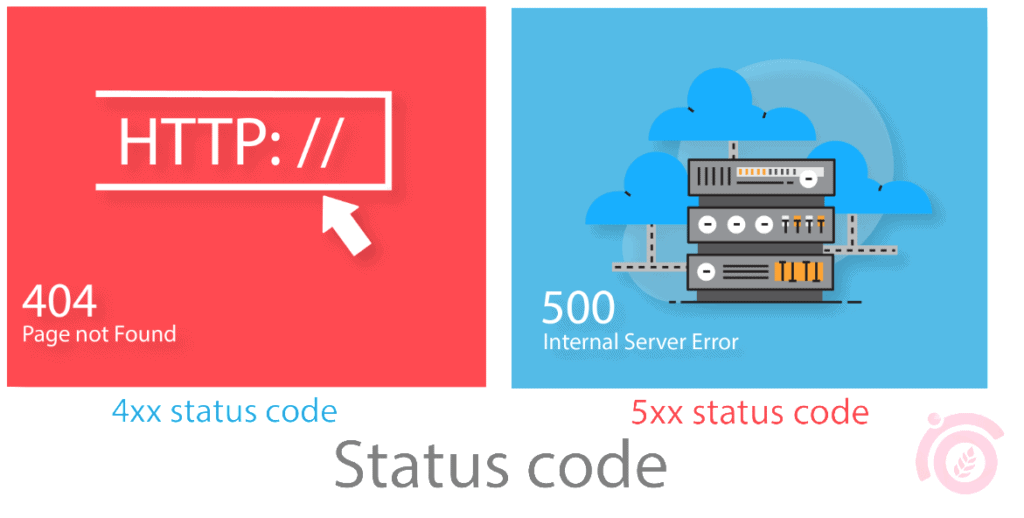

48. PLAYING WITH 4XX AND 5XX ERRORS: –

4xx and 5xx errors are a serious threat to search engine optimization. These errors should be immediately fixed.

What are 4xx errors?

4xx errors are client errors, the best known being 404 “page not found”. They come in several types, such as 401, 402, 403, 404 and so on. 4xx errors can cause a lot of crawling problems. They can be fixed with a relevant redirect or by fixing the page source.

What are 5xx errors?

5xx errors are server-side errors. They are usually caused by server misconfigurations or other server-related issues. 5xx errors can be diagnosed by checking the server's access and error logs.
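Server access logs show exactly which URLs return errors, and to whom (including Googlebot). This sketch counts 4xx and 5xx responses per URL, assuming the common or combined log format used by default in Apache and Nginx; the sample lines are made up for illustration.

```python
import re
from collections import Counter

# Matches the quoted request line and status code of the common/combined log formats.
REQUEST = re.compile(r'"[A-Z]+ (?P<path>\S+)[^"]*" (?P<status>\d{3})\b')


def error_summary(log_lines):
    """Count 4xx and 5xx responses per (status, path)."""
    errors = Counter()
    for line in log_lines:
        match = REQUEST.search(line)
        if match is None:
            continue
        status = int(match.group("status"))
        if 400 <= status <= 599:
            errors[(status, match.group("path"))] += 1
    return errors


sample = [
    '66.249.66.1 - - [10/Oct/2023:13:55:36 +0000] "GET /old-page HTTP/1.1" 404 512 "-" "Googlebot/2.1"',
    '66.249.66.1 - - [10/Oct/2023:13:55:40 +0000] "GET /old-page HTTP/1.1" 404 512 "-" "Googlebot/2.1"',
    '203.0.113.9 - - [10/Oct/2023:13:56:02 +0000] "GET / HTTP/1.1" 200 10240 "-" "Mozilla/5.0"',
    '203.0.113.9 - - [10/Oct/2023:13:56:05 +0000] "POST /api/cart HTTP/1.1" 503 0 "-" "Mozilla/5.0"',
]
for (status, path), count in error_summary(sample).most_common():
    print(status, path, count)
```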

49. FACTORS AFFECTING OVERALL LOADING TIME: –

The overall loading time of a website can be affected by multiple factors. These factors are described below:

1. DNS fetch time – the amount of time (in milliseconds) it takes for the domain lookup to complete when a browser retrieves a page.

2. Initial connection – the total time (in milliseconds) the browser spends establishing a connection, i.e., completing the handshake, with the server.

3. SSL – the time spent on the SSL/TLS handshake required for an HTTPS connection, i.e., the time it takes to set up the encrypted connection.

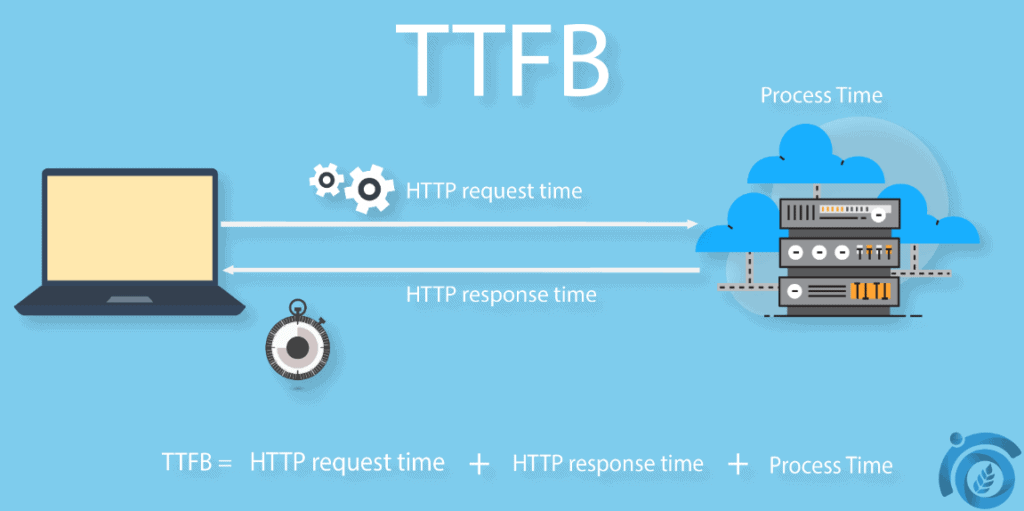

4. Time to first byte (TTFB) – the time period from the initial connection to the arrival of the first byte of the page.

5. Page weight (HTTP Archive) / HTTP requests – the total size of the page and the number of HTTP requests the browser must make to the server to load it.

6. DOM content loaded – the point at which the HTML has been fully parsed and the Document Object Model (DOM) has been built.

7. Time to last byte / Page load – the time until the last byte arrives, i.e., until the entire page has finished loading.

8. Critical rendering path – the sequence of steps the browser takes to turn a page's HTML, CSS and JS into rendered pixels on screen.

9. Waterfall – a chart of all the resources loaded for the page, showing when each one loads. It is recommended to keep the total size of all resources in the waterfall within 1 MB.

10. RTT (round-trip time) – the time it takes for a request sent from the browser to reach the server and for the response to return.
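Most of these phases can be derived from the timestamps a browser records for each page load. In a browser they are available from `performance.getEntriesByType("navigation")`; the sketch below assumes you have exported one such entry as a dictionary keyed by the W3C Navigation Timing field names. The sample values are made up, and TTFB is computed here as `responseStart - requestStart`, which is one of several definitions in use.

```python
def load_phases(t: dict) -> dict:
    """Break one page load into phases, in milliseconds."""
    phases = {
        "dns": t["domainLookupEnd"] - t["domainLookupStart"],
        # connectEnd includes the TLS handshake on HTTPS pages.
        "initial_connection": t["connectEnd"] - t["connectStart"],
        "ttfb": t["responseStart"] - t["requestStart"],
        "last_byte": t["responseEnd"] - t["startTime"],
        "dom_content_loaded": t["domContentLoadedEventEnd"] - t["startTime"],
        "page_load": t["loadEventEnd"] - t["startTime"],
    }
    if t.get("secureConnectionStart"):  # 0 when no TLS handshake happened
        phases["ssl"] = t["connectEnd"] - t["secureConnectionStart"]
    return phases


timing = {
    "startTime": 0, "domainLookupStart": 5, "domainLookupEnd": 25,
    "connectStart": 25, "secureConnectionStart": 45, "connectEnd": 85,
    "requestStart": 86, "responseStart": 236, "responseEnd": 300,
    "domContentLoadedEventEnd": 900, "loadEventEnd": 1500,
}
print(load_phases(timing))
```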

50. BRAND SIGNALS: –

Brand signals are very important in search engine optimization. Furthermore, SEO agencies in London and other big agencies now strongly focus on improving brand signals and overall branding.

Brand signals can be improved in a number of ways, some of which are listed below:

● Using branded anchors

● Using brand syndication

● Building brand awareness

● Focusing on branded off-site optimization and much more . . .

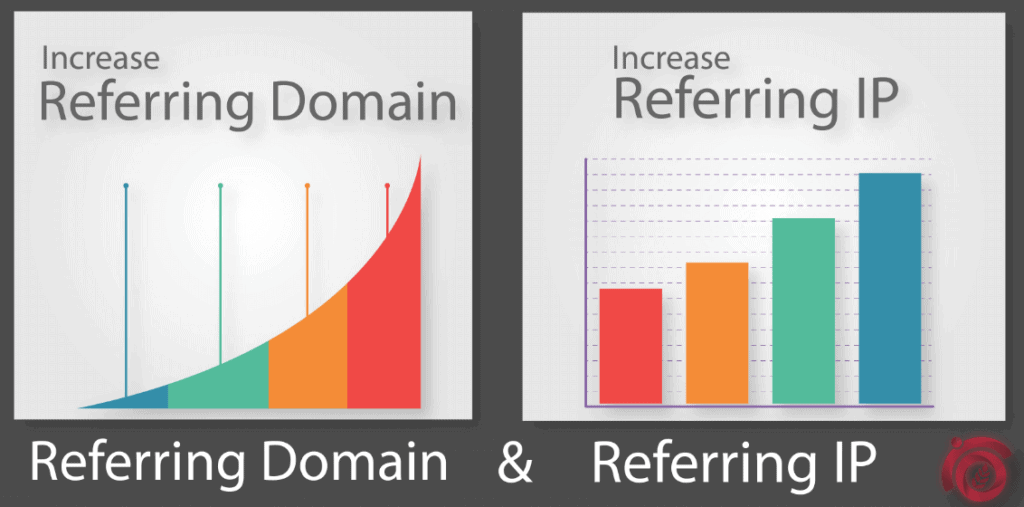

51. REFERRING DOMAINS, REFERRING IP AND SUBNET C – CLASS: –

When it comes to off-page KPIs, factors like referring domains, referring IPs and Class C subnets are very important.

What are Referring Domains?

Referring domains are the number of unique domains from which one website successfully acquires a backlink. If multiple backlinks are acquired from the same domain then it won’t get counted as a new referring domain.

What are Referring IPs?

Referring IPs are the number of unique IPs from which one website successfully acquires a backlink. If multiple backlinks are acquired from different domains hosted on the same IP, the referring domain count increases but the referring IP count stays the same.

What is the Subnet C – Class?

A Class C subnet refers to the first three octets of the IPv4 address of the host where a linking website's server is located. The hosting can be shared, virtual, private or dedicated.

Referring domains and referring IPs are considered two strong KPIs and can act as major ranking factors.
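All three counts can be computed from a backlink export that lists each linking URL with its server IP. In this sketch the input shape and sample data are hypothetical, a "Class C subnet" is taken to be the /24 block (first three octets) of an IPv4 address, and domains are compared by hostname with any leading `www.` removed. A production tool would group subdomains by registrable domain using the Public Suffix List.

```python
import ipaddress
from urllib.parse import urlsplit


def referring_stats(backlinks):
    """backlinks: iterable of (source_url, source_ip) pairs from a backlink export."""
    domains, ips, subnets = set(), set(), set()
    for url, ip in backlinks:
        domains.add((urlsplit(url).hostname or "").removeprefix("www."))
        address = ipaddress.ip_address(ip)
        ips.add(address)
        if address.version == 4:
            # Class C subnet = the /24 block, i.e. the first three octets.
            subnets.add(ipaddress.ip_network(f"{address}/24", strict=False))
    return {
        "referring_domains": len(domains),
        "referring_ips": len(ips),
        "class_c_subnets": len(subnets),
    }


backlinks = [
    ("https://blog-a.com/post-1", "203.0.113.10"),
    ("https://blog-a.com/post-2", "203.0.113.10"),
    ("https://www.news-b.com/story", "203.0.113.25"),
    ("https://site-c.org/page", "198.51.100.7"),
]
print(referring_stats(backlinks))
```

Four backlinks here come from three domains on three IPs, but only two Class C subnets, because blog-a.com and news-b.com share the 203.0.113.x block.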

52. ANCHOR TEXT OPTIMISATION AND CO-CITATION : –

Anchor text optimization and co-citation are two very important practices which can help with proper content optimization.

What is Anchor Text Optimization?

Anchor text optimization is the practice of optimizing the anchors within a page. The anchors should clearly communicate the topic of the page. There are several types of anchors, some of which are listed below:

● Branded anchors

● Phrase match anchors

● Exact match anchors

● LSI anchors

● 2nd Tier LSI anchors

● Semantic anchors

● Naked anchors

● Partial match anchors

● Long tail anchors

Always remember that proper anchor text helps Google clearly understand what your page is all about and can help a lot with search engine rankings.

What is Co-citation?

Co-citation is the practice of using a long tail keyword or a semantic keyword as an anchor with phrase match or LSI keywords sprinkled around it.

53. ADVANCED LINK BUILDING: –

Traditional link building practices involve different types of off-page submissions such as:

● Bookmarking

● Citations

● Classifieds

● Business Listings

● Podcasts

● Social Submissions

● PPT and PDF submissions

● Image and Info-graphic submissions

● WEB 2.0 and much more . . .

But advanced link building involves many different types of strategies combined with different types of submissions. These strategies help improve link juice and referring domains and also help with solid search rankings. Some of the advanced link building practices are listed below:

● RDP (referring domain into PBN)

● Set-p Model

● Tier 2 Set-p Model

● Web 3.0

● RDP 2.0

● Link Wheel

● KNN Clustering

● Off-page Silo and much more . . .

54. CONVERSION RATE OPTIMISATION (CRO): –

CRO is an important aspect of digital marketing and is definitely one of the hottest requirements for any marketer in this world.

What is Conversion Rate Optimisation (CRO)?

Conversion rate optimization is the practice of optimizing the sales funnel and lead magnets. CRO helps with revenue and lead acquisition, and its implementation is also likely to improve conversion rates. There are several conversion rate optimization tips, some of which are listed below:

● Better utilization of exit-intent technology

● A proper study of heat maps

● Proper analysis of scroll-depth

● Proper analysis of user behavior and user interaction points

● Engagement hike analysis

● Writing metadata copy that converts

● Proper color utilization

● Proper orientation of lead magnets

● Proper first fold optimization

● Shopping cart optimization and much more . . .

55. PENALTY ANALYSIS AND DIAGNOSING TRAFFIC DROPS: –

This is a very important area of SEO, in which traffic drops are analyzed against the record of Google updates to identify minor algorithm changes or brewing updates.

Google makes over 500 algorithm changes a year. Most are small, but occasionally a few big core changes also occur.

56. DYNAMIC QUERIES AND INTENT SPECIFICATION USING GOOGLE TAG MANAGER: –

You can use the itemscope attribute and JSON-LD for proper intent specification through dynamic queries or automated, intent-satisfying Q&A.

This setup is mostly static, but it can be automated with Google Tag Manager. With Google Tag Manager, a single piece of intent-specifying code can be triggered dynamically on other landing pages.

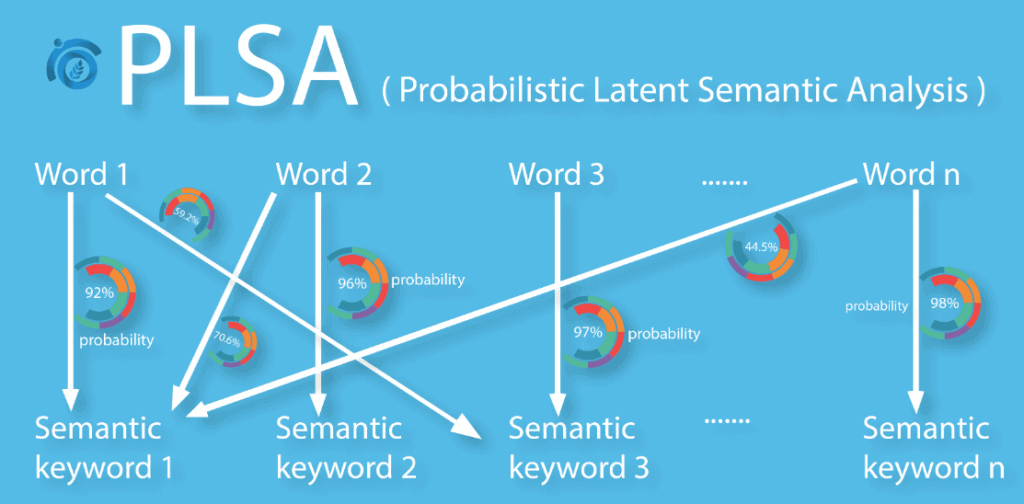

57. PROBABILISTIC LATENT SEMANTIC ANALYSIS (LSA): –

Probabilistic LSA is a concept of semantic search engineering that deals with the concepts behind the content. Probabilistic LSA analyzes the overall subject of a piece of content and breaks it down into several topics that effectively communicate what the content is about. This, in turn, helps with proper intent specification.

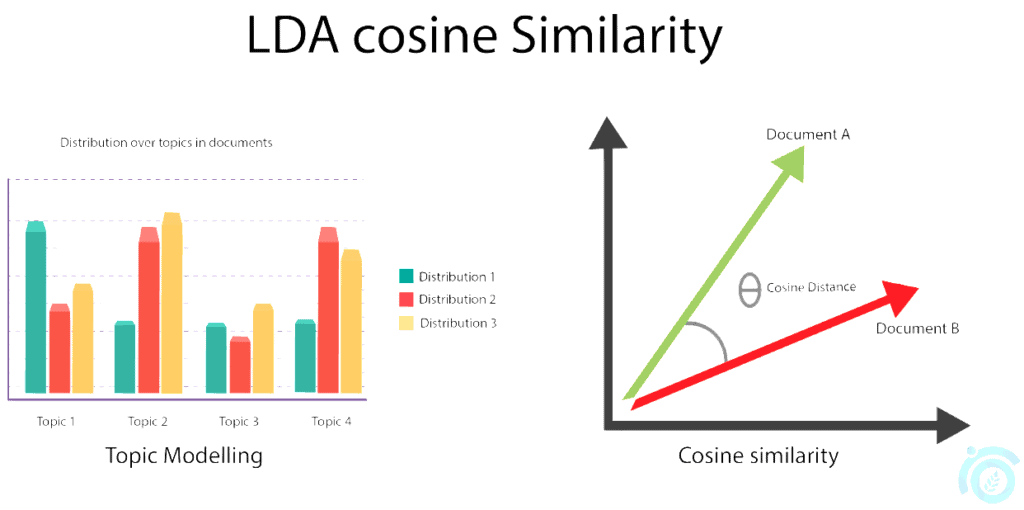

58. LDACOSINE OPTIMISATION: –

LDAcosine is one of the most important factors in topic modeling and vector space modeling, and an integral part of natural language processing and information retrieval. Cosine similarity helps a lot with search engine optimization and with ranking for highly competitive keywords in Google search. Cosine similarity works on the principle of topic similarity, while LDA, which stands for Latent Dirichlet Allocation, works on topic relevance. Together, LDACosine gives a single value when the search term you are optimizing for is compared against a document or content set. The higher the value, the better it is for search engine rankings. In most cases, you can also compare the value for the search term against your competitors' landing pages.
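The cosine part of this comparison can be sketched with plain term-frequency vectors. This is only the cosine half: a topic model such as LDA would replace the raw word counts with topic distributions before comparing them, and that step is not shown. The function names and sample texts are hypothetical.

```python
import math
import re
from collections import Counter


def term_vector(text: str) -> Counter:
    """Bag-of-words term counts."""
    return Counter(re.findall(r"[a-z0-9']+", text.lower()))


def cosine_similarity(a: str, b: str) -> float:
    """Cosine of the angle between the term-count vectors of two texts (0 to 1)."""
    va, vb = term_vector(a), term_vector(b)
    dot = sum(va[t] * vb[t] for t in va.keys() & vb.keys())
    norm = math.sqrt(sum(c * c for c in va.values())) * math.sqrt(sum(c * c for c in vb.values()))
    return dot / norm if norm else 0.0


query = "technical seo audit"
print(round(cosine_similarity(query, "our technical seo audit service"), 3))  # → 0.775
print(cosine_similarity(query, "easy cooking recipes"))                      # → 0.0
```

Running the same query against your page and a competitor's page gives two comparable scores.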

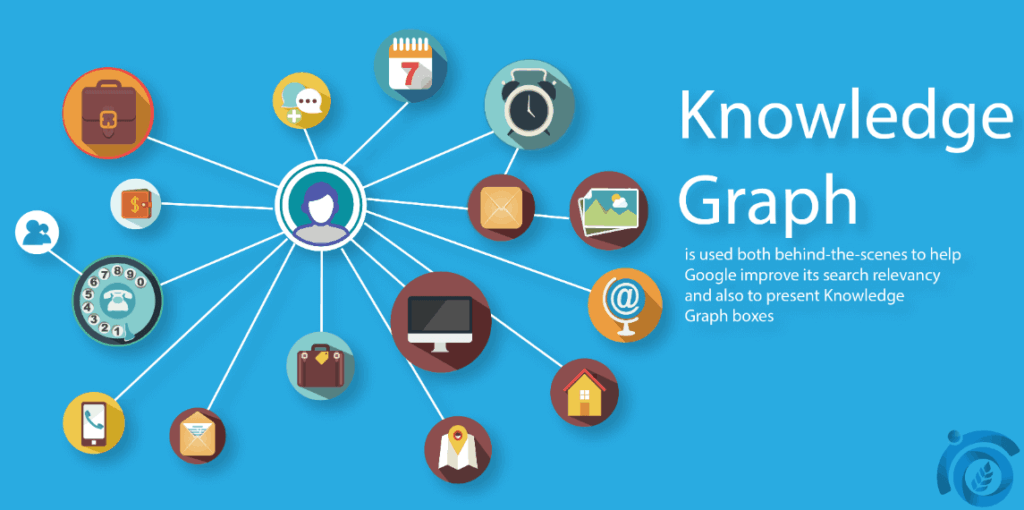

59. KNOWLEDGE GRAPH PANEL (KGP) AND ENTITY SEARCH OPTIMISATION: –

In the era of semantic search engineering, the two factors namely knowledge graph and entity search matching hold high value in search rankings.

You can optimize for the knowledge graph by using the right sets of structured data, preferably in JSON-LD. Entity search matching can be performed by using relational values and matching the relationship variables with the entity itself.

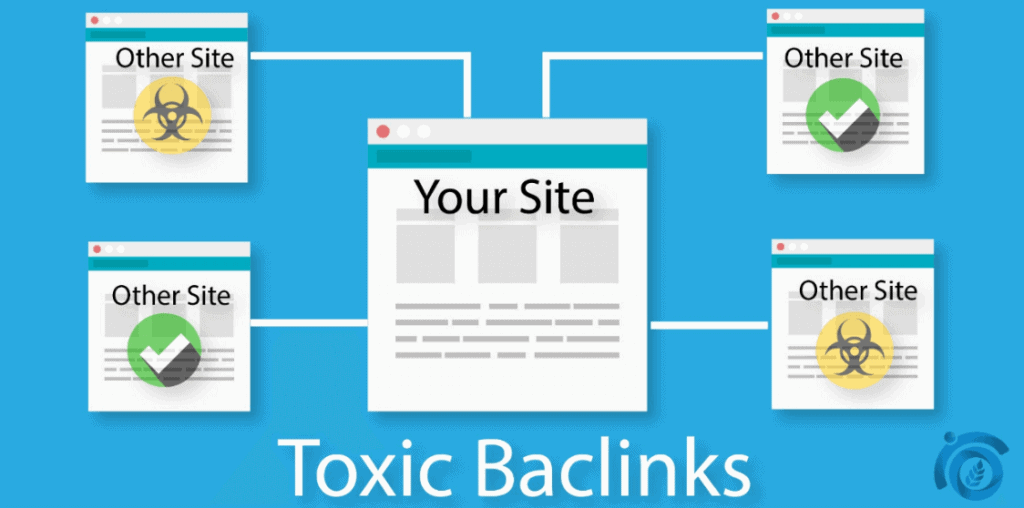

60. TOXIC BACKLINKS DISAVOW: –

Backlinks are an important factor for proper search rankings. But bad or toxic backlinks can harm search rankings. Toxic backlinks can even be harmful and might result in a severe penalty.

You will know a backlink is toxic if it fulfills the following criteria:

● If the backlink has a high spam score

● If the backlink has a poor referring domain and referring IP value

● If the backlink has zero or low topical trust flow

● If the backlink is lost

● If the backlink ends up in redirect chains

● If the backlink has zero or extremely low TF and CF scores

● If the backlink has many incoming links from toxic sites

● If the backlink does not come from a secure server or IP

It is advisable to check the backlink profile against the above criteria every 20 days. Once you have a list of all the toxic backlinks, you can simply disavow them from Search Console. Make sure to keep the list in .txt format.
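Google's disavow file is a plain .txt file with one entry per line: `domain:` followed by a domain to disavow every link from that site, a full URL to disavow a single page, and lines starting with `#` as comments. The sketch below applies two of the criteria above and writes that format. The `Backlink` fields, the 0-100 spam score scale and both thresholds are hypothetical and depend on your backlink tool.

```python
from dataclasses import dataclass


@dataclass
class Backlink:
    domain: str
    spam_score: int      # 0-100, as reported by your backlink tool (assumed scale)
    trust_flow: int
    citation_flow: int


def is_toxic(link: Backlink, max_spam: int = 60, min_flow: int = 5) -> bool:
    """Hypothetical thresholds: high spam score, or near-zero TF and CF."""
    return link.spam_score > max_spam or (
        link.trust_flow < min_flow and link.citation_flow < min_flow)


def build_disavow(domains, urls=(), comment=None) -> str:
    """Disavow-file text: '#' comment, then 'domain:' entries, then individual URLs."""
    lines = [f"# {comment}"] if comment else []
    lines += [f"domain:{d}" for d in sorted({d.strip().lower() for d in domains})]
    lines += sorted(set(urls))
    return "\n".join(lines) + "\n"


links = [
    Backlink("Spam-Links.example", 85, 2, 30),
    Backlink("good-blog.example", 4, 35, 40),
    Backlink("dead-dir.example", 10, 0, 1),
]
toxic = [link.domain for link in links if is_toxic(link)]
content = build_disavow(toxic, ["https://mixed.example/paid-links.html"], comment="toxic links from audit")
print(content)  # save this text as disavow.txt before uploading
```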

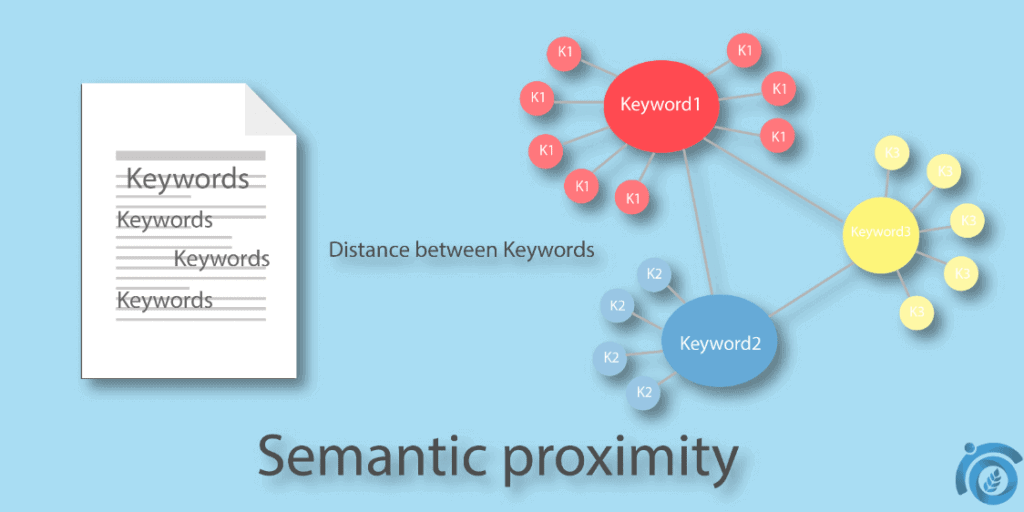

61. SEMANTIC PROXIMITY: –

Best seo companies will definitely make sure that your landing page is properly optimized for semantic proximity.

What is Semantic Proximity?

Semantic proximity refers to the practice of using semantic keywords and long tail keywords in close proximity to each other. In most cases, it refers to using the keywords with a balanced gap or distance between them.

Semantic proximity will also help in proper n-gram optimization and it makes sure that the n-level is not overstuffed.

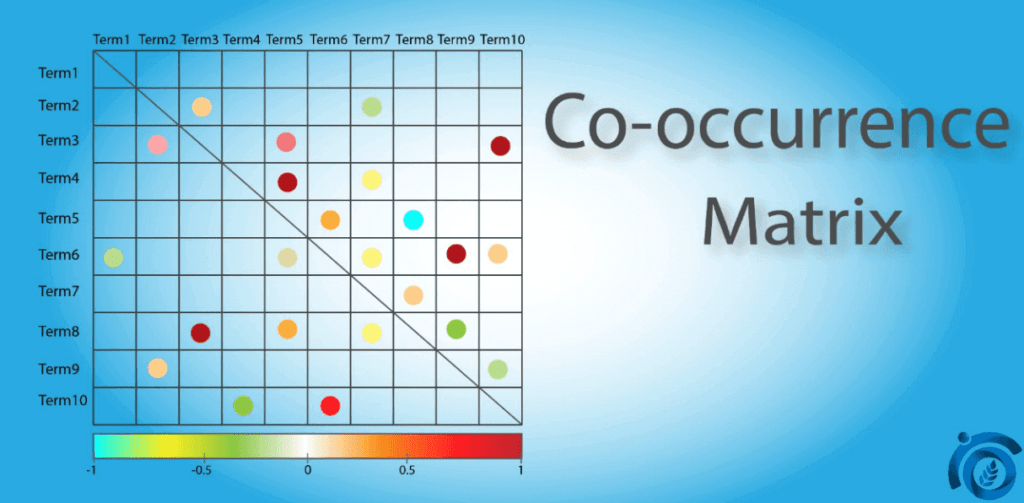

62. CO-OCCURRENCE: –

Co-occurrence is a phrase match concept which helps a lot with the intent specification and entity match specification.

What is Co-occurrence in SEO?

In search engine optimization, co-occurrence refers to the act of using phrase match keywords within a particular content for optimizing a particular topic. It also helps a lot with entity search matching. Co-occurrence finds huge importance in semantic search as it helps in an effective intent specification.

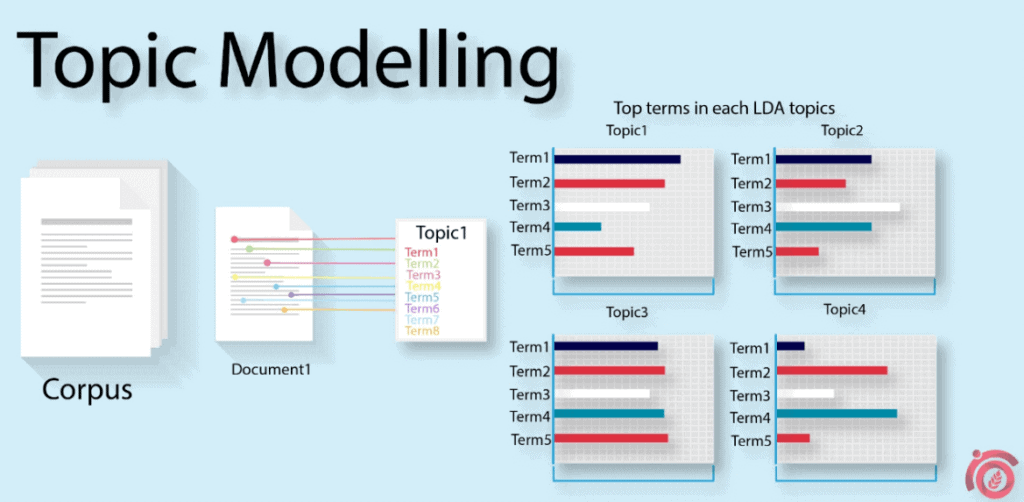

63. TOPIC MODELING: –

Topic modeling is an integrated part of information retrieval and finds its core principle algorithm based on data mining and text mining.

What is Topic Modeling?

Topic modeling is a form of vector space modeling whose main working principle comes from text mining and data mining. It helps in specifying the intent behind a piece of content.

Topic modeling is essential for search rankings because it helps with RankBrain optimization. It helps in finding the contextual meaning behind a text or content.

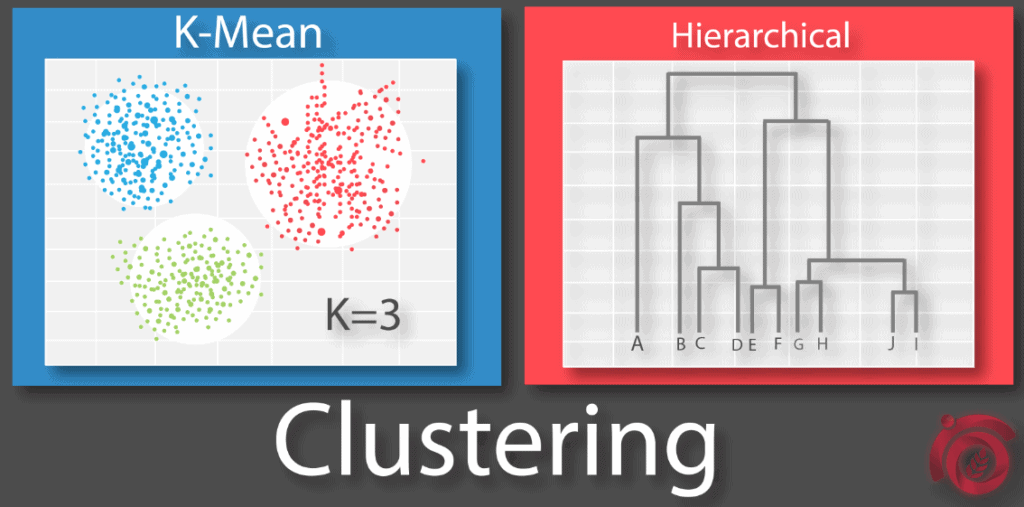

64. CLUSTERING IN SEO: –

Clustering is an important part of information retrieval and is totally based on AI. It can be used to enhance SEO.

Basically, there are many types of clustering such as KNN clustering, hierarchical clustering, flat clustering and much more.

The main objectives of clustering are mentioned as below: –

● Hierarchical clustering allows one to group all the similar tags together in a single cluster. It can be viewed from a dendrogram later and can be utilized for proper analysis of the tags which can better help with website rankings.

● KNN clustering allows one to group all the nearest neighbors within a document set. The grouping can also be performed based on term frequency or cosine similarity. It can later be utilized for content optimization such as co-occurrence and co-citation.

65. SUPPLEMENTAL INDEX: –

Google maintains two versions of its index: the main index and the supplemental index.

The main index holds the pages that are shown in SERP and counted towards organic visibility. The supplemental index, on the other hand, holds pages that Google has on record but considers to be of poor quality, so they rarely appear in search results. Supplemental index data can help you optimize such pages so that they make it into the main Google index.

66. POGO STICKING: –

Pogo sticking refers to a page jumping up and down in organic position because the same keywords rank on different landing page URLs. It works like this: suppose you have a landing page that ranks for a specific keyword, and a different landing page from the same domain also ranks for the same keyword. As per the RankBrain algorithm, if one page has a better CTR than the other, it will outrank the other page. This creates a conflict between the pages for the keyword. This circumstance is known as pogo sticking; it is also commonly called keyword cannibalization, while the term pogo sticking is more often used for searchers who click a result, quickly return to the SERP and click another one.

67. PODCAST: –

Podcasts have been generating a lot of buzz lately, and many top 10 SEO companies are already using them.

A podcast can really help boost SERP visibility, as it can act as a ranking signal and also helps a lot with EAT. Podcasts improve the trust and authority of your website, which in turn helps improve your branding and credibility.

68. GOING BEYOND GOOGLE SEARCH: –

Since this is the era of AI and smartphones, one now needs to think beyond Google search. Augmented reality is reshaping the internet, and because of this, websites now need to be optimized for multiple platforms such as apps, online business portals, virtual reality frameworks, augmented query devices, and much more.

69. MAPPING: –

Mapping, or keyword mapping, is a basic on-page optimization practice. This step ensures that each correct search term or keyword is mapped to the proper landing page URL.

Keyword mapping also helps with proper metadata optimization and helps improve the sales funnel ratio while maintaining a good user experience.
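A keyword map is often kept as a simple table of keyword to landing page URL. The minimal Python sketch below assumes a hypothetical list of `(keyword, url)` assignments and checks the property described above: every target keyword should point to exactly one landing page, so it flags keywords mapped to several URLs and keywords with no URL at all. The keywords, URLs and the `audit_keyword_map` name are illustrative, not taken from any specific tool.

```python
from collections import defaultdict


def audit_keyword_map(assignments, target_keywords):
    """Check a keyword map given as (keyword, url) pairs.

    Returns (conflicts, unmapped):
      conflicts - keywords assigned to more than one landing page URL
      unmapped  - target keywords with no landing page at all
    """
    urls_by_keyword = defaultdict(set)
    for keyword, url in assignments:
        # Normalize case and whitespace so "SEO audit" and "seo audit" match.
        urls_by_keyword[keyword.lower().strip()].add(url)

    conflicts = {kw: sorted(urls) for kw, urls in urls_by_keyword.items() if len(urls) > 1}
    normalized_targets = (k.lower().strip() for k in target_keywords)
    unmapped = sorted(kw for kw in normalized_targets if kw not in urls_by_keyword)
    return conflicts, unmapped


# Hypothetical keyword map, for illustration only.
assignments = [
    ("technical seo", "/services/technical-seo"),
    ("seo audit", "/services/seo-audit"),
    ("SEO audit", "/blog/seo-audit-checklist"),
]
targets = ["technical seo", "seo audit", "local seo"]

conflicts, unmapped = audit_keyword_map(assignments, targets)
print(conflicts)  # {'seo audit': ['/blog/seo-audit-checklist', '/services/seo-audit']}
print(unmapped)   # ['local seo']
```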

70. GOOGLE NEWS: –

Google News optimization is the new big thing, and many internet marketing companies have already started working on it.

Optimizing for Google News might provide you with the following benefits:

● This can greatly increase your brand awareness

● This will help with brand signals, which are also a ranking factor for Google and Bing

● This will increase your chances of acquiring good traffic

● This will help improve the credibility of your website

● This will help improve your website’s trust factor

● This will also help with EAT

71. ARCHITECTURAL TAXONOMY: –

This technique deals entirely with the website’s architecture and structural layout.

For instance, a website might have multiple sections such as category pages, service pages, blog sections, archive sections, and much more. To obtain the maximum search ranking benefit, the architectural taxonomy should be fully accurate and consistent.

The main reason is to ensure that the website doesn’t lose any link juice while maintaining a solid silo architecture.
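To make the silo idea concrete, here is a minimal Python sketch that treats the first path segment of each URL as its silo (for example `/services/...` or `/blog/...`) and lists internal links that jump from one silo to another, which is one simple way to review whether link equity stays inside its section. The URL-to-silo rule, the homepage exception and the sample links are assumptions for illustration; real taxonomies are often deeper, and some cross-silo links are intentional.

```python
from urllib.parse import urlparse


def silo_of(url):
    """Treat the first path segment as the silo; the homepage gets 'root'."""
    segments = [s for s in urlparse(url).path.split("/") if s]
    return segments[0] if segments else "root"


def cross_silo_links(links):
    """Return (source, target) internal links whose silos differ.

    Links from or to the homepage are ignored, since the homepage normally
    links into every silo.
    """
    flagged = []
    for source, target in links:
        src, dst = silo_of(source), silo_of(target)
        if "root" in (src, dst):
            continue
        if src != dst:
            flagged.append((source, target))
    return flagged


# Hypothetical internal links, for illustration only.
links = [
    ("https://example.com/", "https://example.com/services/seo-audit"),
    ("https://example.com/services/", "https://example.com/services/seo-audit"),
    ("https://example.com/blog/seo-tips", "https://example.com/blog/"),
    ("https://example.com/blog/seo-tips", "https://example.com/archive/2019/"),
]

print(cross_silo_links(links))
```

Only the last link, from the blog silo into the archive silo, is reported.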

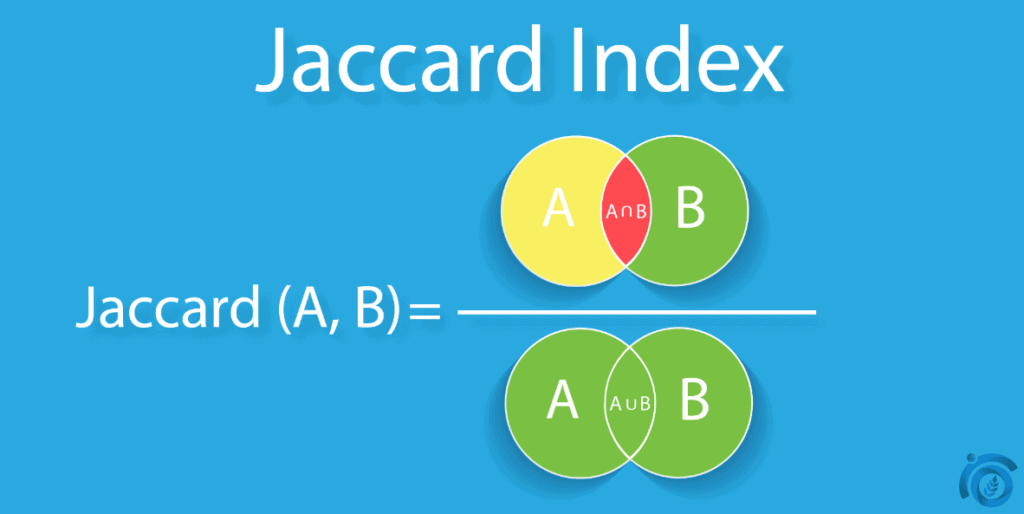

72. TAGS OPTIMIZATION USING JACCARD INDEX: –

People often confuse the Jaccard index with cosine similarity. Both measures produce a similarity value.

The major difference is that cosine similarity is calculated between term vectors or word vectors, whereas the Jaccard index is calculated with binary vectors. In terms of the ROC curve, the Jaccard index can be slightly more accurate than cosine similarity.

It is computed as the ratio of the sum of the element-wise minimums of the two term vectors to the sum of their element-wise maximums.

Mathematically, it is represented as:

$$J_g(a, b) = \frac{\sum_i \min(a_i, b_i)}{\sum_i \max(a_i, b_i)}$$

where $a$ and $b$ are the binary term vectors.
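As a worked example of the Jaccard formula above, the Python sketch below encodes the tags of two pages as binary vectors over their combined vocabulary and computes the sum of element-wise minimums over the sum of element-wise maximums. For binary vectors this equals the familiar set form: the number of shared tags divided by the number of distinct tags. Cosine similarity on the same vectors is printed for comparison. The tag lists and function names are hypothetical.

```python
from math import sqrt


def binary_vectors(tags_a, tags_b):
    """Encode two tag lists as 0/1 vectors over their combined vocabulary."""
    vocab = sorted(set(tags_a) | set(tags_b))
    a = [1 if t in tags_a else 0 for t in vocab]
    b = [1 if t in tags_b else 0 for t in vocab]
    return a, b


def jaccard(a, b):
    """J_g(a, b) = sum_i min(a_i, b_i) / sum_i max(a_i, b_i)."""
    num = sum(min(x, y) for x, y in zip(a, b))
    den = sum(max(x, y) for x, y in zip(a, b))
    return num / den if den else 0.0


def cosine(a, b):
    """Cosine similarity of two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = sqrt(sum(x * x for x in a)) * sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


# Hypothetical tags on two pages, for illustration only.
page_1 = ["seo", "audit", "crawl", "sitemap"]
page_2 = ["seo", "audit", "backlinks"]

a, b = binary_vectors(page_1, page_2)
print(round(jaccard(a, b), 3))  # 2 shared tags / 5 distinct tags -> 0.4
print(round(cosine(a, b), 3))   # 2 / (2 * sqrt(3)) -> 0.577
```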

73. W3C: –

W3C (the World Wide Web Consortium) is the international standards organization for the World Wide Web. It publishes sets of coding standards and guidelines which should be properly followed to make a website fully W3C compliant.

Furthermore, in a correlation study by ThatWare comparing a collection of W3C-compliant websites with websites that are not W3C compliant, we found a small but noticeable correlation between W3C compliance and rankings.
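For readers who want to run a similar check on their own data, the sketch below shows one common way to measure such a relationship: the point-biserial correlation, which is simply the Pearson correlation between a 0/1 variable (W3C compliant or not) and a numeric one (ranking position). The sample numbers are invented purely to exercise the code and are not ThatWare’s data. Note that a lower position number means a better ranking, so a negative coefficient here indicates that the compliant sites in the sample tend to rank higher.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation; with a 0/1 `xs` this is the point-biserial correlation."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


# Invented sample: 1 = W3C compliant, 0 = not compliant; SERP positions.
compliant = [1, 1, 1, 0, 0, 0]
position = [2, 5, 3, 4, 8, 6]

r = pearson(compliant, position)
print(round(r, 3))  # -0.676
```

A real study would also need a much larger sample and a significance test before reading anything into the coefficient.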