
In recent years, search engines have shifted the way users interact with information. Increasingly, Google presents answers directly on the search results page, allowing users to get what they need without ever clicking through to a website. These zero-click searches are becoming the norm, especially for queries like definitions, weather updates, product information, or quick “how-to” guides. For businesses and content creators, this shift means traditional SEO strategies are no longer enough. Ranking high on search results is just part of the equation. The new challenge is ensuring your content is positioned to capture attention even when users do not click through.

Optimizing for zero-click searches has emerged as a critical strategy in modern SEO. By structuring content to appear in featured snippets, knowledge panels, and rich results, websites can maintain visibility, build brand authority, and guide users toward additional resources. Zero-click optimization is not just about visibility; it is about demonstrating expertise and relevance in ways that search engines recognize and reward. A site that consistently answers queries comprehensively can establish itself as a go-to resource in its niche, increasing trust and long-term engagement.

This is where topical authority and coverage analysis play a pivotal role. Topical authority measures how deeply and credibly a website addresses a subject, rewarding sites that cover topics in detail, with interconnected articles and comprehensive information. Coverage analysis evaluates how thoroughly a site addresses all related subtopics, identifying gaps that might prevent a page from fully satisfying user intent. Together, these approaches ensure that content is not only relevant but exhaustive, creating a foundation for zero-click visibility.

Artificial intelligence and natural language processing are transforming the way content can achieve this. Embedding similarity and RoBERTa provide sophisticated methods to analyze and structure content. Embedding similarity converts text into numerical vectors, allowing semantic comparisons with target queries, while RoBERTa, a powerful transformer model, understands context and identifies missing elements or gaps in coverage. Leveraging these tools enables websites to map content clusters, optimize for intent, and build a network of pages that strengthen topical authority.

By following these techniques, content creators can design strategies that cover every angle of a topic, anticipate user questions, and increase the likelihood of appearing in zero-click results. This approach not only boosts visibility but also positions a website as a trusted authority in its niche.

Understanding Topical Authority in SEO

Definition of Topical Authority

Topical authority is a measure of how knowledgeable and credible a website is on a specific subject. It goes beyond targeting individual keywords. A site with strong topical authority consistently publishes detailed, high-quality content that addresses a wide range of aspects related to a topic. This demonstrates expertise, trustworthiness, and relevance, which search engines recognize when ranking content.

Why Google Rewards Topical Authority

Search engines aim to provide users with the most reliable and comprehensive answers. Websites that demonstrate authority on a topic are more likely to satisfy user intent across multiple queries. Google rewards these sites because they reduce the likelihood of users needing to visit multiple sources to get complete information. Authority signals ensure that search engines can trust the site to be a go-to resource in its niche.

Trust & Expertise Signals

Trust and expertise are critical components of topical authority. Trust is built through accurate, well-researched content, citations from authoritative sources, and positive user interactions. Expertise is demonstrated through in-depth coverage of a topic, advanced insights, case studies, and actionable advice. When both trust and expertise are visible, search engines are more likely to prioritize the site in rankings and feature it in zero-click results.

Depth of Coverage & Interlinking

Depth of coverage refers to how extensively a website addresses all aspects of a topic. Internal linking plays a key role in structuring this content. Linking related articles allows search engines to understand the relationship between topics and subtopics, forming a knowledge network. A well-linked network of content signals comprehensive coverage, which strengthens the site’s authority.
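One way to check whether a content network is actually connected is to treat internal links as a directed graph and look for pages that cannot be reached from the main hub page. The sketch below is only an illustration: the URLs and the `links` mapping are hypothetical, and a real audit would build the graph from a crawl.

```python
from collections import deque

def reachable_pages(links, start):
    """Breadth-first search over internal links, returning every page reachable from start."""
    seen = {start}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in seen:
                seen.add(target)
                queue.append(target)
    return seen

# Hypothetical internal link graph: page -> pages it links to.
links = {
    "/ai-healthcare": ["/ai-diagnostics", "/ai-patient-monitoring"],
    "/ai-diagnostics": ["/ai-healthcare", "/ai-case-studies"],
    "/ai-patient-monitoring": ["/ai-healthcare"],
    "/ai-case-studies": [],
    "/ai-regulatory-compliance": ["/ai-healthcare"],  # links out, but nothing links to it
}

# Pages that exist on the site but cannot be reached from the hub page.
orphans = sorted(set(links) - reachable_pages(links, "/ai-healthcare"))
print("Unreachable from hub:", orphans)
```

Pages that show up as unreachable are candidates for new internal links from related articles.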

Examples of Topical Authority

A clear example is a website covering AI in healthcare. Publishing just one article on AI applications in diagnostics shows limited expertise. In contrast, a site with multiple articles covering AI in diagnostics, patient monitoring, ethical considerations, regulatory compliance, emerging tools, and case studies demonstrates full coverage and authority.

Difference Between Authority and Keyword Frequency

Topical authority is about quality, not keyword repetition. High keyword density alone does not build authority. Keyword stuffing may temporarily boost rankings but cannot replace comprehensive content, structured coverage, and expertise. Authority requires consistent creation of informative content that genuinely addresses user intent.

How Topical Authority Impacts Rankings

Websites with strong topical authority are more likely to rank for both head terms, which are competitive high-volume keywords, and long-tail keywords, which are more specific queries. This combination ensures broader visibility and stronger positioning as a niche authority.

Zero-Click Opportunities

High topical authority increases the chances of appearing in zero-click results. Google favors authoritative sites for featured snippets, knowledge panels, and rich answers. By thoroughly covering a topic, a website can provide direct answers to queries, reducing user effort and boosting brand credibility without requiring a click.

Coverage Analysis in SEO

Definition of Coverage Analysis

Coverage analysis is the process of evaluating how thoroughly a website addresses all aspects of a particular topic. It measures whether content sufficiently covers relevant subtopics, answers potential user queries, and leaves no gaps in information. Essentially, it ensures that a website’s content is complete, interconnected, and aligned with search intent.

Why Coverage Matters for Topical Authority

A website may produce many articles, but without proper coverage, its authority is limited. Comprehensive coverage signals to search engines that a site is an expert in its niche. By addressing all relevant aspects of a topic, a website demonstrates depth and reliability, which are key criteria in Google’s ranking algorithm. Strong coverage reduces the chances that users need to look elsewhere for answers, increasing both engagement and trust.

Avoiding Content Gaps

Content gaps occur when important subtopics, questions, or perspectives are missing from a site. These gaps can prevent a website from ranking for certain queries and weaken its topical authority. Conducting coverage analysis identifies these gaps, allowing content creators to fill missing pieces and create a complete resource that satisfies both search engines and users.

Meeting User Intent Comprehensively

Coverage analysis helps ensure content aligns with user intent. Different users searching for the same main topic may have varying needs. Some may seek beginner-friendly explanations, while others look for detailed technical insights. By mapping content to cover all possible user intents, websites can capture a broader audience and increase the likelihood of appearing in featured snippets or zero-click results.

Components of Coverage Analysis

- Identifying Subtopics

Subtopics are the smaller, related aspects of a main topic. Identifying them ensures content addresses the topic from multiple angles. For example, for “Electric Vehicle Charging,” subtopics include home charging solutions, public charging infrastructure, fast versus slow charging, apps for EV charging, and government policies.

- Evaluating Content Completeness

Once subtopics are identified, content must be evaluated for depth and detail. Are the articles thorough enough to answer user questions? Are key points, statistics, or case studies included? This evaluation ensures no subtopic is left partially addressed, maintaining the website’s authority.

Tools for Coverage Analysis

- Manual Topic Mapping

This involves listing all potential subtopics and verifying whether each has a dedicated, comprehensive article. Manual mapping helps visualize gaps and plan content creation.

- SEO AI/NLP Tools

Advanced AI tools, like embedding similarity models or NLP-based content analyzers, can automatically compare your content with competitors or search intent. These tools identify missing subtopics, semantic gaps, and opportunities to improve coverage, making analysis faster and more precise.
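Manual topic mapping can be sketched in a few lines: list the subtopics a topic requires, record which ones already have a dedicated article, and report the rest as gaps. The subtopics come from the electric vehicle charging example above; the URLs and the `coverage_report` helper are hypothetical.

```python
# Subtopics taken from the "Electric Vehicle Charging" example.
required_subtopics = [
    "home charging solutions",
    "public charging infrastructure",
    "fast versus slow charging",
    "apps for EV charging",
    "government policies",
]

# Hypothetical inventory: subtopic -> URL of the article that covers it.
published = {
    "home charging solutions": "/blog/home-ev-charging",
    "fast versus slow charging": "/blog/fast-vs-slow-charging",
}

def coverage_report(required, inventory):
    """Return (covered, missing, ratio) for a list of required subtopics."""
    covered = [s for s in required if s in inventory]
    missing = [s for s in required if s not in inventory]
    ratio = len(covered) / len(required) if required else 0.0
    return covered, missing, ratio

covered, missing, ratio = coverage_report(required_subtopics, published)
print(f"Coverage: {ratio:.0%}")
for subtopic in missing:
    print("Gap:", subtopic)
```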

By systematically conducting coverage analysis, websites can enhance topical authority, align with user intent, and improve their chances of appearing in zero-click search results. Proper coverage ensures that users find complete answers in one place, which strengthens trust, engagement, and search engine recognition.

AI and NLP in SEO: Embedding Similarity and RoBERTa Algorithm

Introduction to Embedding Similarity

Embedding similarity is a method that allows websites to understand the meaning of content rather than just matching exact keywords. It uses artificial intelligence to convert text into mathematical representations called vectors. These vectors capture the semantic meaning of words, sentences, and paragraphs, enabling AI to measure how closely a piece of content aligns with a specific topic or user query.

Unlike traditional SEO methods that rely on keyword frequency, embedding similarity evaluates the intent behind the content, ensuring that pages truly answer what users are looking for.

Converting Content into Vectors

The first step in embedding similarity is vectorization. Every word, phrase, and sentence is transformed into a vector in a multi-dimensional space, where semantically similar pieces of text are located closer together.

For example, an article about “fast charging for electric vehicles” and one about “rapid EV charging stations” may use different terms but convey the same concept. Vectorization allows these two articles to be recognized as contextually similar, providing a more accurate assessment of coverage and relevance across the website.
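To make the idea concrete, here is a toy sketch of vectorization. It is not how a production model works: the three-dimensional word vectors are hand-picked, hypothetical values, and averaging them (mean pooling) stands in for the learned embeddings a transformer model would produce. It only shows how two differently worded phrases can end up with similar vectors.

```python
# Hand-made 3-dimensional word vectors (hypothetical values, for illustration only).
# The dimensions loosely stand for: [speed, electric vehicle, charging].
WORD_VECTORS = {
    "fast":     [0.9, 0.0, 0.1],
    "rapid":    [0.8, 0.1, 0.1],
    "charging": [0.1, 0.3, 0.9],
    "electric": [0.0, 0.9, 0.2],
    "vehicles": [0.0, 0.8, 0.1],
    "ev":       [0.0, 0.9, 0.2],
    "stations": [0.0, 0.2, 0.7],
}

def embed(text):
    """Average the vectors of known words (mean pooling); unknown words are skipped."""
    vectors = [WORD_VECTORS[w] for w in text.lower().split() if w in WORD_VECTORS]
    if not vectors:
        return [0.0, 0.0, 0.0]
    dims = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dims)]

a = embed("fast charging for electric vehicles")
b = embed("rapid EV charging stations")
print([round(x, 3) for x in a])
print([round(x, 3) for x in b])
```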

Comparing Semantic Similarity Using Cosine Similarity and Manhattan Distance

After content is vectorized, measures such as cosine similarity and Manhattan distance are used to quantify closeness. Cosine similarity evaluates the angle between two vectors and ignores their length: values near 1 mean the two texts point in the same semantic direction.

Manhattan distance sums the absolute differences between corresponding vector components, so smaller values mean closer vectors. By combining these methods, websites can identify which pages most closely match target topics, spot content gaps, and determine areas that need improvement to achieve full coverage.
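Both measures are short enough to write out directly. The sketch below uses plain Python lists as vectors; the example vectors are made up to show how the two measures can disagree.

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between u and v; 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)

def manhattan_distance(u, v):
    """Sum of absolute component-wise differences (L1 distance)."""
    return sum(abs(a - b) for a, b in zip(u, v))

query = [1.0, 0.0, 1.0]
page_a = [2.0, 0.0, 2.0]  # same direction as the query, larger magnitude
page_b = [0.0, 1.0, 0.0]  # orthogonal to the query

for name, vec in [("page_a", page_a), ("page_b", page_b)]:
    print(name, round(cosine_similarity(query, vec), 3), manhattan_distance(query, vec))
```

Here `page_a` points in exactly the same direction as the query, so its cosine similarity is at the maximum, yet its Manhattan distance is not zero because the vector is longer. That difference is why using both measures together can be more informative than using either alone.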

Introduction to RoBERTa Algorithm

RoBERTa, created by Facebook AI, is a transformer-based model designed to analyze text with contextual understanding. Unlike older models, RoBERTa is trained on vast datasets and optimized for nuanced comprehension of language.

It can process entire paragraphs, recognize relationships between concepts, and understand subtle meanings. In SEO, RoBERTa helps identify semantic gaps, missing entities, and incomplete coverage that traditional keyword analysis might overlook.

How RoBERTa Surpasses BERT in Performance

RoBERTa builds on BERT’s architecture but changes the training recipe: it drops the next-sentence-prediction objective, applies dynamic masking, and trains longer with larger batches on more data. This results in better accuracy in understanding context and intent. For SEO, it can detect when content partially addresses a topic, misses subtopics, or fails to answer potential user questions.

This makes it invaluable for creating authoritative content that satisfies both user needs and search engine evaluation.

Combining RoBERTa and Embeddings for SEO

Together, embedding similarity and RoBERTa provide a powerful framework for content analysis. Embeddings quantify semantic closeness between pages and queries, while RoBERTa adds context awareness.

This combination allows websites to detect content gaps, identify missing subtopics, and uncover hidden opportunities for deeper coverage. For example, if a page on electric vehicle charging only covers home charging but omits public charging networks and regulatory policies, RoBERTa can highlight these gaps while embeddings rank content similarity to the intended query.

Benefits for Zero-Click SEO

The ultimate benefit is improving zero-click search optimization. Content analyzed and optimized with embeddings and RoBERTa is more likely to appear in featured snippets, knowledge panels, and other rich results that answer queries directly. By fully addressing user intent and covering all related subtopics, websites increase their chances of delivering value without requiring clicks, enhancing visibility and credibility.

Mapping Your Content Versus Competitors

Another advantage is benchmarking against competitor content. RoBERTa can analyze competitor articles, highlighting subtopics they cover and gaps in your own content. Embedding similarity then evaluates which pages match the user query most closely. This approach ensures your website maintains topical authority, stays competitive, and maximizes opportunities for zero-click results.

In conclusion, embedding similarity and RoBERTa allow SEO strategies to move beyond traditional keyword-focused methods. They enable websites to understand context, detect gaps, optimize content for intent, and strengthen topical authority.

By leveraging these AI and NLP tools, businesses can create comprehensive, high-quality content that satisfies users, earns search engine trust, and captures both clicks and zero-click visibility.

Conceptual Analogy: Building a City

Agent A vs Agent B Analogy

Imagine two agents tasked with creating a city. Agent A builds a home, a park, a school, and a police station. While these are important, much is missing. Agent B builds homes, roads, schools, parks, police stations, hospitals, colleges, universities, and industrial areas. This city is complete, serving all the needs of its residents. The contrast is clear: Agent A leaves gaps, while Agent B ensures full coverage and functionality.

Explaining Gaps, Full Coverage, and Society Needs

Gaps in content are like missing structures in a city. Without hospitals or roads, residents’ needs are unmet. Similarly, a website that only covers a few aspects of a topic fails to address user queries fully. Full coverage ensures all essential elements are included. Meeting societal needs in a city is like fulfilling user intent in SEO. Every subtopic, question, and related concept must be addressed to provide a complete experience.

SEO Perspective

Search engines prioritize websites that offer comprehensive coverage. Google evaluates how well content satisfies user intent across multiple queries. A page that partially addresses a topic is less likely to rank than one that thoroughly answers all related questions. Just as a city cannot function effectively with missing infrastructure, a website cannot achieve authority without covering all relevant subtopics.

Why Search Engines Favor Comprehensive Coverage

Comprehensive content signals expertise, trustworthiness, and authority. Search engines detect patterns in internal linking, content depth, and topical breadth to determine whether a website can be relied upon as a resource. Missing subtopics create weaknesses, reducing the likelihood of appearing in featured snippets, knowledge panels, or zero-click results. A well-covered topic demonstrates reliability and value.

Lessons for Content Strategy

To emulate Agent B, content creators must first identify all relevant subtopics. Each page should connect to others through internal links, forming a cohesive network similar to a city’s roads linking neighborhoods. Structured content, clear headings, and clusters of related articles help ensure no topic is overlooked. This approach strengthens topical authority and improves rankings across both head terms and long-tail queries.

User Intent as Basic Needs

User intent is comparable to the fundamental needs of a city’s residents. Just as a city must provide shelter, safety, healthcare, and education, a website must fulfill informational, transactional, and navigational needs. Missing topics or unanswered queries are equivalent to a city without hospitals or schools. Addressing these gaps ensures users find complete answers in one place.

How Missing Topics Equal Missed Opportunities

Any gaps in content leave room for competitors to attract users. Pages that fail to cover all aspects of a topic risk losing traffic, trust, and authority. By systematically identifying and filling these gaps, websites become comprehensive resources, capturing more queries and increasing visibility for both clicks and zero-click results.

ThatWare’s Approach to Topical Authority and Coverage Optimization

At ThatWare, we specialize in leveraging advanced AI and NLP technologies to help our clients achieve unmatched topical authority and comprehensive content coverage. Our team combines deep SEO expertise with state-of-the-art tools like embedding similarity models and the RoBERTa algorithm to analyze, optimize, and structure content that aligns perfectly with user intent.

By identifying semantic gaps, clustering relevant topics, and ensuring every subtopic is thoroughly addressed, we build content networks that not only satisfy search engines but also enhance user experience. This approach allows our clients to dominate both regular and zero-click search results, gaining maximum visibility and authority in their niche.

Let’s understand this with a very simple example:

Suppose the objective is to create a city.

Agent A builds a home + park + police station + school.

Agent B builds homes + roads + schools + parks + police station + college + university + hospitals + industries, etc.

A judge, “X”, decides which agent has done the job more completely.

Here, X chooses Agent B as the winner.

Why?

1. No gaps

2. Full coverage

3. Society's needs

4. Human connection / basic needs of survival

From the perspective of a search engine algorithm, a website that covers all the topics needed to satisfy the user's search intent for a particular query wins the game and is rewarded with rankings by the search engine.

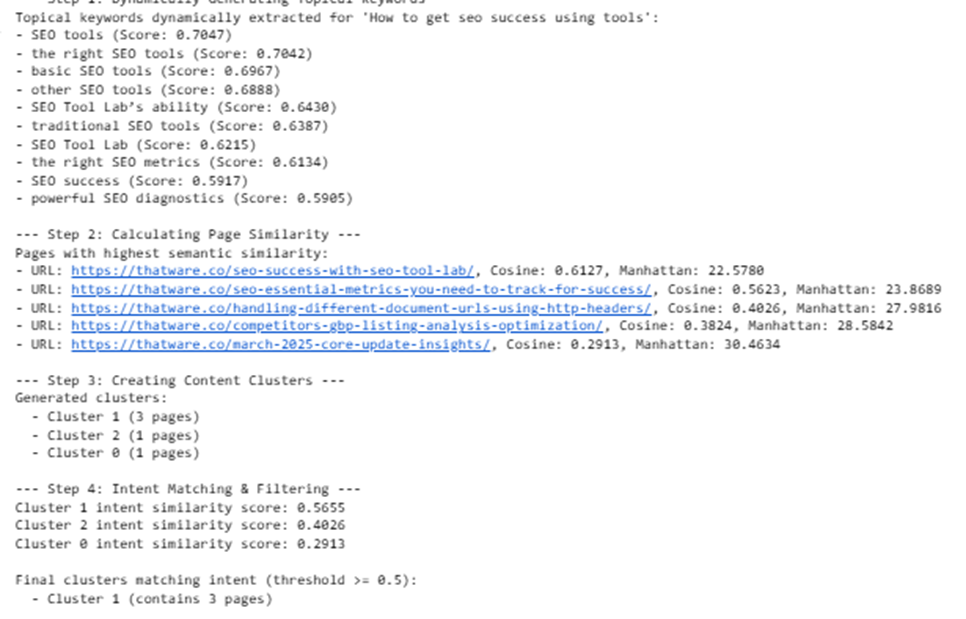

So, how can we optimize a website for topical coverage using embedding similarity and the RoBERTa algorithm?

The steps are as follows:

Step 1:

Take the search query or topic as the first input.

Take the target content page and related pages or other blog pages as the second input, and run them through the RoBERTa content-to-word vectorization algorithm,

where the words of the content are converted into vector embeddings (vector space modelling).

For example, if we want to find all the relevant keywords that help cover the topical graph of the chosen topic or query, this step provides the list of words that we can work into the content naturally to increase topical relevance.

Step 2:

Next, run the embedding similarity algorithm, in which two core measures, cosine similarity and Manhattan distance, identify the pages that most closely resemble the topic.

Take the outputs of the two programs: the list of pages with the highest cosine similarity and the list of pages with the smallest Manhattan distance.

Then intersect the two lists to get the final list of unique pages that have both the highest cosine similarity and the closest resemblance.
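Step 2 can be sketched as follows. This is an illustration under assumptions, not the code from the linked notebook: the page vectors are made-up two-dimensional values, and the `shortlist` helper and its `top_k` cut-off are hypothetical names chosen here.

```python
import math

def cosine_similarity(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0

def manhattan_distance(u, v):
    return sum(abs(a - b) for a, b in zip(u, v))

def shortlist(query_vec, page_vecs, top_k):
    """Keep pages that are in the top_k by cosine similarity AND the top_k by Manhattan distance."""
    by_cosine = sorted(
        page_vecs, key=lambda url: cosine_similarity(query_vec, page_vecs[url]), reverse=True
    )[:top_k]
    by_manhattan = sorted(
        page_vecs, key=lambda url: manhattan_distance(query_vec, page_vecs[url])
    )[:top_k]
    keep = set(by_manhattan)
    # Preserve the cosine ranking order in the final list.
    return [url for url in by_cosine if url in keep]

# Hypothetical query and page embeddings.
query = [1.0, 1.0]
pages = {
    "/home-charging": [0.9, 1.1],
    "/public-charging": [1.2, 0.8],
    "/ev-tax-credits": [3.0, 3.0],  # same direction as the query, but far away
    "/car-wash-tips": [1.0, 0.0],   # near in distance, but pointing elsewhere
}

final_pages = shortlist(query, pages, top_k=3)
print(final_pages)
```

In this toy data, one page ranks well only by cosine similarity and another only by Manhattan distance, so the intersection drops both.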

Step 3:

Create clusters from the pages shortlisted in the previous step, using the K-Means embedding algorithm to form the clusters.

Each cluster should be distinct and unique.
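A minimal sketch of the clustering step, assuming plain k-means (Lloyd's algorithm) over page embeddings. The farthest-point initialisation is a choice made here to keep the example deterministic, and the page vectors are hypothetical.

```python
def squared_distance(u, v):
    return sum((a - b) ** 2 for a, b in zip(u, v))

def mean(vectors):
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]

def kmeans(points, k, iterations=20):
    """Plain Lloyd's k-means with deterministic farthest-point initialisation."""
    centroids = [points[0]]
    while len(centroids) < k:
        # Pick the point that is farthest from its nearest existing centroid.
        farthest = max(points, key=lambda p: min(squared_distance(p, c) for c in centroids))
        centroids.append(farthest)
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        labels = [min(range(k), key=lambda j: squared_distance(p, centroids[j])) for p in points]
        # Update step: each centroid moves to the mean of its members.
        new_centroids = []
        for j in range(k):
            members = [p for p, label in zip(points, labels) if label == j]
            new_centroids.append(mean(members) if members else centroids[j])
        if new_centroids == centroids:
            break
        centroids = new_centroids
    return labels, centroids

# Hypothetical embeddings for the shortlisted pages.
pages = {
    "/home-charging": [0.9, 0.1],
    "/wallbox-install": [0.8, 0.2],
    "/public-networks": [0.1, 0.9],
    "/fast-charger-map": [0.2, 0.8],
}
points = list(pages.values())
labels, centroids = kmeans(points, k=2)
for url, label in zip(pages, labels):
    print(label, url)
```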

Step 4:

Now match the intent of the initial query or topic against each cluster,

and eliminate any cluster that does not match the intent.
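The article does not specify how intent matching is scored. One simple possibility, shown here purely as an assumption, is to compare the query embedding with each cluster centroid using cosine similarity and drop clusters below a threshold. The centroid values, cluster names, and the 0.8 threshold are all hypothetical.

```python
import math

def cosine_similarity(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0

def filter_clusters(query_vec, centroids, threshold):
    """Keep clusters whose centroid is at least `threshold` similar to the query."""
    kept = {}
    for name, centroid in centroids.items():
        score = cosine_similarity(query_vec, centroid)
        if score >= threshold:
            kept[name] = score
    return kept

# Hypothetical query embedding and cluster centroids.
query = [1.0, 0.2]
centroids = {
    "home charging": [0.85, 0.15],
    "public charging": [0.6, 0.5],
    "car maintenance": [0.05, 0.95],
}

kept = filter_clusters(query, centroids, threshold=0.8)
for name, score in kept.items():
    print(f"{name}: {score:.3f}")
```

The threshold would need tuning against real data; a value that is too strict discards useful supporting clusters, while one that is too loose lets off-intent clusters through.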

Here is the Google Colab link for the experiment:

https://colab.research.google.com/drive/1Q9m1bo9aK7X0Q_tCMVqWbq128sEf8g-d

Input:

Final output:

We then focus only on those clusters when creating the topical network for the query, since they satisfy all the requirements and enhance topical authority coverage for zero-click results.

Advanced Techniques for Zero-Click Search Optimization

Structured Data and Schema Markup

Structured data and schema markup play a critical role in zero-click search optimization. By adding structured data to web pages, websites provide search engines with explicit information about the content, such as products, services, events, or articles. This helps Google and other search engines understand the context and relevance of content beyond simple keywords.

Proper implementation of schema markup increases the likelihood of content appearing in rich results, knowledge panels, and featured snippets. Structured content acts as a roadmap for search engines, highlighting key information and enhancing visibility without requiring users to click through to the site.

How Google Interprets Structured Content

Google uses structured content to extract information in a format it can interpret and display directly on the search results page. For instance, a recipe page marked with structured data can show ingredients, cooking time, and ratings directly in search results. Similarly, a service page can display pricing, reviews, or availability.

By aligning content with structured data standards, websites provide clear signals that make it easier for search engines to feature the content prominently, which is essential for zero-click optimization.
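As an illustration of the recipe example, the sketch below builds a schema.org `Recipe` object as JSON-LD and wraps it in the script tag that would go in the page's HTML. The recipe values and the `recipe_jsonld` helper are hypothetical, and only a handful of the available properties are shown.

```python
import json

def recipe_jsonld(name, ingredients, cook_minutes, rating, rating_count):
    """Build a schema.org Recipe object as a plain dict."""
    return {
        "@context": "https://schema.org",
        "@type": "Recipe",
        "name": name,
        "recipeIngredient": list(ingredients),
        "cookTime": f"PT{cook_minutes}M",  # ISO 8601 duration, e.g. PT30M
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating,
            "ratingCount": rating_count,
        },
    }

# Hypothetical recipe data.
recipe = recipe_jsonld("Tomato Soup", ["4 tomatoes", "1 onion", "500 ml stock"], 30, 4.6, 128)

snippet = '<script type="application/ld+json">\n' + json.dumps(recipe, indent=2) + "\n</script>"
print(snippet)
```

Markup like this should be validated with a structured data testing tool before it is published, since search engines ignore objects with missing required properties.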

Featured Snippets Optimization

Featured snippets are a prime opportunity for zero-click traffic. To optimize for these, content must be concise, authoritative, and directly answer common user queries. Using the topic clusters developed through embedding similarity and RoBERTa analysis, websites can structure content to target specific queries.

Breaking down answers into bullet points, numbered steps, or short paragraphs improves readability and increases the chances of being selected for a snippet. Optimized featured snippets provide immediate value to users, establish authority, and attract attention without requiring a click.

Using Clusters to Answer User Queries Directly

Topic clusters allow websites to address multiple aspects of a query comprehensively. By organizing content into clusters based on relevance and user intent, search engines can pull precise answers directly from pages.

Each cluster acts as a mini knowledge network, covering everything from basic definitions to advanced insights. When clusters are aligned with user questions, websites increase the probability of appearing in zero-click formats, including instant answers, knowledge cards, and FAQs.

FAQ and How-to Content Integration

Integrating FAQ sections and how-to guides is an effective way to boost zero-click visibility. These formats directly anticipate user queries and provide structured, clear answers. Google has displayed FAQ and how-to content in rich result boxes on the search page itself, although since 2023 it has limited FAQ rich results to a narrow set of authoritative sites and retired how-to rich results, so the main value of these formats now lies in giving search engines concise, extractable answers. Regularly updating these sections based on emerging queries ensures that content stays relevant and maintains authority over time.

Content Refresh and Gap Analysis

Zero-click optimization requires continuous monitoring and updating of content. Regular content refresh ensures that topic clusters remain comprehensive and up to date. Gap analysis identifies missing subtopics, outdated information, or poorly performing pages, allowing websites to fill these gaps promptly. Refreshing content and expanding coverage strengthens topical authority, improves user satisfaction, and increases the likelihood of appearing in featured snippets and other zero-click formats.

By combining structured data, optimized snippets, well-organized clusters, FAQ integration, and continuous content refresh, websites can achieve effective zero-click search optimization. These techniques ensure that content not only ranks well but also provides immediate value to users, strengthens authority, and maximizes visibility in modern search environments.

User Engagement and Interaction Metrics

User engagement and interaction metrics are essential indicators of content performance, especially when optimizing for zero-click search results. These metrics help evaluate whether your content not only ranks but also effectively satisfies user intent. Tracking engagement provides valuable insights into how users interact with your website, which areas perform well, and where improvements are needed to maintain authority and relevance.

Click-Through Rate (CTR)

Click-through rate measures the percentage of users who click on a link after seeing it in search results. While zero-click searches reduce some direct clicks, monitoring CTR on related pages remains crucial. High CTR indicates that users find your content appealing and relevant to their queries. Conversely, a low CTR signals a disconnect between the search snippet and the content’s perceived value. By analyzing CTR across topic clusters, websites can identify which headlines, meta descriptions, and structured content formats resonate most with users, allowing for optimization to attract more clicks where needed.
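CTR is simply clicks divided by impressions, expressed as a percentage. A small sketch, using made-up numbers per topic cluster:

```python
def click_through_rate(clicks, impressions):
    """CTR as a percentage; returns 0.0 when there are no impressions."""
    if impressions == 0:
        return 0.0
    return 100.0 * clicks / impressions

# Hypothetical search data per topic cluster: (clicks, impressions).
cluster_stats = {
    "home charging": (120, 4000),
    "public charging": (45, 3000),
}

ctr_by_cluster = {
    name: click_through_rate(clicks, impressions)
    for name, (clicks, impressions) in cluster_stats.items()
}
for name, ctr in ctr_by_cluster.items():
    print(f"{name}: {ctr:.1f}%")
```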

Dwell Time / Time on Page

Dwell time, or time on page, measures how long users stay on a webpage. Longer dwell times suggest that content is satisfying user intent, providing comprehensive and relevant information. For SEO, dwell time acts as an implicit signal of topical relevance and quality.

Pages with higher dwell times indicate that users are engaging deeply with the material, which can strengthen authority in the eyes of search engines. Monitoring dwell time across different clusters helps identify which sections of content fully meet user needs and which require additional depth or clarity.

Scroll Depth

Scroll depth measures how far users read down a page, providing insight into whether the content is fully consumed. Pages with high scroll depth indicate that users are exploring the content in detail, while shallow scroll depth may reveal that users are not finding the information they need or lose interest quickly.

This metric is particularly useful when content is structured in long-form articles or topic clusters. Optimizing layout, headings, and readability can encourage users to engage with content more thoroughly, ensuring that the full scope of a topic is covered.

Bounce Rate

Bounce rate tracks the percentage of users who leave a page without interacting with other pages on the website. A low bounce rate suggests that content aligns well with user intent and encourages further exploration.

When combined with internal linking between topic clusters, a low bounce rate indicates that users are navigating seamlessly through related content, reinforcing topical authority. High bounce rates, on the other hand, may signal gaps in coverage or poor alignment with user expectations, highlighting areas that need improvement.
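Following the definition above, bounce rate can be computed from session data as the share of sessions that viewed only one page. The session lists below are hypothetical, and analytics tools may define a bounce differently (for example, by engagement time), so treat this as a sketch of the single-page definition only.

```python
def bounce_rate(sessions):
    """Percentage of sessions that viewed exactly one page."""
    if not sessions:
        return 0.0
    bounces = sum(1 for pages in sessions if len(pages) == 1)
    return 100.0 * bounces / len(sessions)

# Hypothetical sessions: each is the ordered list of pages a visitor viewed.
sessions = [
    ["/home-charging"],
    ["/home-charging", "/wallbox-install"],
    ["/public-networks", "/fast-charger-map", "/home-charging"],
    ["/fast-charger-map"],
]

rate = bounce_rate(sessions)
print(f"Bounce rate: {rate:.1f}%")
```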

Internal Linking Interaction

Internal linking interaction measures how users navigate through your topical clusters. Strong internal engagement signals authority to search engines because it shows that users are exploring multiple pages within the same topic, finding relevant information, and staying engaged with the content network. This metric complements embedding-based semantic relevance and provides behavioral proof that your coverage strategy is effective from a user perspective. Properly structured internal linking ensures that both search engines and visitors can access the full breadth of your content, strengthening topical authority and improving overall SEO performance.

Integrating Metrics for Comprehensive Insights

These engagement metrics work together to provide a holistic view of content effectiveness. While embedding similarity and RoBERTa algorithms ensure semantic relevance and coverage, user behavior metrics offer real-world feedback on how well content satisfies actual visitors. By continuously tracking CTR, dwell time, scroll depth, bounce rate, and internal linking interaction, websites can refine their content strategies, improve topical authority, and enhance performance for both traditional and zero-click search results.

In conclusion, analyzing user engagement metrics is vital for measuring the success of content strategies. These insights complement AI-driven content optimization, ensuring that pages not only rank but also deliver meaningful experiences to users, strengthen authority, and maintain visibility in competitive search environments.

Challenges and Limitations

While optimizing for zero-click search results using topical authority, embedding similarity, and RoBERTa offers significant advantages, there are several challenges and limitations that SEO professionals must consider.

Technical Complexity

Implementing advanced AI and NLP-based SEO strategies requires technical expertise. Setting up vectorization models, running embedding similarity analyses, and using transformer algorithms like RoBERTa demands knowledge of data science, programming, and machine learning. Without proper expertise, executing these processes efficiently can be difficult and may lead to inaccurate insights.

Requires AI and NLP Understanding

SEO teams must understand how natural language processing works to interpret semantic relevance, embeddings, and context-based analyses correctly. Misinterpreting results can cause content strategies to focus on the wrong subtopics or fail to address user intent effectively. Continuous training and familiarity with AI tools are necessary for accurate implementation.

Content Quality Versus Quantity

A common challenge is balancing content volume with quality. While building comprehensive topic clusters is important, producing large amounts of low-quality content can harm user experience and reduce authority. Each piece must be valuable, well-researched, and aligned with user intent.

Avoiding Keyword Stuffing

Even with semantic optimization, there is a risk of over-optimizing content by inserting excessive keywords or semantically related terms unnaturally. Keyword stuffing can negatively impact rankings and diminish readability. Proper content structuring and natural language integration are essential.

Evolving Search Algorithms

Search engines continuously update algorithms, affecting how topical authority and zero-click content are evaluated. Strategies that work today may require adjustments tomorrow, making adaptability critical.

Continuous Monitoring Needed

Finally, optimizing for zero-click results is not a one-time task. Regular monitoring, content updates, and gap analysis are required to maintain authority, cover emerging subtopics, and ensure content continues to satisfy user intent.

Understanding these challenges allows businesses to approach advanced SEO strategies strategically, mitigating risks while maximizing results.

Conclusion

Topical authority and coverage analysis are vital for modern SEO. By thoroughly addressing all relevant subtopics, websites build trust, expertise, and authority in their niche. Comprehensive content satisfies user intent and signals reliability to search engines, increasing visibility in both traditional and zero-click results. Embedding similarity and RoBERTa algorithms help identify semantic gaps, missing subtopics, and content opportunities, allowing websites to align closely with user queries.

Structured content, topic clusters, and internal linking further strengthen authority while enhancing the chances of appearing in featured snippets and knowledge panels.

Looking forward, zero-click search trends will continue to rise, making AI-powered content optimization essential. Continuous monitoring, content refresh, and gap analysis ensure websites maintain relevance and authority over time. Implementing semantic analysis and structured data allows SEOs to provide meaningful answers, improve engagement metrics, and outperform competitors.

Businesses that invest in high-quality, comprehensive content supported by AI tools will not only achieve better rankings but also deliver valuable experiences to users, positioning themselves for long-term growth in a competitive search landscape.
