
In this age, the interface disappears, replaced by voice, gesture, emotion, and sensation. Search is no longer typed; it is felt. As THATWARE champions its vision of Zero-UI SEO, we are invited into a world where seamless machine-to-human communication is not just a novelty but infrastructure, quietly re-reading our intentions and responding through haptics, motion, and neural signals. Physical space and the digital realm collapse into one, and those same signals guide search intent across both.

When our fingertips pause on a screen, waiting for a cursor or search box to blink, we are witnessing the twilight of the old paradigm. The new sensory web, where ambient sensors, voice analytics and gesture recognition converge, renders the screen optional, even obsolete. According to recent market research, the global “Zero UI technologies” market is surging, driven by voice assistants, sensors, AI-powered ambient systems and the demand for “frictionless” interaction. One estimate suggests that by 2028 nearly 70% of customer journeys will take place entirely through AI-driven conversational interfaces.

What does this shift mean for search? It means moving from a query tool to a sensing ecosystem. You no longer type “weather in Kolkata”; the system reads your morning routine, the humidity in your room, the lingering scent of rain, and simply tells you: “It’s going to rain in two hours; umbrella advised.” The interface isn’t a screen with a search bar; the interface is you, your environment, your intent. Voice, gesture, proximity and even neural patterns become frames of reference. The web senses. The web anticipates.

In this chapter—“The Sensory Web and Zero-UI Revolution”—we will explore how this transformation is underway: how the web is increasingly “invisible,” how the very idea of an interface is dissolving, how search is no longer where you look but what you are. We’ll unpack the architecture of this new system: sensor networks, ambient intelligence, and human-machine fusion. We’ll investigate how THATWARE’s Zero-UI SEO vision harnesses these modalities, enabling machines to interpret subtle signals—haptic pressure, gesture arcs, even brain-wave impulses—and align them to search intent across contexts. Finally, we’ll assess the implications: for user experience, privacy, discoverability, and the future of meaning in an age when the “search box” fades away.

The following concepts illustrate the Sensory Web and the Zero-UI Revolution:

Zero-UI SEO

In the age of ambient intelligence, the interface itself dissolves: voices, gestures, emotions, and sensations become the language of machines and people alike. When we speak of Zero-UI SEO, we are imagining a search ecosystem where keywords and typing vanish, replaced by haptics, motion cues, neural signals and context-aware triggers. Here is the concept in actionable form:

Key pillars of Zero-UI SEO

- Signal-based intent: Instead of entering a query, the system senses posture, gestures, location, ambient sound and even biometric states such as heart rate, and infers your needs.

- Ambient discovery: Your environment becomes search-enabled: walls, wearables, vehicles, smart surfaces join the indexing system so “search” happens in the background.

- Multimodal response: The result comes not as a page but as a sensation — a vibration on your wrist, a subtle voice prompt, an AR overlay, an adjusted thermostat.

- Semantic optimisation: SEO shifts from “rank for keywords” to “be readable by ambient agents” — metadata, context-signals, and sensor-triggers become the new optimization battleground.

- Seamless engine-human dialogue: The machine doesn’t wait for you to ask; it infers your intent before you articulate it and surfaces the right information at the right moment.

Imagine you are walking through a city park in Kolkata. Your wearable detects a slight rise in your body temperature, your pace, and a shift in humidity. It registers that you are heading to the riverside and that you’ve previously searched for “outdoor yoga mats”. Before you even think of searching, your smart earpiece says: “A shop 200 m ahead has an ergonomic mat in stock, ready for pick-up at a discounted price.” You never typed “mat shop riverside”; the ambient system inferred it. Your “search” happened through motion, sensors and context, and the result arrived as a voice prompt and a haptic cue.

Scale and calculation

The global “Zero UI technologies” market is projected to grow substantially, with one report forecasting strong compound annual growth over 2025–2034. For illustration, if the market were valued today at US$10 billion and grew at roughly 15–20% annually, in ten years it could reach roughly US$40–62 billion.
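The illustrative range can be checked by compounding the assumed US$10 billion base for ten years. This is a minimal sketch; the base value and the 15% and 20% rates are the text's own illustrative assumptions, not reported figures.

```python
def project(base: float, rate: float, years: int) -> float:
    """Compound a starting value at a constant annual growth rate."""
    return base * (1 + rate) ** years

# Illustrative assumptions from the text: US$10B base, 15-20% annual growth.
BASE_BILLION = 10.0
for rate in (0.15, 0.20):
    print(f"{rate:.0%} for 10 years -> US${project(BASE_BILLION, rate, 10):.1f}B")
```

At 15% the base roughly quadruples and at 20% it grows about sixfold, so the upper end of the range is quite sensitive to the growth assumption.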

If your brand currently captures 100,000 search impressions via typed input and 70% of that demand shifts to voice or ambient modes, you will need to optimise for roughly 70,000 new “ambient impressions” to keep capturing them.

Thus: 100,000 × 0.70 = 70,000 future ambient-search units. Without re-engineering your content for sensor and agent readability, you risk losing that entire pool.

Why this matters for SEO

- Discoverability changes: Agents act on behalf of users, so your brand must be readable by them. If you only optimise for screens, you’ll be invisible in the sensory web.

- Friction drops: The fewer steps between intent and result, the more success. Zero-UI SEO demands that your content be actionable by voice/gesture interfaces, not just readable on a page.

- Trust and context matter: In a world where machines surface answers without screens, trust, data quality and contextual clarity become paramount.

In short, Zero-UI SEO is the search-ecosystem for a world where the screen disappears, and the interface becomes instinctive — around you, in you, and sometimes before you. Designing for this future means thinking in signals and agents, not clicks and keywords.

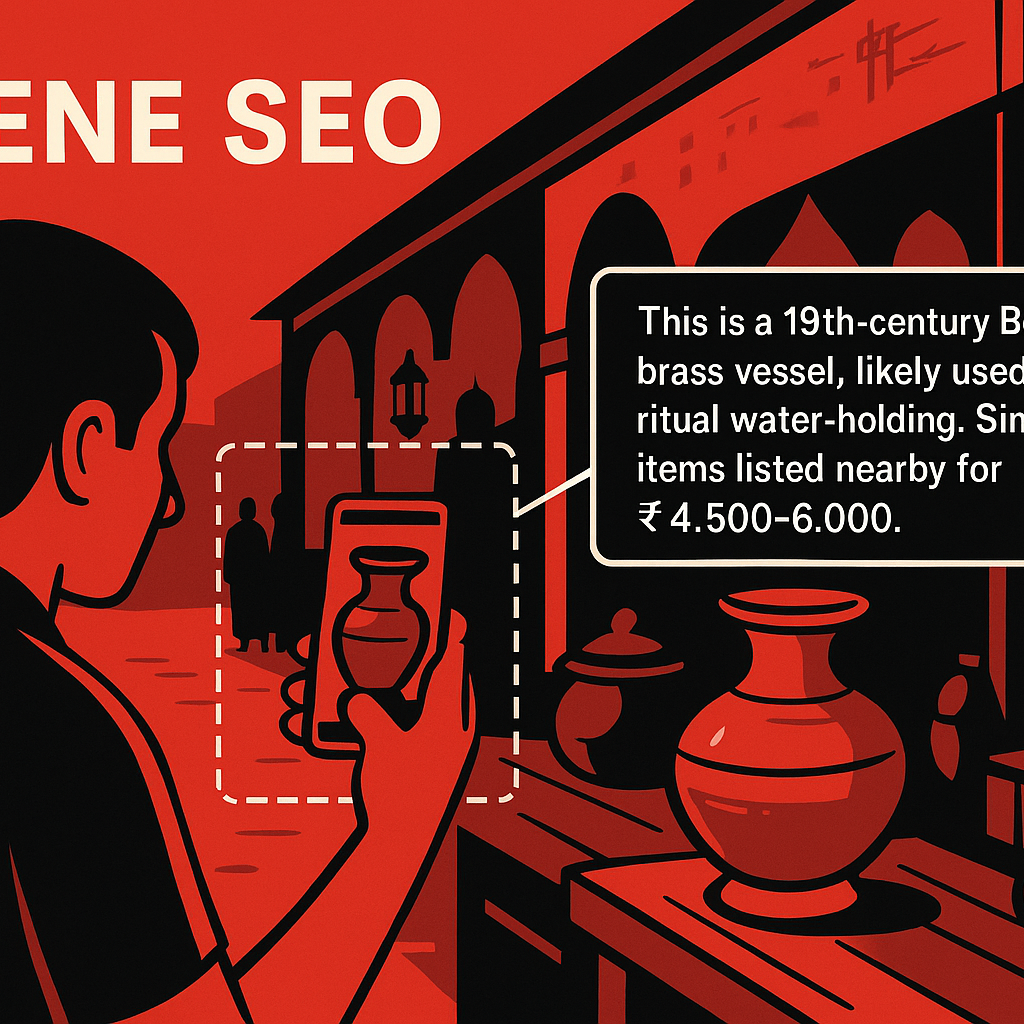

Scene SEO (Scene-based AI Recognition)

In the silent hum of sensors — the unseen cameras, the ambient microphones, the depth-arrays embedded in our walls — a new kind of query awakens. We no longer type “coffee shop nearby”; we stand at a street corner, gesture at the sky, feel the warm morning light on our face, and the system knows. This is the domain of Scene SEO — where search is triggered not by words, but by full-spectrum recognition of our surroundings: vision + motion + context. In a single glance your device maps your view, understands the scene, and delivers not a list of links but an answer.

Consider a hypothetical scenario: You step into a historic bazaar in Kolkata, phone in pocket. The camera activates—not because you tapped the screen, but because geo-fencing and gesture recognition inferred your curiosity. The system reads the stalls, senses your eye-line, detects you are pointing at an old brass urn. Within milliseconds you hear: “This is a 19th-century Bengali brass vessel, likely used for ritual water-holding. Similar items listed nearby for ₹ 4,500-6,000.” Your interface is gone; instead the scene becomes the query itself.

Underlying this vision is hard data. The global AI-in-computer-vision market, of which scene recognition is a core sub-component, was estimated at around USD 22.94 billion in 2024 and is projected to swell to about USD 314.51 billion by 2034, a CAGR of nearly 29.9%. More specifically, the “scene understanding” AI market alone reached approximately USD 3.45 billion in 2024 and is forecast to grow at a CAGR of roughly 21.7% through 2033. To project that forward: assuming USD 3.45 billion in 2024 and a CAGR of 21.7%, the market in 2030 (six years later) would be ≈ 3.45 × (1.217)^6 ≈ 3.45 × 3.25 ≈ USD 11.2 billion.
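Both figures above can be reproduced with the standard compound-growth formula, value × (1 + rate)^years, and its inverse. This minimal sketch uses only the numbers quoted in the paragraph; it checks the arithmetic, not the underlying forecasts.

```python
def implied_cagr(start: float, end: float, years: int) -> float:
    """Constant annual growth rate that turns `start` into `end` over `years`."""
    return (end / start) ** (1 / years) - 1

def project(base: float, rate: float, years: int) -> float:
    """Compound `base` forward at a constant annual `rate`."""
    return base * (1 + rate) ** years

# Figures quoted above, in USD billions.
cv_rate = implied_cagr(22.94, 314.51, 2034 - 2024)
scene_2030 = project(3.45, 0.217, 2030 - 2024)
print(f"AI-in-computer-vision implied CAGR: {cv_rate:.1%}")  # about 29.9%
print(f"Scene understanding, 2030: USD {scene_2030:.1f}B")    # about 11.2
```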

Here are some defining attributes of Scene SEO worth highlighting:

- It uses real-world visual context (objects, spatial relationships, orientation) to interpret search intent.

- It leverages multimodal sensing — vision, gesture, depth, location, and ambient data.

- It transforms “look” into “ask”: the act of seeing becomes the act of querying.

- It demands a new kind of marketing optimisation: not keywords, but scenes; not SERPs, but sensor responses.
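One ingredient of turning “look” into “ask” is deciding which detected object the user means, using the spatial relationships and orientation listed above. The following is a minimal 2D sketch. It assumes a hypothetical upstream detector has already produced labelled object centres and a pose tracker supplies a pointing direction in the same coordinate frame; the objects and coordinates are invented for the bazaar example.

```python
import math
from dataclasses import dataclass

@dataclass
class DetectedObject:
    label: str
    x: float  # object centre in metres, shared scene frame (hypothetical)
    y: float

def resolve_target(origin, direction, objects, max_angle_deg=10.0):
    """Return the object whose bearing is closest to the pointing ray, or None.

    origin: (x, y) of the user's hand; direction: (dx, dy) pointing vector.
    Objects outside the angular tolerance (including anything behind the
    user) are ignored.
    """
    ray_angle = math.atan2(direction[1], direction[0])
    best, best_err = None, math.radians(max_angle_deg)
    for obj in objects:
        to_obj = math.atan2(obj.y - origin[1], obj.x - origin[0])
        # Absolute angular difference, wrapped into [0, pi].
        err = abs((to_obj - ray_angle + math.pi) % (2 * math.pi) - math.pi)
        if err <= best_err:
            best, best_err = obj, err
    return best

stall = [DetectedObject("brass urn", 3.0, 0.2),
         DetectedObject("copper plate", 3.0, 1.5),
         DetectedObject("clay lamp", -2.0, 0.0)]
target = resolve_target((0.0, 0.0), (1.0, 0.0), stall)
print(target.label if target else "no target")  # brass urn
```

A production system would work in 3D, combine eye-line with hand direction and weigh distance; the angular tolerance here simply stands in for that uncertainty.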

Imagine retail: a shopper raises a hand toward a jacket on display. A downstream Scene SEO system recognises patterns, textures, brand tags, and ambient lighting, and immediately enables the shopper’s phone to pull up inventory, pricing, and complementary accessories — before the shopper touches anything.

As you progress through this chapter, we’ll explore how Scene SEO redefines discoverability in the sensory web, how optimisation becomes ambient rather than overt, and how enterprise and consumer brands will need to pivot from “being found via typed query” to “being found via the scene you inhabit”. In this era, the world around you is the interface, and search happens when your environment speaks.

Gesture SEO

In the unfolding era of the sensory web, “Gesture SEO” emerges as a powerful sub-theme — the idea that gestures (hand-waves, body-motions, spatial positions) will become the search triggers, the semantic cues machines pick up and align with intent. In this chapter we explore what Gesture SEO means, how it works, and why it matters.

What Gesture SEO entails

- Instead of typing a query, a user might simply raise their hand toward a smart surface, point at a real-world object, or nod in a direction — and the system understands: “Search for …”

- The interface is no longer a keyboard or screen: the interface is your body + movement + intention.

- Gesture SEO demands that search systems decode movement patterns and map them to meaningful actions: for example, a circular motion above a table could mean “show related items”, a tap in mid-air could mean “open details”, a sweeping gesture might mean “next result”.
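At its simplest, the gesture-to-action mapping in the last bullet is a lookup table consulted after a recogniser has labelled the motion. This is a minimal sketch, assuming a hypothetical upstream recogniser emits a gesture label and a confidence score; the labels, actions and threshold are illustrative.

```python
from enum import Enum
from typing import Optional

class Action(Enum):
    SHOW_RELATED = "show related items"
    OPEN_DETAILS = "open details"
    NEXT_RESULT = "next result"

# Hypothetical gesture vocabulary, mirroring the examples in the text.
GESTURE_VOCABULARY = {
    "circle_above_surface": Action.SHOW_RELATED,
    "air_tap": Action.OPEN_DETAILS,
    "sweep": Action.NEXT_RESULT,
}

def gesture_to_action(label: str, confidence: float,
                      min_confidence: float = 0.8) -> Optional[Action]:
    """Map a recognised gesture to an action; ignore unknown or low-confidence input."""
    if confidence < min_confidence:
        return None  # too uncertain: doing nothing beats a false trigger
    return GESTURE_VOCABULARY.get(label)

print(gesture_to_action("air_tap", 0.93))  # Action.OPEN_DETAILS
print(gesture_to_action("sweep", 0.55))    # None
```

Returning `None` for unknown or low-confidence gestures is a deliberate choice against false positives; the 0.8 threshold is an arbitrary placeholder.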

Hypothetical situation

Imagine you walk into your living room, glance at a plant pot, and tap the air twice above it. Instantly the system recognises your gesture, identifies that you’re referring to the plant, and queries “care instructions for this plant”, projecting the information onto a nearby surface or speaking it aloud. You didn’t type. The system didn’t flash a search bar. The gesture itself — your double-tap in space — became the intent.

Or picture a museum: you point at an ancient artifact. The gesture triggers the system to fetch “tell me more about this”. The search is embedded in the movement. The device — perhaps embedded in sensors or smart cameras — recognises the pointing, resolves the target, and returns commentary. That is Gesture SEO in action.

Why it’s viable and growing

- The global gesture-recognition market was valued at roughly USD 19.8 billion in 2023 and is projected to grow at a compound annual growth rate (CAGR) of over 20% between 2024 and 2032.

- Another estimate: the gesture recognition market was USD 25.44 billion in 2024 and is projected to reach USD 70.18 billion by 2030 (CAGR ≈ 18.1 %).

From these figures, the market multiplies by roughly 70.18 / 25.44 ≈ 2.76 over the six years from 2024 to 2030, which corresponds to an average annual growth of about 18%, consistent with the study.
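Written out, converting the six-year multiple into an average annual rate means taking its sixth root:

$$\text{CAGR} = \left(\frac{70.18}{25.44}\right)^{1/6} - 1 \approx 2.76^{1/6} - 1 \approx 0.184$$

That is roughly 18.4% a year, close to the study's 18.1%; the small gap may come from rounding or from the study's choice of base year.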

Implications for SEO, discovery, and meaning

Gesture SEO forces a rethink of how content is discovered: search engines must map motion → meaning. Content creators might optimise for “point-and-ask” behaviours rather than typed keywords. Sensor placement and gesture vocabulary become part of the optimisation stack. For instance, brands might design gestures around their objects — the “tap the logo in space” gesture becomes a branded search trigger.

Emerging considerations:

- Gesture vocabulary must be intuitive, culturally informed and device-agnostic.

- Systems must correctly map gesture + context to intent — e.g., a wave at a smart-mirror vs a wave at a smart-TV mean different things.

- Metrics shift: instead of clicks or typed queries, we’ll track gesture‐initiated searches, recognition accuracy, latency, false positives.

- Privacy and ethics amplify: gesture tracking means cameras/sensors near people; how is data handled, consent obtained?
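The metrics named above can be computed from a labelled evaluation log. This is a minimal sketch, assuming each logged event records whether the system fired, whether the user actually intended a search, and the response latency; the field names and sample values are hypothetical, and “recognition accuracy” is split here into precision and recall.

```python
from dataclasses import dataclass
from statistics import median

@dataclass
class GestureEvent:
    fired: bool        # did the system trigger a search?
    intended: bool     # ground truth: did the user mean to search?
    latency_ms: float  # gesture end to response (only meaningful if fired)

def gesture_metrics(events):
    tp = sum(e.fired and e.intended for e in events)
    fp = sum(e.fired and not e.intended for e in events)
    fn = sum(not e.fired and e.intended for e in events)
    fired_latencies = [e.latency_ms for e in events if e.fired]
    return {
        "gesture_searches": tp + fp,
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
        "false_positives": fp,
        "median_latency_ms": median(fired_latencies) if fired_latencies else None,
    }

log = [GestureEvent(True, True, 120), GestureEvent(True, False, 95),
       GestureEvent(False, True, 0), GestureEvent(True, True, 140)]
print(gesture_metrics(log))
```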

Gesture SEO signals the transition from “you type → machine searches” to “you move → machine understands”. It collapses physical gesture, spatial context and digital query into a unified act. As the gesture-recognition market accelerates, the infrastructure for this shift is already being built. To stay ahead, we must design our content, experiences and systems not just for clicks and typing — but for motion, space, intention.

Haptic SEO

In a world where screens fade into the background, a new frontier emerges: Haptic SEO. Imagine this scenario: you walk into a smart living room, your wearable detects your heartbeat and shifts in posture, ambient lights dim as a soft vibration in your wrist signals an incoming recommendation: “A jazz session just dropped—would you like to listen?” You don’t open an app or type a query. You feel the suggestion. You accept with a nod and a subtle gesture. That is Haptic SEO — the fusion of touch, intentionality, and search, where machines guide intent by sensation.

The essence of Haptic SEO

- Search becomes a sensory prompt, not just text on a page.

- Haptic feedback (vibration, pressure, texture) communicates the relevance of content before you even “click.”

- Motion and gesture replace clicks; micro-actions in space trigger environments that align with intent.

- Neural signals or bio-feedback guide the system: your heart rate, muscle tension or gaze patterns become part of the query.
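Communicating relevance through vibration implies some encoding from a relevance score to a pattern the wearer can tell apart. This is a minimal sketch, assuming a score in [0, 1] and a wearable that accepts a list of (on_ms, off_ms) pulses; the thresholds and timings are hypothetical and not drawn from any device API.

```python
def relevance_to_pulses(score: float) -> list[tuple[int, int]]:
    """Encode a relevance score as a short vibration pattern.

    More relevant content gets more pulses, so the user can judge
    importance without looking at a screen. Returns (on_ms, off_ms) pairs.
    """
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be in [0, 1]")
    if score < 0.5:
        return []  # below threshold: stay silent
    pulses = 1 if score < 0.75 else 2 if score < 0.9 else 3
    return [(80, 120)] * pulses

for s in (0.3, 0.6, 0.8, 0.95):
    print(s, relevance_to_pulses(s))
```

Pulse count is varied here rather than intensity purely to keep the sketch simple.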

Why now?

The global haptic devices market was estimated at USD 4.78 billion in 2023 and is projected to grow to USD 13.74 billion by 2030, at a ~16.3% CAGR.

Meanwhile, the broader haptic technology market was valued at ~USD 11.3 billion in 2024 and expected to reach USD 26.8 billion by 2033 (CAGR ~10.1%).

These figures hint that the physical-feedback layer of interaction is scaling rapidly. If even 20% of the projected 2030 haptic-devices market flowed into search-related experiences, that would be roughly USD 2–3 billion of annual opportunity by 2030.
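Read literally, “20% of the growth” and “20% of the 2030 market” give different totals. Using the haptic-devices figures above (USD billions):

$$0.20 \times 13.74 \approx 2.75 \qquad \text{versus} \qquad 0.20 \times (13.74 - 4.78) \approx 1.79$$

The USD 2–3 billion range matches the first reading, a share of the whole 2030 market.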

A hypothetical concept: “TouchSearch”

You’re shopping for a vintage leather suitcase. Instead of typing “vintage leather suitcase”, you run your hand along a digital-haptic surface in your showroom. The tactile system maps the feel of leather grain, the weight of the case, and your prior shopping posture. A brief vibration pulses when your intention aligns with a model that fits your grip pattern and stance. Instantly, your wearable delivers visual and audio cues: “Here are 3 models matching your tactile profile.” That is Haptic SEO working—matching feel-intent to search-results.
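Matching “feel-intent” to products could be framed as a nearest-neighbour search over feature vectors. This is a minimal sketch, assuming a hypothetical tactile sensor already reduces a touch session to a small vector (grain roughness, perceived weight, grip width), each scaled to [0, 1]; the catalogue data are made up for illustration.

```python
import math

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

# Hypothetical features: (grain roughness, perceived weight, grip width), scaled to [0, 1].
CATALOGUE = {
    "Model A": (0.8, 0.6, 0.4),
    "Model B": (0.2, 0.9, 0.7),
    "Model C": (0.7, 0.5, 0.5),
    "Model D": (0.1, 0.2, 0.9),
}

def match_tactile_profile(profile, catalogue, k=3):
    """Rank catalogue items by cosine similarity to the user's tactile profile."""
    ranked = sorted(catalogue, key=lambda name: cosine(profile, catalogue[name]),
                    reverse=True)
    return ranked[:k]

print(match_tactile_profile((0.75, 0.55, 0.45), CATALOGUE))
```

Cosine similarity compares the shape of a profile rather than its magnitude; Euclidean distance would be a reasonable alternative if absolute values matter.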

Some rough calculation:

If the haptic devices market grows at ~14% annually from its 2023 base and reaches, say, USD 18 billion by 2033 (a mid-range estimate), and if search-oriented applications claim even 5% of that market by 2033, that’s ~USD 900 million dedicated to sensing-search technologies. Spread across ~1 billion users globally (a conservative guess), that’s ~USD 0.90 per user per year, meaning value streams of under USD 1 per user annually for this niche. Across an assumed 10 billion connected devices circa 2033, the per-device value drops to ~USD 0.09 a year unless uptake deepens. The lesson: scale is essential.
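The per-user and per-device figures follow from dividing the assumed search slice by the size of the audience. This is a minimal sketch; the USD 18 billion market, the 5% share and the user and device counts are the text's illustrative assumptions.

```python
def value_per_unit(market_usd: float, search_share: float, units: float) -> float:
    """Annual value per user (or device) of the search-oriented slice of a market."""
    return market_usd * search_share / units

MARKET_2033 = 18e9   # mid-range estimate used in the text (USD)
SEARCH_SHARE = 0.05  # assumed search-oriented share

per_user = value_per_unit(MARKET_2033, SEARCH_SHARE, 1e9)     # ~1 billion users
per_device = value_per_unit(MARKET_2033, SEARCH_SHARE, 10e9)  # ~10 billion devices
print(f"search slice: USD {MARKET_2033 * SEARCH_SHARE / 1e6:.0f}M")
print(f"per user: USD {per_user:.2f}/yr, per device: USD {per_device:.3f}/yr")
```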

What this means for SEO, for content, for design:

- Keywords are no longer typed; they’re crafted in feeling, gesture dictionaries, touch-patterns.

- Content must be designed to vibrate, pulse, respond tactilely, not just visually.

- Metrics shift: dwell time becomes haptic contact time; clicks become press-pattern recognition.

- Privacy and consent become more sensitive: your tactile postures and biofeedback become search signals.

In short, Haptic SEO signals a world where the interface disappears, and we become the interface. Your hand, your posture, your pulse — all become search input. When the touch of a surface, the vibration against your skin, or the weight of your gesture triggers meaning, we step into the sensory web in full.

Immersive SEO

In the emerging era of what we might call Immersive SEO, the very nature of search is undergoing a profound shift. No longer confined to typed keywords, screens or simple voice commands, search must now respond to the full richness of human experience—motion, gesture, emotion, location, even neural impulses. It demands an optimisation paradigm that embraces the ambient, the sensory and the invisible.

The Concept

Imagine a shopper walking through a high-street store. As she glides her hand past a display, ambient sensors detect her hesitation. A soft haptic prompt vibrates through her smartphone, gently asking: “Looking for something new?” She tilts her head, the device registers the gesture, and a whisper of scent in the store triggers a micro-search for “fresh running shoes under ₹7,000”: not typed, not spoken, but felt. In that instant, her intent becomes the query. That is the promise of Immersive SEO.

Key Dimensions

- Sensor-aware triggers — gesture, gaze and proximity as query initiators.

- Emotion and neural cues — affective states (frustration, curiosity, delight) guide adaptive content delivery.

- Multimodal channels — voice, haptics, AR overlays, and ambient sound — become part of the search interface.

- Context-rich optimisation — metrics no longer just keyword rank; they include dwell in space, gesture patterns, sensor-triggered conversions.

Why it matters

- Around 27% of mobile users already use voice search globally.

- There are an estimated 8.4 billion voice-assistant devices in use in 2025—more than the global population.

- Around 80% of voice-search answers come from pages already ranking in the top three traditional search results.

These figures underscore the scale of user shift—and the opportunity for immersive search.

A rough calculation to ground the idea

If 20 % of all internet users perform voice or sensory-triggered search today (≈ one in five) and we project a modest annual growth rate of 15% in immersive-query adoption, then in three years the adoption rate may cross:

$$20\% \times (1 + 0.15)^3 \approx 20\% \times 1.52 \approx 30.4\%$$

Thus nearly one third of search interactions could be immersive within a short span.

Implications for your strategy

- Content must be ambient-ready: beyond queries like “buy blue running shoes size 8”, content also needs to answer context-driven intents such as “I’ve walked 5 km today and feel tired” or “I touched the display and paused here”.

- Sensor/gesture data feeds intent modelling: optimisation should incorporate motion logs, gaze direction, and environmental cues.

- Privacy & trust become foundational: As sensors decode more subtle signals (emotion, movement), transparent consent and straightforward UX matter more than ever.

- Metrics evolve: Traditional click-through and dwell time are joined by “gesture-to-action”, “haptic prompt response rate”, and “sensor trigger conversion”.
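The new metrics named above can be read as stages of a funnel. This is a minimal sketch, assuming a hypothetical event log in which each session may reach a sensor trigger, a haptic prompt, a gesture response and a conversion; the metric definitions are one plausible reading, not an industry standard.

```python
# Hypothetical event log: (session_id, stage reached).
EVENTS = [
    ("s1", "sensor_trigger"), ("s1", "haptic_prompt"), ("s1", "gesture_response"), ("s1", "conversion"),
    ("s2", "sensor_trigger"), ("s2", "haptic_prompt"),
    ("s3", "sensor_trigger"), ("s3", "haptic_prompt"), ("s3", "gesture_response"),
    ("s4", "sensor_trigger"),
]

def funnel_rates(events):
    """Compute stage-to-stage rates from distinct sessions reaching each stage."""
    sessions_by_stage = {}
    for session_id, stage in events:
        sessions_by_stage.setdefault(stage, set()).add(session_id)
    counts = {stage: len(ids) for stage, ids in sessions_by_stage.items()}

    def rate(numerator, denominator):
        den = counts.get(denominator, 0)
        return counts.get(numerator, 0) / den if den else 0.0

    return {
        "haptic_prompt_response_rate": rate("gesture_response", "haptic_prompt"),
        "gesture_to_action": rate("conversion", "gesture_response"),
        "sensor_trigger_conversion": rate("conversion", "sensor_trigger"),
    }

print(funnel_rates(EVENTS))
```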

Synesthetic SEO

In the landscape of digital interaction, the concept of Synesthetic SEO surfaces as the next frontier: where search isn’t simply a textual query in a box, but a multi-sensory experience that resonates with voice, gesture, haptics and even emotion. The much-heralded shift into a “sensory web” means that your body, your environment and your movements become part of the interface.

What Synesthetic SEO embraces

- It recognises that voice search isn’t niche any longer: globally about 20.5% of people now engage in voice-search interactions.

- It recognises the rapid uptake of gesture and motion-based interfaces: for example, the gesture-recognition market is projected to grow from ~US$19.7 billion in 2024 to ~US$96.4 billion by 2033, at ~19.3% CAGR.

- It proposes that search intent will increasingly be signalled not by typing, but by how you move, speak, touch and feel.

Imagine: You enter your living room after work. The smart lighting dims automatically. Your wearable senses an increased heart rate (you just ran errands), and a subtle overhead hand gesture signals that you want music. Without touching a screen you say: “Something soothing.” The system recognises your gesture arc, the slight pause in your voice and the ambient light level, and chooses a playlist. Then you ask: “What’s happening tomorrow morning?” The system senses your shift in posture and your environment and replies: “Rain likely at 8 a.m.; please leave 10 minutes early.” No search box appeared. Your intention was simply felt, and the system interpreted it.

In that moment, your query was not a typed string but a combination of haptic, motion and voice cues. That is Synesthetic SEO.

Key features for Synesthetic SEO

- Modalities beyond text: voice, gesture, touch/haptics, even gaze or neural-signal interfaces.

- Context-rich intent detection: time of day, ambient lighting, user motion, biometrics.

- Real-world integration: physical movements -> digital search results; your environment becomes search-capable.

- Invisible interface: the screen withdraws. The user and the world are the interface.
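Combining voice, gesture and context cues is commonly described as multimodal fusion. This is a minimal late-fusion sketch, assuming each modality's recogniser already outputs a probability over the same candidate intents; the intents, probabilities and weights are hypothetical. It uses weighted log-linear pooling, one common choice among several.

```python
import math

def fuse(modality_probs: dict, weights: dict) -> dict:
    """Weighted log-linear pooling of per-modality distributions, renormalised to sum to 1."""
    intents = next(iter(modality_probs.values())).keys()
    scores = {
        intent: sum(weights[m] * math.log(max(p[intent], 1e-9))
                    for m, p in modality_probs.items())
        for intent in intents
    }
    z = sum(math.exp(s) for s in scores.values())
    return {intent: math.exp(s) / z for intent, s in scores.items()}

# Evening living-room scenario from the text (all numbers invented).
observations = {
    "voice":   {"play_music": 0.6, "weather": 0.3, "lights": 0.1},  # "Something soothing."
    "gesture": {"play_music": 0.5, "weather": 0.2, "lights": 0.3},  # overhead hand arc
    "context": {"play_music": 0.7, "weather": 0.1, "lights": 0.2},  # after work, dim light
}
weights = {"voice": 1.0, "gesture": 0.6, "context": 0.8}

posterior = fuse(observations, weights)
print(max(posterior, key=posterior.get))  # play_music
```

Log-linear pooling lets confident modalities dominate while still penalising intents that another modality finds very unlikely; a weighted average of probabilities is a simpler alternative.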

Some simple calculation to illustrate scale

If 20.5% of global internet users use voice search (≈ 1 in 5) and gesture-based interfaces grow at ~19.3% annually (CAGR) from US$19.7 billion in 2024 to US$96.4 billion in 2033, then over those nine years the gesture market would grow almost fivefold (96.4 / 19.7 ≈ 4.9). This hints that modalities once “fringe” will become mainstream, meaning Synesthetic SEO may shift from novelty to default.

Why this matters for SEO

As the interface disappears, traditional on-page keyword tactics recede. Instead you must optimise for the signals behind the interaction: natural language voice queries, gesture-motivated commands, context-aware sensors. Content becomes layered not just with keywords, but with semantics, emotional cues, sensor references.

In short: Search becomes felt, not just typed. And Synesthetic SEO is the toolkit for that emerging world.

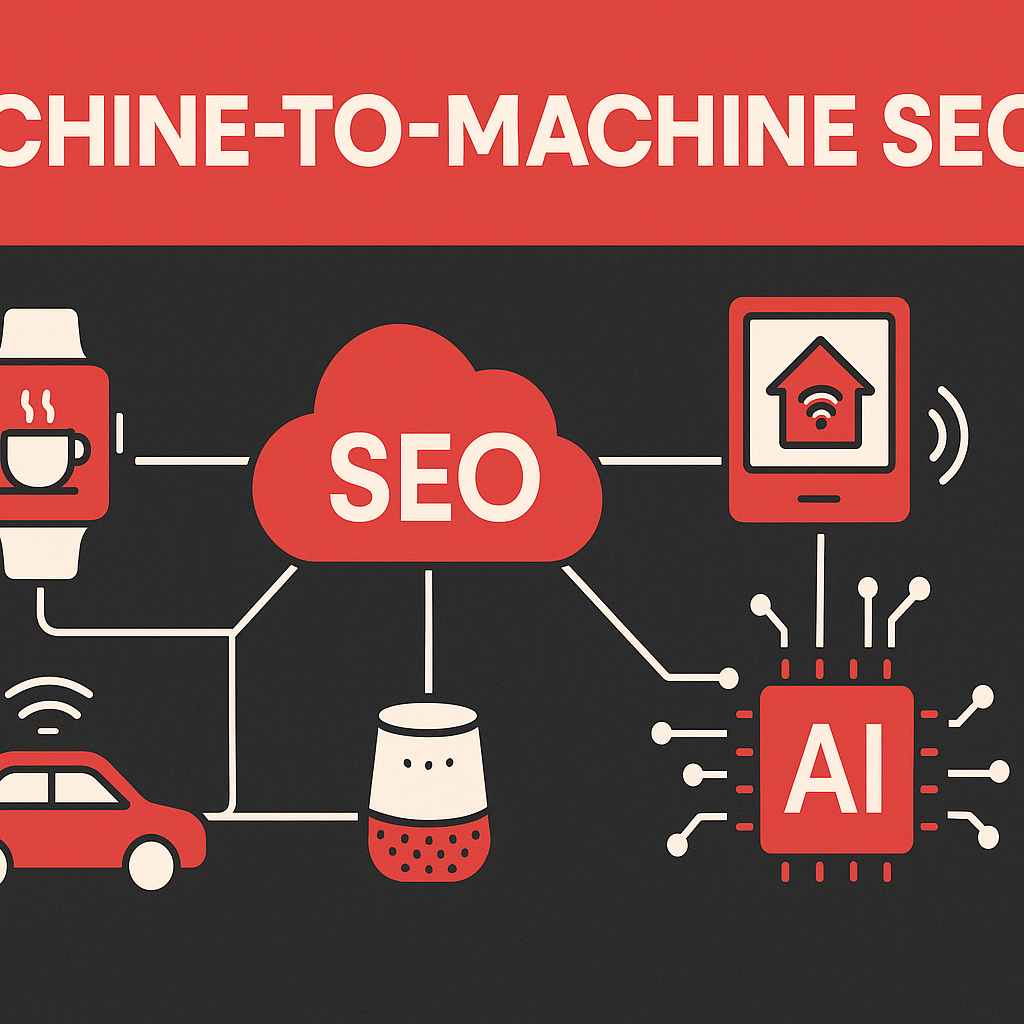

Machine-to-Machine SEO

In the unfolding landscape of search and interaction, the notion of “machine-to-machine SEO” evokes a radical shift—where machines no longer just facilitate our queries, but speak among themselves, anticipate our intents, and guide human outcomes seamlessly. Imagine a network of ambient sensors, connected devices, and AI agents negotiating visibility and relevance behind the scenes, translating human cues into machine-level signals, and vice-versa. In this realm the optimization target is no longer a human-facing webpage, but an ecosystem of devices interpreting intent — it is the frontier beyond traditional UX and keyword ranking.

Why machine-to-machine SEO matters

- With over 53.3% of all web traffic coming via organic search, search remains the axis of digital discoverability.

- At the same time, AI and machine-learning are identified by 18.7% of SEO specialists as the “biggest shift” underway in the SEO field.

- Ambient devices are proliferating: connected IoT deployments are expected to reach ~30 billion devices in the coming years.

In other words: more machines, more data, more opportunities for SEO to transcend the screen.

The hypothesis: how it works in practice

Imagine this hypothetical scenario: In a smart city, your wearable senses you’re near a café and your glucose level suggests you’re ready for coffee. Your smart vehicle broadcasts a request to nearby digital signage (“which café is available?”). The signage queries a backend network of location-based services. A machine-to-machine exchange occurs, ranking cafés by availability, foot traffic, inventory and your personal preferences. Without typing a word or speaking aloud, you receive a subtle haptic prompt on your wrist device: “Café B, two-minute walk, 18% off.” The SEO layer here is embedded in the microservices: device-to-device communication, intent translation and signal optimisation.
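The ranking step in this scenario can be sketched as a weighted score over the factors it names: availability, foot traffic, inventory and personal preference. This is a minimal sketch; the weights, the [0, 1] scaling, the decision to treat foot traffic as a penalty, and the café data are all hypothetical.

```python
from dataclasses import dataclass

@dataclass
class CafeSignal:
    name: str
    available: bool      # open with capacity right now
    foot_traffic: float  # 0 = empty, 1 = packed (treated here as a penalty)
    inventory: float     # 0-1 share of the user's usual order in stock
    preference: float    # 0-1 match with the user's past choices

# Hypothetical weights; a real system would learn or tune these.
WEIGHTS = {"foot_traffic": -0.3, "inventory": 0.3, "preference": 0.4}

def score(c: CafeSignal) -> float:
    return (WEIGHTS["foot_traffic"] * c.foot_traffic
            + WEIGHTS["inventory"] * c.inventory
            + WEIGHTS["preference"] * c.preference)

def rank_cafes(signals):
    """Drop unavailable cafés, then sort the rest by weighted score (best first)."""
    return sorted((c for c in signals if c.available), key=score, reverse=True)

cafes = [
    CafeSignal("Café A", True, 0.9, 0.8, 0.7),
    CafeSignal("Café B", True, 0.3, 0.9, 0.8),
    CafeSignal("Café C", False, 0.1, 1.0, 0.9),
]
print([c.name for c in rank_cafes(cafes)])  # ['Café B', 'Café A']
```

Availability acts as a hard filter rather than a weight, since recommending a closed café is worse than recommending a busy one.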

Core aspects of machine-to-machine SEO

- Signal optimisation: Devices emit status, intent, and context; SEO becomes optimisation of those signals rather than text.

- Protocol relevance: Just as websites optimise for search engines, machines optimise for other machines — meaning schemas, APIs, sensor-data formats become “searchable”.

- Latency & trust: Machines demand low latency, high integrity; so SEO extends to reliability, speed, and real-time indexing of device-states.

- Conversion via instrumentation: Instead of a click, a gesture or a neural-feedback signal may become the conversion event, so machine-to-machine SEO must track what counts as an action.
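“Protocol relevance” and “latency & trust” together suggest that device signals need a predictable, self-describing format and a freshness check. This is a minimal sketch using the Python standard library's `json` module; the message fields and the 2-second freshness window are hypothetical, not an existing schema.

```python
import json
import time
from dataclasses import dataclass, asdict
from typing import Optional

@dataclass
class IntentSignal:
    device_id: str
    intent: str        # e.g. "find_cafe"
    confidence: float  # 0-1, from the emitting device's own model
    lat: float
    lon: float
    emitted_at: float  # Unix timestamp in seconds

MAX_AGE_S = 2.0  # hypothetical freshness window for real-time use

def encode(signal: IntentSignal) -> str:
    return json.dumps(asdict(signal), sort_keys=True)

def decode_if_fresh(payload: str, now: float) -> Optional[IntentSignal]:
    """Parse a signal and discard it if it is older than MAX_AGE_S."""
    signal = IntentSignal(**json.loads(payload))
    return signal if now - signal.emitted_at <= MAX_AGE_S else None

t0 = time.time()
msg = encode(IntentSignal("wrist-01", "find_cafe", 0.82, 22.5726, 88.3639, t0))
print(decode_if_fresh(msg, now=t0 + 0.5) is not None)  # True
print(decode_if_fresh(msg, now=t0 + 5.0))              # None
```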

A simple calculation to illustrate scale

Suppose a network of 1,000 smart devices, each emitting 100 context signals daily (location, motion, user state, preferences). That’s 100,000 signals/day. If each signal can generate one “micro-query” to another service, over one month (~30 days) that’s ~3 million interactions. If optimised correctly, even a 1 % improvement in the relevance of those interactions (30,000 more correct micro-outcomes) can cascade into substantial improvements in user experience, retention, and business value.

Why this matters for THATWARE’s vision

In the age of the sensory web and zero-UI interfaces, search is no longer just typed or voice-commanded; it is machine-mediated. Machine-to-machine SEO becomes the backbone of that mediation: invisible yet critical, ubiquitous yet low-profile. As this chapter unfolds, it becomes clear that the next frontier of discoverability isn’t about placement on page one of a search engine; it’s about placement within the signal chains of devices, services and contexts. THATWARE’s vision of Zero-UI SEO dovetails with this, enabling not just human-to-machine communication but machine-to-machine orchestration, where haptics, motion, neural cues and ambient sensors all become instruments of search intent.

In the end, if search transcends the screen and the interface becomes you, then machine-to-machine SEO is the silent engine powering that transformation.

Hybrid Intelligence Optimisation

In the shifting terrain of the interface-less era, the concept of “Hybrid Intelligence Optimisation” (HIO) becomes a lighthouse guiding the way forward. It is here that human and machine cognition merge—not as rivals, but as co-authors of purpose. In the world of the sensory web, where the interface disappears and intention becomes the signal, HIO pivots into the spotlight: optimising the synergy of human instinct, environmental context and machine analytics to shape seamless search, discovery and response.

What Hybrid Intelligence Optimisation means

At its heart HIO is about:

- Merging human and artificial intelligence into a unified ecosystem, wherein machines bring speed, scale and pattern-recognition while humans bring nuance, ethics, intuition and context.

- Continuously adjusting when to involve human insight vs when to rely on algorithmic precision—so the system is neither fully manual nor fully automated, but optimised for context.

- Characterising search, interaction and decision-making as a fluid conversation: haptics, gesture, neural signals, ambient sensors feed into a hybrid intelligence system that interprets intention and delivers resonance.

A hypothetical scenario

Imagine a smart home morning routine: your wrist device senses that your heart rate is elevated, and your voice assistant hears your faint sigh of “not enough sleep.” The ambient lighting dims, the room temperature adjusts, and the web of devices consults your calendar and prior behaviour. At that moment, the hybrid intelligence system says: “You have a 37% probability of fatigue-induced error in your first meeting.” It suggests a 10-minute mood-reset ritual and offers a key action-item summary for your day.

Behind the scenes: the algorithm flagged the heartbeat and voice pattern (machine), recognised it as fatigue (human model plus pattern recognition), sought confirmation from the human layer, then triggered a contextual response (hybrid optimisation).

If the system had done this via screen input, the interface would feel clunky; under HIO the interface disappears — you simply are.

Why this matters & some numbers

Research suggests that hybrid intelligence systems can outperform humans or machines alone by leveraging their complementarity. One article argues that societies adopting hybrid intelligence can create more sustainable, creative and trusted outcomes. For example, if an AI alone reduces error by 20% and a human alone by 25% in a task, a well-designed hybrid system might achieve a reduction of 40% or more through synergistic effects.

Calculation: error baseline = 100 units; human error reduces to 75; AI error to 80; but hybrid might reduce to 60 units → 40 % reduction.

Another way: assume humans handle 30% of decisions and machines 70%. If machines succeed at 90% and humans at 80%, a fixed split yields 0.3 × 80% + 0.7 × 90% = 87% correct decisions. When optimised, the combined system can exceed 90% overall by shifting tasks dynamically (e.g., machines take more when confidence is high, and humans step in when context is ambiguous).
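The dynamic-allocation argument can be made concrete with one set of assumed numbers. This is a minimal sketch, assuming (hypothetically) that 80% of decisions are high-confidence cases where the machine is 97% accurate and 20% are ambiguous cases where it is 62% accurate, which averages to the 90% in the text, while humans are a flat 80% accurate.

```python
# Hypothetical accuracy profile, chosen only to illustrate routing.
CASE_MIX = {"high_conf": 0.80, "low_conf": 0.20}     # share of decisions per bucket
MACHINE_ACC = {"high_conf": 0.97, "low_conf": 0.62}  # averages to 0.90 over CASE_MIX
HUMAN_ACC = 0.80                                     # assumed flat across buckets

def static_split(human_share: float, human_acc: float, machine_acc: float) -> float:
    """Fixed allocation: humans take a share of decisions regardless of difficulty."""
    return human_share * human_acc + (1 - human_share) * machine_acc

def confidence_routing() -> float:
    """Machines keep high-confidence cases; humans take the ambiguous ones."""
    return (CASE_MIX["high_conf"] * MACHINE_ACC["high_conf"]
            + CASE_MIX["low_conf"] * HUMAN_ACC)

machine_avg = sum(CASE_MIX[k] * MACHINE_ACC[k] for k in CASE_MIX)
print(f"machine average accuracy: {machine_avg:.3f}")                          # 0.900
print(f"static 30/70 split:       {static_split(0.30, HUMAN_ACC, 0.90):.3f}")  # 0.870
print(f"confidence routing:       {confidence_routing():.3f}")                 # 0.936
```

Under these assumptions, routing ambiguous cases to people lifts overall accuracy above 90% even though the human share falls from 30% to 20%; with a different accuracy profile the gain could shrink or vanish.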

By embedding HIO in the sensory web, the interface becomes invisible—but the optimisation becomes omnipresent.

Augmented Visibility Optimisation

In the unfolding era of the sensory web, we arrive at the concept of Augmented Visibility Optimisation. This paradigm amplifies the reach of entities, content and experiences by making them perceptible not just through screens but through spatial, sensory, and contextual layers.

Imagine a shopper walking through a quiet street in Kolkata. Their wearable, a subtle haptic band, registers a change in ambient scent, motion sensors detect their gaze passing a store window, and a gesture (an almost unconscious wrist rub) signals interest. In that moment, their smartphone camera or AR glasses overlay an interactive product display: the product “pops” into their field of view, a digital entity anchored in the physical world. On the backend, an optimisation engine that is part of the zero-UI ecosystem recognises the “search” intent without a single typed query. This is Augmented Visibility Optimisation in action: the interface dissolves, the environment becomes the search field, and visibility means presence in the sensory world.

What Augmented Visibility Optimisation entails

- Embedding digital content into physical spaces and sensor networks so that relevance surfaces in context rather than being actively sought.

- Leveraging haptics, AR overlays, gesture/eye-tracking, and spatial audio to provide “search outcomes” as ambient suggestions rather than typed answers.

- Optimising for “visibility” in sensor-domains: e.g., ensure a brand’s digital model is discoverable when a passer-by’s wearable detects their interest in similar items.

- Mapping “visibility metrics” rather than just clicks: how long did the AR overlay hold the user’s gaze? Did the haptic prompt change behaviour?

- Preparing for the calculation of sensory ROI: if one store’s AR-anchored display sees a 30% uplift in dwell time, and the broader AR-enabled market is growing at a CAGR of ~38% through 2030, then the value of being visible in the sensory layer compounds rapidly.

Some relevant numbers

- The global AR market was estimated at USD 83.65 billion in 2024 and is expected to reach USD 599.59 billion by 2030 (CAGR ~37.9%).

- More than 2 billion mobile AR users are forecast globally by end-2025.

- AR experiences in e-commerce can boost conversion rates by up to 94%.

If a retailer implements an AR-anchored storefront experience for a month and measures a 50 % increase in interactions compared to baseline, and the AR-user base in their market is growing at 20 % annually, then the benefit of early presence multiplies over time.
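To see why early presence compounds, compare the cumulative extra interactions for a retailer that launches now with one that waits a year. This is a minimal sketch, assuming a hypothetical baseline of 1,000 monthly interactions, the text's 50% uplift, and 20% annual AR-user growth applied once per year.

```python
def extra_interactions(baseline_monthly: float, uplift: float, user_growth: float,
                       years: int, start_year: int = 0) -> float:
    """Cumulative extra interactions from an AR storefront over `years`.

    The AR audience (and hence the uplift) grows by `user_growth` each year;
    nothing is gained before `start_year`.
    """
    total = 0.0
    for year in range(start_year, years):
        monthly_extra = baseline_monthly * uplift * (1 + user_growth) ** year
        total += 12 * monthly_extra
    return total

early = extra_interactions(1_000, 0.50, 0.20, years=3)
late = extra_interactions(1_000, 0.50, 0.20, years=3, start_year=1)
print(f"launch now: {early:,.0f}  launch next year: {late:,.0f}")
```

Under these assumptions the early mover gains 21,840 extra interactions over three years against 15,840 for the late mover, and each later year is worth more than the one before because the AR audience keeps growing.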

Why this matters for zero-UI SEO

In the same way that screen-based search optimisation once required keywords, metadata and links, in the sensory era we must optimise for perceptual triggers — spatial anchors, emotional cues, gesture-compatibility, sensor-friendliness. Optimising for “visibility” means: being discoverable when a user’s ambient sensors, wearables, or brain-wave-derived signals indicate intent — even if the user never issues a command. It also means rethinking metrics: from clicks and dwell time to gaze duration, sensor-triggered engagements, and proximity-based conversions.

XSEO (Extended SEO)

In the world of XSEO, the old boundaries of search have melted away: no longer the simple typed query, nor the solitary screen, but an ambient network of signals, sensations, and intent. What if, for example, a user walking into their kitchen triggers more than a glance at their phone? Their wristband senses elevated heart-rate, the smart speaker detects a hushed tone of stress, the coffee machine notes the steam rising, and the home’s ambient system quietly asks: “Would you like a moment of calm? Here’s a guided breath and your schedule for the day.” This hypothetical scenario illustrates the essence of XSEO—Extended Search Engine Optimization—where search no longer lives on a page, but in context, in presence, in what we feel.

XSEO extends SEO into the sensory world. It means structuring not only text and keywords but also haptic feedback, gesture triggers, neural signals, voice-intonation patterns, proximity cues, ambient light and sound. Instead of ranking for “best running shoes,” the system senses your gait, your pace and your past training loads, and suggests: “Based on your stride and recovery state, you’re due for a 20-minute trail run; here are local options.” The interface is you. The search is that moment of insight.

Why now? Because the infrastructure is already shifting: over 20.5% of people globally use voice search today. If we take a 2024 base of, say, 8.4 billion voice-assistant devices and project a conservative 10% growth per year, in five years you would reach roughly 13.5 billion active ambient-search endpoints (8.4 × 1.10⁵ ≈ 13.5). Every one of these is a potential “search interface” without a screen.
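The projection is plain compound growth. The minimal sketch below uses the text’s illustrative 8.4 billion base and 10% rate, both assumptions rather than measured data. It shows that the five-year figure lands at about 13.5 billion.

```python
def project_endpoints(base_billions: float, annual_growth: float, years: int) -> float:
    """Compound a device base forward at a constant annual growth rate."""
    return base_billions * (1 + annual_growth) ** years


# Illustrative assumptions from the text: 8.4 billion devices in 2024, 10% yearly growth.
for year in range(6):
    print(2024 + year, round(project_endpoints(8.4, 0.10, year), 2))
```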

What does this mean for SEO? In practice, many things:

- Content must accommodate predictive intent: not just “what a user types”, but “what the user might do”.

- Metadata expands beyond title tags and schema to include haptic cues, sensor-trigger codes, and gesture maps.

- The measurement shifts: we calculate not click-through rate but sensor engagement rate and friction-free time-to-insight.
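The listed ideas can be sketched in a few lines: metadata that extends beyond title tags, and a “sensor engagement rate”. The sketch assumes a made-up record format (`SensoryMetadata`) and made-up trigger-code naming. It is not schema.org or any published standard, only an illustration of storing haptic cues, sensor triggers and gesture maps alongside conventional metadata.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class SensoryMetadata:
    """Hypothetical XSEO record: page metadata extended with sensory cues.

    None of these fields belong to a published standard; they only
    illustrate the kinds of signals the text describes.
    """
    title: str
    haptic_cue: str  # e.g. a named vibration pattern
    sensor_triggers: list[str] = field(default_factory=list)
    gesture_map: dict[str, str] = field(default_factory=dict)  # gesture -> action


def sensor_engagement_rate(triggered: int, engaged: int) -> float:
    """Share of sensor-triggered exposures that led to an engagement."""
    return engaged / triggered if triggered else 0.0


record = SensoryMetadata(
    title="Trail running shoes",
    haptic_cue="double-pulse",
    sensor_triggers=["gait:overpronation", "location:near-trailhead"],
    gesture_map={"nod": "show_options", "swipe_left": "dismiss"},
)
print(json.dumps(asdict(record), indent=2))
print(sensor_engagement_rate(triggered=200, engaged=30))  # 0.15
```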

For instance, suppose a brand currently sees 1,000 organic queries/day for a term, and XSEO integration reduces the user’s friction by 40%, meaning users move from query to action in 60% of the time. The effective “search-to-conversion window” shortens accordingly: if the average time is 10 seconds now, at 60% it becomes 6 seconds. Over 1,000 daily queries, that is a saving of 4,000 seconds (~67 minutes) of user focus regained per day, a meaningful gain in cognitive bandwidth and brand imprint.
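This arithmetic is easy to reuse with other inputs. The minimal sketch below takes the text’s illustrative numbers as assumptions: 1,000 queries a day, 10 seconds per query, and a 40% friction reduction.

```python
def focus_time_saved(queries_per_day: int, avg_seconds: float, friction_cut: float) -> tuple[float, float]:
    """Seconds and minutes of user time saved per day by cutting friction.

    friction_cut=0.40 means each query-to-action path takes 60% of its old time.
    """
    new_seconds = avg_seconds * (1 - friction_cut)
    saved_seconds = queries_per_day * (avg_seconds - new_seconds)
    return saved_seconds, saved_seconds / 60


seconds, minutes = focus_time_saved(queries_per_day=1_000, avg_seconds=10.0, friction_cut=0.40)
print(f"{seconds:,.0f} s saved per day (~{minutes:.1f} minutes)")
```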

In short, XSEO amplifies the notion of discoverability into the realm of the sensed. The search engine doesn’t just return results—it anticipates needs, senses presence, delivers solutions. And in that ambient revolution, optimization becomes less about what you type and far more about what you feel.

ObjSEO (Object SEO)

In the world of the sensory web, optimisation no longer begins and ends at a URL or a typed query. Enter ObjSEO — Object Search Engine Optimisation. This is the practice of making physical objects, environments and behaviours discoverable in an age when the interface recedes into the background and the user, the object and the machine all become part of the same sensor-net.

Why ObjSEO matters

- Search remains the starting point: 68% of online experiences begin with a search engine.

- Voice, gesture, visual recognition and haptics are no longer fringe channels but mainstream, and they already shape how content is built: pages optimised for voice search, for example, load faster, contain fewer words and rely on structured data.

- In this environment, objects themselves (your smart device, your wearable sensor, a tagged product, a motion sensor in a room) become the entry point to intent. ObjSEO ensures these objects are “searchable”.

Hypothetical scenario

Imagine you walk into your living room: your smart lamp detects your posture, and the ambient sound drops. Without tapping, speaking or glancing, the lamp lights up subtly, and a soft voice asks: “Would you like the usual evening mode?” Behind the scenes, the lamp is integrated into a sensor network, your past behaviour is stored, and the environment knows you. When you nod, the system acts: your personal playlist starts, the blinds lower, the air filter kicks in. In effect, the object (the lamp) was the search interface. You didn’t open a browser. You didn’t type a query. You were the query. You were the interface.

What ObjSEO involves

- Tagging physical items, places and interfaces with semantic metadata, sensor IDs, motion signatures and usage patterns.

- Structuring signals so that a system can recognise: “object X has become active, user Y is present, context Z is inferred → trigger content or action A”.

- Optimising for systems rather than screens: pattern recognition, proximity detection, gesture understanding, neural/haptic signals.

- Measuring new KPIs: how often an object is activated as a search entry point, how many recognised gestures lead to recommended outcomes, and the “discovery-to-action” rate of objects.
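The recognise-then-trigger pattern and the discovery-to-action KPI can be sketched as a simple lookup. This is a deliberately minimal illustration. It assumes exact-match rules and made-up identifiers (`living_room_lamp`, `user_42`, `evening_arrival`). A real system would infer context probabilistically from sensor data rather than match strings.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rule:
    """Hypothetical ObjSEO rule: object X active + user Y present + context Z -> action A."""
    object_id: str
    user_id: str
    context: str
    action: str


def match_action(rules: list[Rule], active_object: str, present_user: str,
                 inferred_context: str) -> Optional[str]:
    """Return the action of the first rule matching the observed signals, if any."""
    observed = (active_object, present_user, inferred_context)
    for rule in rules:
        if (rule.object_id, rule.user_id, rule.context) == observed:
            return rule.action
    return None


def discovery_to_action_rate(activations: int, completed_actions: int) -> float:
    """Share of object activations that ended in a recommended outcome."""
    return completed_actions / activations if activations else 0.0


rules = [Rule("living_room_lamp", "user_42", "evening_arrival", "start_evening_mode")]
print(match_action(rules, "living_room_lamp", "user_42", "evening_arrival"))
print(match_action(rules, "living_room_lamp", "user_42", "morning"))
print(discovery_to_action_rate(activations=120, completed_actions=18))
```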

A brief calculation for illustration

Suppose you have 1,000 connected objects in a smart-home ecosystem. Each object on average triggers one “search-intent event” per day. Over a year: 1,000 × 365 = 365,000 object-search events. If ObjSEO optimisation raises conversion (successful action) rate from 2% to 5%, you move from 7,300 successful actions/year to 18,250 — a boost of +10,950 actions. The ROI of ObjSEO becomes plainly tangible.
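The same calculation can be parameterised so that other fleet sizes or conversion rates can be plugged in. The inputs below are the hypothetical figures from the paragraph above.

```python
def objseo_actions(objects: int, events_per_object_per_day: int, rate_percent: int,
                   days: int = 365) -> int:
    """Successful actions per year for a given conversion rate (in whole percent)."""
    events = objects * events_per_object_per_day * days
    return events * rate_percent // 100  # integer arithmetic avoids float rounding


before = objseo_actions(1_000, 1, rate_percent=2)
after = objseo_actions(1_000, 1, rate_percent=5)
print(before, after, after - before)  # 7300 18250 10950
```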

Implications

As the interface fades and objects rise, the frontier of discoverability shifts. The battle is no longer solely for first-page rankings in a browser. It’s for seamless alignment with context, object-presence and human intention. More than half of click-throughs still go to the top three organic listings. But in the sensory web, the “top three” may not be a web page at all; they may be your smart thermostat, your wearable AR glasses, or the sensor in your car.

Conclusion: When Search Becomes Sentient

Search no longer waits to be asked — it listens, feels, and responds to your mind. The sensory web is not merely an evolution of the internet; it is its awakening. It is the moment when machines cease to be passive repositories of information and become living participants in the continuum of human intent. From Zero-UI interfaces to ObjSEO ecosystems, the story of search is transforming from an act of query to an act of presence.

We began with the disappearance of the interface — when voice, gesture, and neural patterns replaced clicks and taps. We saw how THATWARE’s vision of Zero-UI SEO enables seamless communication between human and machine, bridging the physical and digital through haptics, emotion, and intuition. Then we explored ObjSEO, where objects themselves — sensors, wearables, vehicles, and everyday tools — become searchable entities, pulsing with contextual data, capable of initiating interaction without explicit command.

In this new reality, optimisation means more than ranking a page; it means orchestrating a symphony of signals. Every motion, every pulse of light, every neural spike becomes a fragment of a search sentence. The query is written not in words but in sensations — and the web responds accordingly. Consider that by 2030, over 75 billion connected devices will surround us, each capable of sensing and transmitting intent (Statista, 2025). In such a world, search ceases to be something you do; it becomes something you are.

THATWARE’s approach recognizes this paradigm shift. Its technologies don’t just optimise for algorithms; they design for empathy — for machine empathy. They teach systems to understand context, emotion, and sensory nuance. In the Zero-UI era, a successful search experience is not measured in clicks or impressions but in resonance — how intuitively and invisibly technology aligns with human desire.

We are entering a time when information flows like thought: immediate, silent, and symbiotic. A world where your devices don’t just respond to queries but anticipate needs, where your environment becomes a living index, your gestures become syntax, and your presence becomes metadata.

In short, THATWARE designs the senses of tomorrow, not to replace human perception, but to extend it. The interface disappears, and in its place arises a new kind of consciousness, one shared between human and machine, between intent and understanding. The future of search is not on a screen. It is within and around you.
