Get a Customized Website SEO Audit and SEO Marketing Strategy

This project develops a comprehensive semantic evaluation system designed to measure how accurately a page maintains focus on its intended topic and where it may unintentionally drift into adjacent subject areas. Using transformer-based embeddings, the system builds a topic centroid representing the core thematic intent of the page. All content blocks are then analyzed against this centroid to determine their alignment, identify off-topic segments, and quantify the precision of topical coverage.

Beyond analyzing a single page in isolation, the system also assesses how its themes interact with other related pages. A dedicated cross-page module evaluates centroid similarities and section-level overlaps to reveal where two pages may cover similar ground or compete for the same conceptual territory. This helps distinguish natural topic adjacency from unwanted content leakage.

Together, these components provide a structured, data-driven assessment of topical focus integrity. The approach is fully automated, modular, and optimized for real-world SEO workflows where maintaining clear topical boundaries is essential for search performance, content differentiation, and cluster-level authority.

Project Purpose

The purpose of this project is to offer a reliable and structured method for evaluating how well a page maintains its intended topical scope and how distinctly it differentiates itself from related pages within the same content ecosystem. Modern content environments often suffer from subtle topic overlap, fragmented thematic focus, or unintended expansion into adjacent subjects. These issues can weaken topical authority, dilute relevance, and create unnecessary competition between pages addressing similar themes.

This system addresses these challenges by combining transformer-based semantic understanding with a block-level analytical framework. It determines whether individual sections support the primary topic, highlights areas that drift away from the core theme, and measures how closely multiple pages align at a conceptual level. Through both within-page and cross-page evaluations, the project enables clear detection of topical precision, leakage, overlap, and differentiation.

The overall purpose is to establish a dependable foundation for producing focused, non-competing, and well-structured content assets that strengthen topical clarity, reinforce thematic depth, and support more effective content architecture.

Project’s Key Topics Explanation and Understanding

Topical Boundaries in SEO Content

Topical boundaries define the conceptual limits of what a piece of content is intended to cover. In a well-structured SEO ecosystem, each page should focus on a distinct aspect of a broader topic cluster—ensuring clarity both for users and search engines. When a page consistently stays within these boundaries, search engines can easily interpret its primary purpose, increasing the likelihood of strong topical authority and stable rankings.

However, pages often unintentionally drift beyond their intended scope. This occurs when content expands into adjacent areas, introduces loosely related topics, or attempts to cover multiple themes in a single piece. Such boundary leakage weakens the semantic clarity of the page and can create conflicts with other pages targeting related but separate topics. When boundaries blur, search engines may struggle to identify which page is the best match for specific queries, resulting in ranking fluctuations or cannibalization.

This project evaluates how strictly each page adheres to its topical boundaries, detecting both subtle and significant boundary shifts. The analysis provides a detailed view of where the content strengthens topical authority and where it diffuses into less relevant areas. This ensures that every page serves a clear strategic purpose and contributes effectively to its broader topical cluster.

Content Centroid Representation

A content centroid is the semantic “center of gravity” of a page. It is created by aggregating contextual embeddings of all meaningful content sections, producing a numerical vector that summarizes the page’s dominant topic. This centroid is not a keyword average—it is a contextual representation capturing deeper relationships between ideas, themes, and linguistic structures in the content.

In a cluster of pages, each centroid acts as a conceptual signature. By comparing centroids across pages, the system can determine how similar or distinct the pages truly are at a thematic level. If two centroids are very close, the pages may be targeting overlapping intent, even if the wording or structure differs. Conversely, a large centroid separation indicates strong thematic differentiation, which is ideal for a well-segmented content strategy.

This representation provides a high-level diagnostic of the entire content set. It answers a fundamental question: Are these pages semantically unique enough to stand independently, or are they unintentionally competing? Centroid-level insights lay the foundation for detecting deeper, section-level overlaps and thematic conflicts.

Cross-Page Similarity Assessment

Cross-page similarity analysis evaluates how conceptually aligned one page is with another. While centroid comparison offers a macro-level view, cross-page similarity dives into both broad thematic relationships and localized content similarities. This dual perspective allows the analysis to identify whether pages are genuinely distinct or whether they share meaningful amounts of semantic content.

In a real SEO environment, this assessment is critical. Multiple pages created around a similar theme—intentionally or not—often begin covering the same conceptual territory. Even subtle overlaps can trigger keyword cannibalization, where two pages compete for similar queries, weakening performance for both. The cross-page similarity evaluation highlights these risks early by identifying where thematic or structural redundancy may exist.

The results offer actionable visibility into cluster cohesion and separation. Pages that are too similar can be repositioned, rewritten, or combined. Pages that are meaningfully different can be further strengthened to reinforce their unique role within the cluster. This analysis ensures that the overall content architecture supports long-term rankings instead of internal competition.

Section-Level Alignment Scoring

Every page is composed of multiple content sections or blocks, each contributing differently to the primary topic. Alignment scoring measures how strongly each section supports the core theme identified through the page centroid. Highly aligned sections are the core assets of the page—they reinforce the main topic, provide depth, and improve SEO relevance.

On the other hand, lower-alignment sections often introduce unnecessary context, tangential ideas, or off-topic discussions. These sections may dilute topical precision and increase the risk of leakage. Alignment scoring pinpoints exactly where this occurs, allowing for targeted optimization rather than large-scale rewriting.

This granular scoring is valuable for content restructuring, pruning, and enhancement. It not only identifies weak areas but also reveals high-performing sections that may be leveraged or expanded. Through this block-by-block view, the project provides a detailed structural evaluation of each page and helps identify opportunities to strengthen topic adherence from within the content.

Leakage Section Identification

Leakage sections are parts of the content that drift away from the core topic and introduce unrelated or marginally related information. These sections contribute to topical noise, reducing semantic clarity and weakening search engine interpretation. Leakage often occurs unintentionally when writers attempt to broaden context, include tangential examples, or connect multiple themes in a single page.

This project identifies leakage by comparing each block against the page centroid and detecting sections that fall below a defined topical relevance threshold. By isolating these weak areas, the analysis reveals specific content segments that may cause ranking instability or hinder authority building.

Understanding leakage provides actionable insights into whether these sections should be refined, repositioned, or removed. Improving or eliminating low-alignment segments restores topical sharpness, ensures semantic consistency, and enables the page to compete more effectively for its intended thematic space.

Section Overlap Across Multiple Pages

Section overlap analysis extends beyond page-level evaluation by comparing individual content blocks across the entire set of URLs. Even when two pages appear distinct at the surface level, they may share certain sections that resemble each other either in structure, semantics, or intention. Such overlaps contribute to redundancy and dilute the uniqueness of each page.

The project identifies which sections across pages display similar embeddings, indicating conceptual or contextual repetition. This is critical for maintaining a clean and well-differentiated content library. The insights help determine whether certain sections should be consolidated, rewritten, or redistributed to ensure that each page offers unique value.

Section-level overlap prevention is essential for strong cluster architecture. It ensures that related pages complement rather than compete with one another, strengthening the entire topical ecosystem.

Embedding-Based Semantic Evaluation

This project uses transformer-based embeddings to evaluate content at both the page and section level. Embeddings allow the system to capture nuanced semantic relationships beyond literal or keyword-based signals. This enables a more accurate and modern evaluation that aligns with how search engines interpret content today.

Embedding-based analysis captures context, intent, and conceptual relationships between topics—making it ideal for analyzing topical boundaries, centroid similarities, leakage, and cross-page overlaps. This approach ensures that the evaluation reflects actual semantic understanding rather than keyword frequency or surface-level comparisons.

By using advanced NLP embeddings, the project delivers insights that align with real-world search engine ranking behavior, providing a reliable foundation for content restructuring and strategic optimization.

keyboard_arrow_down

Q&A: Understanding the Project’s Value and Importance

Why is identifying topical boundaries important for maintaining strong SEO performance?

Topical boundaries act as the semantic guardrails of any content ecosystem. When a page stays within these boundaries, its intent becomes clearer to search engines, improving the chances of ranking for the correct set of queries. A tightly focused narrative signals expertise and authority, both of which are essential factors in modern search algorithms.

However, pages often drift unintentionally into surrounding topics, creating ambiguity. When this happens, multiple pages begin touching on similar ideas, weakening the distinct purpose of each piece. Identifying topical boundaries reveals exactly where the content maintains clarity and where it drifts. This ensures that every page strengthens its topic cluster instead of competing internally or sending mixed signals to search engines.

In practice, this leads to more stable rankings, reduced cannibalization, and an overall stronger content structure. The analysis ensures that each page occupies its intended semantic space without overlap or dilution.

How does centroid-level analysis help differentiate pages that appear similar?

Centroid analysis provides a high-level semantic fingerprint of each page. Even when two pages appear different in writing style or structure, their underlying themes may be similar. The centroid captures these deeper relationships by summarizing the entire page’s semantics into a single contextual vector.

By comparing centroids across multiple pages, the analysis identifies whether two pages are genuinely distinct or subtly competing for the same intent. This is often difficult to detect through manual review, especially when dealing with large content libraries. Pages with high centroid similarity may unintentionally target the same user journey or query space, resulting in diluted performance.

Understanding centroid distances helps determine where content needs refinement, consolidation, or repositioning. It ensures that each page contributes unique value, supports topic diversification, and reinforces a logical content hierarchy across the site.

What practical benefits come from detecting cross-page similarity and overlap?

Cross-page similarity detection prevents internal competition—one of the most common and costly issues in SEO. When two or more pages overlap conceptually, search engines may split relevance signals across them, causing both pages to underperform. Detecting these issues early ensures that each page can be optimized to occupy its own semantic territory.

Beyond avoiding cannibalization, this analysis helps strengthen the content architecture. Overlaps can reveal gaps, redundancies, or unintended duplications that arise over time as new content is created. By highlighting these patterns, the system assists in refining cluster strategy, reinforcing unique coverage areas, and aligning content with specific search intents.

This ultimately leads to a cleaner, more powerful content ecosystem where every page supports the broader strategy instead of weakening it. The benefit is both immediate—through optimization—and long-term through sustainable ranking stability.

How does section-level alignment help identify the strongest and weakest parts of a page?

Every page contains sections that vary in quality, focus, and relevance. Section-level alignment evaluates each block individually and determines how effectively it supports the page’s central topic. High-alignment sections are the most valuable—they contribute depth, authority, and clarity.

Lower-alignment sections, on the other hand, may introduce tangents or unnecessary context. These parts often go unnoticed during manual reviews, especially in long-form content. The analysis highlights these weak spots with precision, allowing for targeted improvements rather than broad rewrites.

By understanding which sections carry the most value and which introduce noise, content decisions become more strategic. Strengthening high-value blocks and refining or removing weaker ones enhances the page’s overall focus and improves its ability to rank consistently.

Why is identifying leakage sections crucial for improving topical precision?

Leakage sections reduce semantic strength by diverting attention away from the core topic. These segments may be well-written, but if they drift too far from the intended theme, they weaken the page’s relevance. Leakage contributes directly to ranking instability by introducing mixed signals.

Identifying these sections makes optimization more efficient. Instead of rewriting the entire content, the system pinpoints specific areas that dilute the page’s purpose. This helps preserve strong sections while cleaning up or refocusing weaker ones, maintaining the integrity of the topic.

Addressing leakage ensures that every part of the page reinforces the central theme, resulting in improved topical authority, stronger keyword alignment, and a more cohesive user experience.

What value does section-level overlap detection bring in multi-page environments?

Section-level overlap detection is essential when managing multiple pages that belong to the same cluster or target related themes. Overlaps often occur unintentionally as content expands and multiple pages discuss similar ideas. Even if the pages differ at the macro level, certain sections may still resemble each other semantically.

These overlaps create redundancy and diminish the uniqueness of each page. By identifying them, the analysis helps determine whether sections need rewriting, repositioning, or removal. It prevents pages from competing during indexing and ensures that each URL offers distinct, complementary value within the cluster.

This level of granularity brings transparency to the content ecosystem and strengthens the strategic role of each page, ensuring long-term cohesion across the entire topic set.

How does embedding-based analysis improve content evaluation compared to keyword-based methods?

Traditional keyword matching overlooks the deeper meaning behind content. Embedding-based analysis captures context, relationships, and intent—mirroring how modern search engines interpret text. This allows the system to detect nuanced overlaps, thematic drift, and conceptual similarities that keyword tools cannot recognize.

By using transformer-based embeddings, the evaluation becomes aligned with real-world ranking mechanisms. This ensures that insights are not only accurate but also actionable in an SEO environment where semantic understanding is key. Embedding-based methods provide a more realistic representation of how search engines assess content, making the analysis directly valuable in shaping strategy.

This advanced approach delivers richer insights that cannot be achieved through traditional keyword reports, enabling more precise optimization and stronger performance outcomes.

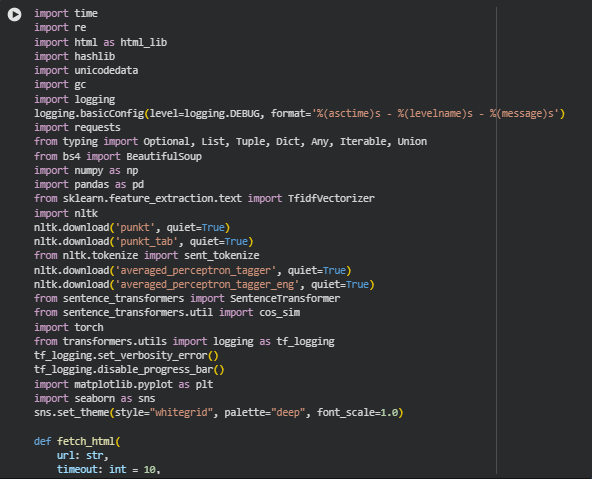

Libraries Used

time

The time library is a core Python module that provides functions to work with timestamps, measure execution duration, and handle delays. It is commonly used in performance-sensitive applications where developers need visibility into how long different operations take.

In this project, time is used to track the execution time of key processing steps such as text extraction, embedding computation, and similarity calculations. This helps ensure that the overall pipeline performs efficiently when processing multiple pages and large text segments.

re

The re library provides Python’s regular expression engine, enabling advanced pattern matching, text scanning, and rule-based text cleaning. It plays a critical role in tasks like detecting patterns, removing unwanted characters, and standardizing input strings.

Here, re is used to clean extracted text, normalize whitespace, filter out boilerplate structures, and prepare content for segmented analysis. It also supports custom label-trimming logic for visualizations, ensuring long text labels remain readable in plots.

html (aliased as html_lib)

The built-in html module provides utilities for handling HTML entities, encoding, and decoding. Web content often contains encoded characters such as &, , or escaped Unicode symbols.

In this project, the module assists in decoding HTML entities during page content extraction. This ensures the text is clean, human-readable, and semantically accurate before downstream processing like tokenization or embedding generation.

hashlib

hashlib provides hashing algorithms such as MD5, SHA-1, and SHA-256. These are useful for creating secure, deterministic identifiers.

In this implementation, hashlib is used to generate unique hashed identifiers for pages or text blocks. These identifiers help track items across the pipeline, organize similarity scores, and ensure stable references in visualizations without exposing full URLs or long texts.

unicodedata

The unicodedata module offers access to Unicode character properties, normalization forms, and general Unicode handling.

In this project, it is used to normalize text so that characters with accents, formatting inconsistencies, or multi-codepoint representations become standardized. This ensures clean and consistent text for both TF-IDF processing and embedding generation.

gc

The gc (garbage collector) module gives Python-level control over memory cleanup by exposing garbage collection mechanisms.

Because embedding models and text vectors can occupy significant memory, the project uses gc to manually trigger cleanup during specific steps. This helps maintain predictable performance and avoids memory bloat in multi-page analysis runs.

logging

The logging library provides a standardized way to record runtime information, warnings, and debugging details. It is crucial for understanding the behavior of complex pipelines.

In this project, logging is used to track each major step in the workflow—page fetching, text extraction, preprocessing, embedding computation, and similarity analysis. It helps diagnose issues quickly and ensures traceability in real-world deployments.

requests

The requests library is the industry-standard tool for making HTTP requests in Python. It offers a simple interface for fetching webpages, APIs, and external resources.

Here, it is used to fetch the content of user-provided URLs. It handles retries, redirects, and response validation, ensuring a stable and reliable page extraction pipeline before the content is analyzed.

typing

The typing module enables type hints such as List, Dict, Tuple, and Optional. Type hints improve code readability and reduce logical errors during development.

Within this project, it is used extensively to annotate function signatures and internal data structures. This provides clarity on expected input/output formats, which is essential for maintainability and extensibility.

BeautifulSoup (from bs4)

BeautifulSoup is a popular library for parsing HTML and XML documents. It can cleanly extract text, handle nested elements, and remove non-content artifacts.

Here, it forms the backbone of the text extraction workflow. The library removes scripts, styles, navigation elements, and irrelevant markup, leaving only meaningful content blocks for scoring and embedding preparation.

numpy

numpy is a foundational library for numerical computing. It provides efficient arrays, vectorized operations, and support for mathematical transformations.

In this project, it underpins similarity calculations, normalization steps, and numerical transformations needed for TF-IDF vectors and embedding comparisons. It ensures the analysis pipeline remains fast and scalable.

pandas

pandas provides high-performance data structures for tabular and labeled data, enabling clean manipulation of datasets.

The project uses pandas to organize extracted segments, scores, embeddings, and similarity results. It also acts as the intermediate format for result aggregation before visualization.

TfidfVectorizer from sklearn

The TF-IDF Vectorizer converts text into numeric vectors based on weighted term frequencies, capturing how important each word is in a document.

In this project, TF-IDF is used to extract surface-level topical signals. It complements deeper semantic embeddings by highlighting keyword relevance, lexical density, and content alignment at the term level.

nltk

NLTK (Natural Language Toolkit) is a widely used library for tokenization, text segmentation, and part-of-speech tagging.

This project uses NLTK for sentence tokenization and POS tagging when breaking content into analysis units and identifying structural or linguistic patterns that relate to semantic relevance and descriptive clarity.

SentenceTransformer & cos_sim

SentenceTransformer provides state-of-the-art transformer-based embedding models that convert sentences into dense semantic vectors. cos_sim computes cosine similarity between vectors.

This is core to the semantic analysis pipeline. Embeddings represent each content segment’s meaning, enabling precise measurement of semantic alignment and relevancy patterns across pages, sections, and topics.

torch

PyTorch is a deep learning framework used for running neural networks, including transformer models.

Here, it is required for executing the sentence-embedding model efficiently, handling GPU/CPU selection, and performing vector computations.

transformers.logging

This module allows controlling the verbosity of the HuggingFace Transformers library.

In this project, it suppresses model download messages, progress bars, and warnings to keep logs clean and professional during execution.

matplotlib.pyplot

Matplotlib is the primary plotting library in Python, used for creating 2D charts, annotations, and visual summaries.

The project uses it to generate structured visualization outputs such as similarity heatmaps, distribution plots, and block-level scoring charts. It ensures each plot is stable and customizable.

seaborn

Seaborn is a statistical visualization library built on top of Matplotlib. It provides more polished aesthetics and high-level APIs.

In this project, Seaborn improves clarity of heatmaps, barplots, and semantic distribution charts. It is paired with the trimming function for managing long text labels in legends and axis labels.

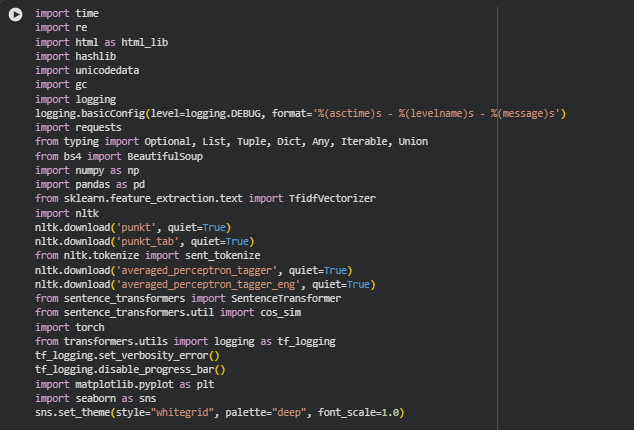

Function: fetch_html

Summary

The fetch_html function retrieves the raw HTML content of a webpage while applying a polite crawling strategy. It incorporates configurable delays, retry logic, exponential backoff, timeout control, and browser-like headers to minimize the risk of request blocks or rate limits. It is designed for stability in real-world deployments where multiple URLs are fetched in sequence.

The function returns the full HTML text only when the response is successful, contains sufficient content, and is correctly decoded with the appropriate character encoding settings. In cases where all retries fail, it returns None so that downstream functions can treat the page as unavailable.

Key Code Explanations

headers = {“User-Agent”: “Mozilla/5.0 (compatible; TopicalBoundaryBot/1.0)”}

- This line sets the HTTP request header to mimic a real browser. Many websites reject unknown or default user agents, so using a structured identifier helps prevent blocks while still being transparent.

while attempt <= max_retries:

- This loop ensures the function attempts the request multiple times. It allows the system to recover gracefully from temporary connectivity issues, throttling, or network fluctuations.

wait = backoff ** attempt

time.sleep(wait)

- This implements exponential backoff. Each retry waits significantly longer than the previous one. This is essential for avoiding aggressive repeated hits on websites that temporarily reject or slow traffic.

r.encoding = r.apparent_encoding or “utf-8”

html_text = r.text

- Webpages may incorrectly declare their encoding. Using apparent_encoding helps automatically detect the correct one to avoid corrupted characters in extracted text.

if len(html_text.strip()) > 100:

return html_text

- A sanity check is used to ensure the fetched HTML is not empty or blocked (e.g., CAPTCHA or error stubs). Only meaningful content is passed downstream.

Function: clean_html_for_blocks

Summary

The clean_html_for_blocks function prepares webpage content for semantic segmentation by removing non-content elements. It filters out scripts, navigation menus, ads, visual elements, and metadata that would distort the semantic understanding of the page. The function also removes HTML comments and empty tags to produce a clean, content-heavy structure suitable for block-based extraction.

This cleaning ensures that the analysis focuses solely on meaningful text that contributes to topical understanding, improving both the accuracy and stability of block extraction.

Key Code Explanations

soup = BeautifulSoup(html_text, “lxml”)

- The HTML is parsed using the fast and robust lxml parser. It handles poorly structured HTML effectively, which is common on real-world websites.

remove_tags = [“script”, “style”, …]

- This list defines elements that do not contribute to topical meaning. Removing them ensures that only content intended for user reading remains.

for el in soup.find_all(tag):

el.decompose()

- decompose() removes elements entirely from the DOM tree, preventing residual empty tags and improving processing performance.

for comment in soup.find_all(string=lambda t: isinstance(t, type(soup.Comment))):

comment.extract()

- HTML often contains comments used by CMS systems or ad networks. Extracting them ensures that the text remains clean and analysis-ready.

if not tag.get_text(strip=True):

tag.decompose()

- Empty tags have no value for semantic interpretation. Removing them avoids unnecessary block creation noise.

Function: extract_page_blocks

Summary

The extract_page_blocks function is the core of the content segmentation workflow. It fetches the page HTML, cleans it, identifies the page title, and extracts coherent text blocks based on heading hierarchy and paragraph structures. These blocks serve as the units for semantic relevance, boundary detection, and topical drift analysis.

The process respects both semantic structure (headings like H2/H3/H4) and content sufficiency (min_block_chars). Each block receives a unique hash ID for stable references during scoring, visualization, and result interpretation. If a page fails to fetch or parse, the function returns a structured error dictionary without disrupting the pipeline.

Key Code Explanations

html_text = fetch_html(url)

if not html_text:

return {“url”: url, “title”: None, “blocks”: [], “note”: “Failed to fetch”}

- The function delegates page retrieval to fetch_html and exits early if the page could not be accessed. This prevents cascading errors later in the workflow.

soup = clean_html_for_blocks(html_text)

- Cleaning prior to extraction ensures that only meaningful content structures contribute to the block-extraction logic. This improves segmentation accuracy dramatically.

elements = soup.find_all([“h2”, “h3”, “h4”, “p”, “li”, “blockquote”])

- Only structural and textual elements relevant to content meaning are included. This allows the function to build topical blocks that match how users experience the page.

if tag in [“h2”, “h3”, “h4”]:

if len(current_block[“raw_text”]) >= min_block_chars:

blocks.append(current_block)

current_block = {“heading”: text, “raw_text”: “”, “position”: block_idx}

- This logic ensures that a new topical block begins whenever a heading appears—mirroring real-world content organization. Only blocks with enough content are retained.

if tag in [“p”, “li”, “blockquote”]:

current_block[“raw_text”] += ” ” + text

- Paragraphs and list items are appended to the current block, accumulating coherent thematic content.

for blk in blocks:

base = f”{blk[‘heading’]}_{blk[‘position’]}_{url}”

blk[“block_id”] = hashlib.md5(base.encode(“utf-8”)).hexdigest()

- Each block receives a deterministic unique ID based on heading, position, and URL. This avoids collisions and ensures stable references across reports, charts, and multiple runs.

keyboard_arrow_down

Function: fetch_html

Summary

The fetch_html function retrieves the raw HTML content of a webpage while applying a polite crawling strategy. It incorporates configurable delays, retry logic, exponential backoff, timeout control, and browser-like headers to minimize the risk of request blocks or rate limits. It is designed for stability in real-world deployments where multiple URLs are fetched in sequence.

The function returns the full HTML text only when the response is successful, contains sufficient content, and is correctly decoded with the appropriate character encoding settings. In cases where all retries fail, it returns None so that downstream functions can treat the page as unavailable.

Key Code Explanations

1.

headers = {“User-Agent”: “Mozilla/5.0 (compatible; TopicalBoundaryBot/1.0)”}

This line sets the HTTP request header to mimic a real browser. Many websites reject unknown or default user agents, so using a structured identifier helps prevent blocks while still being transparent.

2.

while attempt <= max_retries:

This loop ensures the function attempts the request multiple times. It allows the system to recover gracefully from temporary connectivity issues, throttling, or network fluctuations.

3.

wait = backoff ** attempt

time.sleep(wait)

This implements exponential backoff. Each retry waits significantly longer than the previous one. This is essential for avoiding aggressive repeated hits on websites that temporarily reject or slow traffic.

4.

r.encoding = r.apparent_encoding or “utf-8”

html_text = r.text

Webpages may incorrectly declare their encoding. Using apparent_encoding helps automatically detect the correct one to avoid corrupted characters in extracted text.

5.

if len(html_text.strip()) > 100:

return html_text

A sanity check is used to ensure the fetched HTML is not empty or blocked (e.g., CAPTCHA or error stubs). Only meaningful content is passed downstream.

Function: clean_html_for_blocks

Summary

The clean_html_for_blocks function prepares webpage content for semantic segmentation by removing non-content elements. It filters out scripts, navigation menus, ads, visual elements, and metadata that would distort the semantic understanding of the page. The function also removes HTML comments and empty tags to produce a clean, content-heavy structure suitable for block-based extraction.

This cleaning ensures that the analysis focuses solely on meaningful text that contributes to topical understanding, improving both the accuracy and stability of block extraction.

Key Code Explanations

1.

soup = BeautifulSoup(html_text, “lxml”)

The HTML is parsed using the fast and robust lxml parser. It handles poorly structured HTML effectively, which is common on real-world websites.

2.

remove_tags = [“script”, “style”, …]

This list defines elements that do not contribute to topical meaning. Removing them ensures that only content intended for user reading remains.

3.

for el in soup.find_all(tag):

el.decompose()

decompose() removes elements entirely from the DOM tree, preventing residual empty tags and improving processing performance.

4.

for comment in soup.find_all(string=lambda t: isinstance(t, type(soup.Comment))):

comment.extract()

HTML often contains comments used by CMS systems or ad networks. Extracting them ensures that the text remains clean and analysis-ready.

5.

if not tag.get_text(strip=True):

tag.decompose()

Empty tags have no value for semantic interpretation. Removing them avoids unnecessary block creation noise.

Function: extract_page_blocks

Summary

The extract_page_blocks function is the core of the content segmentation workflow. It fetches the page HTML, cleans it, identifies the page title, and extracts coherent text blocks based on heading hierarchy and paragraph structures. These blocks serve as the units for semantic relevance, boundary detection, and topical drift analysis.

The process respects both semantic structure (headings like H2/H3/H4) and content sufficiency (min_block_chars). Each block receives a unique hash ID for stable references during scoring, visualization, and result interpretation. If a page fails to fetch or parse, the function returns a structured error dictionary without disrupting the pipeline.

Key Code Explanations

1.

html_text = fetch_html(url)

if not html_text:

return {“url”: url, “title”: None, “blocks”: [], “note”: “Failed to fetch”}

The function delegates page retrieval to fetch_html and exits early if the page could not be accessed. This prevents cascading errors later in the workflow.

2.

soup = clean_html_for_blocks(html_text)

Cleaning prior to extraction ensures that only meaningful content structures contribute to the block-extraction logic. This improves segmentation accuracy dramatically.

3.

elements = soup.find_all([“h2”, “h3”, “h4”, “p”, “li”, “blockquote”])

Only structural and textual elements relevant to content meaning are included. This allows the function to build topical blocks that match how users experience the page.

4.

if tag in [“h2”, “h3”, “h4”]:

if len(current_block[“raw_text”]) >= min_block_chars:

blocks.append(current_block)

current_block = {“heading”: text, “raw_text”: “”, “position”: block_idx}

This logic ensures that a new topical block begins whenever a heading appears—mirroring real-world content organization. Only blocks with enough content are retained.

5.

if tag in [“p”, “li”, “blockquote”]:

current_block[“raw_text”] += ” ” + text

Paragraphs and list items are appended to the current block, accumulating coherent thematic content.

6.

for blk in blocks:

base = f”{blk[‘heading’]}_{blk[‘position’]}_{url}”

blk[“block_id”] = hashlib.md5(base.encode(“utf-8”)).hexdigest()

Each block receives a deterministic unique ID based on heading, position, and URL. This avoids collisions and ensures stable references across reports, charts, and multiple runs.

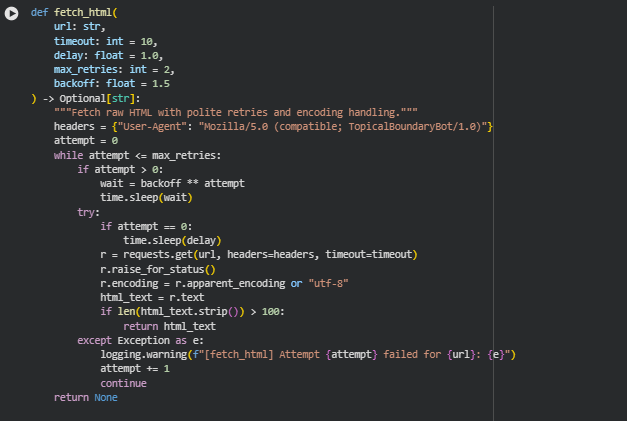

Function: split_long_block

Summary

The split_long_block function handles situations where individual content blocks are excessively long for embedding, semantic scoring, or transformer processing. Large blocks naturally occur in long-form articles or pages with dense paragraphs under a single heading. These oversized blocks can degrade embedding quality, exceed model token limits, or weaken the granularity of topical boundary detection.

To preserve semantic coherence, the function divides the block using sentence boundaries rather than arbitrary character or word counts. It estimates the number of tokens per sentence using a heuristic designed to approximate the behavior of transformer tokenizers. Once the estimated token count for the current chunk exceeds the allowed limit, the chunk is finalized and stored as a new block. Each chunk inherits the original heading but receives an incremental part indicator (e.g., “(Part 1)”, “(Part 2)”). A unique hashed block ID is assigned for consistency and traceability.

This ensures that every block processed downstream remains within safe token limits while preserving readability and contextual meaning, resulting in more accurate scoring and cleaner interpretability.

Key Code Explanations

1.

sentences = sent_tokenize(block[“text”])

The function begins by splitting the entire block into individual sentences. Partitioning by sentences prevents semantic breaks and ensures that the content remains logically structured even after splitting.

2.

sent_tokens = len(sent.split()) / 0.75

This line estimates the number of tokens in a sentence using a ratio derived from typical transformer tokenization patterns (approximately 1.33 tokens per word). It avoids the overhead of calling an actual tokenizer while still providing a consistent approximation.

3.

if current_token_est + sent_tokens > max_tokens:

Each chunk grows sentence by sentence until it reaches the maximum allowed token threshold. This check ensures that the final segment remains under the configured limit, preventing model truncation or performance degradation.

4.

chunk_text = ” “.join(current_chunk)

new_blocks.append({

“block_id”: hashlib.md5((block[“block_id”] + f”_{part_counter}”).encode()).hexdigest(),

“heading”: f”{block[‘heading’]} (Part {part_counter})”,

…

})

This block finalizes each chunk:

- Combines sentences into a unified text segment

- Generates a deterministic new block ID using the original ID plus a part index

- Adjusts the heading to reflect the part number

- Preserves metadata such as position and token estimates

This ensures traceability and clarity in downstream analysis and visualizations.

5.

if current_chunk:

…

new_blocks.append({…})

After iterating through all sentences, the final partial chunk is added. This guarantees completeness even if the last chunk never triggered a token overflow.

Function: apply_block_splitter

Summary

The apply_block_splitter function processes a list of blocks and applies the block-splitting logic wherever necessary. Its purpose is to ensure uniform token-size boundaries across all blocks before semantic embedding or scoring. It iterates through each block, checks its estimated token count, and either:

- Splits the block using split_long_block if it exceeds the threshold, or

- Adds it unchanged if it falls within acceptable limits.

By returning the updated list of blocks, it integrates seamlessly into the extraction pipeline and ensures that all downstream components operate on well-structured, manageable content segments. As a utility function, it handles no parsing or text manipulation beyond delegating the splitting operation.

Function: normalize_block_text

Summary

The normalize_block_text function performs low-level text cleaning to prepare extracted HTML content for further processing. It focuses on structural normalization rather than semantic transformation. The function removes artifacts introduced by HTML encoding, browser rendering, Unicode inconsistencies, and stray control characters. This step ensures that the text is standardized, predictable, and free from formatting noise—important for consistent scoring, token estimation, and block splitting.

Normalization at this stage also reduces the risk of uneven embeddings or accidental mismatches during similarity calculations. By enforcing a uniform representation of spaces, line breaks, and special characters, this function helps maintain reliability across different websites that may use inconsistent HTML or text formatting.

Function: preprocess_blocks

Summary

The preprocess_blocks function is a crucial component of the pipeline, responsible for transforming raw extracted blocks into analyzable, high-quality content segments. It performs several layers of cleaning and filtering to ensure that only meaningful, topically relevant sections proceed to the analysis stage.

The function first normalizes text using normalize_block_text, then removes superficial or low-information text components such as inline URLs, reference markers, and boilerplate content. It also filters out blocks that are too short to carry any semantic weight, helping maintain analysis quality across different domains.

A key part of this function is boilerplate suppression. Blog posts, guides, and landing pages often include repetitive components—newsletter prompts, navigational text, disclosures, and promotional elements. Only content that contributes to topical coherence is retained, creating a dataset that is more aligned with the intent of the page.

After cleaning and filtering, the function computes important metadata such as word count, token estimation, and positional order. Finally, a failsafe splitting mechanism ensures oversized content blocks are processed through the block splitter, maintaining token safety for downstream embedding models.

The resulting return structure preserves the original URL and title while replacing raw blocks with a fully normalized, cleaned, and size-regulated block list ready for scoring and embedding.

Key Code Explanations

1.

default_boilerplate = [

“privacy policy”, “terms of service”, “cookie”, “subscribe”,

“click here”, “read more”, “related posts”, “advertisement”,

“contact us”, “newsletter”

]

This list represents commonly found non-informative website text. These phrases occur frequently in sidebars, page footers, announcement banners, and other non-topical components. By using this list as a filter criteria, the function ensures that such routine elements do not influence topical scoring or embedding similarity.

2.

text = normalize_block_text(raw_text)

Calling the normalization function ensures that the block text is free from HTML artifacts and standardized in Unicode form. This reduces noise and prevents inconsistencies in word counting, token estimation, or embedding generation.

3.

if not text or len(text.split()) < min_words_per_block:

continue

This line excludes content that is too short to represent meaningful topical information. Very small blocks—short disclaimers, single-line blurbs, or standalone list items—provide little to no value for semantic analysis and are removed early.

4.

if any(bp in lower_text for bp in default_boilerplate) and len(lower_text.split()) < 200:

continue

This ensures boilerplate content is rejected without eliminating long-form content that merely contains a boilerplate term incidentally. For instance, a genuine article section referencing “newsletter strategies” should not be discarded. Combining keyword detection with a word-count threshold preserves legitimate content.

5.

token_est = int(word_count * 1.3)

A rough token estimation is computed based on the typical ratio between words and transformer tokens. This estimation guides the failsafe block splitting step and is computationally efficient compared to full tokenization.

6.

blocks = apply_block_splitter(clean_blocks, max_tokens, avg_tokens_per_sentence) `

Before returning, the function ensures that all blocks fall within safe token limits. This prevents downstream errors when generating embeddings or similarity scores and allows the rest of the pipeline to assume that block sizes are compliant.

Function: load_embedding_model

Summary

This function is responsible for loading the embedding model used throughout the analysis pipeline. Since the project relies heavily on transforming textual content into high-quality semantic vectors, ensuring that the model loads correctly—and is deployed on the most suitable device—is a critical first step in guaranteeing consistent performance.

The function automatically detects whether a GPU is available and assigns the model to the optimal device. This ensures better efficiency for large-scale page processing while still falling back safely to CPU environments. It includes robust checks to confirm that the model is not only loaded but also fully operational by performing a test embedding. These validation steps help prevent runtime failures at later stages of the analysis pipeline.

Overall, this function acts as the foundation for all semantic computations in the project. By guaranteeing that the embedding engine is properly initialized and ready, it ensures reliable, repeatable, and high-precision vector representations for every piece of content processed.

Key Code Explanations

1

device = torch.device(“cuda”) if torch.cuda.is_available() else torch.device(“cpu”)

This line determines whether a GPU is available and selects the appropriate device. Semantic embedding computations are significantly faster on a GPU—especially for multi-page, multi-block analysis—so this decision has a direct impact on performance. If CUDA is not available, the function gracefully defaults to CPU.

2

model = SentenceTransformer(model_name, device=device)

The actual embedding model is instantiated here using the chosen device. This sets the foundation for all downstream operations where text from content blocks is converted into dense vector embeddings. By loading the model directly onto the device, the function ensures minimal overhead and avoids unnecessary data transfers.

3

_ = model.encode([“Embedding model test sentence.”], normalize_embeddings=True)

This is an operational health check. After the model loads, the function performs a real encoding task using a simple test sentence. This ensures the model is functioning correctly—both structurally and numerically. If the model were corrupted, mis-configured, or incompatible with the environment, this line would immediately surface the problem. It also validates the normalization process, which is essential for stable similarity calculations later in the analysis.

Function: auto_derive_topic_intent

Summary

This function generates a concise topic intent statement using purely statistical methods—specifically TF-IDF over extracted noun phrases—without relying on any language model. This makes it efficient, lightweight, and deterministic, which is ideal during large-scale content processing or when running analyses in restricted environments.

The goal of this function is to capture the central thematic direction of a page by analyzing two pieces of information: the page title and the first content block. These two components typically carry the highest topical density and usually reflect the primary intention behind the page. By combining them, the function obtains a strong signal of what the content is fundamentally about.

To accomplish this, the function tokenizes the combined text, extracts nouns based on part-of-speech tagging, and then applies TF-IDF to surface the most meaningful noun terms. These terms are then merged into a short, human-readable topic intent statement. This distilled intent is later used as a reference point for the topical boundary evaluation steps in the broader analysis pipeline.

Key Code Explanations

1

text = f”{title}. {first_block_text}”

This line strategically combines the title and the first main content block. The title alone may be too short or ambiguous, while the first block often introduces the central topic with better clarity. Merging them ensures the intent extraction process starts with a strong, semantically rich sample of text.

2

tokens = nltk.word_tokenize(text)

tagged = nltk.pos_tag(tokens)

The function tokenizes the text and applies POS tagging to identify grammatical roles. Since noun phrases carry the strongest topical weight in most SEO-oriented pages, POS tagging is essential to isolate noun-based signals before applying TF-IDF. This ensures the final intent statement is not polluted by verbs, filler phrases, or connective words.

3

nouns = [word for word, pos in tagged if pos.startswith(‘NN’)]

This line retains only the tokens that are identified as nouns (singular or plural). For topic intent, nouns represent subjects, entities, and core topics. Filtering the text down to nouns simplifies the dataset and significantly improves the effectiveness of TF-IDF ranking.

4

vectorizer = TfidfVectorizer(max_features=5, stop_words=”english”)

vectorizer.fit([” “.join(nouns)])

key_terms = vectorizer.get_feature_names_out()

TF-IDF is applied only on the noun list, which helps surface the most significant terms based on their contribution to meaning. Using a small feature cap keeps the focus on the strongest signals. If the noun list is sparse or too generic, TF-IDF still helps select more meaningful combinations compared to raw frequency alone.

5

intent = ” “.join(key_terms)

intent = re.sub(r”\s+”, ” “, intent).strip()

After finding high-value noun terms, this block constructs a succinct, readable intent statement. The additional normalization ensures the text is clean, compact, and uniformly spaced—important for downstream use where consistency in representation is necessary.

Function: derive_topic_intent

Summary

This function determines the final topic intent for a given page by following a clear and predictable prioritization strategy. In many real-world content analysis workflows, external strategic direction, editor inputs, or business-specific intent statements may be available. When such manually provided intent exists, it should always override any automatically generated interpretation—ensuring the analysis respects user-defined, context-aware guidance.

If no manual intent is supplied, the function automatically derives the page’s topic intent using the combined information from the page title and its first content block. This follows a practical insight: the first block typically introduces or contextualizes the page’s purpose more reliably than later blocks, which may drift into auxiliary details. The automatic derivation relies on the previously defined TF-IDF noun-phrase approach, making the resulting intent both efficient and semantically precise.

Key Code Explanations

1

if provided_intent:

return provided_intent.strip()

This conditional ensures that whenever a user or system explicitly provides a topic intent, it takes priority. This design respects external editorial judgment or strategy documentation, which often reflects nuanced brand positioning or proprietary content guidelines that automatic methods cannot fully infer. The .strip() ensures that the returned text remains clean and free of formatting artifacts.

2

title = page.get(“title”, “”)

blocks = page.get(“blocks”, [])

The function safely retrieves the page title and block list. Using .get() avoids runtime errors if the page dictionary is missing fields. Returning empty defaults ensures the function remains robust even when dealing with incomplete or inconsistent page extraction outputs—common in real-world crawling and scraping scenarios.

3

if not blocks:

return title.strip()

In cases where no valid blocks could be extracted (for example, extremely thin pages, media-only pages, or extraction failures), the function falls back to using only the title as the intent. While minimal, the title still offers a reliable thematic anchor and preserves consistency in downstream processing.

4

first_block_text = blocks[0][“text”]

return auto_derive_topic_intent(title, first_block_text)

The first block is selected as the primary textual basis for automatic intent derivation. It is then passed along with the title to the auto_derive_topic_intent function, which applies noun extraction and TF-IDF weighting. This step ensures the final intent reflects the strongest, most immediate content signals while remaining efficient and interpretable.

Function: assign_topic_intents_to_pages

Summary

This function assigns a finalized topic intent to each processed page by combining manual inputs (when available) with the automatic intent derivation workflow. In real-world content analysis environments, not all pages require automated inference—some may come with predefined topic statements supplied by strategists, editors, or subject-matter experts. This function ensures that such authoritative inputs are always respected when present.

The function receives a single processed page dictionary and optionally a mapping of manual intents keyed by URL. It retrieves the page’s URL, checks whether a corresponding manual intent exists, and passes the appropriate value into the derive_topic_intent function. The resulting intent is then added back into the page dictionary under the key “topic_intent”.

This approach ensures that the intent assignment process remains consistent, predictable, and easy to audit. Whether a large batch of pages is being analyzed or only a single page, the function behaves uniformly and produces an intent that downstream topical boundary and semantic relevance analyses can depend on. The page is finally returned with its new intent attribute applied.

Function: embed_texts

Summary

This function transforms a list of textual inputs into dense numerical embeddings using a preloaded SentenceTransformer model. Embeddings provide a vector-space representation of meaning, enabling the system to compare blocks, detect overlaps, evaluate alignment with topic intents, and measure semantic distances across pages. Because embedding computation is a core component of topical boundary analysis, this function ensures that text is encoded consistently, efficiently, and with proper normalization.

The function first validates that the input list is not empty; if no texts are provided, it returns an empty NumPy array, preventing downstream errors. The batch size is automatically adjusted to avoid unnecessary memory usage on smaller inputs. The model’s .encode() method generates embeddings with normalization enabled so that cosine similarity calculations remain stable, interpretable, and scaled between -1 and 1. The embeddings are returned as a NumPy array, ready for all subsequent similarity, clustering, and cross-page comparison operations.

Function: embed_topic_intent

Summary

This function generates a normalized embedding vector specifically for a page’s assigned topic intent. Since the entire project relies on measuring how well each content block aligns with the declared topical intent, this embedding serves as the semantic anchor against which all block embeddings and cross-page comparisons are evaluated.

The function extracts the text stored under page[“topic_intent”]—whether manually supplied or automatically derived—and encodes it using the embed_texts utility. The result is a 2-dimensional NumPy array of shape (1, D), representing one normalized embedding ready for cosine similarity operations. Keeping the topic intent embedding separate and consistently shaped ensures reliable comparison logic across pages, blocks, and cross-page centroid calculations.

Function: embed_blocks

Summary

This function converts all textual content blocks of a page into their corresponding embedding vectors. Each block represents a semantically coherent unit of content, and transforming them into embeddings allows the project to evaluate topical alignment, compute centroids, perform similarity scoring, detect leakages, and analyze cross-page overlaps.

The function extracts the “text” field from every block in the page dictionary and sends these texts to the shared embedding utility embed_texts. This ensures consistent preprocessing, batching, normalization, and model handling across the project. The output is a NumPy array of shape (num_blocks, D), where each row corresponds to a block embedding. If a page has no valid blocks, the function returns an empty array—allowing the downstream pipeline to gracefully skip embedding-dependent operations.

Function: compute_page_centroid

Summary

This function computes the central semantic representation—or centroid—of a page by averaging all block embeddings. Each block embedding reflects the meaning of a specific content section, and taking the mean creates a single vector that captures the overall topical theme of the page.

The centroid plays a crucial role in the analysis:

- It becomes the reference point for measuring how aligned each block is with the page’s topic.

- It enables cross-page comparisons to detect topical overlap or leakage.

- It provides a stable, normalized representation of the page’s primary subject matter.

If a page has no valid block embeddings, the function safely returns an empty array to prevent downstream errors.

Because this function performs a straightforward operation—averaging embeddings—the explanation focuses primarily on describing the role and importance of this computation.

Key Code Explanations

1.

if block_embeddings.size == 0:

return np.array([])

This ensures the function handles edge cases gracefully. If no embeddings are available, it returns an empty array rather than attempting invalid arithmetic.

2.

centroid = block_embeddings.mean(axis=0, keepdims=True)

This line computes the mean value along the first dimension (across all blocks).

- axis=0 aggregates all block vectors to produce one averaged vector.

- keepdims=True ensures the output maintains shape (1, D), which is required for compatibility with cosine similarity calculations elsewhere.

Function: compute_block_alignment_scores

Summary

This function measures how well each content block aligns with the page’s overall topical direction. It computes the cosine similarity between every block embedding and the page centroid embedding. The output list reflects the degree to which each block supports or diverges from the core topic.

A higher alignment score indicates stronger relevance to the page’s main theme, while lower scores highlight blocks that may introduce tangential, diluted, or off-topic content. These scores serve as the foundation for key project metrics such as topical precision, leakage detection, and interpretability visualization.

If either the block embeddings or the centroid is unavailable, the function safely returns an empty list, allowing downstream steps to proceed without failure.

Key Code Explanations

1.

if block_embeddings.size == 0 or page_centroid.size == 0: return []

This check ensures robustness. If the page has no valid blocks or the centroid couldn’t be computed, the function returns an empty result instead of attempting similarity calculations.

2.

sims = cos_sim(block_embeddings, page_centroid) # (N,1)

This computes cosine similarity between each block embedding (N vectors) and the single centroid vector. The output shape (N,1) represents one score per block.

3.

return sims.flatten().tolist()

The similarity array is flattened into a simple list of floats, making it easy for later functions to index scores alongside their respective blocks.

Function: compute_page_topical_precision

Summary

This function produces a single topical precision score for an entire page by aggregating the similarity scores of individual content blocks. Each block receives an alignment score representing how closely its semantic meaning matches the page’s overall centroid. These raw scores (which naturally range between -1 and 1) are transformed into a normalized 0–1 scale, making interpretation intuitive and consistent.

To ensure the page-level score reflects practical content importance, the function uses word-count weighting by default. Longer, more substantial blocks naturally contribute more to topical precision than very short ones. This results in a more accurate representation of the page’s true topical focus.

If word counts are missing or inconsistent, the function falls back to equal weighting. The final score is safely clipped to the range [0, 1].

This metric is foundational for evaluating:

- How consistently the page stays aligned with its primary topic

- Whether certain blocks dilute topical focus

- Overall content coherence

Key Code Explanations

1.

if not alignment_scores:

return 0.0

This defensive check ensures that an empty input returns a neutral score rather than causing an error. When no scores are available, topical precision defaults to 0.

2.

a = np.asarray(alignment_scores, dtype=float)

a = (a + 1.0) / 2.0

· Converts the list of cosine similarities to a NumPy array.

· Rescales each score from [-1, 1] → [0, 1].

- -1 becomes 0

- 0 becomes 0.5

- 1 remains 1 This normalization is important for interpretability and consistent downstream comparisons.

3.

if block_wordcounts is not None:

w = np.asarray(block_wordcounts, dtype=float)

if w.shape[0] != a.shape[0]:

w = np.ones_like(a)

else:

w = np.ones_like(a)

This determines block-level weights:

- Uses word counts if properly aligned with the number of scores

- Falls back to equal weighting if sizes mismatch or word counts are unavailable This prevents misalignment issues and ensures stable behavior.

4.

precision = float((a * (w / wsum)).sum())

This calculates a weighted mean of the normalized alignment scores:

- (w / wsum) ensures weights sum to 1

- Multiplying by a and summing produces the weighted average This emphasizes semantically significant blocks during aggregation.

5.

return float(np.clip(precision, 0.0, 1.0))

Final defensive clipping ensures the score stays strictly between 0 and 1 even in rare numerical edge cases.

Function: detect_leakage_sections

Summary

The detect_leakage_sections function flags content blocks that drift away from the core topical focus of the page. It takes each block’s alignment score (cosine similarity against the page centroid), scales it from [-1, 1] to [0, 1], and then checks whether the block falls below a specified topical threshold. Any block whose scaled score is lower than the threshold is considered a “leakage section,” meaning it introduces off-topic or weakly-related content.

The function returns structured information for each such block, including its ID, heading, position, alignment score, and the reason for being flagged. This helps identify where topical dilution occurs and which sections need refinement.

Key Code Explanations

1. Scaling the alignment scores

a = np.asarray(alignment_scores, dtype=float)

a_scaled = (a + 1.0) / 2.0

Raw cosine similarity ranges from -1 to 1. This line converts it into a more interpretable range (0 to 1) using a standard normalization formula. A score closer to 1 indicates strong on-topic alignment, while lower values indicate weaker alignment. The threshold is applied on this scaled range.

2. Identifying leakage blocks

for idx, score in enumerate(a_scaled):

if score < threshold:

Each block’s scaled score is compared against the threshold (default 0.60). Any block falling below this value is deemed off-topic enough to be flagged for leakage. This loop ensures every block is evaluated independently.

3. Building a structured leakage result record

results.append({

“block_id”: meta.get(“block_id”),

“heading”: meta.get(“heading”),

“position”: meta.get(“position”),

“alignment”: float(score),

“reason”: f”alignment below threshold ({score:.3f} < {threshold})”

})

Each flagged block is stored as a dictionary containing metadata and contextual explanation. This ensures downstream analysis or visualizations can easily consume the leakage information. The “reason” field provides immediate clarity on why the block was selected, improving interpretability.

Function: analyze_page_topical_precision

Summary

The analyze_page_topical_precision function is the central orchestrator for the entire within-page topical precision workflow. It processes a single page and enriches it with metrics that quantify how well each content block aligns with the page’s topical intent. The function executes this analysis in a structured sequence:

1. Embedding Stage:

- Generates embeddings for the page’s topic intent statement.

- Generates embeddings for all content blocks.

2. Centroid Computation:

- Uses block embeddings and word counts to compute the overall page centroid (representing the page’s core topic vector).

3. Alignment Analysis:

- Computes cosine similarity between each block and the page centroid.

- Converts raw scores into [0,1] scale and attaches these values to individual blocks.

4. Page-Level Precision Score:

- Aggregates all alignment scores (weighted by word count) to compute a page-level topical precision score.

5. Leakage Detection:

- Flags blocks that fall below the specified alignment threshold and returns detailed leakage diagnostics.

The function returns a shallow copy of the page dictionary containing:

- A metrics dictionary (centroid, raw alignment scores, topical precision, and leakage sections).

- Updated blocks with an appended alignment_score field.

This makes the page immediately ready for reporting, visualization, or cross-page comparative analysis.

Key Code Explanations

1. Early exit when required data is missing

if not blocks or not topic_intent_text:

…

b[“alignment_score”] = 0.0

return result

If either the page lacks content blocks or there is no topic intent text, the analysis cannot proceed meaningfully. In such cases, the function safely returns a default structure with empty metrics and assigns a neutral alignment score (0.0) to all blocks. This avoids downstream errors and ensures consistent output formats.

2. Embedding generation

intent_emb = embed_topic_intent(page, model, batch_size=batch_size)

block_embs = embed_blocks(page, model, batch_size=batch_size)

These calls produce semantic embeddings for the topic intent and each block. The embeddings form the mathematical basis for centroid computation and similarity scoring. The model and batch size are passed through for efficient and flexible embedding generation.

3. Centroid computation with word-count weighting

block_wordcounts = [int(b.get(“word_count”, 0)) for b in blocks]

centroid = compute_page_centroid(block_embs, block_wordcounts)

result[“metrics”][“centroid”] = centroid

Word counts are extracted for weighting purposes. Longer blocks contribute more strongly to the page’s semantic direction, ensuring the centroid reflects the true dominant theme of the content. The resulting centroid is saved into the metrics section for any later cross-page analysis.

4. Computing and attaching alignment scores

alignment_raw = compute_block_alignment_scores(block_embs, centroid)

alignment_scaled = [float((s + 1.0) / 2.0) for s in alignment_raw]

for blk, score in zip(result.get(“blocks”, []), alignment_scaled):

blk[“alignment_score”] = score

Raw cosine similarity ([-1,1]) indicates how close each block is to the page centroid. These values are transformed into [0,1] for interpretability. The function then writes a scaled alignment score directly into each block’s dictionary, making block-level results universally accessible.

5. Page-level topical precision calculation

topical_precision = compute_page_topical_precision(alignment_raw, block_wordcounts)

result[“metrics”][“topical_precision”] = topical_precision

This aggregates raw alignment scores into a single page-level metric. The score reflects overall semantic consistency. The weighting ensures long-form sections influence the precision score proportionally more than very short blocks.

6. Leakage detection

leakage_sections = detect_leakage_sections(alignment_raw, result.get(“blocks”, []), threshold=leakage_threshold)

result[“metrics”][“leakage_sections”] = leakage_sections

The function checks for blocks that fall below the specified alignment threshold. These are returned with detailed metadata, giving clear insight into which parts of the content stray off-topic and why.

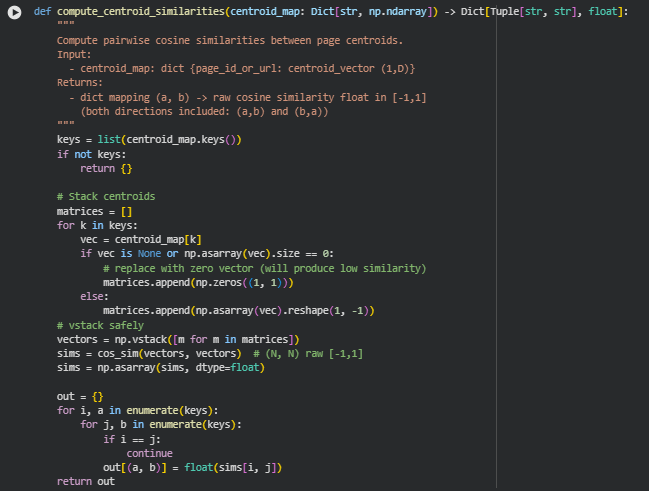

Function: compute_centroid_similarities

Summary

The compute_centroid_similarities function calculates the semantic similarity between all pairs of page-level centroids. Each centroid represents the dominant topical vector of a page, and comparing these vectors allows the system to determine how semantically close different pages are to each other.

This function is central for cross-page topical analysis, including:

- Detecting topic overlap between related pages

- Measuring cluster consistency within topic groups

- Identifying potential cannibalization risks

- Comparing pages for internal competition mapping

The function accepts a centroid_map (a dictionary mapping page identifiers to centroid vectors) and returns a dictionary where each key is a pair of pages (a, b) and the value is their cosine similarity (range [-1,1]). Higher values indicate greater semantic similarity and therefore closer topic overlap.

If a centroid is missing or empty, it’s replaced with a zero vector, ensuring the function never breaks and always returns a complete pairwise similarity matrix.

Key Code Explanations

1. Handling empty or missing centroids

vec = centroid_map[k]

if vec is None or np.asarray(vec).size == 0:

matrices.append(np.zeros((1, 1)))

else:

matrices.append(np.asarray(vec).reshape(1, -1))

Each centroid vector is validated before stacking.

- If a centroid is missing or empty, it’s replaced with a (1,1) zero vector.

- Zero vectors produce low similarity values, which is desirable because missing centroids shouldn’t falsely signal high semantic overlap. This makes the function robust and safe in real-world scenarios where some pages may not contain blocks or could not be embedded.

2. Building the centroid matrix for similarity computation

vectors = np.vstack([m for m in matrices])

sims = cos_sim(vectors, vectors) # (N, N)

All centroid vectors are stacked vertically to form a matrix of shape (N, D), where:

- N = number of pages

- D = embedding dimension

The pairwise cosine similarity matrix is then produced using cos_sim, resulting in an (N × N) matrix where every element represents similarity between two pages.

3. Generating pairwise output (excluding self-pairs)

for i, a in enumerate(keys):

for j, b in enumerate(keys):

if i == j:

continue

out[(a, b)] = float(sims[i, j])

The function iterates through every page pair:

- It skips self-pairs (a, a) because comparing a page with itself is not useful.

- It returns both directions (a, b) and (b, a) for full clarity, even though cosine similarity is symmetric.

The final output is an easy-to-consume dictionary where any two pages’ semantic similarity can be instantly retrieved.

Function: compute_section_overlap_index

Summary

The compute_section_overlap_index function measures how much two pages overlap at the section level by comparing embeddings of their content blocks. This is important for detecting topical duplication, internal competition, and cannibalization between pages that may appear to cover distinct topics but share underlying semantic sections.

It takes two sets of embeddings—section_embeddings_a and section_embeddings_b—and computes a similarity matrix of shape (nA × nB) where each value represents how similar a section in Page A is to a section in Page B.

The function supports three different analytical modes, each applicable to real-world situations:

· topk_mean (default): Focuses on the strongest overlapping sections. Good for detecting meaningful, targeted content overlap.

· proportion_above_cutoff: Measures what fraction of section pairs are substantially similar. Useful to detect widespread overlap or duplication.

· average_pairwise: Gives a holistic blended score of all section similarities.

All methods return a score in the range [0,1], where higher values represent greater semantic overlap between the two pages. If either page lacks embeddings (e.g., empty or unavailable), the function safely returns 0.0.

Key Code Explanations

1. Early exit when a page has no sections

if section_embeddings_a.size == 0 or section_embeddings_b.size == 0:

return 0.0

This prevents unnecessary computation and ensures stability. A page with zero sections cannot overlap with another page. The function gracefully returns a minimal overlap score—this avoids false positives and keeps the interpretation correct in real-world conditions (e.g., empty pages, skipped blocks, extraction failure).

2. Computing and scaling similarity matrix

sim = cos_sim(A, B) # shape (nA, nB) raw [-1,1]

sim = np.asarray(sim, dtype=float)

sim_scaled = (sim + 1.0) / 2.0 # scale to [0,1]

- sim contains cosine similarities between all section pairs.

- Cosine similarity ranges from -1 to 1, which is not intuitive for client-facing metrics.

- Scaling into [0,1] standardizes the results for interpretability and ensures consistency with other project metrics.

This allows the final overlap index to be used alongside other topical precision results without conversion.

3. topk_mean — focusing on the strongest overlapping sections

max_per_A = sim_scaled.max(axis=1)

topk_A = np.sort(max_per_A)[-min(topk, len(max_per_A)):]

mean_A = float(np.mean(topk_A)) if len(topk_A) > 0 else 0.0

This identifies the best match in Page B for each section in Page A, then selects the top-k strongest overlaps. This approach is ideal for practical SEO scenarios because:

- Often only a few strong overlaps indicate potential cannibalization.

- We prioritize meaningful overlaps rather than noise from many minor similarities.

The same logic is also applied symmetrically from Page B → Page A. The final score averages both directions, reducing directional bias.

4. proportion_above_cutoff — detecting broad content duplication

above = sim_scaled >= similarity_cutoff

proportion = float(np.sum(above) / sim_scaled.size)

This method counts how many section pairs exceed a meaningful similarity threshold. Useful when determining whether much of the content between two pages is overlapping—not just isolated sections. This is valuable for identifying duplicate content clusters or redundant coverage within a site.

5. average_pairwise — full matrix averaging

return float(np.mean(sim_scaled))

This computes the mean of all section pair similarities—essentially giving a holistic overlap score. This is useful in high-level clustering or page grouping tasks where the goal is to understand broad relational patterns rather than pinpoint specific overlap sections.

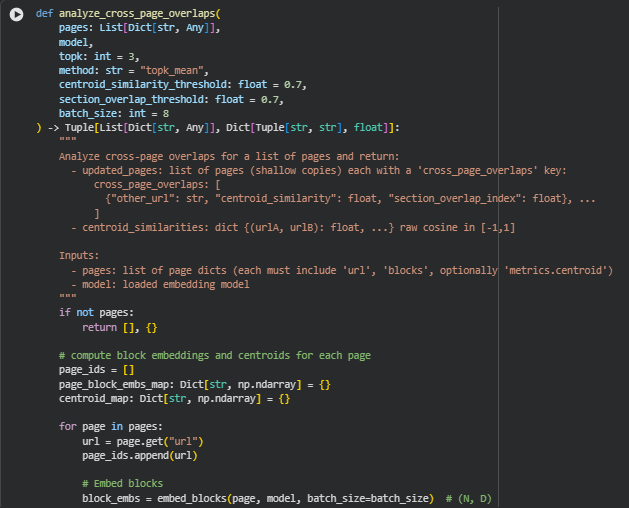

Function: analyze_cross_page_overlaps

Summary

The analyze_cross_page_overlaps function performs multi-page topical overlap analysis, enabling a complete view of how pages relate to each other semantically and structurally. It evaluates pages from two complementary perspectives:

· Centroid-Level Similarity Reveals whether two pages share an overall topical direction. This is a high-level similarity measure calculated from page-level centroid embeddings.

· Section-Level Overlap Index Captures how individual content blocks (sections) between two pages overlap. This detects subtle competition such as duplicated subsections, repeated explanations, or shared intent patterns.

This enables actionable insight into content duplication, topical redundancy across a site, and opportunities for refinement of site architecture or content segmentation.

Key Code Explanations

1. Building Embeddings and Centroids for All Pages

block_embs = embed_blocks(page, model, batch_size=batch_size)

page_block_embs_map[url] = block_embs