
The internet has undergone several seismic shifts since its inception. What began as a collection of static HTML pages evolved into an interconnected web of dynamic platforms, multimedia, and real-time interactions. Social networks, cloud computing, and AI-driven search engines have transformed it into a living ecosystem of data that is constantly expanding and reshaping itself. Yet despite this remarkable growth, one fundamental challenge persists: comprehension. Search engines and algorithms, while more advanced than ever, still rely heavily on fragmented signals such as keywords, backlinks, and engagement metrics rather than a true grasp of meaning. For humans, this means results that can feel shallow, misaligned, or incomplete. For machines, it means a lack of genuine contextual understanding.

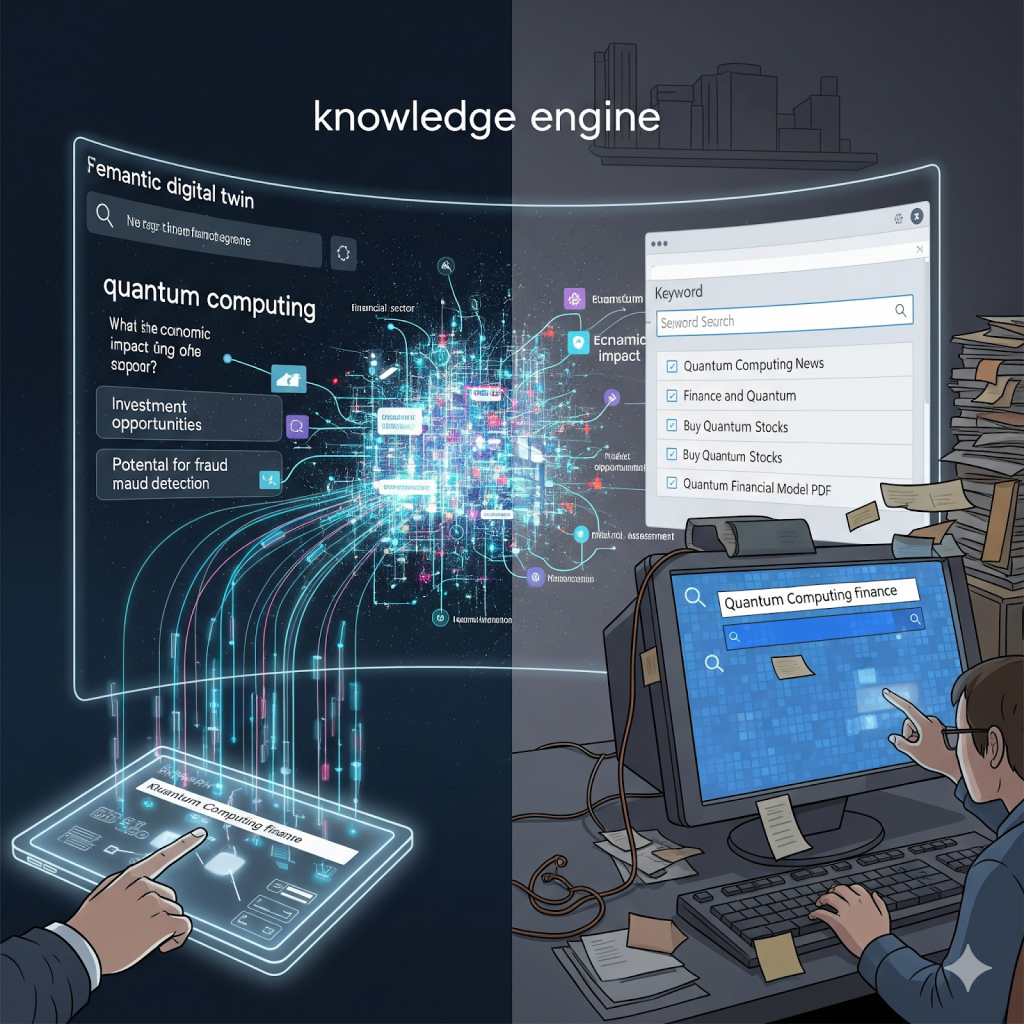

Enter the concept of a Neural Semantic Map of the Internet—a digital twin of the web designed not just to index information but to continuously learn, connect, and contextualize knowledge at both human and machine levels. Unlike traditional search infrastructure, a semantic digital twin is not static. It adapts in real time, mapping how ideas, entities, and relationships evolve across global conversations, publications, and datasets. Think of it as a living, breathing model of the internet’s knowledge layer—capable of both mirroring its present and predicting its future.

This vision isn’t about building another search engine. It’s about creating a real-time semantic fabric that unifies the world’s information into an understandable, navigable structure—bridging human meaning with machine precision. Such a system would allow researchers to uncover hidden knowledge pathways, businesses to anticipate shifts in consumer intent, and individuals to access information not as disconnected fragments, but as part of an evolving story.

The thesis of this paper is simple yet transformative: a global semantic digital twin can redefine how we search, manage knowledge, and design predictive SEO strategies. By aligning the way machines process data with the way humans interpret meaning, we can move beyond the limits of algorithm-bound search toward a more intelligent, intuitive internet.

The Problem: Why Current Search and SEO Are Broken

Search is the backbone of how we access information today, but the system is showing cracks. Both users and brands feel the strain as the landscape grows noisier, more fragmented, and harder to navigate. The very tools designed to organize the world’s knowledge now face problems of scale, complexity, and intent recognition.

Information Overload

Every day, millions of new pages are created across websites, blogs, social platforms, and e-commerce listings. On paper, this democratizes knowledge. In practice, it buries meaningful information under mountains of noise. A user searching for “best supplements for energy” might encounter thousands of results—affiliate listicles, sponsored posts, outdated advice, and scientific studies mixed without clear distinction. The signal-to-noise ratio has collapsed, leaving searchers overwhelmed rather than informed.

Reactive SEO

For brands, the challenge is different but equally damaging. Search optimization has become a reactive game. Instead of building enduring value, companies often scramble to keep pace with Google’s latest algorithm shifts. When a major update rolls out, strategies that worked yesterday can collapse overnight. Businesses pour resources into patchwork fixes, chasing rankings instead of anticipating what the search ecosystem truly values. This reactive cycle drains budgets and erodes trust in SEO as a stable growth channel.

Fragmented Knowledge

Another flaw lies in how information is organized. Entities, relationships, and contexts exist naturally—products are tied to reviews, people to achievements, companies to industries—but search engines often fail to connect these dots holistically. A query about a medication, for instance, might return separate results for brand names, side effects, and clinical trials, rather than weaving them into a connected, contextual understanding. Users end up doing the work of stitching together knowledge that should already be integrated.

Machine Limitations

At its core, SEO is still built around machines that index keywords, not meaning. Advances in natural language processing have narrowed the gap, but algorithms still struggle with evolving context and human nuance. A search engine can recognize the word “apple,” yet distinguishing between the fruit, the tech company, or a metaphor for health depends on context that machines often misread. Intent, the real driver behind a search, is too often guessed rather than understood.
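To make the “apple” problem concrete, here is a minimal sketch of a simplified Lesk-style disambiguator: each sense gets a signature of typical context words, and the sense whose signature overlaps most with the sentence wins. The sense inventory and signature words are hypothetical and hand-picked; real systems learn such signals from data.

```python
# Hypothetical sense inventory: hand-written signature words for each sense
# of "apple". Real systems would learn these associations from data.
SENSES = {
    "fruit": {"eat", "tree", "juice", "orchard", "pie", "fruit", "healthy"},
    "company": {"iphone", "mac", "stock", "ceo", "launch", "ios", "shares"},
}

def disambiguate(sentence: str, senses: dict[str, set[str]] = SENSES) -> str:
    """Pick the sense whose signature shares the most words with the sentence
    (a simplified Lesk-style overlap count)."""
    context = {w.strip(".,!?").lower() for w in sentence.split()}
    # max() returns the first sense on ties, so ties fall back to dict order.
    return max(senses, key=lambda s: len(senses[s] & context))

print(disambiguate("Apple shares rose after the iPhone launch"))  # company
print(disambiguate("She baked an apple pie with fruit from the orchard"))  # fruit
```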

A Moving Target: Algorithm Disruptions

The unpredictability of algorithm changes highlights these limitations. Consider Google’s BERT update, which sought to better interpret natural language queries, or the more recent Helpful Content Update, aimed at rewarding genuinely useful pages. Although positive in intent, such shifts can upend established SEO strategies overnight. Sites built on keyword stuffing or thin content see their rankings nosedive, while even well-intentioned sites struggle to understand why their visibility changed. The constant turbulence reflects an underlying truth: search engines are still learning how to interpret meaning at scale, and businesses often become collateral damage in the process.

Enter Neural Semantic Maps: What They Are

The internet today is more than a collection of pages—it is a living, breathing network of meanings, relationships, and contexts. To navigate this ever-changing landscape, researchers and technologists are developing Neural Semantic Maps, a next-generation framework that functions as a global semantic digital twin of the web. Unlike traditional search indexes that primarily catalog keywords and links, Neural Semantic Maps interpret the deeper relationships between concepts, people, and information.

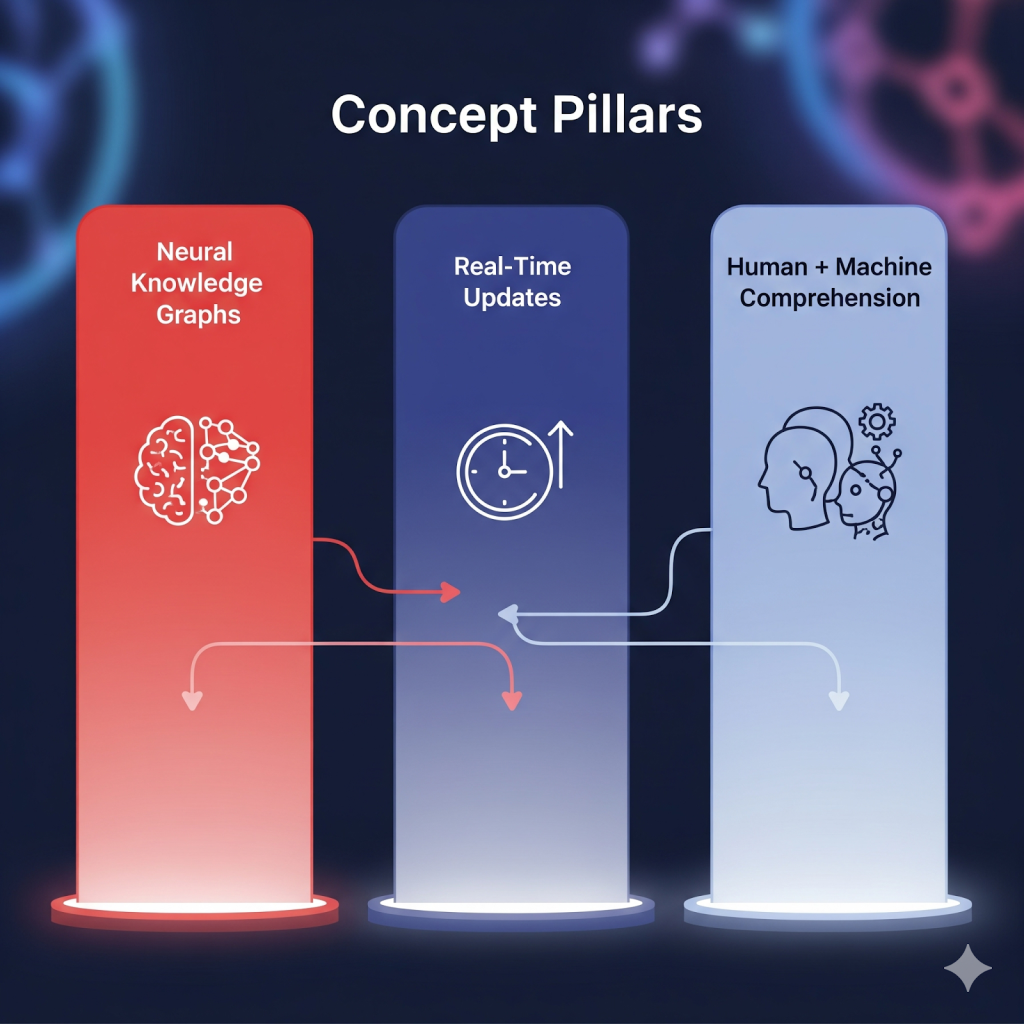

At their core, Neural Semantic Maps are built on three key components:

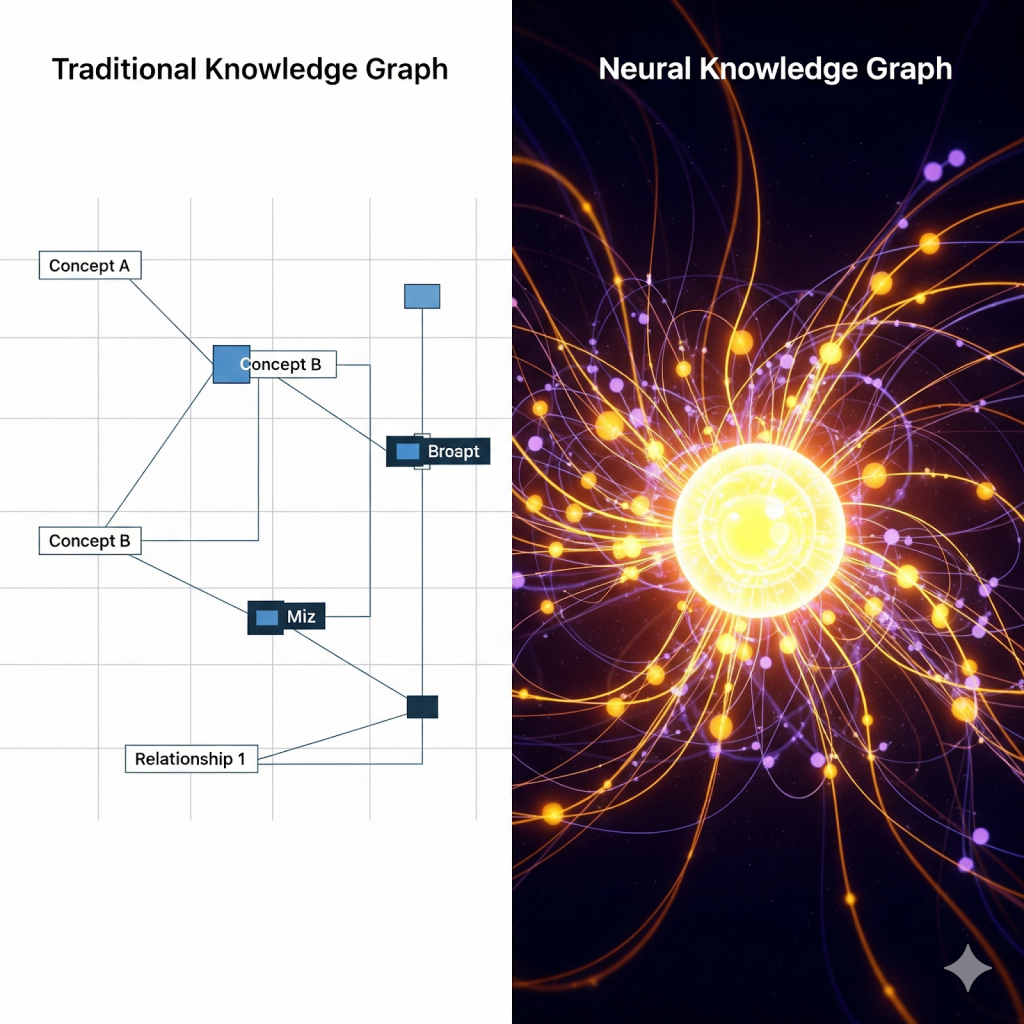

1. Neural Knowledge Graphs

Traditional knowledge graphs, like Google’s Knowledge Graph, link entities (people, places, organizations) to one another. Neural Knowledge Graphs push this further by introducing machine learning and AI-driven reasoning. Instead of just stating that “Tesla” is connected to “Elon Musk,” a neural graph understands how the relationship shifts depending on context—whether the user is searching about electric vehicles, stock markets, or innovation history. These connections are not static but dynamically weighted, enabling the graph to learn and adapt to new data patterns.
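A minimal sketch of context-dependent edge weights, assuming a toy in-memory graph: the `ContextualGraph` class, the context labels, and all weights are hypothetical and set by hand, whereas a neural knowledge graph would learn them from data. It shows how the same “Tesla” node can rank different neighbours depending on the query context.

```python
from collections import defaultdict

class ContextualGraph:
    """Toy graph in which each edge carries a separate weight per context.
    Weights are set by hand here; a neural knowledge graph would learn them."""

    def __init__(self) -> None:
        # edges[source][target][context] -> weight
        self.edges = defaultdict(lambda: defaultdict(dict))

    def add_edge(self, source: str, target: str, context: str, weight: float) -> None:
        self.edges[source][target][context] = weight

    def related(self, source: str, context: str, top_k: int = 3) -> list[tuple[str, float]]:
        """Return neighbours of `source` ranked by their weight in `context`,
        dropping neighbours with no weight in that context."""
        scored = [
            (target, contexts.get(context, 0.0))
            for target, contexts in self.edges[source].items()
        ]
        scored = [pair for pair in scored if pair[1] > 0]
        return sorted(scored, key=lambda p: p[1], reverse=True)[:top_k]

g = ContextualGraph()
g.add_edge("Tesla", "Elon Musk", "stock market", 0.9)
g.add_edge("Tesla", "Elon Musk", "electric vehicles", 0.4)
g.add_edge("Tesla", "battery technology", "electric vehicles", 0.8)
g.add_edge("Tesla", "TSLA share price", "stock market", 0.7)

print(g.related("Tesla", "electric vehicles"))
# [('battery technology', 0.8), ('Elon Musk', 0.4)]
print(g.related("Tesla", "stock market"))
# [('Elon Musk', 0.9), ('TSLA share price', 0.7)]
```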

2. Real-Time Updates

The digital world doesn’t stand still, and neither do Neural Semantic Maps. They are designed as living systems that constantly evolve with the flow of new content, news, and user interactions. Think of it as a semantic layer that continuously mirrors the live internet. This allows for immediate integration of new knowledge—whether it’s a scientific discovery, a trending cultural reference, or a breaking news story—ensuring that the map reflects the most current state of meaning across the web.

3. Human + Machine Comprehension

Perhaps the most revolutionary element is the blend of machine intelligence with human-like understanding. Neural Semantic Maps don’t just recognize entities and facts; they interpret nuance, tone, sentiment, and context. For example, they can distinguish between a sarcastic tweet about a product and a genuine customer review. This dual comprehension bridges the gap between raw data processing and meaningful interpretation, making digital interactions far more intuitive.

An Analogy: Google Maps for Meaning

The easiest way to picture Neural Semantic Maps is through analogy. Just as Google Maps charts the physical world—roads, routes, and traffic—Neural Semantic Maps chart the world of meaning. Instead of telling you how to get from New York to Boston, they tell you how ideas, concepts, and narratives connect, diverge, and evolve. In other words, they make sense of the “semantic terrain” of the web.
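To push the analogy one step further, “route finding” between concepts can be sketched as a shortest-path search over a concept graph. The graph below is hypothetical, and plain breadth-first search (fewest hops) stands in for whatever weighted routing a real semantic map might use.

```python
from collections import deque
from typing import Optional

# Hypothetical concept graph (undirected adjacency lists), for illustration only.
CONCEPTS = {
    "electric vehicles": ["batteries", "Tesla", "charging stations"],
    "batteries": ["electric vehicles", "lithium"],
    "lithium": ["batteries", "mining"],
    "mining": ["lithium", "supply chains"],
    "Tesla": ["electric vehicles"],
    "charging stations": ["electric vehicles", "power grid"],
    "power grid": ["charging stations"],
    "supply chains": ["mining"],
}

def concept_route(graph: dict[str, list[str]], start: str, goal: str) -> Optional[list[str]]:
    """Breadth-first search: return the path with the fewest hops, or None."""
    previous = {start: None}  # node -> node it was first reached from
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            path = []
            while node is not None:  # walk back to the start
                path.append(node)
                node = previous[node]
            return path[::-1]
        for neighbour in graph.get(node, []):
            if neighbour not in previous:
                previous[neighbour] = node
                queue.append(neighbour)
    return None

print(concept_route(CONCEPTS, "Tesla", "supply chains"))
# ['Tesla', 'electric vehicles', 'batteries', 'lithium', 'mining', 'supply chains']
```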

Beyond Traditional Knowledge Graphs

While Google’s Knowledge Graph has been a milestone in structuring web information, it primarily focuses on linking facts and entities. Neural Semantic Maps go much further. They add predictive and semantic intelligence, meaning they don’t just record what exists—they anticipate what might emerge. This predictive layer can forecast shifts in meaning, detect emerging narratives, and adapt to evolving human language, including slang, cultural expressions, and domain-specific jargon.

Neural Semantic Maps transform the web into a living semantic ecosystem. They move beyond static databases of facts and into the realm of dynamic, predictive, and contextually rich intelligence—offering a foundation for the next era of search, AI interaction, and digital comprehension.

How Neural Semantic Maps Work

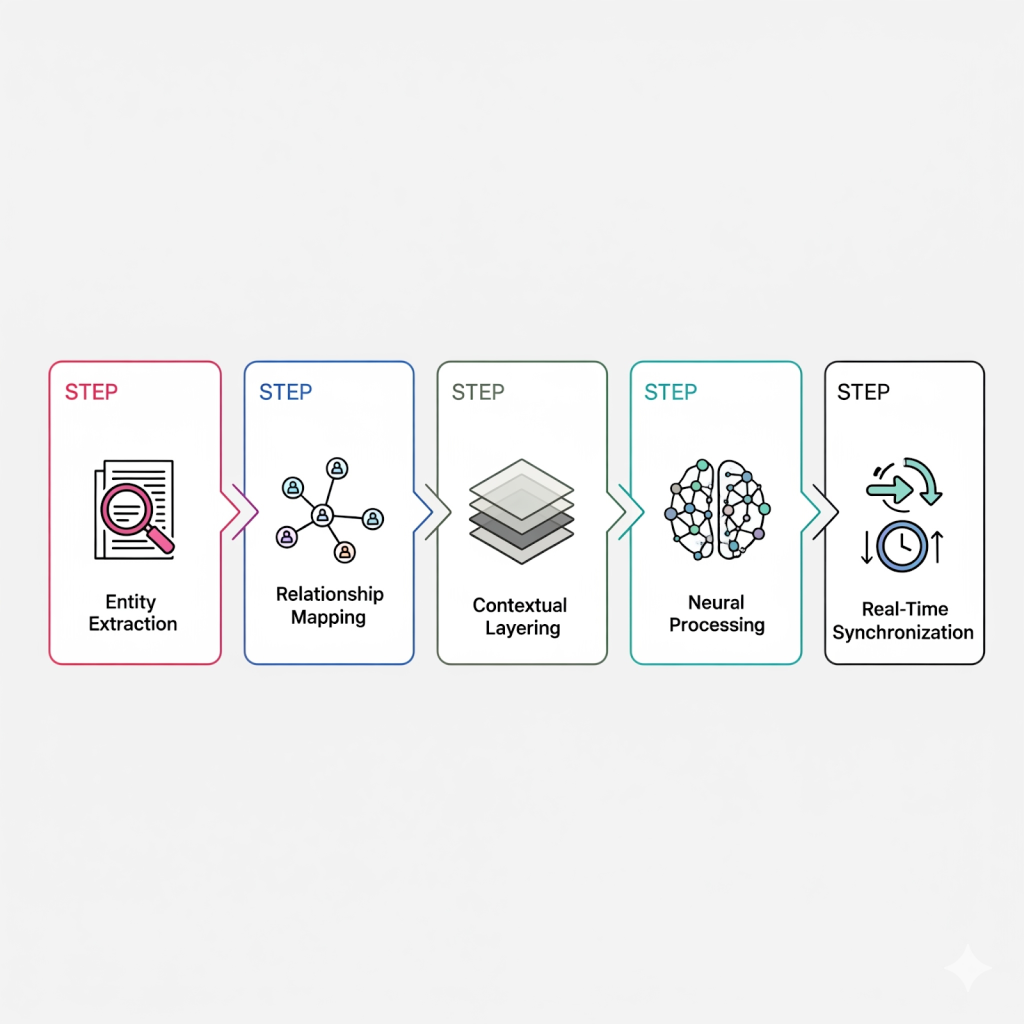

Neural semantic maps are at the heart of next-generation SEO because they do more than track keywords—they model meaning. Instead of treating words as isolated tokens, they interpret people, places, events, and concepts as dynamic entities in constant relationship. This process unfolds in five interconnected steps that transform raw online content into actionable intelligence.

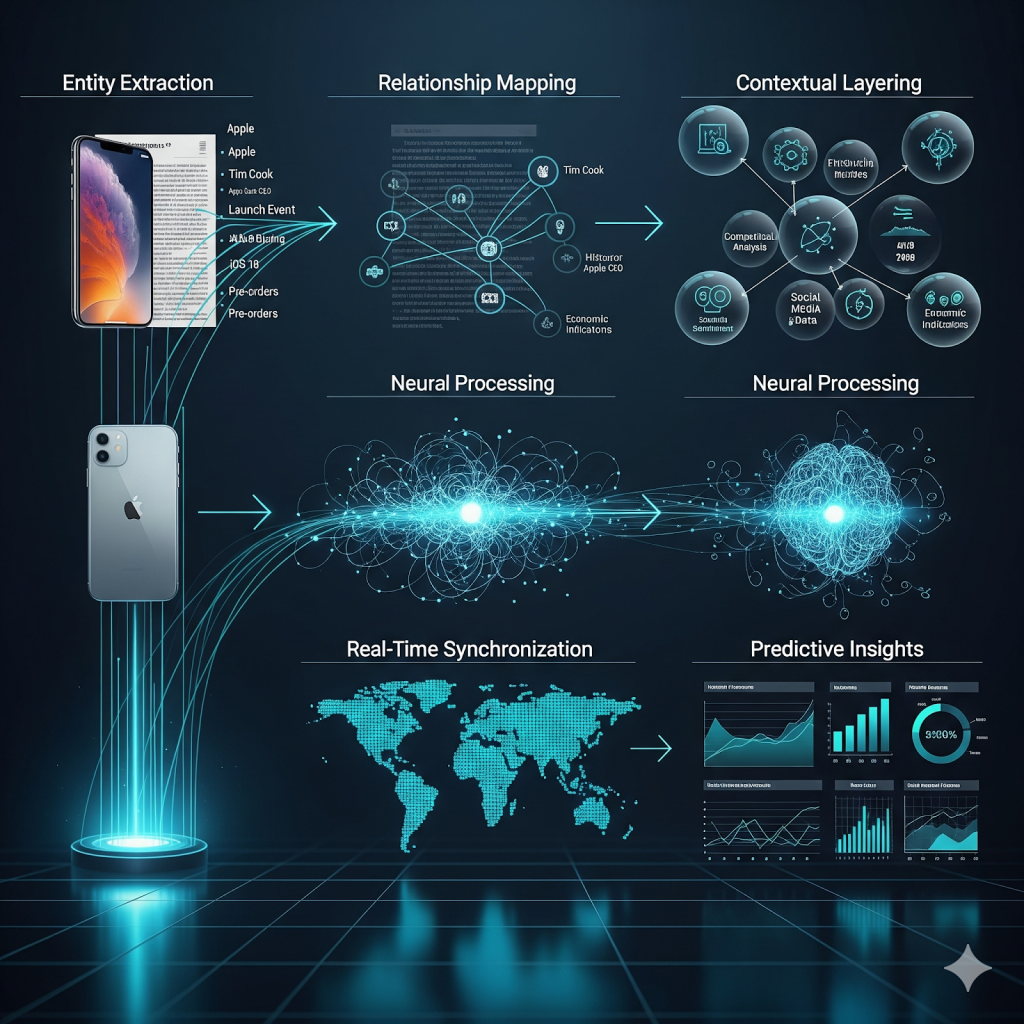

Step 1: Entity Extraction

The first stage begins with entity extraction, where algorithms scan content to identify key elements: people, brands, organizations, locations, products, and abstract concepts. Think of this as creating the “cast of characters” for any piece of digital content. For example, in a news article about Apple launching a new iPhone in San Francisco, the system instantly recognizes “Apple” (brand), “iPhone” (product), and “San Francisco” (location). By isolating entities, the map builds a foundation for more complex analysis.
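A minimal sketch of this step, assuming a small hand-built gazetteer (the `GAZETTEER` dictionary is hypothetical): it matches known names in the text and tags each with a type. Production entity extraction usually relies on trained named-entity recognition models rather than a fixed list.

```python
import re

# Hypothetical gazetteer: known entity names mapped to entity types.
GAZETTEER = {
    "Apple": "brand",
    "iPhone": "product",
    "San Francisco": "location",
    "Samsung": "brand",
}

def extract_entities(text: str, gazetteer: dict[str, str] = GAZETTEER) -> list[tuple[str, str]]:
    """Return (entity, type) pairs in the order they appear in `text`."""
    # Try longer names first so multi-word names match as a whole.
    names = sorted(gazetteer, key=len, reverse=True)
    pattern = re.compile(r"\b(" + "|".join(map(re.escape, names)) + r")\b")
    return [(m.group(1), gazetteer[m.group(1)]) for m in pattern.finditer(text)]

print(extract_entities("Apple is launching a new iPhone in San Francisco."))
# [('Apple', 'brand'), ('iPhone', 'product'), ('San Francisco', 'location')]
```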

Step 2: Relationship Mapping

Once entities are identified, the system connects them. Relationship mapping reveals how these entities interact within and across different contexts. Apple isn’t just a standalone brand—it’s tied to technology, consumer electronics, innovation events, and competitor brands like Samsung. This interconnected web of meaning enables search engines to understand not just what content contains, but how it fits into larger knowledge structures.
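One simple way to bootstrap relationship mapping is to count co-occurrences: entities that repeatedly appear in the same documents get an edge. The sketch below assumes entity sets produced by the previous step (the `docs` data is made up) and a hypothetical `min_count` threshold. Co-occurrence signals association only; it does not say what kind of relationship links the two entities.

```python
from collections import Counter
from itertools import combinations

def cooccurrence_edges(documents: list[set[str]], min_count: int = 2) -> Counter:
    """Count how often each pair of entities appears in the same document and
    keep only pairs seen at least `min_count` times."""
    counts = Counter()
    for entities in documents:
        # sorted() gives each unordered pair a single canonical key.
        for pair in combinations(sorted(entities), 2):
            counts[pair] += 1
    return Counter({pair: n for pair, n in counts.items() if n >= min_count})

# Hypothetical entity sets, as if produced by an extraction step.
docs = [
    {"Apple", "iPhone", "Samsung"},
    {"Apple", "iPhone", "consumer electronics"},
    {"Samsung", "consumer electronics", "Apple"},
    {"Apple", "iPhone"},
]
for (a, b), n in cooccurrence_edges(docs).most_common():
    print(f"{a} -- {b}: {n}")
```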

Step 3: Contextual Layering

Meaning doesn’t exist in a vacuum. That’s where contextual layering comes in. The system adds metadata such as sentiment (positive, negative, neutral), time (current, historical, predictive), geography (local, national, global), and intent (informational, transactional, navigational). For instance, an article about Apple’s iPhone launch may carry excitement in U.S. markets but skepticism in European outlets. These layered dimensions allow semantic maps to deliver a richer, more precise interpretation of how audiences engage with content.
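The layered dimensions can be sketched as a simple record attached to each piece of content. The `ContextLayer` fields mirror the four dimensions named above, while the cue-word lists and the rule-based `classify_intent` helper are hypothetical stand-ins for trained classifiers.

```python
from dataclasses import dataclass

@dataclass
class ContextLayer:
    """Metadata attached to one piece of content (field names are illustrative)."""
    sentiment: str  # "positive" | "negative" | "neutral"
    time: str       # "current" | "historical" | "predictive"
    geography: str  # "local" | "national" | "global"
    intent: str     # "informational" | "transactional" | "navigational"

# Hypothetical cue words; real systems would use trained classifiers.
TRANSACTIONAL = {"buy", "preorder", "price", "deal", "order"}
NAVIGATIONAL = {"login", "website", "official", "homepage"}

def classify_intent(query: str) -> str:
    """Rule-based intent guess: transactional cues win, then navigational."""
    words = set(query.lower().split())
    if words & TRANSACTIONAL:
        return "transactional"
    if words & NAVIGATIONAL:
        return "navigational"
    return "informational"

layer = ContextLayer(
    sentiment="positive",
    time="current",
    geography="global",
    intent=classify_intent("iphone preorder price"),
)
print(layer)
```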

Step 4: Neural Processing

Here is where deep learning takes over. Neural networks analyze these multi-layered connections and anticipate changes in meaning or importance. If consumer sentiment shifts from excitement to frustration after a product recall, the system predicts how search trends might evolve. Neural processing goes beyond static indexing—it continuously “learns,” refining its understanding of what matters most and signaling potential future patterns.
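The prediction step is described as neural, but the underlying idea of extrapolating a trend can be illustrated with a much simpler, swapped-in technique: Holt's linear (double) exponential smoothing, which tracks a level and a trend. The search counts and the `alpha`/`beta` smoothing values below are hypothetical.

```python
def holt_forecast(series: list[float], alpha: float = 0.5, beta: float = 0.5,
                  horizon: int = 3) -> list[float]:
    """Holt's linear exponential smoothing: update a level and a trend for each
    observation, then extrapolate `horizon` steps ahead."""
    if len(series) < 2:
        raise ValueError("need at least two observations")
    level, trend = series[0], series[1] - series[0]
    for value in series[1:]:
        prev_level = level
        level = alpha * value + (1 - alpha) * (level + trend)
        trend = beta * (level - prev_level) + (1 - beta) * trend
    return [level + h * trend for h in range(1, horizon + 1)]

# Hypothetical daily search counts for a query such as "iphone preorder".
daily_searches = [100, 120, 150, 190, 240]
print([round(x) for x in holt_forecast(daily_searches)])  # [260, 296, 333]
```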

Step 5: Real-Time Synchronization

Finally, neural semantic maps operate in real time. As new content floods the web—tweets, blogs, breaking news—the system updates itself automatically. This continuous synchronization ensures businesses and search engines work with the freshest insights. Rather than relying on stale snapshots, the semantic map evolves in parallel with online discourse.
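A minimal sketch of continuous synchronization, assuming each new observation of a relationship adds weight while older evidence fades with an exponential half-life. The `DecayingEdgeStore` class and the 24-hour half-life are hypothetical choices for illustration.

```python
import math

class DecayingEdgeStore:
    """Edge weights that fade over time unless fresh evidence arrives.
    The half-life is a hypothetical tuning parameter."""

    def __init__(self, half_life_hours: float = 24.0) -> None:
        self.decay_rate = math.log(2) / half_life_hours
        # edge -> (weight at last update, hour of last update)
        self.weights: dict[tuple[str, str], tuple[float, float]] = {}

    def _decayed(self, edge: tuple[str, str], now: float) -> float:
        weight, updated = self.weights.get(edge, (0.0, now))
        return weight * math.exp(-self.decay_rate * (now - updated))

    def observe(self, source: str, target: str, now: float, strength: float = 1.0) -> None:
        """Apply decay up to `now`, then add the new observation."""
        edge = (source, target)
        self.weights[edge] = (self._decayed(edge, now) + strength, now)

    def weight(self, source: str, target: str, now: float) -> float:
        return self._decayed((source, target), now)

store = DecayingEdgeStore(half_life_hours=24)
store.observe("Apple", "iPhone launch", now=0)
store.observe("Apple", "iPhone launch", now=0)
print(round(store.weight("Apple", "iPhone launch", now=24), 3))  # 1.0
print(round(store.weight("Apple", "iPhone launch", now=48), 3))  # 0.5
```

Decay keeps stale relationships from dominating without a full rebuild of the map; each update touches only the edge that was observed.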

Example Workflow in Action

Imagine a breaking news story: “Apple unveils new iPhone at San Francisco launch event.” Immediately, the neural semantic map extracts the entities—Apple, iPhone, San Francisco, launch event. It then maps relationships (Apple → consumer electronics → competitors like Samsung). Contextual layers are added: high global excitement, positive sentiment, and transactional intent. Neural processing kicks in, predicting a surge in searches for “iPhone preorder,” “Apple event highlights,” and “best smartphones 2025.” Before Google’s algorithm fully shifts to reflect these changes, businesses plugged into the system are already alerted. Tech retailers, bloggers, and affiliate marketers can update content instantly, capturing search visibility in real time.

Neural semantic maps turn scattered digital noise into a structured, predictive model of meaning. They don’t just explain what content says—they forecast how it will shape attention, intent, and search behavior tomorrow.

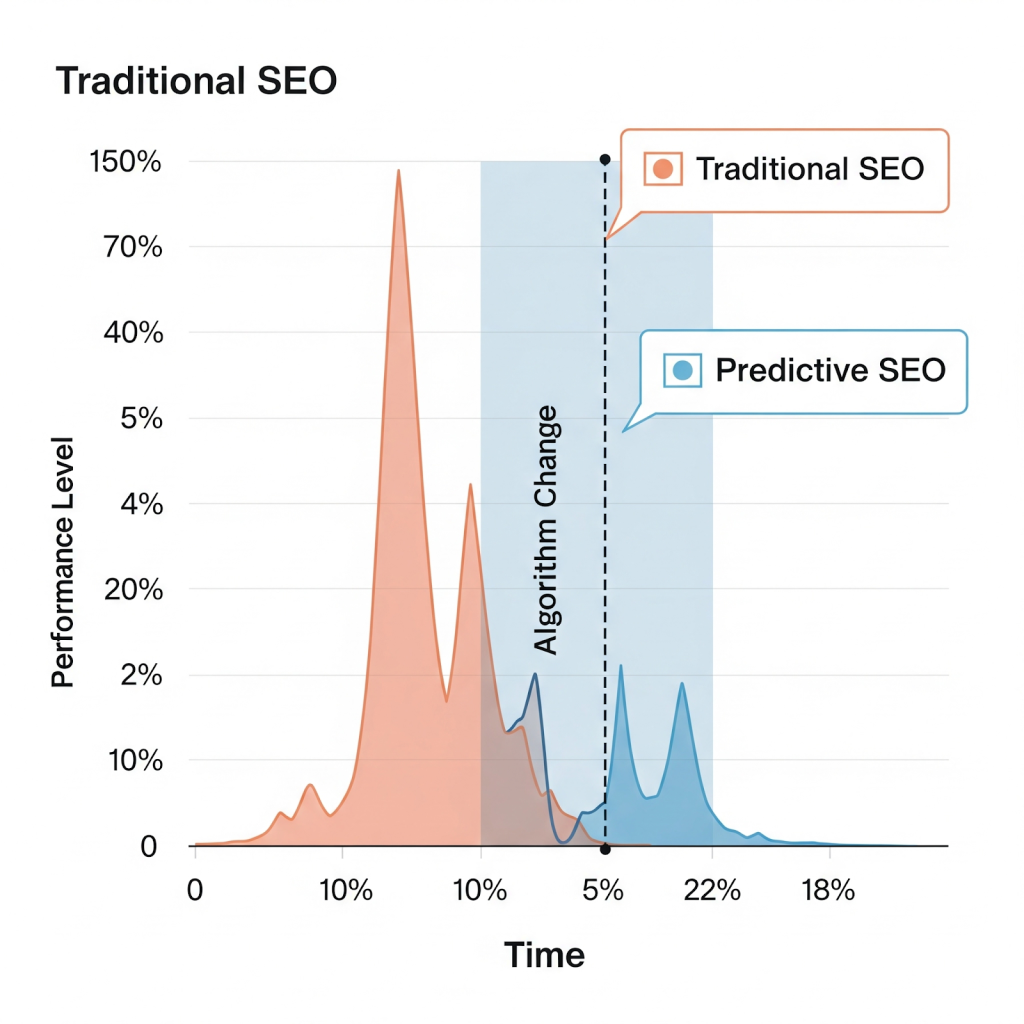

Predictive SEO: Optimizing Before the Algorithm Shifts

For years, SEO has been mostly reactive. Marketers would monitor Google’s algorithm updates, wait for the fallout, and then adjust strategies to recover rankings or maintain visibility. While this approach works, it leaves brands playing catch-up, often losing valuable traffic during the adjustment period.

Predictive SEO flips this model. Instead of waiting for updates, it anticipates them. By leveraging Neural Knowledge Graphs and semantic models, predictive SEO identifies how search engines are likely to prioritize entities, topics, and intent signals before those shifts become mainstream.

Take Neural Knowledge Graphs, for example. They analyze connections between entities—people, places, topics, and emerging trends—and forecast how these relationships might influence ranking signals. If Google begins weighting certain contextual links or authority signals more heavily, predictive SEO can detect these patterns early, allowing brands to adjust content and linking strategies in advance.

Imagine an upcoming global conversation around AI legislation. A Neural Semantic Map could detect that terms like “AI compliance,” “regulatory frameworks,” and “data transparency” are starting to cluster around this entity. A forward-looking brand could publish authoritative articles, case studies, or explainer content on these topics before competitors even recognize the shift. By the time the algorithm recalibrates, the brand already owns topical authority, securing early visibility and long-term positioning.
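Detecting that terms are “starting to cluster” around an entity can be approximated by comparing co-occurrence counts in an earlier and a recent window. In this sketch the documents, the +1 smoothing, and the `min_new`/`min_ratio` thresholds are all hypothetical; a real system would also normalize for differences in window size.

```python
from collections import Counter

def emerging_terms(seed: str, old_docs: list[set[str]], new_docs: list[set[str]],
                   min_new: int = 2, min_ratio: float = 2.0) -> list[str]:
    """Terms that co-occur with `seed` noticeably more often in the recent
    window than in the earlier one. Thresholds are hypothetical."""
    def counts(docs: list[set[str]]) -> Counter:
        c = Counter()
        for terms in docs:
            if seed in terms:
                c.update(terms - {seed})
        return c

    old, new = counts(old_docs), counts(new_docs)
    rising = []
    for term, n in new.items():
        # +1 smoothing so terms unseen in the old window don't divide by zero.
        ratio = n / (old[term] + 1)
        if n >= min_new and ratio >= min_ratio:
            rising.append(term)
    return sorted(rising)

# Hypothetical term sets extracted from documents in two time windows.
old_docs = [
    {"AI legislation", "innovation"},
    {"AI legislation", "innovation", "ethics"},
]
new_docs = [
    {"AI legislation", "AI compliance", "data transparency"},
    {"AI legislation", "AI compliance", "regulatory frameworks"},
    {"AI legislation", "regulatory frameworks", "data transparency", "innovation"},
]
print(emerging_terms("AI legislation", old_docs, new_docs))
# ['AI compliance', 'data transparency', 'regulatory frameworks']
```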

The benefits are hard to ignore:

- Faster adaptation: Brands don’t scramble after updates—they’re already aligned with the evolving search signals.

- Long-term relevance: Instead of chasing keywords, content is mapped to durable entity relationships and user intent, ensuring it stays valuable even as ranking criteria evolve.

- Reduced dependence on keyword stuffing: Predictive SEO prioritizes semantic depth and context over repetitive optimization tricks, resulting in more natural, authoritative content.

In essence, predictive SEO transforms search optimization from a reactive scramble into a proactive strategy. By reading the “signals before the signals,” brands can future-proof their visibility and maintain an edge in increasingly competitive digital landscapes.

Business & Marketing Applications

The rise of Neural Semantic Maps (NSMs) is not just a technical breakthrough; it has profound implications for how businesses approach digital marketing. By understanding how search engines interpret meaning, intent, and context, brands can create strategies that are not only future-proof but also sharper than traditional keyword-driven methods.

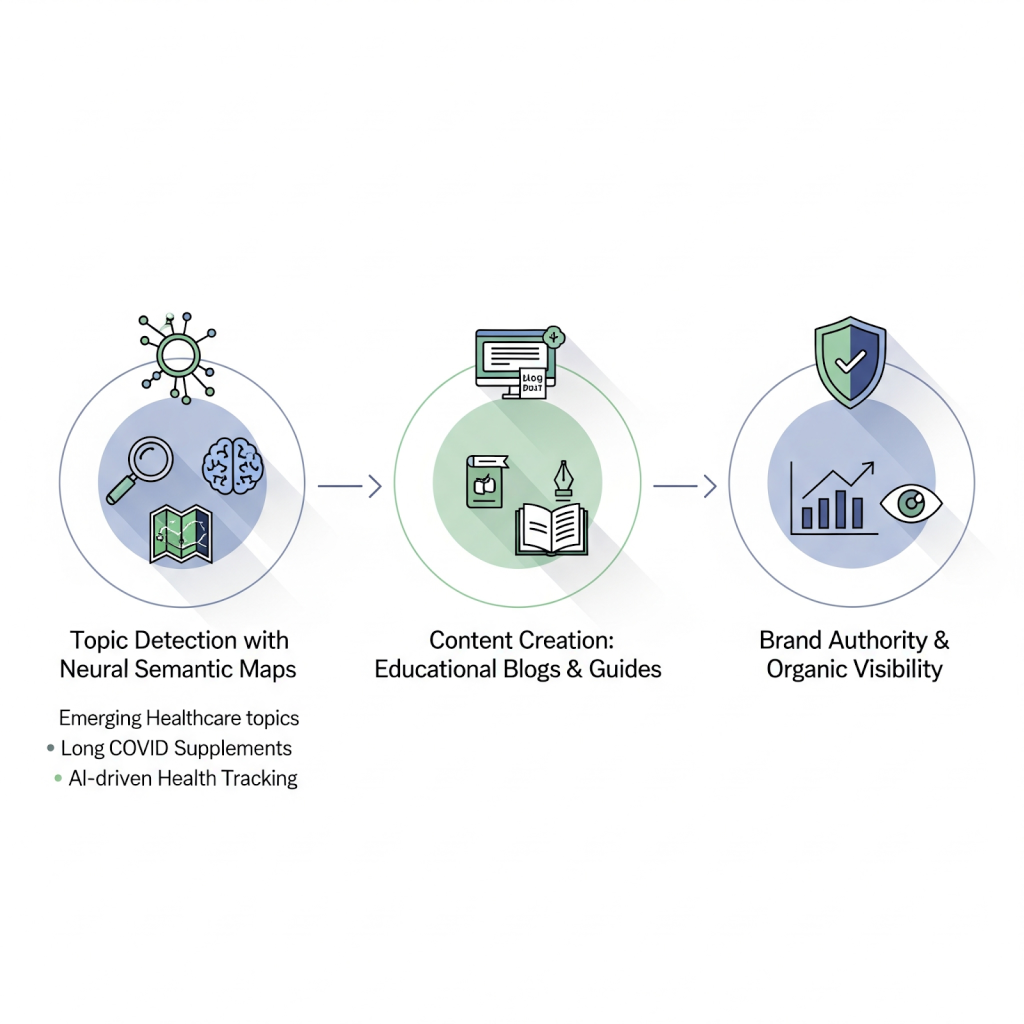

Content Strategy: From Keywords to Predictive Signals

For years, content marketing revolved around targeting search volume and keyword density. But with semantic modeling, brands can now craft articles, blogs, and landing pages that anticipate search trends before they peak. Instead of chasing traffic, businesses can position themselves as thought leaders by answering the “next” set of questions consumers will ask. For example, a financial services brand could identify rising interest in “AI-driven retirement planning” and build content before it becomes mainstream, capturing attention early.

Brand Authority in Entity-Based Search

Search engines are steadily shifting from keyword indexes toward entity recognition. This means Google doesn’t just see a company as a name—it connects that brand to concepts, industries, and credibility markers. By establishing dominance in entity-based search, companies can become the authoritative “node” in a semantic network. If competitors are slow to act, early adopters can cement authority around key entities, making it harder for others to dethrone them later.
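One concrete, widely used step toward entity-based visibility is publishing schema.org `Organization` markup as JSON-LD, with `sameAs` links connecting the brand to its profiles elsewhere. The sketch below only generates such a block; the company name, description, and URLs are placeholders, and markup alone does not guarantee that a search engine will treat the brand as an authoritative entity.

```python
import json

def organization_jsonld(name: str, url: str, same_as: list[str], description: str) -> str:
    """Return a schema.org Organization object serialized as JSON-LD, suitable
    for embedding in a <script type="application/ld+json"> tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "description": description,
        # sameAs points to other pages about the same organization, which is
        # meant to help search engines consolidate them into one entity.
        "sameAs": same_as,
    }
    return json.dumps(data, indent=2)

# Placeholder values for illustration only.
print(organization_jsonld(
    name="Example Co",
    url="https://www.example.com",
    same_as=["https://profiles.example.org/example-co",
             "https://directory.example.net/example-co"],
    description="Hypothetical company used for illustration.",
))
```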

E-commerce: Intent-Driven Recommendations

E-commerce platforms often optimize product pages around high-volume keywords, but this misses the nuance of user intent. With semantic analysis, brands can recommend products based on contextual meaning rather than sheer traffic numbers. For example, a skincare company could recognize that shoppers searching for “eco-friendly acne solutions” are not the same as those searching for “natural moisturizers.” By segmenting intent at this granular level, e-commerce businesses can personalize product discovery and increase conversions.

Advertising: Smarter Contextual Placements

In advertising, the ability to understand semantic intent translates to smarter placements. Traditional contextual ads rely on keyword matches, but semantic maps can place ads where the reader’s mindset and the page’s context align. For instance, a travel insurance ad doesn’t need to appear only on pages containing the keyword “travel insurance.” It can also appear on content clusters about “adventure travel safety,” where readers are already thinking about risk and protection. This improves relevance while reducing wasted impressions.
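As a rough sketch of placement by topical similarity rather than exact keyword match, the code below ranks candidate pages by cosine similarity to an ad’s text. Bag-of-words vectors are a crude stand-in for the learned semantic embeddings the text implies, and the ad and page texts are hypothetical. The safety page never contains the phrase “travel insurance,” yet it still ranks first.

```python
import math
from collections import Counter

def bag(text: str) -> Counter:
    """Bag-of-words term counts (a crude stand-in for a semantic embedding)."""
    return Counter(text.lower().split())

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

# Hypothetical ad copy and page texts.
ad = bag("travel insurance covers medical emergencies lost gear on trips")
pages = {
    "adventure travel safety": bag("safety tips for adventure trips medical emergencies gear checklists"),
    "cookie recipes": bag("easy chocolate chip cookie recipes for the weekend"),
}
ranked = sorted(pages, key=lambda p: cosine(ad, pages[p]), reverse=True)
print(ranked)  # ['adventure travel safety', 'cookie recipes']
```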

Customer Insights Across Platforms

One of the biggest advantages of semantic-driven marketing is the ability to decode intent across multiple touchpoints. Instead of siloed data—social media mentions, site search queries, customer reviews—brands can unify these signals into a semantic model of consumer needs. A clothing retailer, for example, may discover that rising discussions around “slow fashion” overlap with searches for “sustainable fabrics,” indicating a shift in consumer mindset. Acting on these insights gives businesses the agility to adapt offerings before demand peaks.

Real-World Scenario: Healthcare Brand Advantage

Consider a healthcare brand specializing in preventative care. Using Neural Semantic Maps, the brand detects emerging semantic clusters around “long COVID supplements” and “AI-driven health tracking.” Instead of waiting for these queries to become high-volume keywords, the brand creates educational blogs, patient guides, and product landing pages tailored to these topics. By the time consumer demand surges, the healthcare company is already established as the go-to authority. This not only drives organic visibility but also builds trust in a sensitive, high-stakes industry.

Technical Foundations: Neural Knowledge Graphs Explained

A Neural Knowledge Graph (NKG) is a next-generation approach to organizing and interpreting information. At its core, it blends two historically separate schools of artificial intelligence. On one side is symbolic AI, which structures information into nodes and edges, much like a traditional knowledge graph. On the other side is connectionist AI, which relies on neural networks to detect patterns, make predictions, and adapt through learning. By merging these, NKGs not only represent knowledge but also reason over it, creating a system that feels less like a static library and more like a living brain.
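To show how a neural component can put numbers on graph edges, here is the scoring function of TransE, a well-known knowledge-graph embedding model in which a true triple should satisfy head + relation ≈ tail. The two-dimensional vectors are hand-picked and hypothetical; a real model learns them from a large set of known triples, and training is omitted here.

```python
import math

# Toy, hand-picked 2-D embeddings (hypothetical). In TransE, a plausible
# triple (head, relation, tail) has head + relation close to tail.
ENTITY = {
    "Vitamin D": (1.0, 0.0),
    "bone strength": (1.0, 1.0),
    "fracture risk": (0.0, 1.0),
}
RELATION = {"improves": (0.0, 1.0)}

def transe_score(head: str, relation: str, tail: str) -> float:
    """Negative Euclidean distance ||h + r - t||; closer to 0 means more plausible."""
    h, r, t = ENTITY[head], RELATION[relation], ENTITY[tail]
    translated = [hi + ri for hi, ri in zip(h, r)]
    return -math.dist(translated, t)

print(transe_score("Vitamin D", "improves", "bone strength"))
print(transe_score("Vitamin D", "improves", "fracture risk"))
```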

Key Advantages

One of the most striking strengths of NKGs is scalability. Traditional graphs struggle to handle billions of entities and trillions of relationships efficiently, but NKGs can scale fluidly, making them suitable for today’s massive, ever-expanding datasets.

Second, they offer the ability to infer new relationships that are not explicitly stated. For example, if the graph knows that “Vitamin D improves bone strength” and “Bone strength reduces fracture risk,” an NKG can connect the dots and suggest “Vitamin D may help reduce fracture risk”—without being directly told. This inference power is what elevates them beyond static mapping.
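The inference in this example can be reproduced with a single hand-written chaining rule over triples. This is a symbolic sketch, not the neural reasoning the text describes, and the rule itself (“improves” followed by “reduces” implies “may reduce”) is a hypothetical assumption.

```python
# Facts as (subject, relation, object) triples, taken from the example above.
FACTS = {
    ("Vitamin D", "improves", "bone strength"),
    ("bone strength", "reduces", "fracture risk"),
}

def infer(facts: set[tuple[str, str, str]]) -> set[tuple[str, str, str]]:
    """Hypothetical chaining rule: if A improves B and B reduces C, then A
    may reduce C. Returns only facts not already stated."""
    inferred = set()
    for a, r1, b in facts:
        for b2, r2, c in facts:
            if r1 == "improves" and r2 == "reduces" and b == b2:
                inferred.add((a, "may reduce", c))
    return inferred - facts

print(infer(FACTS))  # {('Vitamin D', 'may reduce', 'fracture risk')}
```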

Third, NKGs are built on dynamic learning. Unlike traditional graphs that require manual updates, NKGs continuously adapt as new data emerges. They evolve with fresh inputs—whether that’s breaking news, scientific discoveries, or shifting user behavior—ensuring the knowledge remains current.

Comparison to Traditional Graphs

To understand their value, it helps to contrast them with traditional graphs like Wikidata or DBpedia. Those systems rely on explicit statements: Wikidata’s relationships are entered by contributors, and DBpedia’s are extracted from Wikipedia’s structured content. While invaluable as reference databases, they record only what has been stated and do not infer or reweight connections on their own. In contrast, a neural-powered graph evolves. It doesn’t just store knowledge; it interprets and reconfigures itself in real time, learning from context, trends, and continuous feedback loops.

In practical terms, traditional graphs are like encyclopedias—rich but fixed. Neural Knowledge Graphs, however, are more like a living brain, continuously firing and rewiring based on the internet’s pulse. They don’t just record facts; they reason, predict, and adapt.

A Brain-Like Network

The best way to visualize an NKG is as a brain-like network stretched across the digital world. Imagine billions of neurons (entities) firing and connecting, strengthening or weakening pathways based on relevance and context. Just as the human brain refines understanding through experience, an NKG refines knowledge through interaction with streams of online data. The result is a system that can keep pace with the complexity and speed of the modern web, making sense of connections that static models would miss.

In short, Neural Knowledge Graphs are not just databases—they are dynamic, adaptive intelligence frameworks that redefine how machines store, reason, and act on knowledge.

The Future of the Internet with Semantic Digital Twins

The internet is on the verge of a major transformation. Where search engines once served as the primary gateway to knowledge, the future points toward knowledge engines powered by semantic digital twins. Instead of typing a query and sifting through a ranked list of links, users will be able to ask direct questions and receive context-rich, conversational answers tailored to their intent. This shift marks the end of traditional search and the rise of personalized, intelligent knowledge layers.

At the heart of this evolution is augmented intelligence, where humans and machines work together to interpret, analyze, and apply information. Semantic digital twins act as dynamic models of entities—people, organizations, or even entire systems—that can understand relationships, context, and meaning across the web. Imagine not just retrieving data, but collaborating with a digital counterpart that continuously learns and refines its insights alongside you.

However, this new paradigm introduces critical ethical considerations. With knowledge engines synthesizing and shaping responses, the risks of bias, misinformation, and overreliance on algorithmic authority grow. Privacy concerns deepen as digital twins collect and connect vast amounts of personal and organizational data. The challenge will be to balance innovation with safeguards that ensure transparency, accountability, and fairness.

The global impact of semantic digital twins is profound. In academia, they could accelerate research by connecting cross-disciplinary insights at unprecedented speed, reducing duplication, and highlighting unexplored connections. For governments, real-time policy simulations powered by semantic models could support faster, more informed decisions on issues like climate, healthcare, or infrastructure. Businesses will gain predictive foresight, allowing them to anticipate market shifts, consumer behavior, and competitive landscapes, enabling strategies that are proactive rather than reactive.

Looking ahead, the trajectory is clear: within the next decade, semantic digital twins have the potential to supplant search engines as the dominant knowledge layer of the internet. Just as search engines redefined how we access information, semantic twins may redefine how we understand and apply it—shifting the internet from a passive archive of pages to an active, intelligent collaborator in human progress.

Challenges and Limitations

While semantic mapping and next-generation SEO strategies hold enormous potential, they also bring a unique set of challenges that must be acknowledged.

Data Bias

The quality of any semantic map is only as good as the data it is built on. If the source data is biased, incomplete, or skewed toward certain demographics, the resulting maps will reflect and potentially amplify those biases. For example, overrepresentation of Western language patterns could distort how intent is understood globally. This creates ethical concerns, particularly for brands that want to reach diverse audiences without inadvertently excluding or misrepresenting them.

Privacy & Ownership

Another pressing issue revolves around ownership and control of the “semantic twin” — the digital representation of a brand’s or individual’s knowledge ecosystem. Who has the right to build, maintain, or monetize these semantic models? If third-party platforms dominate, businesses may find themselves ceding too much control over their data. On the other hand, developing proprietary systems requires resources that not all companies can afford. Balancing transparency, user privacy, and data sovereignty will be crucial as adoption scales.

Technical Complexity

Designing and maintaining real-time, global semantic systems is no small feat. It requires immense computational power, advanced machine learning models, and ongoing data synchronization across multiple domains. Few organizations possess the infrastructure to do this at scale, which makes accessibility a challenge. Even for enterprises, the cost and complexity of integrating semantic systems into existing digital ecosystems can become a barrier.

Adoption Barrier

Finally, businesses may resist moving beyond the comfort zone of traditional SEO. For many, keyword targeting, link building, and on-page optimization remain familiar, predictable, and measurable strategies. The shift toward semantic networks and intent-driven optimization demands new skills, tools, and mindsets. Without clear case studies and ROI benchmarks, skepticism will persist — slowing widespread adoption of these advanced methods.

In short, the road to semantic-driven SEO is promising but not without hurdles. Addressing these limitations openly will be key to ensuring the technology matures in a way that is fair, transparent, and beneficial for all stakeholders.

Conclusion: Building a Smarter, Predictive Web

Neural Semantic Maps mark a turning point in the way we discover, structure, and interact with knowledge online. Unlike static keyword systems or even traditional link graphs, these advanced models capture the true meaning of information and map relationships with human-like intuition. This shift is more than a technical breakthrough—it redefines how businesses, creators, and audiences connect in an increasingly intelligent digital landscape.

In this environment, predictive SEO is no longer a “nice to have.” It is the backbone of digital competitiveness. Search engines are evolving into anticipatory systems that don’t just react to queries but forecast intent, personalize results, and surface insights before users even articulate them. Brands that cling to outdated SEO tactics will find themselves outpaced by those who embrace semantic-driven strategies designed for tomorrow’s search economy. The winners will be organizations that see SEO not as an afterthought but as an adaptive, predictive framework woven into every touchpoint of their digital presence.

This is the moment for forward-thinking marketers, entrepreneurs, and technology leaders to act. Waiting until predictive search becomes mainstream is a recipe for being left behind. By exploring neural knowledge graph solutions today—whether through pilot projects, partnerships, or in-house innovation—you position your business to ride the crest of this transformation instead of scrambling to catch up.

The long-term vision is nothing short of extraordinary: a web that behaves like a living, breathing intelligence system. Imagine a digital environment where context flows seamlessly, where content isn’t just stored but actively learns, predicts, and evolves alongside human curiosity. Neural Semantic Maps are the scaffolding of that future. They are the connective tissue that will allow the web to shift from being a static archive of pages to becoming a dynamic, adaptive partner in our pursuit of knowledge.

The question is not whether this future is coming—it is how quickly you will decide to step into it.