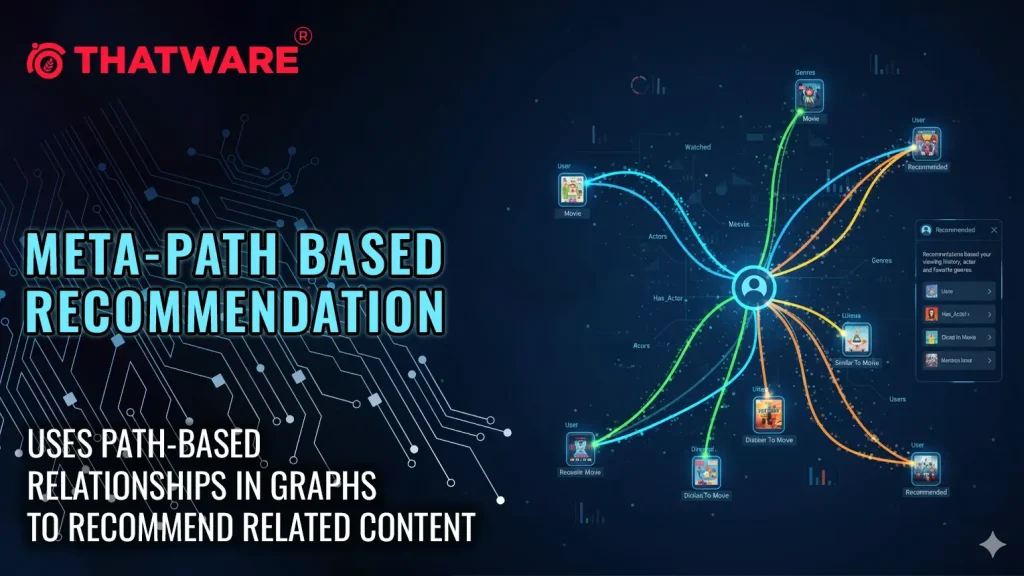

This project introduces a recommendation system designed to surface the most contextually relevant content within a website using graph-based meta-path analysis. By structuring a website’s content into nodes and edges—representing sections, queries, and their semantic relationships—the system identifies natural pathways that reflect how topics connect and flow.

Unlike traditional keyword or similarity-only approaches, the graph structure highlights deeper contextual links. This helps recommendations go beyond surface-level matches and reflect topical relevance and authority. As a result, the system can guide users toward related sections, strengthen internal linking strategies, and uncover hidden relationships between queries and long-form content.

For SEO content strategy, this capability translates into improved discoverability, stronger thematic clustering, and a clearer view of how content supports search intent across multiple dimensions. By visualizing these relationships, decision-makers can optimize both the user journey and the search engine’s understanding of topical authority.

Project Purpose

The project was developed to address a common gap in SEO-driven content strategies: the difficulty of connecting queries, page sections, and topical clusters in a way that reflects real search intent and content authority. Standard approaches often focus only on keyword matching or surface-level similarity, which fails to capture how different parts of a page relate to one another and to external search queries.

By applying graph-based meta-path analysis, the purpose of the system is to:

- Reveal content-query relationships that highlight how well sections of a page respond to specific search intents.

- Strengthen internal linking opportunities by identifying the most natural content connections across long-form documents.

- Enhance topical authority mapping, ensuring that related content forms strong clusters around core themes.

- Support SEO strategy with explainable insights through visual graphs and structured recommendations.

The ultimate goal is to make long-form content more discoverable, more aligned with user search behavior, and easier to optimize for both relevance and authority.

Graph-based Analysis in SEO

In SEO, content is rarely effective when treated as isolated text blocks. Search engines evaluate the strength of a page through the relationships between queries, content sections, and topics. Graph-based analysis represents this ecosystem visually and mathematically:

- Nodes: Queries, content sections, and topics.

- Edges: Relationships showing alignment or similarity between nodes.

This structure allows strategists to see not only which section answers which query but also how different sections reinforce one another to build topical authority. Unlike keyword-only methods, a graph view captures hierarchical and lateral relationships across the page, delivering insights into authority clusters, intent coverage, and structural gaps.
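The following is a minimal sketch of this structure. It uses plain Python dictionaries instead of NetworkX so it runs without extra dependencies. Each node carries a type, and looking up a node's neighbors of a given type is the basic operation that meta-paths build on. The node IDs, types, and weights are hypothetical example data.

```python
from collections import defaultdict

# Hypothetical example data. Each node ID maps to its node type.
NODE_TYPES = {
    "q:seo tools": "query",
    "q:rank tracking": "query",
    "s:intro": "section",
    "s:tool-comparison": "section",
    "t:seo software": "topic",
}

# (node, node, weight) edges, e.g. similarity scores or topic membership.
EDGES = [
    ("q:seo tools", "s:tool-comparison", 0.82),
    ("q:rank tracking", "s:tool-comparison", 0.64),
    ("q:seo tools", "s:intro", 0.41),
    ("s:intro", "t:seo software", 1.0),
    ("s:tool-comparison", "t:seo software", 1.0),
]


def build_typed_graph(node_types, edges):
    """Undirected weighted adjacency map: node -> {neighbor: weight}."""
    adjacency = defaultdict(dict)
    for u, v, weight in edges:
        if u not in node_types or v not in node_types:
            raise KeyError(f"edge ({u!r}, {v!r}) references an unknown node")
        adjacency[u][v] = weight
        adjacency[v][u] = weight
    return adjacency


def neighbors_of_type(adjacency, node_types, node, wanted_type):
    """Neighbors of `node` whose type is `wanted_type`, with edge weights."""
    return {n: w for n, w in adjacency[node].items() if node_types[n] == wanted_type}


graph = build_typed_graph(NODE_TYPES, EDGES)
print(neighbors_of_type(graph, NODE_TYPES, "s:tool-comparison", "query"))
```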

Understanding Meta-paths

A meta-path is a patterned route across the graph that connects multiple node types. In SEO analysis, meta-paths reveal how content performs beyond one-to-one matches:

- Query → Section → Query: Shows which sections are capable of answering multiple queries, indicating high-value content blocks.

- Section → Topic → Section: Groups sections into topical clusters, highlighting opportunities for internal reinforcement.

- Query → Section → Topic: Connects user search intent directly to higher-level themes, showing how content contributes to authority.

Meta-paths make hidden content dynamics visible. For example, a section that links two different topics might be the key to improving overall relevance but could remain unnoticed in a linear analysis.
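The Query → Section → Query meta-path can be enumerated directly from query-to-section alignment edges. This sketch assumes the edges have already been thresholded. The data and the function name `query_section_query_paths` are hypothetical. Sections that appear in many path instances are candidates for the high-value content blocks described above.

```python
from collections import defaultdict
from itertools import combinations

# Hypothetical query -> section alignment edges (already thresholded).
QUERY_SECTION_EDGES = [
    ("best seo tools", "tool-comparison"),
    ("rank tracking software", "tool-comparison"),
    ("keyword research tips", "tool-comparison"),
    ("best seo tools", "pricing"),
    ("keyword research tips", "workflow"),
]


def query_section_query_paths(edges):
    """Enumerate Query -> Section -> Query meta-path instances.

    Returns {section: [(query_a, query_b), ...]} for sections reached by at
    least two distinct queries; each unordered pair is one path through that section.
    """
    queries_by_section = defaultdict(set)
    for query, section in edges:
        queries_by_section[section].add(query)
    return {
        section: list(combinations(sorted(queries), 2))
        for section, queries in queries_by_section.items()
        if len(queries) >= 2
    }


paths = query_section_query_paths(QUERY_SECTION_EDGES)
# Sections with the most path instances first.
for section, pairs in sorted(paths.items(), key=lambda kv: -len(kv[1])):
    print(section, len(pairs), pairs)
```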

Queries, Sections, and Topical Relationships

- Queries: Each query represents a distinct search intent. By placing queries into the graph, strategists see which parts of the content align with intent and which are under-served.

- Sections: Structured blocks (like H2/H3 subheadings or paragraph groups) provide the “surface area” for aligning content with queries. Some sections become central hubs if they align with multiple queries or topics.

- Topics: Broader thematic nodes that connect related sections. They reveal the thematic spine of a page — the backbone that supports topical authority in search.

The interplay among these three levels demonstrates content efficiency (where queries are well-answered), redundancy (where sections overlap without adding value), and gaps (where intent is unserved).
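One simple way to surface these three conditions is to apply score cutoffs:

- A query whose best section score falls below a cutoff is a gap.
- A section pair with near-identical similarity is a redundancy candidate.
- A query with a strong best match is efficiently served.

The thresholds, the function name `coverage_report`, and the example scores below are assumptions for illustration, not tuned values.

```python
def coverage_report(query_section_scores, section_pair_sims,
                    gap_threshold=0.35, redundancy_threshold=0.9):
    """Flag well-served queries, unserved queries (gaps) and redundant section pairs.

    query_section_scores: {query: {section_id: alignment score}}
    section_pair_sims:    {(section_a, section_b): section-to-section similarity}
    Both thresholds are illustrative defaults, not tuned values.
    """
    well_served, gaps = {}, []
    for query, scores in query_section_scores.items():
        if scores and max(scores.values()) >= gap_threshold:
            well_served[query] = max(scores, key=scores.get)  # best-aligned section
        else:
            gaps.append(query)  # no section answers this intent strongly enough
    redundant = sorted(pair for pair, sim in section_pair_sims.items()
                       if sim >= redundancy_threshold)
    return {"well_served": well_served, "gaps": sorted(gaps), "redundant_pairs": redundant}


QUERY_SCORES = {
    "seo audit checklist": {"audit": 0.78, "intro": 0.30},
    "local seo": {"audit": 0.12, "intro": 0.20},
}
SECTION_PAIR_SIMS = {("intro", "overview"): 0.93, ("audit", "intro"): 0.41}
print(coverage_report(QUERY_SCORES, SECTION_PAIR_SIMS))
```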

SEO Value of Graph and Meta-paths

The benefit of modeling SEO content in this way lies in the recommendations that emerge naturally from the graph structure:

- Content Coverage Analysis: Highlights where queries are insufficiently supported, guiding new content development.

- Internal Linking Recommendations: Meta-paths reveal which sections should be connected to strengthen topical clusters.

- Topical Authority Clustering: Identifies natural section groupings around themes, showing which clusters are strong and which are fragmented.

- Query–Section Optimization: Suggests refinement of sections that align weakly with target queries, improving both precision and breadth of coverage.

- Flow and Consistency Recommendations: Exposes breaks in topical flow across a page, helping align section order with user search journeys.

Together, these recommendations ensure content is structured for both search intent alignment and topical depth, making it easier for search engines to recognize authority and relevance.

Project Q&A

What is this project and what problem does it solve?

This project implements a graph-based recommendation system that identifies which page sections best satisfy specified search intents. The core problem addressed is the mismatch between simple keyword-based signals and the multi-dimensional relevance signals that actually determine content usefulness. Traditional approaches often miss sections that are contextually relevant but do not explicitly repeat query keywords. By modeling pages as typed graphs — with nodes for queries, sections, keywords, and entities — and scoring traversal patterns (meta-paths) through that graph, the system surfaces sections that are topically and structurally connected to queries. The output is a prioritized list of section-level recommendations per query, with supporting evidence based on graph relationships, enabling targeted editorial or structural actions rather than broad, unfocused changes.

How does a meta-path based approach differ from plain keyword matching or embedding similarity?

- Keyword matching is lexical: it identifies sections that contain the same words as the query. This is precise but brittle and blind to context.

- Embedding similarity is semantic: it ranks content by vector closeness. This captures contextual similarity, but it ignores structural signals and offers little explanation of why a section ranks highly.

- Meta-path based approach combines both and adds relational structure: it counts and weights specific typed paths in a heterogeneous graph (for example, Query → Keyword → Section or Query → Section → Section) and normalizes those path-based scores. This approach captures indirect evidence (a section connected via a strong keyword or entity bridge) and structural reinforcement (sections connected to other authoritative sections), producing recommendations that are both contextually relevant and explainable through path evidence.

What are the practical SEO benefits provided by this system?

- Actionable section-level recommendations: Prioritized sections are returned per query, enabling focused content edits instead of page-level guesswork.

- Internal linking opportunities: Meta-paths reveal natural linking candidates between sections or pages, improving crawlability and topical signals.

- Topical cluster strengthening: Identification of sections that act as hubs for related queries supports consolidation or decomposition of content to better match search intent.

- Discovery of hidden relevance: Sections that answer intent indirectly (via entities or supporting keywords) are surfaced, enabling content to be optimized even when exact matches do not exist.

- Explainability for decisions: Per-section evidence (meta-path contributions) supports transparent editorial decisions and easier stakeholder buy-in.

What outputs are produced and how should they be used by SEO teams?

Primary outputs:

- Per-query recommended sections (ranked) with snippet and heading.

- Graph artifacts (node/edge metadata) for audit and visualization.

Recommended uses:

- Editorial tasking: Turn top section recommendations into concrete editorial tickets (rewrite, expand, add headings, improve clarity). Each recommendation should reference the snippet and the section ID for quick access.

- Internal linking changes: Use recommended section pairs and path evidence to add internal links following the strongest meta-paths.

- Content restructuring: If several recommendations point to distinct sections that together form a stronger topic cluster, consider merging or creating a hub page.

- Measurement plan: Track organic impressions, clicks, ranking positions, and internal click-throughs for modified sections as primary KPIs after implementation.

What inputs are required for the pipeline to run and produce meaningful results?

- Page URLs to analyze (one or more).

- A query list (search intents, keywords, or sample queries).

- Access to page content (publicly reachable pages or provided HTML).

- Pretrained models or access to them for: sentence embeddings (e.g., SentenceTransformers), keyword extraction (KeyBERT), and optional transformer NER (e.g., dslim/bert-large-NER).

- Configuration parameters for thresholds and meta-path templates (tunable for domain).

Data quality matters: HTML structure with semantic headings yields better section extraction and more accurate graph construction. When content is heavily JS-rendered or unavailable to direct requests, pre-rendered HTML or a site crawl is required.

Why were transformer-based NER and sentence-transformer embeddings chosen over lighter-weight alternatives?

- Transformer-based NER provides higher recall and better handling of domain-specific or technical terminology that appears frequently in SEO content (e.g., product names, technologies). This is important for accurate entity node creation in the graph.

- Sentence-transformer embeddings balance quality and performance: they capture semantic relationships needed for query→section and section↔section edges.

- The trade-off is higher compute cost; however, embedding caching, batched encoding, and use of efficient small models (e.g., all-MiniLM-L6-v2) reduce cost while preserving utility. For production workloads, an approximate nearest-neighbor index (FAISS) is recommended for scaling.
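Embedding caching and batched encoding can be combined in a small wrapper. Texts already seen are served from an in-memory cache, and only new texts are sent to the model, in batches. `CachedEncoder` is a hypothetical helper. `encode_fn` stands for any function that maps a list of strings to a 2-D array, such as the `encode` method of a loaded SentenceTransformer. The fake encoder at the end exists only so the sketch runs without downloading a model. A FAISS index is not shown.

```python
import numpy as np


class CachedEncoder:
    """Hypothetical wrapper: memoize embeddings by exact text, batch-encode misses."""

    def __init__(self, encode_fn, batch_size=64):
        self.encode_fn = encode_fn      # list[str] -> array of shape (n, dim)
        self.batch_size = batch_size
        self.cache = {}                 # text -> 1-D embedding vector

    def encode(self, texts):
        if not texts:
            raise ValueError("texts must be a non-empty list")
        # De-duplicate while preserving order, and keep only uncached texts.
        misses = [t for t in dict.fromkeys(texts) if t not in self.cache]
        for start in range(0, len(misses), self.batch_size):
            batch = misses[start:start + self.batch_size]
            vectors = np.asarray(self.encode_fn(batch))
            for text, vector in zip(batch, vectors):
                self.cache[text] = vector
        # Stack cached vectors in the caller's original order (duplicates included).
        return np.vstack([self.cache[t] for t in texts])


# Stand-in encoder so the sketch runs offline; it records each batch it receives.
calls = []


def fake_encode(batch):
    calls.append(list(batch))
    return np.array([[len(t), t.count(" ")] for t in batch], dtype=float)


encoder = CachedEncoder(fake_encode, batch_size=2)
first = encoder.encode(["graph seo", "meta path", "graph seo"])
second = encoder.encode(["meta path", "topic hub"])
print(first.shape, calls)
```

With a real model, the wrapper would be built as `CachedEncoder(SentenceTransformer("all-MiniLM-L6-v2").encode)`. The second call above encodes only the one unseen text.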

How are meta-paths defined, and which meta-paths are recommended initially?

Meta-path templates are sequences of node types. Recommended initial set for SEO:

- ['query', 'kw', 'section']: the query connects to a section via a keyword bridge.

- ['query', 'ent', 'section']: the query connects via an entity, useful for entity-aware queries.

- ['query', 'section']: direct semantic alignment.

- ['query', 'kw', 'section', 'section']: transitive coverage through a keyword to a related section.

These templates capture lexical, entity, and structural evidence. Path weights should be tunable and validated via manual QA or small labeled checks. Normalization of per-path scores (row-wise max or percentile scaling) improves interpretability.
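Counting typed paths for these templates can be expressed as a product of adjacency matrices, one per consecutive pair of node types. Row-wise max normalization then scales each query's scores to [0, 1] before a weighted sum. This is one common way to implement meta-path counting, sketched under assumptions: the toy matrices and the template weights are hypothetical, edge weights are assumed non-negative, and the function names are illustrative rather than the project's own.

```python
import numpy as np


def metapath_counts(template, adjacency):
    """Multiply typed adjacency matrices along a meta-path template.

    adjacency[(a, b)] is an (n_a x n_b) matrix. Entry [i, j] of the result is the
    weighted number of path instances from the i-th start node to the j-th end node.
    """
    result = np.asarray(adjacency[(template[0], template[1])], dtype=float)
    for src, dst in zip(template[1:-1], template[2:]):
        result = result @ np.asarray(adjacency[(src, dst)], dtype=float)
    return result


def row_max_normalize(matrix):
    """Divide each row by its maximum; rows without a positive maximum become zero."""
    row_max = matrix.max(axis=1, keepdims=True)
    return np.divide(matrix, row_max, out=np.zeros_like(matrix), where=row_max > 0)


def score_sections(templates, weights, adjacency):
    """Weighted sum of normalized meta-path scores, shape (n_queries, n_sections)."""
    return sum(w * row_max_normalize(metapath_counts(t, adjacency))
               for t, w in zip(templates, weights))


# Toy graph: 1 query, 2 keywords, 3 sections (hypothetical values).
adjacency = {
    ("query", "kw"): [[1, 1]],                                   # query contains both keywords
    ("kw", "section"): [[1, 0, 0], [1, 1, 0]],                   # keyword occurrences per section
    ("query", "section"): [[0.2, 0.7, 0.1]],                     # direct embedding similarity
    ("section", "section"): [[0, 1, 0], [1, 0, 1], [0, 1, 0]],   # related sections
}
templates = [
    ["query", "kw", "section"],
    ["query", "section"],
    ["query", "kw", "section", "section"],
]
weights = [0.4, 0.4, 0.2]  # hypothetical; tune via manual QA
scores = score_sections(templates, weights, adjacency)
print(scores.round(3), np.argsort(-scores[0]))
```

Here section 1 ranks first even though section 0 has more keyword paths. Its strong direct similarity and its links to both other sections outweigh the lexical signal, and each template's contribution can be read off separately as path evidence.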

Libraries Used

requests

The requests library is a standard Python tool designed for sending HTTP requests and retrieving responses from web servers. It simplifies interactions with webpages by handling headers, sessions, cookies, and connection complexities in the background.

In this project, requests is responsible for fetching the raw HTML content of the webpages being analyzed. This ensures that structured blocks of text can be extracted directly from the source URL, forming the foundation for later linguistic and semantic analysis.

BeautifulSoup

BeautifulSoup is a Python library that parses HTML and XML documents into structured objects, making it easier to navigate, search, and extract content from webpages.

Here, BeautifulSoup parses the raw HTML obtained through requests so that unnecessary tags, scripts, and irrelevant elements can be removed and the heading hierarchy can be traversed. Only the meaningful textual content is passed on to keyword extraction, entity recognition, and embedding.

transformers

The transformers library, developed by Hugging Face, provides access to a wide range of state-of-the-art NLP models, including token classification, embeddings, and zero-shot classification pipelines.

In this project, transformers provides the token-classification pipeline that runs the dslim/bert-large-NER model and extracts the named entities that become entity nodes in the graph. It also underpins the sentence-transformer models used for embeddings and keyword extraction.

KeyBERT

KeyBERT is a keyword extraction library built on top of BERT embeddings. It allows automatic detection of the most representative keywords and phrases from text content using semantic similarity.

Within this project, KeyBERT helps extract topical keywords from webpage sections. These keywords are used to strengthen the semantic mapping between queries and section-level content, improving interpretability of alignment results for SEO strategists.

SentenceTransformer

The sentence-transformers library provides transformer-based models optimized for producing sentence- and document-level embeddings. These embeddings can be compared using similarity metrics such as cosine similarity.

This project uses SentenceTransformer to generate dense vector representations of both queries and section texts. The embeddings allow fine-grained similarity checks beyond keyword overlap, ensuring alignment reflects actual semantic meaning rather than surface-level terms.

NumPy

NumPy is a widely used numerical computing library that enables efficient operations on large arrays and matrices. It forms the backbone of many data science tasks by providing optimized mathematical functions.

Here, NumPy supports vector operations required for similarity scoring, cosine similarity computations, and preparing embedding data structures for alignment with queries.

NetworkX

NetworkX is a graph-based analysis library designed to create, manipulate, and study complex networks. It provides visualization and structural insights into relationships between entities.

In this project, NetworkX is used to create a visual representation of relationships between webpage sections and search queries. This helps SEO strategists clearly see where intent alignment occurs and where gaps exist, providing an actionable map for optimization.

SciPy (csr_matrix)

SciPy is a scientific computing library built on top of NumPy, offering advanced numerical methods and sparse matrix utilities.

Here, the csr_matrix component is used for efficient storage of similarity and adjacency matrices. Once low-scoring edges are removed by thresholding, most entries are zero. A sparse representation therefore keeps memory use and computation manageable as the number of sections and queries grows, without changing the stored values.

Matplotlib

Matplotlib is a foundational visualization library in Python for producing static, interactive, and publication-quality graphics.

In this project, Matplotlib is integrated with NetworkX to produce visual similarity graphs. These plots highlight query–section alignments, making results interpretable in a way that directly benefits SEO analysis and recommendations.

Torch

PyTorch is a deep learning framework widely used for training and inference of neural models. It powers many of the models hosted by Hugging Face.

In this project, torch serves as the computational backend for the transformer models. It runs inference for both the BERT-based NER model and the sentence-transformer embedding model.

logging

The logging module is a built-in Python utility for monitoring program execution, debugging, and error tracking.

Here, logging is configured to maintain a clean workflow without unnecessary clutter. Only important warnings and messages are displayed, making the execution flow transparent but not overwhelming for end-users.

Function: extract_structured_sections

Function Summary

The extract_structured_sections function is designed to fetch a webpage from a given URL and organize its content into a hierarchical section structure based on HTML headings (<h1>–<h6>). It recursively processes sub-headings and their related text blocks up to a defined max_depth. This ensures that the page is broken down into well-structured sections, making the content easier to analyze for SEO tasks such as intent detection, discourse analysis, or similarity alignment.

If the page lacks a usable heading structure, the function falls back to capturing raw paragraph text from the body. Pages without headings therefore still yield analyzable content.

The returned result is a dictionary containing the URL and a list of structured sections, where each section includes its heading, level, textual content, sub-headings, and raw content blocks.

Key Code Explanation

Fetching the Webpage

- Retrieves the webpage content using the requests library.

- A timeout ensures the function won’t hang indefinitely on slow servers.

- The custom User-Agent mimics a browser request to reduce the chance of being blocked.

- raise_for_status() ensures that any HTTP error codes (e.g., 404, 500) are caught immediately.

Defining Heading Levels

heading_tags = {"h1": 1, "h2": 2, "h3": 3, "h4": 4, "h5": 5, "h6": 6}

- Maps HTML heading tags to numeric levels.

- This helps the function determine hierarchical relationships between sections, ensuring that sub-headings are attached correctly under their parent sections.

Recursive Section Processing

- A nested recursive helper processes a heading and all of its subsequent siblings until it reaches another heading of equal or higher rank (for an h2, the next h2 or h1).

- If the sibling is a lower-level heading (e.g., h3 under h2) and within the allowed max_depth, it is treated as a sub-section and processed recursively.

- If the sibling is text or another tag, the function extracts its text content and appends it to the current section’s content.
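The recursion can be illustrated without a network request or an HTML parser. This simplified sketch assumes the page has already been flattened into document-ordered (tag, text) pairs, whereas the described function walks BeautifulSoup siblings directly. The function name `build_sections` and the exact output fields are assumptions that loosely follow the section structure described above.

```python
HEADING_TAGS = {"h1": 1, "h2": 2, "h3": 3, "h4": 4, "h5": 5, "h6": 6}


def build_sections(elements, max_depth=3):
    """Nest a flat, document-ordered list of (tag, text) pairs into sections."""

    def process(start, level, depth):
        # Build the section for the heading at `start`; return it and the next index.
        section = {"heading": elements[start][1], "level": level,
                   "raw_blocks": [], "sub_headings": []}
        i = start + 1
        while i < len(elements):
            tag, text = elements[i]
            child_level = HEADING_TAGS.get(tag)
            if child_level is not None and child_level <= level:
                break  # a heading of equal or higher rank closes this section
            if child_level is not None and depth < max_depth:
                child, i = process(i, child_level, depth + 1)  # nested sub-section
                section["sub_headings"].append(child)
                continue
            if text.strip():
                # Plain text, or a heading nested deeper than max_depth.
                section["raw_blocks"].append(text.strip())
            i += 1
        section["content"] = " ".join(section["raw_blocks"])
        return section, i

    sections, i = [], 0
    while i < len(elements):
        level = HEADING_TAGS.get(elements[i][0])
        if level is None:
            i += 1  # this sketch ignores text before the first heading
            continue
        section, i = process(i, level, 1)
        sections.append(section)
    return sections


page = [("h1", "Guide"), ("p", "Intro text"), ("h2", "Tools"), ("p", "Tool list"),
        ("h3", "Free tools"), ("p", "Free list"), ("h2", "Pricing"), ("p", "Costs")]
tree = build_sections(page)
print([s["heading"] for s in tree[0]["sub_headings"]])
```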

Fallback for Poorly Structured Pages

- If no heading-based structure is found, the function defaults to extracting paragraph text.

- Very short fragments (fewer than 5 words) are ignored to avoid clutter from navigation text or boilerplate.

- This ensures that even minimal or unstructured pages return meaningful content.
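The fallback amounts to a word-count filter over paragraph texts. This minimal sketch assumes the paragraph strings have already been collected (for example, from the body's <p> tags). It also assumes the surviving paragraphs are wrapped into a single section without a heading. Both the function name and that wrapping are assumptions.

```python
def fallback_paragraph_sections(paragraph_texts, min_words=5):
    """Hypothetical fallback: keep paragraphs of at least `min_words` words."""
    blocks = [" ".join(text.split()) for text in paragraph_texts]  # collapse whitespace
    blocks = [b for b in blocks if len(b.split()) >= min_words]    # drop short fragments
    if not blocks:
        return []
    return [{"heading": None, "level": 0, "raw_blocks": blocks,
             "content": " ".join(blocks), "sub_headings": []}]


paragraphs = ["Home", "Read our full guide to technical SEO audits.", "  Contact   us  "]
print(fallback_paragraph_sections(paragraphs))
```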

Function: preprocess_sections

Function Summary

The preprocess_sections function is designed to take the raw structured data extracted from a webpage and clean it into a more usable format. Its main responsibilities are:

- Removing boilerplate and irrelevant text (e.g., “read more”, “privacy policy”).

- Normalizing special characters and replacing typographic symbols with standard equivalents.

- Filtering out blocks of text below a minimum word threshold to ensure only meaningful content is retained.

- Recursively applying cleaning to all subsections, ensuring that both main sections and nested subsections maintain a consistent quality.

The output is a structured dictionary with the original URL and a cleaned hierarchy of sections. This makes the data significantly more reliable for later steps such as keyword extraction, embeddings, and graph-based analysis.

Key Code Explanation

Boilerplate Pattern Setup

Here we define a list of common non-informative phrases (like “click here”, “subscribe”). These are compiled into a single regex pattern, allowing us to efficiently strip them from the content. This ensures the model doesn’t waste effort on text that doesn’t add SEO or semantic value.
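A minimal sketch of the combined pattern follows. The phrase list is an illustrative subset taken from the examples above. Word boundaries keep a phrase from matching inside a longer word, and sorting longest-first lets a longer phrase win over a shorter one it contains.

```python
import re

# Illustrative subset of boilerplate phrases; a real list would be longer.
BOILERPLATE_PHRASES = ["read more", "click here", "subscribe", "privacy policy"]

# Longest phrases first so a longer phrase wins over a shorter one it contains.
_pattern = "|".join(re.escape(p) for p in sorted(BOILERPLATE_PHRASES, key=len, reverse=True))
BOILERPLATE_RE = re.compile(r"\b(?:" + _pattern + r")\b", flags=re.IGNORECASE)


def strip_boilerplate(text):
    """Remove boilerplate phrases, then collapse the leftover whitespace."""
    return re.sub(r"\s+", " ", BOILERPLATE_RE.sub(" ", text)).strip()


print(strip_boilerplate("Audit tips. Read More about crawl budgets. Click here!"))
```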

Character Normalization & Substitutions

This step normalizes and cleans up characters. For example, curly quotes (“ ”) are replaced with the standard straight quote ("), long dashes are standardized to -, and invisible spaces are removed. This makes the text machine-friendly while preserving readability.
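A compact way to implement these substitutions is a translation table applied after Unicode normalization. The specific character list and the choice of NFKC normalization are assumptions for this sketch. NFKC also rewrites some other compatibility characters (for example, ligatures), which may or may not be wanted.

```python
import unicodedata

# Hypothetical substitution table: typographic characters -> plain equivalents.
CHAR_SUBSTITUTIONS = str.maketrans({
    "\u201c": '"', "\u201d": '"',    # curly double quotes
    "\u2018": "'", "\u2019": "'",    # curly single quotes and apostrophe
    "\u2013": "-", "\u2014": "-",    # en dash and em dash
    "\u200b": None, "\ufeff": None,  # zero-width space and byte-order mark: removed
})


def normalize_text(text):
    """NFKC-normalize (which also maps non-breaking spaces to spaces), then substitute."""
    return unicodedata.normalize("NFKC", text).translate(CHAR_SUBSTITUTIONS)


print(normalize_text("\u201cSEO\u201d \u2013 it\u2019s\u00a0key\u200b"))
```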

Filtering Short Content

Each block of text is only kept if it meets a word count threshold (min_words). This prevents single-word noise or empty blocks from slipping into the analysis. Clients benefit because the analysis then focuses only on substantial content.

Recursive Cleaning of Subsections

The same cleaning logic is applied recursively to nested subsections, ensuring consistency across the entire content hierarchy. The keep_empty flag gives flexibility when clients want to keep even placeholder sections that have no qualifying content.
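The word-count filter and the recursion can be sketched together. This version assumes sections use the `raw_blocks` and `sub_headings` keys that appear elsewhere in the pipeline, and it returns new dictionaries rather than mutating its input. The `clean_block` hook and the default `min_words=5` are assumptions, not the project's documented values.

```python
def clean_sections(sections, min_words=5, keep_empty=False, clean_block=str.strip):
    """Recursively filter each section's raw_blocks by word count.

    `clean_block` is a hypothetical hook for per-block cleaning (for example,
    boilerplate removal and character normalization); it defaults to str.strip.
    """
    cleaned = []
    for section in sections:
        blocks = [" ".join(clean_block(b).split()) for b in section.get("raw_blocks", [])]
        blocks = [b for b in blocks if len(b.split()) >= min_words]
        subs = clean_sections(section.get("sub_headings", []), min_words, keep_empty, clean_block)
        if not blocks and not subs and not keep_empty:
            continue  # drop sections left with no content at any depth
        cleaned.append({**section, "raw_blocks": blocks, "sub_headings": subs})
    return cleaned


sample_sections = [{
    "heading": "Tools",
    "raw_blocks": ["Too short", "This block has enough words to keep"],
    "sub_headings": [{"heading": "Stub", "raw_blocks": ["tiny"], "sub_headings": []}],
}]
print(clean_sections(sample_sections))
```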

Function: load_ner_model

Function Summary

The load_ner_model function initializes and returns a Named Entity Recognition (NER) pipeline using Hugging Face Transformers. It loads a transformer-based model and its tokenizer, enabling the identification and categorization of named entities (such as people, organizations, and locations) in text. By default, it uses the "dslim/bert-large-NER" model, which is widely used for entity extraction.

This function is crucial because NER is a core step in understanding and analyzing content within SEO-focused projects. Identifying entities provides deeper context about who or what the content refers to, which helps in relevance scoring, knowledge graph building, and recommendation generation.

Key Code Explanations

tokenizer = AutoTokenizer.from_pretrained(model_name)

- Loads a pre-trained tokenizer specific to the chosen model.

- The tokenizer ensures that text is split into tokens in the exact way the model expects, preserving accuracy for entity recognition.

model = AutoModelForTokenClassification.from_pretrained(model_name)

- Loads the transformer model fine-tuned for token classification tasks, such as NER.

- This model predicts entity labels for each token in the text.

ner_pipe = pipeline(…)

- Initializes a HuggingFace pipeline for NER using the model and tokenizer.

- The argument aggregation_strategy="simple" groups tokens belonging to the same entity into one meaningful span.

- Example: "New York City" is recognized as a single LOC (location) entity instead of three separate tokens.

return ner_pipe

- The function returns the fully prepared pipeline object, which can then be applied to any input text for extracting named entities.

Model dslim/bert-large-NER

Overview

The dslim/bert-large-NER model is a BERT-large model fine-tuned for Named Entity Recognition on the English CoNLL-2003 dataset. It identifies and classifies four types of entities: persons (PER), organizations (ORG), locations (LOC), and miscellaneous entities (MISC). Because it is built on BERT-large, it has strong contextual understanding. Like other BERT models, it processes inputs of at most 512 tokens, which suits the block-by-block processing used in this project.

Usage in This Project

In this project, the model was applied to webpage content sections to:

- Extract named entities such as company and product names, people, and geographic regions.

- Provide structural signals about what topics and entities appear in a section, which strengthens the meta-path connections between queries and sections.

- Enhance recommendation interpretability by highlighting the specific entities driving relevance.

For example, if a client query is “best SEO tools for e-commerce”, the model may recognize tool names such as “SEMrush” or “Ahrefs” as entities in the content, so recommendations can highlight the sections that mention them.

Key Features

- Entity detection across four categories (PER, ORG, LOC, MISC).

- Strong contextual comprehension (for example, distinguishing “Apple” the company from the fruit based on surrounding text).

- A BERT-large backbone pre-trained on large-scale corpora, then fine-tuned on CoNLL-2003.

User Relevance

For SEO strategists, entity recognition helps answer:

- Which specific brands, tools, or industry terms does a page emphasize?

- Do the recommended sections highlight the right product/service names that users search for?

This makes recommendations more transparent, targeted, and trustworthy.

Function: extract_entities_from_text

Function Summary

This function identifies and extracts named entities from a given text string using a transformer-based NER pipeline. It ensures that only unique entities are returned, making the output concise and free of duplicates. This is the fundamental building block of entity recognition in the project.

Key Code Explanations

- if not text.strip(): return []: This ensures that empty or whitespace-only strings are ignored, avoiding unnecessary model calls.

- entities = ner_pipe(text): Applies the NER pipeline to the input text, producing structured entity predictions.

- list({e['word'] for e in entities}): Collects the text of each detected entity span (the 'word' field) into a set to remove duplicates, then returns the result as a list.

Function: extract_entities_from_blocks

Function Summary

This function processes a list of content blocks (paragraphs or segments of text) and extracts named entities from each block. It aggregates all unique entities across blocks into a single consolidated list for better section-level analysis.

Key Code Explanations

- all_entities: Set[str] = set(): Initializes a set to store unique entities while ensuring no duplicates appear.

- block_entities = extract_entities_from_text(block, ner_pipe): Calls the previous function for each block, reusing its modular logic.

- all_entities.update(block_entities): Continuously merges entities from each block into the section-wide set.

- return list(all_entities): Converts the set of unique entities into a list for easy downstream processing.

Function: apply_ner_to_sections

Function Summary

This function applies named entity recognition across a hierarchical structure of sections and sub-sections. Each section is enriched with an entities field that consolidates all named entities found in its raw text blocks. The function works recursively to cover both main sections and nested sub-sections.

Key Code Explanations

- raw_blocks = section.get('raw_blocks', []): Extracts raw text blocks from a section to serve as input for entity recognition.

- section['entities'] = extract_entities_from_blocks(raw_blocks, ner_pipe): Assigns a list of unique entities for the section by processing its blocks.

- if section.get('sub_headings'):: Checks if the section contains nested sub-sections.

- section['sub_headings'] = apply_ner_to_sections(section['sub_headings'], ner_pipe): Recursively applies the same entity extraction process to all sub-sections.
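The three entity functions fit together as shown in this sketch. It follows the described logic with one deliberate difference: results are sorted so the output order is stable across runs, whereas converting a set straight to a list gives an arbitrary order. `fake_ner_pipe` is a hypothetical stand-in that mimics the list-of-dicts output of a transformers NER pipeline with aggregation_strategy="simple". It lets the example run without downloading dslim/bert-large-NER.

```python
from typing import Callable, Dict, List, Set

# Any callable with the shape of a transformers NER pipeline using
# aggregation_strategy="simple": text -> list of dicts with a "word" key.
NerPipe = Callable[[str], List[Dict]]


def extract_entities_from_text(text: str, ner_pipe: NerPipe) -> List[str]:
    if not text.strip():
        return []  # skip the model call for blank input
    return sorted({e["word"] for e in ner_pipe(text)})  # unique, deterministic order


def extract_entities_from_blocks(blocks: List[str], ner_pipe: NerPipe) -> List[str]:
    all_entities: Set[str] = set()
    for block in blocks:
        all_entities.update(extract_entities_from_text(block, ner_pipe))
    return sorted(all_entities)


def apply_ner_to_sections(sections: List[Dict], ner_pipe: NerPipe) -> List[Dict]:
    for section in sections:
        section["entities"] = extract_entities_from_blocks(section.get("raw_blocks", []), ner_pipe)
        if section.get("sub_headings"):
            section["sub_headings"] = apply_ner_to_sections(section["sub_headings"], ner_pipe)
    return sections


# Offline stand-in for the real pipeline: "finds" entities from a fixed lookup table.
KNOWN_ENTITIES = {"Ahrefs": "ORG", "Semrush": "ORG", "London": "LOC"}


def fake_ner_pipe(text):
    return [{"entity_group": label, "word": name}
            for name, label in KNOWN_ENTITIES.items() if name in text]


sections = [{"raw_blocks": ["Ahrefs and Semrush compared.", "Ahrefs pricing."],
             "sub_headings": [{"raw_blocks": ["Offices in London."]}]}]
apply_ner_to_sections(sections, fake_ner_pipe)
print(sections[0]["entities"], sections[0]["sub_headings"][0]["entities"])
```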

Function: load_keybert_model

Function Summary

The load_keybert_model function is responsible for initializing and returning a KeyBERT model that is backed by a sentence-transformer. KeyBERT is a keyword extraction technique that leverages embeddings from transformer-based models to identify contextually important keywords from text. In this function, a sentence-transformer model (default: “all-MiniLM-L6-v2”) is first loaded, and then it is passed into the KeyBERT object to serve as its embedding generator. The function ultimately returns a ready-to-use KeyBERT model for extracting keywords and phrases from textual content.

Key Code Explanations

sentence_model = SentenceTransformer(model_name)

This line loads the specified sentence-transformer model. Sentence-transformers are designed to produce semantically meaningful vector representations of text, which are crucial for context-aware keyword extraction.

kw_model = KeyBERT(model=sentence_model)

Here, the loaded sentence-transformer model is wrapped inside a KeyBERT instance. KeyBERT then uses this model’s embeddings to compare candidate keywords against the document and rank them by relevance.

Model all-MiniLM-L6-v2

Overview

The all-MiniLM-L6-v2 model is part of the Sentence Transformers family, optimized for semantic similarity and embedding generation. It produces compact 384-dimensional embeddings that capture meaning at the sentence or paragraph level. Despite its smaller size, it offers excellent performance for semantic search and recommendation tasks.

Usage in This Project

In this project, the model is used for:

- Query embeddings: Representing each client query as a dense vector.

- Content embeddings: Representing each section/snippet of a webpage as a dense vector.

- Similarity scoring: Computing cosine similarity between query and section embeddings to identify the most semantically aligned sections.

These embeddings are then integrated with meta-path scoring (which includes NER and structural signals) to produce final ranked recommendations.
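The similarity-scoring step reduces to normalizing both sets of vectors and taking a matrix product. This is a minimal NumPy sketch with toy vectors standing in for model output, and the function names are hypothetical. With sentence-transformers, passing normalize_embeddings=True to encode would make the explicit normalization step unnecessary.

```python
import numpy as np


def cosine_similarity_matrix(query_vecs, section_vecs):
    """Pairwise cosine similarity: rows are queries, columns are sections."""
    q = np.asarray(query_vecs, dtype=float)
    s = np.asarray(section_vecs, dtype=float)
    # L2-normalize rows; the clip guards against division by zero for zero vectors.
    q = q / np.clip(np.linalg.norm(q, axis=1, keepdims=True), 1e-12, None)
    s = s / np.clip(np.linalg.norm(s, axis=1, keepdims=True), 1e-12, None)
    return q @ s.T


def top_k_sections(query_vecs, section_vecs, k=3):
    """Indices and scores of the k most similar sections for each query."""
    sims = cosine_similarity_matrix(query_vecs, section_vecs)
    order = np.argsort(-sims, axis=1)[:, :k]
    return order, np.take_along_axis(sims, order, axis=1)


# Toy 2-D vectors; real all-MiniLM-L6-v2 embeddings would have 384 dimensions.
queries = [[1.0, 0.0], [0.0, 1.0]]
sections = [[1.0, 0.0], [1.0, 1.0], [0.0, 2.0]]
order, scores = top_k_sections(queries, sections, k=2)
print(order.tolist(), scores.round(3).tolist())
```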

Key Features

- Lightweight and fast: Suitable for real-time or large-scale analysis in Colab environments.

- Competitive semantic-search performance relative to much larger models, while remaining resource efficient.

- Pre-trained on millions of sentence pairs, enabling strong generalization across topics.

User Relevance

- Helps identify which sections best “answer” the query in meaning, not just keyword overlap.

- Enables context-aware recommendations, ensuring subtle matches are found (e.g., “increase visibility” matches “boost online presence”).

- Provides scalability—clients can process many queries across multiple pages quickly without heavy infrastructure.

Function: extract_keywords_from_block

Function Summary

The extract_keywords_from_block function extracts the top N most relevant keywords from a single text block using a KeyBERT model. It ensures that only meaningful keywords are returned by filtering out empty blocks and then applying KeyBERT with parameters such as n-gram range and stop-word removal. The output is a list of keyword strings ranked by contextual importance.

Key Code Explanations

if not block.strip(): return []

This check ensures that if the text block is empty or only contains whitespace, the function immediately returns an empty list instead of wasting resources on unnecessary processing.

keywords = kw_model.extract_keywords(…

This is the core line where KeyBERT processes the block. The parameters include:

- keyphrase_ngram_range=(1, 3) → Extracts keywords/phrases ranging from 1 to 3 words.

- stop_words='english' → Filters out common English stop-words for cleaner results.

- top_n=top_n → Limits the extraction to the top N ranked keywords.

return [kw[0] for kw in keywords]

The KeyBERT output includes both keywords and their scores. This line extracts only the keyword strings (first element of each tuple), discarding scores to keep results concise.

Function: extract_keywords_from_blocks

Function Summary

The extract_keywords_from_blocks function applies keyword extraction to multiple text blocks and consolidates the results into a unique set of keywords for the entire section. It ensures that overlapping keywords from different blocks are merged, giving a single list of distinct, context-relevant terms.

Key Code Explanations

all_keywords = set()

A Python set is initialized to automatically handle uniqueness. This ensures that duplicate keywords across blocks are not repeated in the final output.

block_keywords = extract_keywords_from_block(block, kw_model, top_n)

For each text block, the function reuses the extract_keywords_from_block function to extract keywords. This keeps the code modular and avoids duplication.

all_keywords.update(block_keywords)

Instead of appending results, the set’s update() method adds new keywords while discarding duplicates automatically.

return list(all_keywords)

Converts the set back into a list before returning, making it consistent with standard Python list outputs and easier for downstream use.
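Both keyword functions can be exercised offline by substituting a stand-in model. In this sketch, `FakeKeyBERT` is hypothetical. It only mimics the return shape of KeyBERT's extract_keywords for a single document, which is a list of (keyword, score) tuples. The top_n default of 5 and the sorted return order are assumptions.

```python
from typing import List


def extract_keywords_from_block(block: str, kw_model, top_n: int = 5) -> List[str]:
    if not block.strip():
        return []
    keywords = kw_model.extract_keywords(
        block,
        keyphrase_ngram_range=(1, 3),  # 1- to 3-word phrases
        stop_words="english",
        top_n=top_n,
    )
    return [kw for kw, _score in keywords]  # drop the scores, keep the phrases


def extract_keywords_from_blocks(blocks: List[str], kw_model, top_n: int = 5) -> List[str]:
    all_keywords = set()
    for block in blocks:
        all_keywords.update(extract_keywords_from_block(block, kw_model, top_n))
    return sorted(all_keywords)


class FakeKeyBERT:
    """Offline stand-in: same call shape as KeyBERT.extract_keywords for one document."""

    def extract_keywords(self, doc, keyphrase_ngram_range=(1, 1), stop_words="english", top_n=5):
        words = [w.strip(".,!?").lower() for w in doc.split() if len(w) > 3]
        return [(w, 1.0) for w in dict.fromkeys(words)][:top_n]


blocks = ["Technical audits matter.", "   ", "Audits improve rankings."]
print(extract_keywords_from_blocks(blocks, FakeKeyBERT(), top_n=2))
```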

Function: load_embedding_model

Function Summary

The load_embedding_model function loads a SentenceTransformer model that generates embeddings (vector representations) for text. These embeddings are essential for semantic similarity, clustering, and other NLP tasks. By default, it loads “all-MiniLM-L6-v2”. This compact model produces 384-dimensional embeddings and is fast enough to encode many page sections and queries, while still performing well on semantic-similarity tasks like the ones used in this project. The function returns the initialized model, ready for downstream steps such as similarity scoring or clustering.

Key Code Explanation

sentence_model = SentenceTransformer(model_name)

This line initializes the SentenceTransformer with the specified model. The model name can be changed to load different pre-trained sentence-transformers (e.g., RoBERTa, DeBERTa-based embeddings).

return sentence_model

The function returns the fully loaded transformer model so it can be reused across different parts of the project pipeline, ensuring efficiency and modularity.

Function embed_section_text

Summary of the Function

The embed_section_text function generates a numerical embedding vector for a given text input using a preloaded SentenceTransformer model. This embedding captures the semantic meaning of the text, allowing for comparisons between different pieces of content based on meaning rather than keywords alone. If the input text is empty or only whitespace, the function safely returns an empty list to avoid unnecessary computation or errors.

Key Code Explanations

if not text.strip(): return []

This line ensures that blank or whitespace-only inputs are handled gracefully. Instead of passing them to the embedding model (which would waste resources or cause issues), the function returns an empty list.

embed_model.encode([text])[0]

The text is wrapped in a list so that .encode() returns a two-dimensional array with one embedding per input string. Since only one text is passed, [0] selects the first (and only) row, which is the embedding vector for the input text.
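The sketch below shows why the `[0]` is needed. `FakeEncoder` is a hypothetical stand-in that behaves like `SentenceTransformer.encode` with a list input: it returns one row per string. Its three toy features are illustrative only.

```python
import numpy as np


class FakeEncoder:
    """Hypothetical stand-in for a SentenceTransformer: encode() returns a 2D array."""

    def encode(self, sentences):
        # One row per input string; toy 3-dimensional features.
        return np.array([[len(s), s.count(" "), s.count("e")] for s in sentences],
                        dtype=float)


def embed_section_text(text, embed_model):
    # Skip blank input instead of encoding it.
    if not text.strip():
        return []
    # encode([text]) has shape (1, dim); [0] selects the single row, shape (dim,).
    return embed_model.encode([text])[0]


vec = embed_section_text("meta path", FakeEncoder())
print(vec.shape)                                 # (3,)
print(embed_section_text("   ", FakeEncoder()))  # []
```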

Function: apply_keywords_and_embeddings

Summary of the Function

The apply_keywords_and_embeddings function enriches webpage sections and their sub-sections with keywords and semantic embeddings. It processes each section’s raw text blocks to:

- Extract top keywords (using a KeyBERT model).

- Generate an embedding vector (using a SentenceTransformer model).

- Apply the same process recursively to all nested sub-sections.

This ensures that every part of a document—from the main section down to the deepest sub-sections—is represented with both keyword-level and embedding-level features. These features later enable semantic similarity scoring, topic clustering, and intent matching for SEO tasks.

Key Code Explanations

raw_blocks = section.get('raw_blocks', [])

Retrieves the raw text blocks of the section. If a section does not contain any blocks, it defaults to an empty list. This ensures the function is safe and robust for varying document structures.

section_text = " ".join(raw_blocks)

Combines all text blocks into a single string, which provides the full textual context of the section for embedding. This step is crucial for capturing the semantic meaning at the section level.

section['keywords'] = extract_keywords_from_blocks(raw_blocks, kw_model, top_n)

Extracts the most relevant keywords or topics from the section using the KeyBERT model. These keywords help clients quickly identify what each section is about.

section['embedding'] = embed_section_text(section_text, embed_model)

Converts the section text into a semantic vector representation. This embedding allows comparison across queries and other sections based on meaning, not just surface words.

Recursive Call:

If the current section contains nested sub-sections, the function calls itself recursively. This ensures hierarchical coverage, so embeddings and keywords are generated for every level of content structure.
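Here is a condensed, runnable sketch of the recursion. It assumes sections are dicts and that nested sub-sections live under a `subsections` key. The real key name is not shown here, so treat it as hypothetical. The helper functions are simplified stand-ins, and the two model classes are stubs.

```python
def extract_keywords_from_blocks(blocks, kw_model, top_n=5):
    # Condensed stand-in for the helper described earlier (sorted for a stable order).
    found = set()
    for block in blocks:
        if block.strip():
            found.update(kw for kw, _ in kw_model.extract_keywords(block, top_n=top_n))
    return sorted(found)


def embed_section_text(text, embed_model):
    return embed_model.encode([text])[0] if text.strip() else []


def apply_keywords_and_embeddings(sections, kw_model, embed_model, top_n=5):
    for section in sections:
        raw_blocks = section.get("raw_blocks", [])  # missing blocks -> empty list
        section_text = " ".join(raw_blocks)         # full section context
        section["keywords"] = extract_keywords_from_blocks(raw_blocks, kw_model, top_n)
        section["embedding"] = embed_section_text(section_text, embed_model)
        # Recurse into nested sub-sections ("subsections" key is an assumption).
        if section.get("subsections"):
            apply_keywords_and_embeddings(section["subsections"], kw_model, embed_model, top_n)
    return sections


class StubKW:  # hypothetical KeyBERT stand-in
    def extract_keywords(self, doc, top_n=5):
        return [(w, 1.0) for w in doc.lower().split()[:top_n]]


class StubEmbed:  # hypothetical SentenceTransformer stand-in
    def encode(self, sentences):
        return [[float(len(s))] for s in sentences]


doc = [{"raw_blocks": ["Graph basics"],
        "subsections": [{"raw_blocks": ["Meta paths"]}, {"raw_blocks": []}]}]
apply_keywords_and_embeddings(doc, StubKW(), StubEmbed())
print(doc[0]["keywords"], doc[0]["subsections"][0]["embedding"])
# ['basics', 'graph'] [10.0]
```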

Function: slugify

Function Summary

The slugify function takes a string of text and converts it into a clean, URL- and identifier-friendly format. It removes special characters, converts text into lowercase, replaces non-alphanumeric characters with underscores, and trims the length to a maximum of 128 characters. This ensures that keywords, entities, and other identifiers can be safely used as node IDs in the graph or as filenames without causing formatting or parsing issues.

Key Code Explanations

s = unicodedata.normalize("NFKD", s)

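Applies Unicode NFKD normalization, which splits accented characters into a base letter plus a combining mark. For example, “é” becomes “e” followed by a combining acute accent. Together with the ASCII step below, this turns “café” into “cafe”.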

s.encode("ascii", "ignore").decode("ascii")

Strips away any non-ASCII characters, ensuring compatibility across systems and libraries.

re.sub(r'[^a-zA-Z0-9]+', '_', s).strip('_').lower()

Replaces all sequences of non-alphanumeric characters with underscores, trims extra underscores, and converts everything to lowercase.

return s[:128]

Ensures the slug is capped at 128 characters to avoid oversized node identifiers.
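Putting the four lines together gives the complete function. The examples show accent stripping and the 128-character cap:

```python
import re
import unicodedata


def slugify(s: str) -> str:
    # Split accented characters into base letter + combining mark ("é" -> "e" + U+0301).
    s = unicodedata.normalize("NFKD", s)
    # Drop everything outside ASCII, which removes the combining marks.
    s = s.encode("ascii", "ignore").decode("ascii")
    # Collapse runs of non-alphanumerics into "_", trim edge underscores, lowercase.
    s = re.sub(r"[^a-zA-Z0-9]+", "_", s).strip("_").lower()
    # Cap the length so node IDs stay short.
    return s[:128]


print(slugify("Café Menü: Top 10!"))  # cafe_menu_top_10
print(len(slugify("x" * 300)))        # 128
```

One side effect is worth knowing. Text written entirely in non-Latin scripts (for example, Japanese keywords) has no ASCII characters left after the second step, so it slugifies to an empty string. Different keywords of that kind would then share the same `kw_` node ID.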

Function: build_content_graph

Function Summary

The build_content_graph function constructs a semantic knowledge graph from content sections, their extracted keywords, entities, embeddings, and queries. Using NetworkX, it generates a rich graph structure with nodes representing sections, keywords, entities, and queries, and edges describing their relationships. These include:

- Structural links (parent-child section hierarchy).

- Semantic similarity edges (embedding-based).

- Keyword/entity associations with sections.

- Query connections to relevant sections, keywords, and entities (via lexical and embedding similarity).

This graph becomes the central structure for semantic content analysis, intent mapping, and SEO strategy, enabling visualization and scoring of relationships between user queries and website content.

Key Code Explanations

G = nx.Graph()

Initializes an empty NetworkX graph that will store nodes (sections, keywords, entities, queries) and edges.

Section Nodes:

Each section is added as a node with metadata like heading and a snippet of content, helping identify and label nodes during visualization.

Keyword Nodes & Edges:

Keywords extracted from sections are added as nodes (kw_{slug}). Edges between sections and keywords (has_keyword) represent topic associations.

Entity Nodes & Edges:

Named entities are added as nodes (ent_{slug}) and linked to the relevant section with has_entity edges. This helps identify entities of interest across sections.

Structural Parent-Child Edges:

A recursive helper function _traverse_add_structural builds edges between parent and sub-sections (struct_parent_child), preserving the document hierarchy.

Semantic Section Edges:

Sections with embeddings are compared pairwise, and an edge is added whenever their similarity exceeds the configured similarity threshold. This captures semantically related sections that are not adjacent in the document hierarchy.

Query Nodes & Edges:

Queries are added as nodes (query_{i}). They connect to:

- Sections with sufficient embedding similarity (query_section_sim).

- Keywords/Entities through lexical matches (query_has_keyword, query_has_entity).

Embedding-based Query -> Keyword/Entity Links:

If an embedding model is provided, similarity is computed between queries and keyword/entity embeddings. Edges (query_kw_sim, query_ent_sim) capture semantic overlaps beyond exact text matches.
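The dependency-free sketch below mirrors a subset of the edge types listed above: section-keyword, section-section, query-section, and lexical query-keyword. It uses plain dicts and tuples instead of NetworkX. Several details are hypothetical: entity nodes are omitted for brevity, and the `section_sim` relation name, the 0.8 threshold, and the function name are made up for illustration. The node ID formats follow the text (`section_<id>`, `kw_{slug}`, `query_{i}`). With NetworkX, each edge tuple would become a `G.add_edge(u, v, **attrs)` call.

```python
import math
import re


def slugify(s):
    # Simplified stand-in for the slugify function described above.
    return re.sub(r"[^a-z0-9]+", "_", s.lower()).strip("_")[:128]


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm > 0 else 0.0


def build_toy_graph(sections, queries, query_embs, sim_threshold=0.8):
    """Hypothetical sketch: returns (nodes, edges) instead of a NetworkX graph."""
    nodes, edges = {}, []
    for sec in sections:
        sid = f"section_{sec['id']}"
        nodes[sid] = {"type": "section", "heading": sec["heading"]}
        for kw in sec["keywords"]:
            kid = f"kw_{slugify(kw)}"
            nodes.setdefault(kid, {"type": "kw", "label": kw})
            edges.append((sid, kid, {"rel": "has_keyword", "weight": 1.0}))
    # Semantic section-section edges when similarity exceeds the threshold.
    for i, a in enumerate(sections):
        for b in sections[i + 1:]:
            sim = cosine(a["embedding"], b["embedding"])
            if sim > sim_threshold:
                edges.append((f"section_{a['id']}", f"section_{b['id']}",
                              {"rel": "section_sim", "weight": sim}))
    for i, (q, q_emb) in enumerate(zip(queries, query_embs)):
        qid = f"query_{i}"
        nodes[qid] = {"type": "query", "text": q}
        for sec in sections:  # embedding-based query -> section links
            sim = cosine(q_emb, sec["embedding"])
            if sim > sim_threshold:
                edges.append((qid, f"section_{sec['id']}",
                              {"rel": "query_section_sim", "weight": sim}))
        for nid, data in nodes.items():  # lexical query -> keyword links
            if data["type"] == "kw" and data["label"].lower() in q.lower():
                edges.append((qid, nid, {"rel": "query_has_keyword", "weight": 1.0}))
    return nodes, edges


sections = [
    {"id": 1, "heading": "Graph basics", "keywords": ["knowledge graph"], "embedding": [1.0, 0.0]},
    {"id": 2, "heading": "Meta paths", "keywords": ["meta path", "knowledge graph"],
     "embedding": [0.9, 0.1]},
]
nodes, edges = build_toy_graph(sections, ["what is a meta path"], [[0.0, 1.0]])
print(sorted(e[2]["rel"] for e in edges))
# ['has_keyword', 'has_keyword', 'has_keyword', 'query_has_keyword', 'section_sim']
```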

Function cosine_similarity

Function Summary

The cosine_similarity function calculates how similar two numerical vectors are by measuring the cosine of the angle between them. In this project, it is used to determine how closely a section’s embedding (its semantic representation) aligns with another embedding, such as a query or another section. This similarity measure is central to SEO-focused analysis because it reveals whether two pieces of text convey similar meanings, which helps in matching queries to relevant content.

Key Code Explanations

if vec1 is None or vec2 is None or len(vec1) == 0 or len(vec2) == 0:

This line ensures robustness by checking whether either input vector is missing or empty. If that is the case, the function immediately returns 0.0, meaning no similarity is detected. This prevents runtime errors when embeddings are unavailable.

dot = np.dot(vec1, vec2)

This calculates the dot product of the two vectors. The dot product is large when the vectors point in the same direction, but it also scales with their lengths, so on its own it is not a pure measure of direction.

norm = np.linalg.norm(vec1) * np.linalg.norm(vec2)

Here, the function computes the product of the magnitudes (lengths) of both vectors. This normalizes the dot product, allowing the similarity to be based solely on orientation rather than vector length.

return dot / norm if norm > 0 else 0.0

This final line divides the dot product by the product of magnitudes to get the cosine similarity. If the denominator is 0 (which would happen if either vector is a zero vector), it safely returns 0.0 instead of producing an error.
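Here is the complete function, sketched with NumPy from the four pieces above. Wrapping the result in `float()` is a small addition so that it prints as a plain Python number. The example calls exercise each guard clause described above.

```python
import numpy as np


def cosine_similarity(vec1, vec2):
    # Missing or empty embeddings count as "no similarity".
    if vec1 is None or vec2 is None or len(vec1) == 0 or len(vec2) == 0:
        return 0.0
    dot = np.dot(vec1, vec2)  # alignment, still scaled by vector lengths
    norm = np.linalg.norm(vec1) * np.linalg.norm(vec2)  # product of magnitudes
    # Dividing by the magnitudes leaves only the angle; guard against zero vectors.
    return float(dot / norm) if norm > 0 else 0.0


print(cosine_similarity([1, 0], [2, 0]))  # 1.0 (same direction, different length)
print(cosine_similarity([1, 0], [0, 3]))  # 0.0 (orthogonal)
print(cosine_similarity([1, 1], [0, 0]))  # 0.0 (zero vector guarded)
print(cosine_similarity([], [1.0]))       # 0.0 (empty embedding)
```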

Function embed_queries

Function Summary

The embed_queries function is responsible for generating semantic embeddings for a list of queries using a sentence-transformer model. These embeddings are numerical vector representations of the queries, enabling deeper comparison and alignment with webpage content. The function outputs a dictionary where each query string is mapped directly to its corresponding embedding vector.

Key Code Explanations

embeddings = embed_model.encode(queries)

This line uses the provided sentence-transformer model (embed_model) to encode the list of queries. The result is a list (or array) of dense numerical vectors, each representing the semantic meaning of its respective query.

return {query: emb for query, emb in zip(queries, embeddings)}

Here, a dictionary comprehension is used to pair each query string with its generated embedding. This makes the output structured and easily accessible for downstream tasks like similarity calculations or alignment with section embeddings.
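A minimal sketch follows, with a hypothetical `FakeEncoder` stub in place of the sentence-transformer model. The dictionary design has one consequence: duplicate query strings collapse into a single key.

```python
import numpy as np


class FakeEncoder:
    """Hypothetical stand-in for SentenceTransformer.encode (one row per input)."""

    def encode(self, sentences):
        return np.array([[len(s), s.count(" ")] for s in sentences], dtype=float)


def embed_queries(queries, embed_model):
    # One batched call encodes every query at once.
    embeddings = embed_model.encode(queries)
    # Pair each query string with its row of the embedding matrix.
    return {query: emb for query, emb in zip(queries, embeddings)}


q_embs = embed_queries(["seo audit", "meta path ranking"], FakeEncoder())
print(q_embs["seo audit"])  # [9. 1.]
```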

Function build_type_index_maps

Function Summary

This function constructs two mappings that help organize graph nodes by type and allow efficient indexing when working with adjacency matrices.

- type_to_nodes: A dictionary mapping each node type (e.g., section, query, keyword, entity) to a list of nodes of that type.

- node_to_index: A dictionary mapping each node type to another dictionary that assigns each node an index position.

It also includes a safeguard: if a node does not have an explicit type attribute, the function attempts to infer the type from the node’s identifier prefix.

Key Code Explanation

t = data.get("type")

Retrieves the type of the node from its attributes.

Fallback inference (e.g., if node.startswith("section_"))

If no type is found, the function guesses the type based on the node’s prefix such as section_, kw_, ent_, or q_.

type_to_nodes[t].append(node) and node_to_index[t][node] = idx

Adds the node into the correct list and records its index position for adjacency matrix construction.
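Here is a minimal sketch of the two maps. It takes `(node_id, attributes)` pairs, the same shape NetworkX yields from `G.nodes(data=True)`. The prefix table is an assumption based on the text: it accepts `query_` (the format used when query nodes are created) as well as `q_`. The `"unknown"` fallback for unrecognized IDs is hypothetical.

```python
from collections import defaultdict

# Hypothetical prefix table built from the prefixes mentioned in the text.
PREFIX_TYPES = [("section_", "section"), ("kw_", "kw"), ("ent_", "ent"),
                ("query_", "query"), ("q_", "query")]


def build_type_index_maps(nodes):
    """nodes: iterable of (node_id, attr_dict), like G.nodes(data=True)."""
    type_to_nodes = defaultdict(list)
    node_to_index = defaultdict(dict)
    for node, data in nodes:
        t = data.get("type")
        if t is None:  # fall back to inferring the type from the ID prefix
            t = next((typ for prefix, typ in PREFIX_TYPES if node.startswith(prefix)),
                     "unknown")
        idx = len(type_to_nodes[t])  # next free row/column for this type
        type_to_nodes[t].append(node)
        node_to_index[t][node] = idx
    return dict(type_to_nodes), dict(node_to_index)


nodes = [("section_1", {"type": "section"}), ("kw_graph", {}),
         ("section_2", {}), ("query_0", {"type": "query"})]
t2n, n2i = build_type_index_maps(nodes)
print(t2n)  # {'section': ['section_1', 'section_2'], 'kw': ['kw_graph'], 'query': ['query_0']}
print(n2i["section"]["section_2"])  # 1
```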

Function build_adj_matrices

Function Summary

This function creates sparse adjacency matrices (csr_matrix) for pairs of node types. Each matrix represents connections between nodes of two types, where the matrix entry (i, j) corresponds to the weighted edge between two nodes. Both directions are stored since the graph is undirected for path counting.

Key Code Explanation

for u,v,ed in G.edges(data=True):

Iterates over all edges in the graph, extracting nodes (u, v) and their attributes (ed).

t_u = G.nodes[u].get('type') and t_v = G.nodes[v].get('type')

Retrieves node types for adjacency grouping.

adj_entries[(t_u, t_v)][(i, j)] += float(ed.get('weight', 1.0))

Records edge weights into a nested dictionary keyed by node type pairs. Reverse direction (t_v, t_u) is also added for undirected analysis.

Construction of sparse matrices

Converts recorded edges into SciPy csr_matrix objects, enabling efficient numerical operations for meta-path calculations.
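The sketch below fills the adjacency matrices for a tiny hand-written graph. The original builds SciPy `csr_matrix` objects; this version uses dense NumPy arrays so the entries are easy to inspect, and it takes plain dicts and lists instead of a NetworkX graph. With SciPy, the recorded entries could instead be passed as `csr_matrix((data, (rows, cols)), shape=(n_a, n_b))`. The toy inputs are hypothetical.

```python
from collections import defaultdict

import numpy as np


def build_adj_matrices(nodes, edges, type_to_nodes, node_to_index):
    """Dense stand-in for the csr_matrix version; nodes: {id: attrs}, edges: [(u, v, attrs)]."""
    adj_entries = defaultdict(lambda: defaultdict(float))
    for u, v, ed in edges:
        t_u, t_v = nodes[u].get("type"), nodes[v].get("type")
        i, j = node_to_index[t_u][u], node_to_index[t_v][v]
        w = float(ed.get("weight", 1.0))
        adj_entries[(t_u, t_v)][(i, j)] += w  # forward direction
        adj_entries[(t_v, t_u)][(j, i)] += w  # reverse direction, so paths can run both ways
    adj_mats = {}
    for (t_a, t_b), entries in adj_entries.items():
        M = np.zeros((len(type_to_nodes[t_a]), len(type_to_nodes[t_b])))
        for (i, j), w in entries.items():
            M[i, j] = w
        adj_mats[(t_a, t_b)] = M
    return adj_mats


# Hand-written toy inputs (hypothetical); normally these come from the graph and index maps.
nodes = {"query_0": {"type": "query"}, "kw_graph": {"type": "kw"},
         "section_1": {"type": "section"}, "section_2": {"type": "section"}}
type_to_nodes = {"query": ["query_0"], "kw": ["kw_graph"], "section": ["section_1", "section_2"]}
node_to_index = {t: {n: i for i, n in enumerate(ns)} for t, ns in type_to_nodes.items()}
edges = [("query_0", "kw_graph", {"weight": 1.0}), ("section_2", "kw_graph", {"weight": 0.5})]

adj = build_adj_matrices(nodes, edges, type_to_nodes, node_to_index)
print(adj[("kw", "section")].tolist())  # [[0.0, 0.5]]
```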

Function score_metapath

Function Summary

This function calculates the score matrix for a given meta-path, which is a sequence of node types that defines a traversal path through the graph (e.g., ['query', 'kw', 'section']). The output is a sparse matrix where entries represent the connection strength between starting and ending nodes along the meta-path.

Key Code Explanation

for i in range(len(path_types)-1):

Iterates over consecutive type pairs in the path, such as (query → kw, kw → section).

if key not in adj_mats: return None

If a required adjacency matrix is missing, the function exits early, signaling that the meta-path cannot be computed.

M = mats[0]
for m in mats[1:]: M = M.dot(m)

Multiplies adjacency matrices in sequence, effectively chaining the connections across the meta-path.
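Here is a minimal sketch of the chaining step, using two toy matrices. Each entry of the product sums weights over every intermediate keyword. Query 0 shares two keywords with section 0, so it scores 2 there. SciPy sparse matrices also provide `.dot()`, so the same loop works unchanged with `csr_matrix` inputs.

```python
import numpy as np


def score_metapath(adj_mats, path_types):
    """Chain adjacency matrices along a meta-path such as ['query', 'kw', 'section']."""
    mats = []
    for i in range(len(path_types) - 1):
        key = (path_types[i], path_types[i + 1])
        if key not in adj_mats:  # a missing hop means the path cannot be scored
            return None
        mats.append(adj_mats[key])
    M = mats[0]
    for m in mats[1:]:
        M = M.dot(m)  # entry (a, c) sums over every intermediate node b
    return M


# Toy matrices: 2 queries x 3 keywords, 3 keywords x 2 sections.
adj_mats = {
    ("query", "kw"): np.array([[1, 1, 0],
                               [0, 0, 1]], dtype=float),
    ("kw", "section"): np.array([[1, 0],
                                 [1, 1],
                                 [0, 1]], dtype=float),
}
print(score_metapath(adj_mats, ["query", "kw", "section"]).tolist())
# [[2.0, 1.0], [0.0, 1.0]]
print(score_metapath(adj_mats, ["query", "ent", "section"]))  # None
```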

Function compute_meta_path_scores_all

Function Summary

This function orchestrates the full meta-path scoring pipeline:

- It builds type/index maps and adjacency matrices.

- Computes scores for each provided meta-path.

- Normalizes scores row-wise for consistency.

- Applies optional weighting across paths.

- Returns combined scores linking query nodes to section nodes, along with per-path normalized results and lookup mappings.

This function is central to connecting user queries with relevant document sections through meta-path reasoning.

Key Code Explanation

type_to_nodes, node_to_index = build_type_index_maps(G)

Builds organizational maps for graph nodes to prepare for adjacency matrix construction.

adj_mats = build_adj_matrices(G, type_to_nodes, node_to_index)

Generates adjacency matrices for all type pairs in the graph.

Meta-path scoring with score_metapath

Iterates through all meta-paths, calculating sparse score matrices for each, and storing them in per_path.

Row normalization

Each score matrix is normalized row-wise by dividing values by their row sum, ensuring relative weights are comparable across queries.

Weighted combination

If weights are provided, the matrices are combined proportionally. If not, equal weighting is applied by default.

Mapping results back to nodes

Finally, the combined matrix is unpacked into a dictionary structure:

- Keys: query node IDs

- Values: dictionaries of section nodes with their scores.
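The normalization, weighting, and unpacking steps can be sketched with NumPy as follows. The sketch makes three assumptions: every meta-path starts at `query` and ends at `section`, so all score matrices share one shape; rows that sum to zero are left as zeros; and the default equal weight is `1 / number_of_paths`. The function names are hypothetical.

```python
import numpy as np


def row_normalize(M):
    # Divide each row by its sum; rows that sum to 0 stay all-zero.
    M = np.asarray(M, dtype=float)
    sums = M.sum(axis=1, keepdims=True)
    return np.divide(M, sums, out=np.zeros_like(M), where=sums > 0)


def combine_paths(per_path, weights=None):
    # Assumed default: equal weights of 1 / number of meta-paths.
    if weights is None:
        weights = {name: 1.0 / len(per_path) for name in per_path}
    return sum(weights[name] * row_normalize(M) for name, M in per_path.items())


def to_query_section_dict(combined, query_nodes, section_nodes):
    # Unpack matrix rows/columns into {query_id: {section_id: score}}.
    return {q: {s: float(combined[i, j]) for j, s in enumerate(section_nodes)}
            for i, q in enumerate(query_nodes)}


per_path = {
    "query-kw-section": np.array([[2.0, 1.0], [0.0, 1.0]]),
    "query-ent-section": np.array([[0.0, 1.0], [0.0, 0.0]]),
}
combined = combine_paths(per_path)
scores = to_query_section_dict(combined, ["query_0", "query_1"], ["section_1", "section_2"])
print({q: {s: round(v, 3) for s, v in d.items()} for q, d in scores.items()})
# {'query_0': {'section_1': 0.333, 'section_2': 0.667}, 'query_1': {'section_1': 0.0, 'section_2': 0.5}}
```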

Function analyze_url_query_alignment

Function Summary

This function implements a full pipeline to align user queries with sections of web pages, leveraging meta-path scoring in a content graph.

Key capabilities include:

- Extracting and preprocessing structured sections from each URL.

- Enriching sections with NER, keywords, and embeddings.

- Building a typed graph connecting queries, keywords, entities, and sections.

- Computing meta-path–based similarity scores between queries and sections.

- Returning enriched recommendations per query, including combined scores, per-path contributions, and snippets.

- Producing page-level scores based on query-to-section alignments.

The output is a dictionary mapping each URL to:

- Extracted sections, the graph, and an ID lookup.

- Per-query recommended sections with scoring details.

- An overall page-level score summarizing relevance across queries.

Key Code Explanation

query_embeddings = embed_queries(queries, embed_model)

Precomputes embeddings for all queries to avoid redundant encoding later. If embedding fails, falls back to an empty dictionary.

Definition of META_PATHS and META_WEIGHTS

Establishes default meta-paths like ['query', 'kw', 'section'] and ['query', 'ent', 'section'], with equal weights for combining them.

Helper functions _flatten_sections and _snippet

- _flatten_sections: Recursively flattens nested section hierarchies into a single list.

- _snippet: Extracts a short preview text from each section, prioritizing raw blocks.

Section extraction and enrichment

- extract_structured_sections: Fetches hierarchical content from a URL.

- preprocess_sections: Cleans sections (removes boilerplate, filters by word count).

- apply_ner_to_sections: Adds named entity recognition tags to sections.

- apply_keywords_and_embeddings: Enriches sections with extracted keywords and embeddings.

id_lookup construction

Builds a dictionary mapping section nodes (e.g., “section_123”) to metadata like URL, heading, level, snippet, and embedding status. This lookup supports later result enrichment.

G = build_content_graph(…)

Builds a typed graph connecting queries, sections, keywords, and entities, with edges based on thresholds for semantic similarity.

Meta-path scoring

- compute_meta_path_scores_all: Produces per-path normalized score matrices and a combined weighted score matrix.

- These matrices quantify how strongly each query connects to each section through different relation types.

Building per-query results

- For each query, retrieves the combined scores to rank sections.

- Enriches each recommended section with metadata (id_lookup) and per-path contributions.

- Computes page_score as the average of the top-k section scores.
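Here is a sketch of the per-query ranking and page score. The text above does not spell out how top-k scores are pooled into one page score, so this version assumes the mean over all top-k scores across queries. It also skips sections whose combined score is zero. Both choices are assumptions, and the input shape and function name are hypothetical.

```python
def rank_and_score(query_scores, top_k=3):
    """query_scores: {query_id: {section_id: combined_score}} (hypothetical input shape)."""
    results, top_scores = {}, []
    for qid, sec_scores in query_scores.items():
        # Highest combined score first; drop sections with no connection at all.
        ranked = sorted(((s, v) for s, v in sec_scores.items() if v > 0),
                        key=lambda item: item[1], reverse=True)[:top_k]
        results[qid] = ranked
        top_scores.extend(v for _, v in ranked)
    # Assumed aggregation: mean of all top-k scores pooled across queries.
    page_score = sum(top_scores) / len(top_scores) if top_scores else 0.0
    return results, page_score


scores = {"query_0": {"section_1": 0.6, "section_2": 0.3, "section_3": 0.1},
          "query_1": {"section_1": 0.0, "section_2": 0.4}}
results, page_score = rank_and_score(scores, top_k=2)
print(results["query_0"])    # [('section_1', 0.6), ('section_2', 0.3)]
print(round(page_score, 3))  # 0.433
```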

Function display_analysis_results

The display_analysis_results function is responsible for presenting the output of the meta-path based recommendation system in a clear, human-readable format. It processes the analysis results for each URL, handles potential errors, and then organizes the query-level recommendations by sorting them according to their meta-path score. This ensures users can quickly identify which content sections best align with their queries and why.

Key features of this function include:

- Query coverage reporting: Displays the total number of queries processed for a URL and how many queries resulted in at least one matched section.

- Top recommendations display: For each query, the function highlights the best-matching sections based on meta-path score, limited to the top k recommendations for clarity.

- Contextual details: Each recommendation includes its section heading, section ID, meta-path score, and optionally a snippet of text for better interpretability.

This function does not alter data or perform computations. Instead, it provides an interpretable interface for users to understand the recommendation results clearly and make practical use of them.
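As a rough illustration of the report layout, here is a hypothetical formatter that returns the text instead of printing it. The field names (`heading`, `section_id`, `score`, `snippet`) are assumptions based on the details listed above.

```python
def format_query_results(url, results, top_k=3, show_snippet=True):
    """Hypothetical formatter; results maps each query to a list of recommendation dicts."""
    matched = sum(1 for recs in results.values() if recs)
    lines = [f"URL: {url}",
             f"Queries processed: {len(results)} | with at least one match: {matched}"]
    for query, recs in results.items():
        lines.append(f"Query: {query}")
        if not recs:
            lines.append("  No strong matches found")
            continue
        # Highest meta-path score first, then keep only the top k for readability.
        for rec in sorted(recs, key=lambda r: r["score"], reverse=True)[:top_k]:
            lines.append(f"  {rec['heading']} [{rec['section_id']}] score={rec['score']:.3f}")
            if show_snippet and rec.get("snippet"):
                lines.append(f"    {rec['snippet']}")
    return "\n".join(lines)


demo = {
    "meta path scoring": [
        {"heading": "How scoring works", "section_id": "section_2", "score": 0.41,
         "snippet": "Each meta-path contributes a normalized score..."},
        {"heading": "Introduction", "section_id": "section_1", "score": 0.72, "snippet": ""},
    ],
    "pricing": [],
}
out = format_query_results("https://example.com/guide", demo, top_k=2)
print(out)
```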

Result Analysis and Explanation

This section provides a detailed examination of the outcomes produced by the meta-path based recommendation framework. The results highlight how the system aligns user queries with relevant sections of content by leveraging graph-based relationships between queries, keywords, entities, and document structures.

Query-Section Alignment

The central output of the framework is the mapping of queries to relevant document sections. Each query is associated with the most relevant parts of the text, allowing for precise recommendations rather than broad document-level retrieval.

- Queries are not matched to the entire content but to specific sections where the semantic meaning is most aligned.

- This approach increases the interpretability of results, as the recommendations can be traced back to distinct headings, sub-sections, or passages.

- The granularity ensures that the recommendation process focuses on contextually significant fragments, which improves the quality of retrieval.

Meta-Path Based Recommendations

The recommendation system follows the meta-path principle:

Query -> Keyword/Entity -> Section

- By constructing this path, the system identifies indirect relationships between a user’s query and sections of the document.

- The effectiveness lies in capturing both explicit textual matches and semantic overlaps derived from embeddings and keywords.

- Ranking is applied on these meta-path connections, ensuring that the sections most strongly connected through multiple shared entities/keywords appear higher in the recommendations.

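Because graph connectivity is part of the scoring, this structure can capture relationships that naive text matching misses. One example is a section that shares several keywords or entities with a query without repeating its exact wording.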
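The default scoring can be summarized as matrix algebra. This sketch assumes the two default meta-paths, row normalization, and equal weights of 1/2. $A_{q,k}$, $A_{k,s}$, $A_{q,e}$ and $A_{e,s}$ denote the query-keyword, keyword-section, query-entity and entity-section adjacency matrices, and $S_{ij}$ is the combined score of query $i$ for section $j$:

```latex
\begin{aligned}
S^{(\mathrm{kw})} &= A_{q,k}\,A_{k,s}, \qquad S^{(\mathrm{ent})} = A_{q,e}\,A_{e,s},\\
\tilde{S}^{(p)}_{ij} &= \frac{S^{(p)}_{ij}}{\sum_{j'} S^{(p)}_{ij'}} \quad \text{(rows summing to 0 stay 0)},\\
S &= \sum_{p \in \{\mathrm{kw},\,\mathrm{ent}\}} w_p\,\tilde{S}^{(p)}, \qquad w_{\mathrm{kw}} = w_{\mathrm{ent}} = \tfrac{1}{2}.
\end{aligned}
```

Each entry $S^{(\mathrm{kw})}_{ij} = \sum_k (A_{q,k})_{ik}\,(A_{k,s})_{kj}$ adds up the weights of every keyword path from query $i$ to section $j$. This is why sections sharing more, or more heavily weighted, keywords with a query rank higher.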

Recommendation Ranking

Although the displayed results present the recommended sections in ranked order without explicitly showing scores, the underlying process relies on meta-path scoring and connection strength.

- Recommendations are ordered based on the number and weight of shared connections (keywords, entities, or semantic edges) between a query and a section.

- Higher-ranked sections can thus be interpreted as more strongly connected to the query intent.

- When multiple sections match, this ranking mechanism prioritizes those with deeper or denser relational evidence.

Section-Level Insights

The analysis extends beyond document-level retrieval and provides insights at the section level.

- Each recommended section includes not only its heading and position in the document but also a snippet, offering an immediate sense of why it was chosen.

- These snippets help validate the relevance of the recommendation and serve as transparent evidence of the reasoning process.

- Additionally, metadata such as section IDs and levels (e.g., H2, H3 headings) provide structural context, allowing users to locate and interpret the recommendations in the original content.

Query Coverage

A key aspect of the output is the breadth of query coverage:

- Some queries may receive multiple strong matches across different sections, showing the versatility of the framework.

- Other queries may have fewer connections, reflecting either content gaps or the specificity of the query.

- This behavior provides actionable insights, as clients can identify areas where content aligns well with user interests and where improvements or expansions may be needed.

Visualization of Results

Visualization plays a crucial role in interpreting the meta-path based recommendations. The project integrates multiple plots to make the results intuitive:

· Query-Section Graphs

- These network diagrams display the connections between queries and recommended sections.

- Nodes represent queries and sections, while edges represent recommendation strength.

- Node size and edge weight provide a visual cue for importance and connection density.

· Bar Charts of Top Recommendations

- Horizontal bar charts summarize the top recommended sections per query, ordered by edge weight.

- This provides a straightforward comparison of recommendation strength across queries.

· Node Type Distribution Charts

- Bar plots showing the distribution of node types (queries, sections, keywords, entities, etc.) within the graph.

- These highlight the balance and diversity of graph construction, offering insights into how well different types of information contribute to recommendations.

The combination of these visualizations not only explains the recommendations but also validates the graph-based methodology as a transparent and explainable framework.

Strategic Insights

From a strategic perspective, the results offer valuable insights:

- Content Validation: By mapping queries to specific sections, clients can verify whether their content effectively addresses user intents.

- Content Gap Analysis: Queries with weak or no matches indicate opportunities for new or enhanced content.

- Recommendation Reliability: Ranked outputs surface the strongest matches first, so clients can review the most impactful results before the rest.

- Scalability: The meta-path framework can adapt to new queries or expanded content with minimal changes, ensuring long-term applicability.

Q&A on Result Interpretation and User Actions

How do the results help me identify which sections of my content are most aligned with user queries?

The analysis results clearly highlight sections that have been recommended for each query based on meta-path scores. These scores act as a relevance signal, showing how strongly a section matches the query intent. For instance, if a section consistently scores higher than others across multiple queries, it signals strong semantic alignment with user needs.

Prioritize reinforcing and expanding these high-scoring sections, as they already capture user intent well. This ensures stronger visibility in SERPs and higher topical authority.

What do I do if the analysis shows that no strong matches were found for certain queries?

When the system reports “No strong matches found” for a query, it highlights a content gap. This means the query intent exists in your audience’s search behavior but your page doesn’t cover it effectively. Ignoring this would limit your content’s reach and weaken topical coverage.

Treat these gaps as opportunities. Create new content sections or refine existing ones to explicitly address the missing intent. This ensures you build comprehensive coverage around target topics.

How can the snippet-based recommendations help my team make improvements quickly?

The system provides content snippets from the recommended sections so you can immediately see why a section was flagged as relevant. Instead of scanning the entire page, you get context-rich previews that show how intent alignment was established.

Use snippets to guide editorial changes quickly. For example, if a snippet matches part of a query but misses depth, your writers can expand it into a more comprehensive answer without restructuring the entire page.

Why are meta-path scores important for prioritizing recommendations?

Meta-path scores combine structural context (e.g., headings, section position) with semantic similarity to quantify alignment. A section with a high score is not just semantically close to a query but also well-situated in the page’s discourse flow. This dual consideration ensures recommendations are practical and trustworthy.

Focus your optimization efforts starting from high-scoring matches, as they represent quick wins. Lower-scoring matches can then be revisited for long-term improvements.

What does it mean if certain queries have strong alignment with multiple content sections?

This indicates that the page already provides solid coverage for these queries, with multiple sections reinforcing the same intent. From an SEO perspective, this redundancy strengthens authority because search engines recognize repeated, contextually relevant reinforcement of the topic.

- Keep these sections well-optimized and internally consistent to avoid diluting the signal.

- Use this strength to internally link from these sections to related, weaker-performing content across your site.

- Consider consolidating overlapping sections if they cause repetition, but preserve the coverage depth that makes the page authoritative.

What if some queries show only weak similarity with all content sections?

Weak similarity means the page does not adequately cover these queries. Search engines may interpret this as a content gap, reducing the likelihood of ranking for those terms.

- Identify the specific queries with low coverage and create new dedicated subsections targeting them.

- Use the detected gap as a guide for content expansion strategy — these queries represent unaddressed user needs that your competitors may already be covering.

- If the queries are commercially important, prioritize them in the next round of content updates.

How can the insights help in improving internal linking across the site?

The analysis highlights which sections strongly represent specific intents. By mapping these intents to related queries or topics elsewhere on your site, you can build intent-based internal links. This ensures that authority from well-aligned sections flows naturally to pages that need ranking support.

- For every strongly aligned section, link out to other pages where intent coverage is weaker or emerging.

- Build an internal linking map based on detected intent strengths and weaknesses.

- Use these links not only for SEO authority flow but also to improve user navigation and topic pathways.

How do these results help in planning long-form content updates?

The similarity scores reveal the flow and coverage of intent across long-form content. If some sections drift away from the main intent, it signals a potential ranking inconsistency. On the other hand, if coverage is clustered too tightly, opportunities for content diversification may be missed.

- Strengthen the topic flow by merging or refining sections that stray too far from the dominant intent.

- Expand content in areas where intent diversity is missing — this ensures the page answers a broader set of user needs while staying consistent.

- Regularly re-run the analysis to track whether new updates maintain or improve the balance.

How should users act if multiple high-priority queries overlap with similar content sections?

Overlap suggests the content may be cannibalizing itself — different sections targeting similar terms without enough differentiation. This weakens clarity for search engines and may scatter ranking signals.

- Audit overlapping sections and decide whether to merge them into one authoritative block or keep them separate but with clearer focus per query.

- Adjust headings and subheadings to clarify which query each section is meant to address.

- If the overlap cannot be resolved within the page, consider splitting into separate focused articles to maximize topical authority.

Can the results highlight competitive advantages for my content?

Yes. If your page consistently shows strong matches for high-intent queries while competitors miss them, you are positioned to capture greater search visibility. Conversely, if gaps are detected, it reveals areas competitors may already be exploiting.

Leverage strong matches as proof points in your competitive content audits, and fill in uncovered gaps to defend against competitor advantage.

Final thoughts

This project demonstrates how meta-path based query-to-content alignment can be practically applied to SEO and content strategy. By combining structured section extraction, semantic similarity, and meta-path scoring, the system identifies which parts of a webpage are most relevant to user queries and highlights where gaps exist. The structured recommendations are not limited to raw similarity scores; instead, they integrate both semantic depth and page structure, ensuring that the results are actionable and directly tied to user intent.

Through this implementation, clients gain a clear framework for:

- Identifying strengths — Sections that consistently align with multiple queries, showing strong topical coverage.

- Pinpointing weaknesses — Queries where no strong matches were found, revealing content gaps and opportunities.

- Accelerating content optimization — Snippet-level recommendations that allow teams to act quickly and with precision.

- Supporting internal linking — Recognizing alignment patterns across sections and pages, which can be leveraged for stronger content flow and authority building.

Overall, this approach bridges the gap between raw NLP analysis and real-world SEO actions. It ensures that query intent is systematically mapped to structured page sections, enabling better alignment, clearer recommendations, and more effective editorial decision-making. By anchoring results in meta-path logic, the system provides clients with not only what is relevant, but also why it is relevant—a critical step toward building comprehensive, high-performing content strategies.
