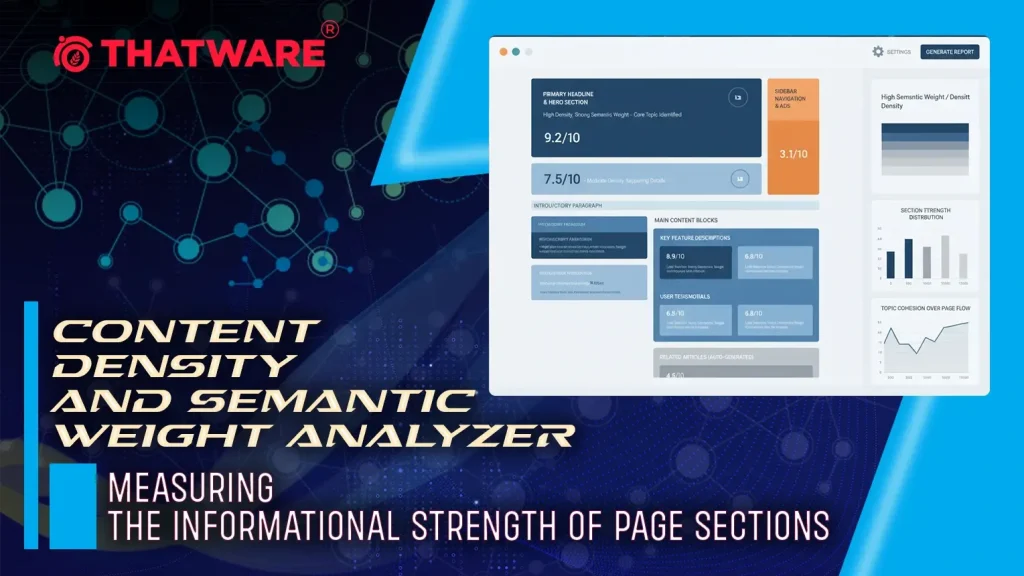

The Content Density and Semantic Weight Analyzer is designed to evaluate the informational strength and relevance of web page content at a granular, section level. By combining semantic embeddings with quantitative metrics such as semantic density, semantic weight, and redundancy, the project identifies high-value content areas, assesses page balance, and provides actionable insights for improving SEO performance and content strategy.

This analyzer processes individual page sections, calculates their semantic contribution relative to the entire page, and measures how closely each section aligns with targeted queries. It highlights sections with the highest content relevance, detects redundant or low-value areas, and quantifies overall content balance and dominance. The output enables SEO professionals to make informed content optimization decisions, ensuring that pages are both comprehensive and efficient in conveying information.

Key outcomes of this project include:

- Identification of top sections contributing most to page relevance and informational value.

- Quantification of semantic density and weight, allowing assessment of how information is distributed across a page.

- Detection of redundant content and low-value areas to optimize content efficiency.

- Query-specific insights, enabling alignment of page content with strategic SEO objectives.

The project ensures that SEO strategists and content teams can prioritize updates and focus resources on high-impact areas, ultimately improving search visibility and user engagement.

Project Purpose

The purpose of the Content Density and Semantic Weight Analyzer is to provide SEO professionals and content teams with a precise, actionable evaluation of web page content at the section level. In modern SEO, not all page sections contribute equally to search visibility, user engagement, or informational clarity. This project addresses that challenge by quantifying the semantic strength and relevance of each section relative to targeted queries and overall page content.

Specifically, this project aims to:

- Assess Content Quality at Granular Level: By analyzing each section separately, it identifies which parts of a page carry the most meaningful information, allowing clients to focus on high-impact content rather than treating the page as a single monolithic unit.

- Align Content with SEO Objectives: The analyzer measures the semantic relevance of sections against strategic SEO queries. This ensures that content is not only dense but also targeted, helping pages rank effectively for the intended search terms.

- Optimize Content Distribution and Balance: Pages often suffer from uneven content distribution, where some sections dominate the informational load while others provide minimal value. The project calculates dominance and content balance metrics to highlight such disparities.

- Detect Redundancy and Improve Efficiency: Redundant or overlapping content can dilute page value and confuse search engines. By identifying high-redundancy sections, clients can streamline content, reducing repetition while maintaining or improving informational coverage.

- Support Data-Driven Decision Making: The generated insights empower SEO strategists to make informed decisions regarding content revision, expansion, or removal, ensuring that every page section contributes effectively to both user experience and search engine visibility.

In essence, the project equips clients with an in-depth, quantifiable understanding of page content, transforming subjective content assessment into measurable, actionable insights that improve SEO performance and user engagement.

Project’s Key Topics Explanation and Understanding

The Content Density and Semantic Weight Analyzer project is grounded in several core concepts that collectively define how the quality, relevance, and impact of web page sections are measured. Understanding these topics is essential for appreciating the methodology and outputs of the project.

Content Density

Content density refers to the concentration of meaningful information within a section of a web page relative to its length. High-density sections convey more relevant information per unit of text, making them more impactful for both users and search engines. Measuring content density allows SEO professionals to identify underperforming sections, overstuffed content, and areas where information could be enriched.

Key points:

- Focuses on semantic content rather than just word count.

- Helps detect sections that may appear substantial but provide limited informational value.

- Supports balanced content distribution across the page, preventing dominance by a few sections.

Semantic Weight

Semantic weight quantifies the informational importance of a section based on its contribution to the overall meaning of the page and its alignment with strategic queries. Unlike content density, which measures concentration, semantic weight emphasizes the significance of the content in context.

Key points:

- Incorporates both the section’s length and its relevance to target topics.

- Helps prioritize sections that carry the most actionable information for SEO performance.

- Assists in identifying key sections that should be highlighted or optimized for search engines.

Section-Level Analysis

Unlike page-level metrics, which treat content as a single block, section-level analysis examines each subsection independently. This approach provides a granular understanding of which parts of the page drive the most value.

Key points:

- Enables precise optimization and targeted content revisions.

- Facilitates strategic allocation of effort in content creation and improvement.

- Supports detailed reporting to clients on actionable insights.

Query Relevance and Semantic Alignment

Each section is evaluated against a set of predefined queries relevant to the client’s SEO goals. This semantic alignment ensures that content not only carries information but also addresses the specific topics and questions users are searching for.

Key points:

- Uses embedding-based similarity metrics to quantify relevance.

- Helps identify high-performing content that is likely to rank well for targeted queries.

- Highlights content gaps where new sections or modifications are needed.

Redundancy Detection

Redundant content reduces page efficiency and may negatively affect SEO. This project measures redundancy at the section level, identifying overlapping content within the page.

Key points:

- Assesses overlap between sections using semantic similarity scores.

- Labels sections as low, medium, or high redundancy to guide content refinement.

- Supports efficient page structuring and prevents dilution of high-value information.
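The labeling step can be pictured with a rough sketch like the one below. It is not the project's actual code: it assumes each section's redundancy score is its highest cosine similarity to any other section, and the thresholds (0.6 and 0.8) and the function names are illustrative assumptions.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def redundancy_labels(embeddings, medium=0.6, high=0.8):
    """Label each section by its highest similarity to any other section.

    The thresholds are illustrative assumptions, not values from the project.
    """
    labels = []
    for i, emb in enumerate(embeddings):
        others = [cosine(emb, other) for j, other in enumerate(embeddings) if j != i]
        score = max(others, default=0.0)
        if score >= high:
            label = "high"
        elif score >= medium:
            label = "medium"
        else:
            label = "low"
        labels.append((score, label))
    return labels

# Toy 2-D "embeddings": the first two point in nearly the same direction.
toy = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
result = redundancy_labels(toy)
```

In this toy input the first two sections would be flagged as highly redundant with each other, while the third stays low because it points in a different direction.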

Content Balance and Dominance

These metrics evaluate how evenly the semantic weight is distributed across the page. A page dominated by a single section may underutilize available space and miss opportunities for additional content optimization.

Key points:

- Dominance score: highlights sections disproportionately influencing the page.

- Content balance: measures the uniformity of informational contribution across all sections.

- Guides clients in achieving an even and effective spread of information.
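The write-up does not give formulas for these two metrics, so the following is only a sketch under stated assumptions: dominance is taken to be the largest section's share of total semantic weight, and balance is taken to be the normalized Shannon entropy of those shares. The function name and both definitions are hypothetical.

```python
import math

def dominance_and_balance(weights):
    """Return (dominance, balance) for a list of non-negative section weights.

    dominance: largest section's share of the total weight.
    balance:   entropy of the shares divided by log(n); 1.0 means perfectly
               even, values near 0 mean one section carries almost everything.
    Both definitions are assumptions made for this sketch.
    """
    total = sum(weights)
    if len(weights) < 2 or total <= 0:
        raise ValueError("need at least two sections with positive total weight")
    shares = [w / total for w in weights]
    dominance = max(shares)
    entropy = -sum(s * math.log(s) for s in shares if s > 0)
    balance = entropy / math.log(len(shares))
    return dominance, balance

even = dominance_and_balance([1.0, 1.0, 1.0, 1.0])
skewed = dominance_and_balance([9.0, 0.5, 0.5])
```

With these definitions, four equally weighted sections score a balance close to 1.0, while a page where one section holds 90% of the weight scores well below 0.5.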

Q&A: Project Value and Importance

What is the main objective of this project?

The project aims to provide a structured, data-driven approach to evaluating web page content at the section level. By measuring informational strength and semantic relevance, it enables SEO professionals to understand which parts of a page contribute most to user value and search performance. This ensures that optimization efforts are precise, effective, and aligned with business goals.

How does this project improve content analysis?

Traditional content audits often assess pages as a whole, missing nuanced differences between sections. This project breaks content into individual sections, analyzing their density and semantic importance. This granular approach allows clients to identify strengths, weaknesses, and opportunities within the page structure, making content audits more actionable.

What are the key features of the project?

Key features include:

- Section-level semantic analysis: Evaluates the relevance and richness of each section independently.

- Content density measurement: Determines how much meaningful information exists relative to section length.

- Semantic weight assessment: Measures the informational importance of content in relation to strategic SEO topics.

- Redundancy detection: Identifies overlapping or duplicate content to streamline pages.

These features together provide a comprehensive picture of content quality, relevance, and efficiency.

How does this benefit SEO strategists and content teams?

SEO professionals gain actionable insights into which sections are most valuable, which areas require improvement, and where redundancy can be reduced. Content teams can prioritize updates based on data rather than intuition, improving content efficiency, alignment with user intent, and overall page effectiveness.

Why is measuring semantic weight important?

Semantic weight helps identify content that carries significant informational value. By understanding which sections are central to the page’s informational goals, strategists can ensure that high-value content is prominent, relevant, and optimized for search intent, enhancing both SEO performance and user experience.

How does content density contribute to content strategy?

Content density highlights sections that deliver substantial information concisely. High-density sections tend to be more informative and engaging, while low-density sections may indicate gaps or unnecessary filler. This allows teams to optimize content layout and improve overall readability and effectiveness.

How does redundancy detection add value?

Redundancy detection identifies sections with overlapping information, helping reduce duplication. This ensures that each part of a page adds unique value, improving clarity, user experience, and the overall quality perceived by both search engines and users.

How can clients leverage this project for strategic decisions?

Clients can use insights from the project to guide content creation, optimization, and restructuring decisions. It provides a framework for evaluating content systematically, prioritizing high-impact updates, and aligning page structure with target SEO objectives.

What is the practical impact of section-level analysis?

By analyzing sections individually rather than treating the page as a single unit, the project enables precise, focused optimizations. This ensures that resources are invested where they will have the greatest impact, improving content quality, search visibility, and user engagement.

Libraries Used

time

The time library is part of Python’s standard library and provides various time-related functions, such as measuring the duration of code execution or introducing delays in a program. It allows precise measurement and control over time-dependent operations, which is often necessary for performance monitoring.

In this project, time is used to track the execution duration of key functions and pipelines. Measuring processing time is important to ensure that content analysis, embedding generation, and query evaluation are efficient and scalable for multiple web pages.
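A minimal sketch of this kind of timing, assuming a small helper named timed (hypothetical, not part of the project) that wraps any callable:

```python
import time

def timed(func, *args, **kwargs):
    """Run func and return (result, elapsed_seconds)."""
    start = time.perf_counter()
    result = func(*args, **kwargs)
    elapsed = time.perf_counter() - start
    return result, elapsed

# Stand-in workload; in the pipeline this would be a fetch or embedding call.
total, seconds = timed(sum, range(1000))
```

time.perf_counter is used here because it is a monotonic, high-resolution clock intended for measuring short durations.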

re

The re library provides support for regular expressions in Python, allowing pattern-based searching, matching, and text manipulation. It is widely used for string processing, cleaning, and extracting structured information from unstructured text.

In this project, re is used to clean and preprocess URLs and text segments. For example, it is used to trim URLs for plots, remove unwanted prefixes (http, https, www), and extract or sanitize text content from web pages for semantic analysis.
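As an illustration of the URL trimming described above, here is a small sketch. The function name trim_url, the max_len parameter, and the trailing-slash handling are assumptions made for this example.

```python
import re

def trim_url(url, max_len=40):
    """Strip the scheme and a leading 'www.', then shorten for plot labels."""
    trimmed = re.sub(r"^https?://", "", url.strip(), flags=re.IGNORECASE)
    trimmed = re.sub(r"^www\.", "", trimmed, flags=re.IGNORECASE)
    trimmed = trimmed.rstrip("/")
    if len(trimmed) > max_len:
        # Keep the label within max_len characters, including the ellipsis.
        trimmed = trimmed[: max_len - 3] + "..."
    return trimmed

label = trim_url("https://www.example.com/blog/semantic-density/")
short = trim_url("http://example.com/" + "a" * 50, max_len=20)
```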

html

The html module provides utilities to handle HTML-specific operations, including escaping and unescaping HTML entities. It ensures that special characters in web content are interpreted correctly without breaking string processing.

In this project, html is used to decode HTML entities from web page content. This is essential when working with raw page content to maintain semantic correctness before tokenization, embedding, or analysis.

hashlib

hashlib is a Python standard-library module that implements cryptographic hash functions such as MD5, SHA-1, and SHA-256. It is commonly used to derive stable, fixed-length identifiers from strings, files, or other data objects.

Here, hashlib is used to generate unique section or page identifiers based on content. This allows consistent mapping and tracking of sections across multiple runs of the analysis pipeline, which is critical for redundancy detection and comparison.

unicodedata

unicodedata provides access to the Unicode character database, allowing normalization and processing of Unicode strings. This helps standardize text for consistent comparison and analysis.

In the project, unicodedata is used to normalize content extracted from web pages, ensuring that accented characters, symbols, or special formatting are consistently represented before tokenization and semantic analysis.

logging

The logging library is a standard Python tool for recording runtime events, errors, and informational messages. It enables monitoring, debugging, and auditing of the code execution flow.

In this project, logging is used to report errors, track progress, and provide debug information during page fetching, content extraction, chunking, and embedding generation. This ensures robust execution and easier troubleshooting.

requests

requests is a popular Python library for sending HTTP requests. It simplifies the process of interacting with web servers, handling headers, cookies, and responses efficiently.

Here, requests is used to fetch web page content for analysis. Reliable page retrieval is fundamental for extracting content sections, generating embeddings, and performing query-level analysis.

BeautifulSoup from bs4

BeautifulSoup is a library for parsing HTML and XML documents. It allows structured extraction of elements, text, and metadata from web pages.

In this project, BeautifulSoup is used to parse fetched HTML content and extract individual sections of the page. Proper parsing ensures that semantic analysis and density calculation are performed only on meaningful content, ignoring scripts or irrelevant tags.

numpy

numpy is a foundational Python library for numerical computing, providing support for arrays, matrices, and vectorized operations. It is widely used in data analysis, machine learning, and scientific computing.

In this project, numpy is used for numerical operations on embeddings, semantic density calculations, and summary statistics. Its efficient array operations allow fast computation across multiple page sections and queries.

math

The math library provides standard mathematical functions such as logarithms, exponentials, and basic arithmetic operations. It is essential for numerical calculations in Python.

Here, math is used for calculations such as normalization, weighted scores, and semantic weight computations. It ensures accurate computation of metrics that are later used in reporting and visualization.

collections.Counter

Counter is a specialized dictionary in Python for counting hashable objects. It provides easy aggregation, frequency analysis, and summary statistics.

In this project, Counter is used to analyze token distributions, redundancy counts, and label frequencies across sections. This allows the project to quantify the occurrence of high-value or redundant content effectively.
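For example, counting redundancy labels across sections might look like this short sketch. The label values are made-up sample data, not project output.

```python
from collections import Counter

# Hypothetical redundancy labels, one per section.
labels = ["low", "high", "low", "medium", "low"]

counts = Counter(labels)
most_common_label, occurrences = counts.most_common(1)[0]
share_low = counts["low"] / len(labels)
```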

transformers.AutoTokenizer

AutoTokenizer from Hugging Face’s Transformers library provides pre-trained tokenizers for a wide range of transformer models. Tokenizers convert text into tokens that models can understand.

Here, AutoTokenizer is used to tokenize section content for embedding generation. Accurate tokenization is critical to ensure semantic embeddings correctly represent the content for similarity and density calculations.

sentence_transformers.SentenceTransformer & util

SentenceTransformer is the main class of the sentence-transformers library, which is built on top of Hugging Face Transformers and optimized for generating embeddings for sentences, paragraphs, or documents. util provides helper functions for similarity computation.

In this project, SentenceTransformer is used to generate vector representations of page sections. These embeddings enable semantic similarity calculations between queries and page content, which form the foundation for relevance scoring, density-weighted analysis, and section ranking.

torch

torch is the core library of PyTorch, providing tensor operations, GPU acceleration, and deep learning capabilities.

Here, torch is used to handle embeddings, similarity computations, and vector operations efficiently. Using GPU acceleration ensures that embedding generation and similarity scoring can scale to multiple pages and queries.

pandas

pandas is a powerful library for data manipulation and analysis, offering structures like DataFrames for tabular data handling.

In this project, pandas is used to organize section-level metrics, query scores, and redundancy information. It facilitates efficient computation, aggregation, and export of results for reporting and visualization.

matplotlib.pyplot and seaborn

matplotlib.pyplot provides a flexible framework for creating static, interactive, and animated visualizations in Python. seaborn builds on matplotlib to offer high-level plotting functions with better aesthetics and statistical visualization capabilities.

Here, these libraries are used to produce actionable, client-ready visualizations of semantic density, relevance scores, redundancy, and label distribution. Plots help stakeholders understand content quality and section importance at a glance.

Function fetch_html

Overview

This function retrieves raw HTML content from a given URL. It tries several candidate encodings in turn so that the text decodes correctly, and it waits for a short, polite delay before the request to avoid overloading web servers. The function also implements basic error handling and logs a warning if all encoding attempts fail.

Key Code Explanations

· time.sleep(delay) – Introduces a delay before sending the request to avoid making rapid repeated requests, ensuring polite crawling behavior.

· response = requests.get(url, timeout=request_timeout, headers={"User-Agent": "Mozilla/5.0 (compatible;)"}) – Fetches the page using the requests library with a specified timeout and a user-agent header to mimic a standard browser.

· encodings_to_try = [response.apparent_encoding, 'utf-8', 'iso-8859-1', 'cp1252'] – Defines a list of encodings to try for decoding the HTML content, increasing the likelihood of successfully reading pages with different character sets.
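The encoding fallback can be sketched on raw bytes alone, without any network call. This is an illustration only: the helper name decode_with_fallback is hypothetical, and whether fetch_html decodes the response body in exactly this way is an assumption.

```python
def decode_with_fallback(raw, encodings):
    """Return (text, encoding) for the first encoding that decodes cleanly.

    Returns (None, None) if every attempt fails. Empty or None entries are
    skipped, since a detected encoding may be missing.
    """
    for enc in encodings:
        if not enc:
            continue
        try:
            return raw.decode(enc), enc
        except (UnicodeDecodeError, LookupError):
            # Wrong or unknown encoding: move on to the next candidate.
            continue
    return None, None

raw = b"caf\xe9"  # "cafe" with an accented e, encoded as Latin-1; invalid UTF-8
text, used = decode_with_fallback(raw, [None, "utf-8", "iso-8859-1", "cp1252"])
```

One detail worth keeping in mind: iso-8859-1 maps every possible byte to a character, so it never raises a decode error. Any encoding listed after it (cp1252 in the list above) is therefore only reached if iso-8859-1 is removed or reordered.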

Function clean_html

Overview

This function uses BeautifulSoup to parse HTML and remove unwanted tags such as scripts, styles, navigation bars, and other non-content elements. The cleaned HTML allows more accurate content extraction for semantic analysis.

Key Code Explanations

· soup = BeautifulSoup(html_content, "lxml") – Parses the raw HTML using the lxml parser for efficient and robust extraction.

· for tag in soup([…]): tag.decompose() – Iterates through tags that typically contain non-essential content and removes them entirely from the DOM, ensuring only relevant content remains.

Function _clean_text

Overview

This function normalizes and cleans inline text from HTML. It ensures that text is consistent, removing extra whitespace and decoding HTML entities, preparing the text for downstream processing like tokenization and embedding.

Key Code Explanations

· text = _html.unescape(text) – Converts HTML entities such as &amp;amp; back to the characters they represent (&amp;amp; becomes &).

· text = unicodedata.normalize("NFKC", text) – Normalizes Unicode characters to ensure consistent representation of text, such as accented letters or special symbols.

· text = re.sub(r"\s+", " ", text) – Collapses multiple consecutive whitespace characters into a single space, making text cleaner for semantic analysis.
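Put together, the three steps can be sketched as a standalone function. The name clean_text and the final strip() are assumptions here; the original imports the html module under the alias _html.

```python
import html
import re
import unicodedata

def clean_text(text):
    """Decode entities, normalize Unicode (NFKC), and collapse whitespace."""
    text = html.unescape(text)                  # "&amp;" -> "&"
    text = unicodedata.normalize("NFKC", text)  # e.g. no-break space -> space
    text = re.sub(r"\s+", " ", text)            # runs of whitespace -> one space
    return text.strip()

# Input mixes an entity, a no-break space, a newline, a tab, and an "fi" ligature.
cleaned = clean_text("Tom &amp; Jerry\u00a0 \n\t \ufb01nal   cut")
```

NFKC is a compatibility normalization, so it also folds characters such as ligatures and no-break spaces into their plain equivalents, which helps the later whitespace collapse and tokenization behave consistently.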

Function extract_sections

Overview

This function extracts meaningful page sections based on headings (h1 and h2) and merges paragraph-like content (p, li, blockquote) under each section. It returns a list of sections with their content blocks and the page title.

Key Code Explanations

· h1_tags = soup.find_all("h1") – Finds all main headings to determine the primary structure of the page.

· for el in soup.find_all(["h1", "h2", "p", "li", "blockquote"]): – Iterates over content elements in the order they appear on the page to maintain section context.

· if tag in ["h1", "h2"]: current_section = {"heading": text, "blocks": []} – Creates a new section when a heading is found.

· elif tag in ["p", "li", "blockquote"]: current_section["blocks"].append(text) – Adds content blocks to the current section, ensuring that textual content is correctly associated with the relevant heading.

· if current_section is None: current_section = {"heading": "General", "blocks": []} – Handles content that appears before any headings by creating a default "General" section.
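The grouping logic itself does not depend on BeautifulSoup, so it can be sketched over plain (tag, text) pairs. The function name group_into_sections and the tuple input are assumptions for illustration; the real function walks soup.find_all(...) and also returns the page title.

```python
def group_into_sections(elements, min_block_chars=0):
    """Group (tag, text) pairs into sections keyed by h1/h2 headings."""
    sections = []
    current_section = None
    for tag, text in elements:
        text = text.strip()
        if not text:
            continue
        if tag in ("h1", "h2"):
            # A heading always starts a new section.
            current_section = {"heading": text, "blocks": []}
            sections.append(current_section)
        elif tag in ("p", "li", "blockquote"):
            if len(text) < min_block_chars:
                continue
            if current_section is None:
                # Content before any heading goes into a default section.
                current_section = {"heading": "General", "blocks": []}
                sections.append(current_section)
            current_section["blocks"].append(text)
    return sections

elements = [
    ("p", "Intro text before any heading."),
    ("h1", "Semantic Density"),
    ("p", "Density measures information per unit of text."),
    ("li", "Focuses on meaning, not word count."),
    ("h2", "Semantic Weight"),
    ("blockquote", "Weight reflects importance in context."),
]
sections = group_into_sections(elements)
```

Note that the check for a missing current section has to run before the append, otherwise the first paragraph on a page without a leading heading would fail.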

Function extract_page_sections

Overview

This function orchestrates the full page extraction workflow. It fetches the HTML, cleans it, extracts structured sections, and returns a dictionary containing the URL, page title, and a list of content sections. It also handles errors gracefully by returning an empty result with a note if fetching or parsing fails.

Key Code Explanations

· html_content = fetch_html(url, request_timeout, delay) – Fetches the raw page HTML using the previously defined fetch_html function.

· soup = clean_html(html_content) – Cleans the HTML by removing non-content tags.

· sections, page_title = extract_sections(soup, min_block_chars) – Extracts meaningful sections from the cleaned HTML, returning both the content blocks and the page title.

· return {"url": url, "title": page_title, "sections": sections} – Packages the extracted data in a structured dictionary suitable for downstream semantic analysis and scoring.

Function load_model_and_tokenizer

Overview

This function loads a SentenceTransformer model along with its corresponding tokenizer from Hugging Face. It supports automatic device selection, allowing the model to run on GPU if available or CPU otherwise. The function is built with exception handling to ensure that errors during model or tokenizer loading are logged and clearly communicated.

Key Code Explanations

- device = "cuda" if torch.cuda.is_available() else "cpu" – Automatically selects GPU if available, otherwise defaults to CPU. This ensures optimal performance when embedding large amounts of text.

- model = SentenceTransformer(model_name, device=device) – Loads the sentence-transformer model on the selected device, enabling embedding generation for semantic analysis.

- tokenizer = AutoTokenizer.from_pretrained(model_name) – Loads the Hugging Face tokenizer corresponding to the model, which is used for token-based chunking of text.

model, tokenizer = load_model_and_tokenizer("sentence-transformers/all-mpnet-base-v2")

model

SentenceTransformer(

(0): Transformer({'max_seq_length': 384, 'do_lower_case': False, 'architecture': 'MPNetModel'})

(1): Pooling({'word_embedding_dimension': 768, 'pooling_mode_cls_token': False, 'pooling_mode_mean_tokens': True, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False, 'pooling_mode_weightedmean_tokens': False, 'pooling_mode_lasttoken': False, 'include_prompt': True})

(2): Normalize()

)

Model Used: sentence-transformers/all-mpnet-base-v2

Overview of the Model

The project leverages the all-mpnet-base-v2 model from the Sentence-Transformers family, a high-performance transformer-based embedding model designed to convert textual content into dense numerical representations (embeddings). These embeddings capture semantic meaning at the sentence, paragraph, or block level, making them suitable for tasks such as semantic similarity, search relevance, clustering, and ranking. The model provides a balance between accuracy and efficiency, making it practical for real-world applications where multiple pages and queries are processed.

Core Architecture

all-mpnet-base-v2 is built upon MPNet (Masked and Permuted Pre-training for Language Understanding), an advanced transformer architecture combining the strengths of BERT and XLNet. Key characteristics include:

- Bidirectional Context: Unlike traditional autoregressive models, MPNet considers both left and right context simultaneously, improving the understanding of word relationships.

- Permutation Language Modeling: Instead of predicting masked tokens independently, MPNet uses permuted sequences during pretraining, allowing the model to learn richer dependencies.

- Sentence-Transformer Adaptation: Through the Sentence-Transformers framework, the base MPNet model is fine-tuned on large-scale semantic similarity datasets, ensuring that embeddings reflect meaningful semantic distances between texts rather than only token-level predictions.

How the Model Works

The model processes text in the following steps:

- Tokenization: Input text is converted into a sequence of tokens using the model tokenizer, mapping words and subwords to numerical indices.

- Contextual Encoding: Tokens are passed through multiple transformer layers, producing contextual embeddings for each token that reflect both local and global semantic relationships.

- Pooling: Token embeddings are aggregated (typically via mean pooling) to produce a fixed-length vector representing the entire input text.

- Embedding Output: The resulting dense vector captures semantic content and can be compared with other vectors using similarity metrics such as cosine similarity.

This pipeline allows sections of web pages and query texts to be represented in the same embedding space, enabling effective semantic matching.
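To make the matching step concrete, here is a plain-Python sketch that ranks sections against a query by cosine similarity. The 3-dimensional vectors are toy stand-ins for the model's 768-dimensional embeddings, and the function names are hypothetical.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def rank_sections(query_vec, section_vecs):
    """Return (heading, score) pairs sorted by similarity, highest first."""
    scored = [(heading, cosine_similarity(query_vec, vec))
              for heading, vec in section_vecs.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)

# Toy vectors only; real embeddings come from model.encode(...).
section_vecs = {
    "Pricing": [0.1, 0.9, 0.0],
    "Semantic density": [0.8, 0.1, 0.2],
    "Contact": [0.0, 0.2, 0.9],
}
query_vec = [0.9, 0.0, 0.1]
ranking = rank_sections(query_vec, section_vecs)
```

Because the loaded model ends with a Normalize() layer, its embeddings are already unit length, so in practice the cosine similarity reduces to a dot product.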

Role in the Project

In this project, the model serves as the foundation for semantic analysis of page sections:

- Section Embeddings: Converts each processed block of content into a dense vector for similarity comparison.

- Page-Level Embeddings: Aggregates section embeddings to provide an overall representation of a page’s informational content.

- Query Matching: Embeddings of client-provided queries are compared with section embeddings to determine relevance and alignment.

- Semantic Weight and Density: Embedding-based similarity scores are combined with section size to compute meaningful metrics for ranking and labeling content.

The use of this model ensures that the evaluation is context-aware and semantically grounded, rather than relying on keyword presence alone.

Benefits for SEO and Content Analysis

Implementing all-mpnet-base-v2 in the project provides several practical benefits:

- Accurate Semantic Matching: Sections are scored based on meaning, not just keyword overlap, allowing identification of content that truly aligns with user intent.

- Cross-Section Comparisons: Enables detection of redundant or overlapping content, improving page optimization.

- Content Prioritization: Sections with higher semantic weight and density can be highlighted, supporting SEO strategies that emphasize informative, high-value content.

- Actionable Insights: By quantifying semantic relevance for each query, the project provides actionable guidance on which page sections to optimize, restructure, or expand.

Overall, the model acts as a semantic lens that transforms raw text into actionable insights, driving informed SEO decisions and content improvements.

Function chunk_by_tokens

Overview

This function splits a large block of text into smaller, token-based chunks using a Hugging Face tokenizer. The goal is to generate semantically coherent segments for embedding generation. The function supports adaptive overlapping between chunks, preserving context in longer text while avoiding unnecessary duplication in shorter text. This ensures accurate semantic representation when computing embeddings for content density and query relevance analysis.

Key Code Explanations

· tokens = tokenizer.encode(text, add_special_tokens=False) – Converts the input text into tokens, ignoring special tokens to focus on content-relevant tokens.

· overlap = 30 if len(tokens) > int(1.5 * max_tokens) else 0 – Automatically adds a 30-token overlap for long texts to maintain semantic continuity between consecutive chunks.

· token_strs = tokenizer.convert_ids_to_tokens(chunk_tokens) & chunk_text = tokenizer.convert_tokens_to_string(token_strs).strip() – Converts token IDs back to readable strings while preserving the original content structure.

· start += max_tokens - overlap if overlap else max_tokens – Ensures chunks move forward correctly with or without overlap, maintaining sequential coverage of the text.

This function is essential for breaking long sections into manageable pieces that can be embedded and analyzed without losing context, which is critical for accurate semantic weight and density measurement.
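The windowing logic can be sketched on a plain list of token IDs, leaving out the tokenizer calls. The function name chunk_token_ids and the overlap_size parameter are assumptions; the 30-token overlap and the 1.5 × max_tokens rule come from the description above.

```python
def chunk_token_ids(tokens, max_tokens, overlap_size=30):
    """Split a list of token IDs into windows of at most max_tokens.

    Overlap is applied only when the input is longer than 1.5 * max_tokens,
    mirroring the adaptive rule described above.
    """
    if max_tokens <= 0:
        raise ValueError("max_tokens must be positive")
    overlap = overlap_size if len(tokens) > int(1.5 * max_tokens) else 0
    if overlap >= max_tokens:
        raise ValueError("overlap must be smaller than max_tokens")
    step = max_tokens - overlap  # equals max_tokens when overlap is 0
    chunks = []
    start = 0
    while start < len(tokens):
        chunks.append(tokens[start:start + max_tokens])
        start += step
    return chunks

long_chunks = chunk_token_ids(list(range(250)), max_tokens=100)
short_chunks = chunk_token_ids(list(range(120)), max_tokens=100)
```

With 250 tokens and a window of 100, the overlap kicks in and each window starts 70 tokens after the previous one. With 120 tokens the text stays under the 1.5 × threshold, so the two chunks do not overlap.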

Function preprocess_page

Overview

preprocess_page prepares the extracted sections of a web page for analysis by performing multiple preprocessing steps. It merges text blocks within sections, filters out boilerplate or low-value content, and applies token-based chunking using the previously defined chunk_by_tokens function. Each resulting chunk is assigned a unique section ID and a token count, making the sections ready for embedding and semantic evaluation.

Key Code Explanations

· merged_text = " ".join(section.get("blocks", [])).strip() – Combines all paragraph-like blocks under a section into a single text string to maintain context.

· if any(bp.lower() in merged_text.lower() for bp in boilerplate_terms): continue – Filters out common boilerplate phrases (like "read more" or "privacy policy") to focus on high-value content.

· chunks = chunk_by_tokens(merged_text, tokenizer, max_tokens, overlap) – Splits the merged section text into token-based chunks for embedding generation.

· section_id = hashlib.md5(f"{url}_{section['heading']}_{idx}".encode()).hexdigest() – Generates a unique hash for each chunked section to track it across analysis stages.

· if len(words) < min_word_count or chunk_tokens < min_word_count or chunk_tokens > max_token_length: continue – Ensures only meaningful chunks of appropriate length are retained for analysis.

This function guarantees that only informative, semantically meaningful sections proceed to the embedding stage, enhancing the quality of query relevance scoring and semantic density measurement.
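Two of these steps, the section ID and the filtering, can be sketched in isolation. The helper names, the default min_word_count of 5, and the two-entry boilerplate list are assumptions for illustration; the token-count checks from the original are left out because they need the tokenizer.

```python
import hashlib

# Example phrases taken from the description above; the real list may differ.
BOILERPLATE_TERMS = ["read more", "privacy policy"]

def make_section_id(url, heading, idx):
    """Deterministic ID: the same url/heading/index always hashes the same."""
    return hashlib.md5(f"{url}_{heading}_{idx}".encode()).hexdigest()

def keep_section(merged_text, min_word_count=5):
    """Drop sections that contain boilerplate phrases or are too short."""
    lowered = merged_text.lower()
    if any(term.lower() in lowered for term in BOILERPLATE_TERMS):
        return False
    return len(merged_text.split()) >= min_word_count

id_a = make_section_id("https://example.com/page", "Semantic Weight", 0)
id_b = make_section_id("https://example.com/page", "Semantic Weight", 0)
id_c = make_section_id("https://example.com/page", "Semantic Weight", 1)
```

Because the ID is derived from the URL, heading, and chunk index rather than from the text, it stays stable across runs even if the section's wording changes. Also note that a substring match on boilerplate phrases drops the whole section, which may be stricter than intended for long sections that mention such a phrase only once.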

Function generate_section_embeddings

Overview

This function takes a preprocessed page and generates vector embeddings for each section using a preloaded SentenceTransformer model. Embeddings are numerical representations of text that capture semantic meaning, allowing the system to quantitatively assess content similarity, relevance to queries, and semantic weight. Each section in the processed page receives an embedding, which is essential for downstream tasks such as query relevance scoring and semantic density calculation.

Key Code Explanations

· device = model.device – Ensures embeddings are generated on the same device (CPU or GPU) where the model is loaded, optimizing performance.

· texts = [sec["merged_text"] for sec in sections] – Collects all section texts into a list for batch embedding, which is more efficient than embedding each section individually.

· embeddings = model.encode(texts, convert_to_tensor=True, device=device, show_progress_bar=False) – Generates tensor embeddings for all sections simultaneously, storing them in a device-optimized format.

· sec["embedding"] = embeddings[idx] – Assigns each embedding back to its corresponding section, keeping the structure intact for further analysis.

This function is critical because it translates textual content into a format that the system can compare, rank, and analyze semantically, forming the backbone of query relevance measurement and semantic weighting in the project.

Function generate_page_embedding

Overview

This function computes a single vector representation for the entire page by averaging the embeddings of all its sections. The page-level embedding serves as a holistic semantic representation, allowing the system to assess overall content similarity between pages or against a set of queries. It is particularly useful for tasks such as ranking pages by relevance or identifying overall thematic alignment.

Key Code Explanations

· device = model.device – Ensures computation occurs on the same device where the model resides, maintaining efficiency and compatibility.

· embeddings = [sec["embedding"] for sec in sections] – Collects all section embeddings into a list for aggregation.

· page_embedding = torch.mean(torch.stack(embeddings), dim=0) – Stacks all section embeddings into a single tensor and calculates the mean along the first dimension, producing an averaged vector that represents the full page’s semantic content.

· processed_page["page_embedding"] = page_embedding – Stores the resulting page-level embedding within the page dictionary for downstream analysis, keeping the data structure unified and easily accessible.

This function is crucial because it allows page-level semantic comparisons, which complement section-level analysis and give clients a quantitative measure of a page’s overall informational strength.
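To make the averaging step concrete, here is a small sketch that uses plain Python lists in place of torch tensors. The helper name mean_vector and the toy three-dimensional vectors are assumptions for illustration; the project itself stacks tensors and calls torch.mean.

```python
def mean_vector(vectors):
    """Element-wise mean of equal-length vectors (lists of floats)."""
    if not vectors:
        raise ValueError("at least one section embedding is required")
    dim = len(vectors[0])
    if any(len(v) != dim for v in vectors):
        raise ValueError("all embeddings must have the same dimension")
    # Average each coordinate across all section embeddings.
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]

# Toy section embeddings (real ones have hundreds of dimensions).
section_embeddings = [[1.0, 0.0, 2.0], [3.0, 2.0, 0.0]]
page_embedding = mean_vector(section_embeddings)
```

Because this is an unweighted mean, a short section influences the page embedding as much as a long one.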

Function compute_section_page_similarity

Overview

This function calculates the cosine similarity between each section and the overall page embedding. The similarity score quantifies how closely a section aligns with the semantic content of the entire page. Sections with higher similarity values represent the core or most representative content of the page, while lower values may indicate peripheral or less relevant content. This metric is valuable for identifying high-value sections and understanding content balance across a page.

Key Code Explanations

· page_emb = processed_page.get("page_embedding", None) – Retrieves the page-level embedding computed previously. A missing embedding triggers an informative error, ensuring the workflow is followed correctly.

· sec_emb = sec["embedding"].unsqueeze(0) – Adds a batch dimension to the section embedding to make it compatible with the cos_sim function.

· sim = util.cos_sim(sec_emb, page_emb).item() – Computes cosine similarity between the section and page embeddings, producing a scalar value indicating semantic alignment.

· sec["page_similarity"] = float(sim) – Stores the similarity score in the section dictionary, making it accessible for downstream analyses such as ranking or visualization.

By using this function, clients can highlight sections that best represent the page and make informed decisions about content optimization or summarization.
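The section-to-page comparison can be sketched without any dependencies as shown here. The cosine_similarity helper is a hand-written stand-in for util.cos_sim, and returning 0.0 for a zero vector is an assumption of this sketch rather than the library's behavior; the vectors are toy values.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # assumption: treat zero vectors as unrelated
    return dot / (norm_a * norm_b)

page_embedding = [2.0, 1.0, 1.0]
sections = [
    {"heading": "Core", "embedding": [2.0, 1.0, 1.0]},
    {"heading": "Aside", "embedding": [0.0, 0.0, 1.0]},
]
for sec in sections:
    # Higher values mean the section is more representative of the page.
    sec["page_similarity"] = cosine_similarity(sec["embedding"], page_embedding)
```

In this toy case the "Core" section points in the same direction as the page vector and scores close to 1, while "Aside" scores noticeably lower.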

Function compute_section_query_similarity

Overview

This function calculates the cosine similarity between each section and a set of query embeddings, which represent search intents or user questions. Each section receives a score per query, quantifying its relevance to that query. This enables query-focused analysis of page content, helping clients identify which sections best satisfy user intent and improve targeted SEO performance.

Key Code Explanations

· query_embeddings = model.encode(queries, convert_to_tensor=True, device=device) – Encodes all queries into embeddings compatible with the section embeddings. This allows vectorized similarity computations.

· sec_emb = sec["embedding"].unsqueeze(0) – Adds a batch dimension for compatibility with util.cos_sim.

· sims = util.cos_sim(sec_emb, query_embeddings) – Computes cosine similarity between the section embedding and each query embedding, producing a vector of similarity scores.

· sec["query_scores"] = {queries[i]: float(sims[0][i]) for i in range(len(queries))} – Stores the similarity scores in a dictionary mapping each query to its relevance score, making them easy to access for ranking, filtering, or reporting. Because util.cos_sim returns a 1-by-n matrix here, the scores are read from its first row.

This function allows clients to directly evaluate content against multiple search queries, enabling prioritization of high-impact sections for SEO optimization or content refinement.
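A dependency-free sketch of the per-query scoring follows. The helper names and the two-dimensional toy embeddings are assumptions; in the project the query vectors come from model.encode and the similarities from util.cos_sim.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity for two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def score_section_against_queries(section_embedding, queries, query_embeddings):
    """Map each query string to its similarity with one section."""
    return {
        query: cosine_similarity(section_embedding, emb)
        for query, emb in zip(queries, query_embeddings)
    }

queries = [
    "How to handle different document URLs",
    "Using HTTP headers for PDFs and images",
]
query_embeddings = [[1.0, 0.0], [0.0, 1.0]]  # toy vectors, not real embeddings
section = {"embedding": [3.0, 4.0]}
section["query_scores"] = score_section_against_queries(
    section["embedding"], queries, query_embeddings
)
```

Since the dictionary is keyed by query text, duplicate queries in the input list would overwrite each other, so the list is best deduplicated first.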

Function compute_semantic_weight_density

Overview

This function calculates semantic weight and semantic density for each section. These metrics quantify the informational strength of content in relation to the page and the queries:

- Semantic Weight measures the overall contribution of a section by combining its relevance to the page (page_similarity) and its relevance to queries (average of query_scores), scaled by the logarithm of the token count to account for section length.

- Semantic Density normalizes the weight by the number of tokens, indicating information density per token.

These metrics allow clients to identify sections that are both relevant and information-rich, enabling better SEO prioritization and content optimization.

Key Code Explanations

- token_count = sec.get("token_count", 1) – Falls back to a token count of 1 when the field is missing, which avoids division by zero in the density step.

- mean_query_sim = np.mean(list(query_scores.values())) if query_scores else 0.0 – Computes the average query relevance per section.

- semantic_weight = (page_sim + mean_query_sim) * math.log1p(token_count) – Combines page and query relevance, scaled logarithmically by the section length, giving longer and relevant sections more weight.

- sec["semantic_density"] = round(float(semantic_weight / token_count), 6) – Normalizes the weight per token. Because the weight grows only logarithmically with length, this highlights concise sections that remain relevant.

This calculation enables comparative assessment of sections across a page, helping clients focus on high-value content efficiently.
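The two formulas above can be tried on their own with a short sketch. The function name semantic_weight_density and the sample scores are illustrative assumptions, and clamping the token count to at least 1 is an extra guard added here, not something the original code is known to do.

```python
import math

def semantic_weight_density(page_sim, query_scores, token_count):
    """Return (semantic_weight, semantic_density) for one section."""
    # Extra guard (assumption): never divide by zero or a negative count.
    token_count = max(int(token_count), 1)
    mean_query_sim = (
        sum(query_scores.values()) / len(query_scores) if query_scores else 0.0
    )
    # Relevance to page and queries, scaled by log(1 + tokens).
    weight = (page_sim + mean_query_sim) * math.log1p(token_count)
    density = weight / token_count
    return round(weight, 6), round(density, 6)

scores = {"q1": 0.4, "q2": 0.2}
short_weight, short_density = semantic_weight_density(0.6, scores, 40)
long_weight, long_density = semantic_weight_density(0.6, scores, 400)
```

With identical relevance scores, the 400-token section ends up with the larger weight and the 40-token section with the larger density, which is why the two metrics are best read together.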

Function rank_and_label_sections

Overview

This function categorizes page sections into three value tiers — High, Medium, and Low — based on their semantic density (or another specified metric). Labeling sections in this way allows clients to quickly identify top-performing content and areas that may need improvement or optimization.

The classification is primarily percentile-based, ensuring that sections are labeled relative to other sections on the same page. If all sections have nearly identical density, the function falls back to ranking by semantic weight to still differentiate content importance.

Key Code Explanations

· densities = np.array([s.get(density_key, 0.0) for s in sections], dtype=float) – Extracts all section density values to compute percentiles.

· if np.allclose(densities, densities[0]): – Checks for uniform values across sections; triggers fallback logic.

· weights = np.array([s.get("semantic_weight", 0.0) for s in sections]) – Fallback ranking uses semantic weight to determine importance when density is uniform.

· pcts = np.array([np.mean(densities <= d) for d in densities]) – Computes the percentile of each section relative to the page.

· sec["value_label"] = "High"/"Medium"/"Low" – Assigns label based on the calculated percentile compared against the thresholds (high_threshold, medium_threshold).

· label_counts = Counter([s.get("value_label", "Low") for s in sections]) – Summarizes label distribution for the page.

By labeling sections, clients can visually prioritize high-value sections, aiding in SEO audits, content optimization, and reporting.
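The percentile-based labeling can be sketched as below. The threshold values 0.8 and 0.4 and the use of "greater than or equal" at the cutoffs are assumptions, since the project's defaults for high_threshold and medium_threshold are not stated; the fallback to semantic weight for uniform densities is also omitted.

```python
from collections import Counter

def label_by_percentile(values, high_threshold=0.8, medium_threshold=0.4):
    """Label each value High/Medium/Low by its percentile within the list."""
    n = len(values)
    labels = []
    for v in values:
        # Share of sections whose value is at or below this one.
        pct = sum(1 for other in values if other <= v) / n
        if pct >= high_threshold:
            labels.append("High")
        elif pct >= medium_threshold:
            labels.append("Medium")
        else:
            labels.append("Low")
    return labels

densities = [0.08, 0.07, 0.05, 0.03, 0.02]  # toy density values
labels = label_by_percentile(densities)
label_counts = Counter(labels)
```

Because labels are relative to the page, a "High" section on a thin page can still have a lower absolute density than a "Low" section on a rich one.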

Function aggregate_page_metrics

Overview

This function converts section-level scores into page-level metrics to provide a concise summary of a page’s content quality and distribution. By aggregating semantic weight and density across all sections, the function produces indicators such as total semantic weight, mean density, top section dominance, and content balance, giving clients a high-level view of the page’s informational structure.

These metrics help in comparing pages, identifying content gaps, and prioritizing optimization by highlighting whether a page has concentrated or balanced content value.

Key Code Explanations

· weights = np.array([float(s.get(weight_key, 0.0)) for s in sections]) – Collects section semantic weights to compute overall page indicators.

· top_section_share = (weights.max() / total_weight) if total_weight > 0 else 0.0 – Measures how dominant the top section is relative to total weight; higher values indicate concentration of value in few sections.

· entropy = -np.sum(probs * np.log(probs)) – Computes the Shannon entropy of section weights to assess content distribution.

· normalized_entropy = float(entropy / math.log(total_sections)) – Normalizes entropy to a 0–1 scale for content balance; closer to 1 means content is evenly distributed across sections.

· mean_density = float(np.mean(densities)) – Provides the average semantic density of all sections, reflecting overall information richness.

· processed_page["page_summary"] – Stores all aggregated indicators in a structured dictionary for easy access in reporting and visualization.

By summarizing section-level metrics, clients can quickly assess page quality, focus areas, and overall content strength without diving into each section individually.
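As an illustration of the dominance and balance indicators, the following sketch computes top section share and normalized Shannon entropy with the standard library. The function name aggregate_weights, the returned keys, and the choice to report a balance of 0.0 for a single-section page are assumptions of this sketch.

```python
import math

def aggregate_weights(weights):
    """Summarize how section weights are distributed across a page."""
    n = len(weights)
    total = sum(weights)
    if n == 0 or total <= 0:
        return {"total_weight": 0.0, "top_section_share": 0.0, "content_balance": 0.0}
    # Share of total weight held by the heaviest section.
    top_share = max(weights) / total
    # Shannon entropy over the weight distribution (zero weights skipped).
    probs = [w / total for w in weights if w > 0]
    entropy = -sum(p * math.log(p) for p in probs)
    # Normalize by the maximum possible entropy, log(n).
    balance = entropy / math.log(n) if n > 1 else 0.0
    return {"total_weight": total, "top_section_share": top_share, "content_balance": balance}

even = aggregate_weights([2.0, 2.0, 2.0, 2.0])
skewed = aggregate_weights([9.0, 0.5, 0.25, 0.25])
```

The evenly weighted page reaches a balance of about 1 with a top share of 0.25, whereas the skewed page shows a top share of 0.9 and a much lower balance.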

Function compute_redundancy

Overview

This function analyzes semantic overlap across sections of a page to detect redundancy. By computing cosine similarity between all section embeddings, it identifies sections that carry similar content, flags them, and quantifies overall page redundancy. This is crucial for SEO and content strategy, as high redundancy can dilute page value and reduce clarity for readers and search engines.

The function provides both section-level redundancy metrics (score, number of overlapping partners, and label) and page-level summaries (average redundancy, redundant ratio, and high-redundancy count). Clients can use these insights to refine content, reduce duplication, and enhance unique information distribution.

Key Code Explanations

· sim_matrix = util.cos_sim(embeddings_tensor, embeddings_tensor) – Computes the pairwise cosine similarity between all section embeddings, providing the basis for redundancy detection.

· sims = np.delete(sim_matrix[i], i) – Excludes self-similarity for accurate measurement of overlaps with other sections.

· redundancy_score = float(np.mean(sims)) – Calculates the average similarity of a section to all other sections, giving a quantitative redundancy score.

· high_overlap = np.sum(sims >= threshold) – Counts the number of sections exceeding the similarity threshold, identifying strongly redundant content.

· sections[i]["redundancy_label"] – Assigns a categorical label (“Low”, “Medium”, “High”) based on redundancy score and overlap, enabling quick identification of problematic sections.

· processed_page["redundancy_summary"] – Aggregates page-level metrics: average redundancy, proportion of highly redundant sections, and count of sections labeled high, offering a concise view of the page’s content uniqueness.

This function allows clients to detect and prioritize redundant content for optimization, ensuring that pages maintain a high informational density and diverse coverage.
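The pairwise overlap computation can be approximated with the sketch below. It uses a hand-written cosine helper and toy two-dimensional vectors instead of util.cos_sim on real embeddings; the function name redundancy_scores is hypothetical, the 0.8 threshold is the value quoted in the results discussion, and the labeling rules are left out because they are not specified here.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity for two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def redundancy_scores(embeddings, threshold=0.8):
    """Per-section mean similarity to all other sections, plus overlap count."""
    results = []
    for i, emb in enumerate(embeddings):
        # Compare against every other section, excluding self-similarity.
        sims = [
            cosine_similarity(emb, other)
            for j, other in enumerate(embeddings)
            if j != i
        ]
        score = sum(sims) / len(sims) if sims else 0.0
        high_overlap = sum(1 for s in sims if s >= threshold)
        results.append({"redundancy_score": score, "high_overlap": high_overlap})
    return results

# Sections 0 and 1 are near-duplicates; section 2 is unrelated to section 0.
embeddings = [[1.0, 0.0], [0.99, 0.1], [0.0, 1.0]]
results = redundancy_scores(embeddings)
```

Here the first two sections each report one high-overlap partner while the third reports none, which mirrors how near-duplicate sections would surface in a real page.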

Function display_results

Overview

This function prints a structured summary of all computed metrics for one or more pages. It presents:

- Page-level metrics such as total sections, mean semantic density, total weight, content balance, top section share, dominance score, and label distribution.

- Section-level query relevance, showing average similarity scores and top-matching sections per query.

- Top sections by semantic density, with additional metadata such as semantic weight, value labels, and redundancy flags.

- A redundancy summary for the page, including average redundancy, redundant ratio, and count of high-redundancy sections.

It consolidates the processed information into a readable format for quick inspection and interpretation of results.

Result Analysis and Explanation

Overview of the Page Content Evaluation

The page “Handling Different Document URLs Using HTTP Headers Guide” contains 16 analyzed sections, representing distinct semantic blocks extracted from headings, paragraphs, and list items. The analysis evaluates each section for semantic density, relevance to queries, content weight, and redundancy, providing a holistic understanding of the informational value of the page.

Semantic Density and Content Weight

Semantic density measures the informational concentration per token in each section, while semantic weight reflects the overall contribution of a section to the page’s semantic content.

· Mean semantic density: 0.0312

o This is relatively low, sitting just above the Low/Medium cutoff in the guidance below, which indicates that most sections contain light semantic content per token.

o Threshold guidance (for context):

- Low: <0.03

- Medium: 0.03–0.06

- High: >0.06

o Based on this, most sections are in the Low–Medium range, with a few high-density sections.

· Total semantic weight: 91.6507

- Represents cumulative semantic strength across all sections. Sections with higher weight have greater informational importance.

· Content balance: 0.9661 (scale 0–1)

- Values close to 1 indicate well-distributed semantic weight across sections, suggesting that content coverage is balanced rather than dominated by one section.

· Top section share: 0.0898

- The highest-weight section contributes ~9% of total weight, confirming that no single section dominates the page.

· Dominance score: 0.0898

- Consistent with top section share, indicating low dominance, which is favorable for pages requiring comprehensive coverage.

· Label distribution:

- High: 2 (12%)

- Medium: 5 (31%)

- Low: 9 (56%)

- Most sections are labeled Low or Medium, with only a small portion having high semantic significance. This aligns with the observed mean density and weight metrics.

Query Relevance Analysis

Two queries were analyzed to assess how well page sections address potential user intents:

Query 1: “How to handle different document URLs”

· Average relevance score: 0.2553

- Low-to-moderate alignment with the page content: the page-wide average falls below 0.3, so sections touch on the topic but semantic matching is not strong outside the top few sections.

- Top three sections have similarity scores of 0.5060, 0.4530, 0.3771, indicating that the first section is the most relevant, with decreasing relevance for subsequent sections.

· Relevance thresholds guidance (cosine similarity, 0–1):

- Low: <0.3

- Medium: 0.3–0.5

- High: >0.5

· Interpretation: Only the top section (0.5060) clears the Medium/High boundary, and only narrowly, suggesting an opportunity to optimize content to better match this query.

Query 2: “Using HTTP headers for PDFs and images”

· Average relevance score: 0.4627

- Better alignment compared to Query 1.

· Top three sections have scores 0.7057, 0.6789, 0.6287, all in the High relevance range, reflecting strong semantic coverage for this specific query.

Insights from query analysis:

- The page is well-aligned for Query 2, addressing non-HTML content handling effectively.

- For Query 1, some sections are moderately relevant but could benefit from semantic reinforcement or more targeted content.

Content Quality by Semantic Density

The top sections by density highlight which blocks carry the most concentrated information:

1. Section with density 0.0783 (High label)

- Highest semantic density, but relatively low weight (2.9747), suggesting concise but information-rich content.

- Top query match: Query 1, score 0.3656 (Medium relevance).

2. Section with density 0.0760 (High label)

- Also concise and informative, weight 2.2812, matching Query 2 with lower relevance score (0.2455).

3–5. Medium-to-low-density sections (0.0544, 0.0305, 0.0274)

- Higher weight (5.1665–8.2331), indicating that these sections cover broader content but with slightly lower information per token.

- Query relevance varies; top-density sections are not always the most query-aligned.

Interpretation:

- High-density sections provide focused information, useful for quick insights or content enhancement.

- Medium-density but high-weight sections contribute to comprehensive coverage, ensuring the page addresses multiple aspects of the topic.

Redundancy Analysis

Redundancy metrics assess overlapping content across sections:

· Average redundancy score: 0.4589

- Moderate overlap exists among some sections.

· Redundant ratio: 0.0

- Very few sections exceed the 0.8 similarity threshold with others, indicating low systemic redundancy.

· High-redundancy sections: 1

- Only one section is flagged, which could be reviewed for potential merging or pruning.

Interpretation:

- The page content is well-diversified, with minimal unnecessary repetition.

- Maintaining this balance supports user experience and SEO by reducing duplicated signals.

Combined Insights and Practical Interpretation

- Content coverage is generally balanced, with multiple Medium-weight sections and a few High-density highlights.

- Query alignment varies: strong for Query 2, moderate for Query 1, indicating areas for content reinforcement.

- Semantic density vs. weight: Top-weighted sections may not be top-density, suggesting a mix of broad informative blocks and concise, high-value snippets.

- Redundancy is low, which is favorable for search engines and page clarity.

Threshold guidance for actionable interpretation:

- Sections with density >0.06: very informative per token, candidates for highlighting or optimization.

- Sections with weight >7: key contributors to page coverage, ensure clarity and alignment with target queries.

- Sections with similarity <0.3 for target queries: opportunity to improve relevance via content enrichment.

- Redundant sections with high overlap (>0.8 similarity) should be reviewed to reduce repetition.

This analysis provides a structured understanding of the page, showing which sections are high-value, which content aligns best with specific queries, and which areas could be enhanced or streamlined for better SEO and user experience.

Result Analysis and Explanation

This section provides a detailed interpretation of the analyzed web pages and their content sections, focusing on semantic density, content relevance, redundancy, and overall SEO performance indicators. The analysis is designed to provide actionable insights for improving content quality and query alignment across multiple pages.

Page-Level Content Overview

The first level of analysis examines each page’s overall content structure:

- Number of Sections: The number of discrete content sections analyzed per page. A higher section count indicates more granular content segmentation, which can help in understanding which sections contribute most to query relevance and content density.

- Mean Semantic Density: Represents the average semantic richness of all sections on the page. Values in the 0.05–0.08 range generally indicate sections with higher informational content, while values below ~0.03 may suggest that content is sparse or less conceptually focused.

- Total Semantic Weight: Reflects cumulative importance of all content sections. Higher values indicate that the page has sections with significant semantic content distributed across it.

- Content Balance (0–1): Measures how evenly semantic weight is distributed. Scores near 1 suggest content is evenly spread, while lower scores indicate dominance by a few sections.

- Top Section Share and Dominance Score: Indicate how much of the page’s semantic weight is concentrated in top-performing sections. A low top section share (<0.10) suggests that content value is distributed rather than dominated by a few sections.

- Label Distribution (High, Medium, Low): Categorizes sections based on their semantic density and weight. The proportion of High, Medium, and Low labels helps gauge overall content quality.

Interpretation Example:

- Pages with a high mean density, balanced distribution, and a reasonable number of High-labeled sections are considered content-rich and well-structured.

- A large proportion of Low-labeled sections may indicate thin or peripheral content.

Query Relevance Analysis

Query relevance measures how well each section aligns with the queries of interest:

- Similarity Scores: Represent cosine similarity between query embeddings and section embeddings. Threshold bins for interpretation:

- High Relevance (0.60–1.0): Sections strongly aligned with the query, likely to satisfy user intent.

- Medium Relevance (0.40–0.59): Sections partially match the query; useful but may require supplementation.

- Low Relevance (<0.40): Sections weakly aligned; may be tangential or not directly relevant.

- Average Query Relevance per Query: Provides a summary for each query across all sections of a page. This helps identify which queries are well-covered and which may require additional content.

- Top Sections per Query: Highlights the most relevant sections for each query. The combination of similarity score, semantic density, and semantic weight offers a complete picture:

- High similarity + High density + High weight = Section of prime importance.

- Low similarity but high density may indicate valuable content not aligned with a specific query.

Practical Insight:

- Pages often have a few sections with strong relevance for certain queries and weaker relevance for others. Optimizing query coverage ensures that high-value content aligns well with all target queries.

Content Quality and Section-Level Metrics

This part focuses on individual section performance independent of queries:

- Semantic Density vs. Weight: Sections are evaluated based on both density and weight.

- High-density sections indicate concentrated, conceptually rich content.

- High-weight sections indicate sections that contribute significantly to the page’s overall semantic value.

- Labeling (High/Medium/Low): Helps quickly identify strong vs. weak sections.

- High-labeled sections: Critical for SEO; often aligned with important topics.

- Medium-labeled sections: Moderate content value; may need reinforcement.

- Low-labeled sections: Peripheral content or less informative sections.

- Redundancy: Measured per section to detect overlapping content. High redundancy indicates that multiple sections convey similar information, which may dilute user experience or page authority.

Practical Actions:

- Focus on improving Low-labeled sections with high relevance potential.

- Optimize High-redundancy sections by consolidating content or refining unique messaging.

- Ensure top-density sections also align with high-priority queries to maximize content impact.

Redundancy Analysis

Redundancy metrics evaluate semantic overlap across sections:

- Average Redundancy Score: Measures the mean similarity of a section with all other sections.

- Scores above 0.65 indicate significant overlap; consider merging or differentiating content.

- Redundant Ratio: Proportion of sections exceeding the defined redundancy threshold.

- High ratios may suggest repetitive content across the page.

- High-Redundancy Sections Count: Number of sections labeled as highly overlapping.

Practical Insight:

- Pages with a few high-redundancy sections are acceptable if the majority of content remains unique.

- Excessive redundancy can reduce page efficiency and SEO value.

Visualizations and Interpretation

Visualizations provide a high-level overview of multiple pages and their sections, helping identify trends, outliers, and opportunities:

- Label Distribution per Page

- What it shows: Counts of High, Medium, Low sections per page.

- Interpretation: Quickly identifies pages with strong vs. weak content distribution. A page with predominantly Low sections may require content improvement.

- Semantic Density vs. Weight Scatter

- What it shows: Each section plotted by density and weight, colored by label, sized by redundancy, and styled by page.

- Interpretation:

- Sections in the top-right quadrant (high density and weight) are most valuable.

- Sections with high redundancy (larger points) may need consolidation.

- Helps prioritize which sections to optimize for query alignment.

- Redundancy and Content Balance Bar Plot

- What it shows: Page-level metrics for average redundancy, redundant ratio, and content balance.

- Interpretation:

- Pages with low content balance but high redundancy require restructuring.

- High content balance with low redundancy indicates well-distributed, unique content.

- Query Relevance Heatmap

- What it shows: Top sections per page vs. all queries, colored by similarity score.

- Interpretation:

- Darker cells indicate stronger alignment with the query.

- Quickly identifies which pages and sections are best for each query.

- Useful for assessing gaps in query coverage and guiding content creation.

Actionable Insight from Visualizations:

- Focus content updates on Low-density or Low-relevance sections to improve overall page performance.

- Reduce redundancy in overlapping sections to strengthen semantic focus.

- Ensure top-density sections also target high-priority queries for maximum SEO benefit.

Cross-Page Insights

- Pages with higher mean semantic density generally provide richer content for queries but may still contain redundant sections that reduce efficiency.

- Query coverage varies per page; some queries may have strong representation in certain pages and weak in others, highlighting content gaps.

- Redundancy management is crucial: pages with many high-redundancy sections may appear repetitive to users and search engines, even if individual sections are valuable.

Threshold-Based Interpretation Summary

- Similarity Score (Query Relevance):

- 0.60–1.0 -> Strong relevance (priority for optimization and linking).

- 0.40–0.59 -> Moderate relevance (may need content refinement).

- <0.40 -> Weak relevance (consider rewriting or relocating content).

- Semantic Density:

- >0.05 -> High informational content.

- 0.03–0.05 -> Medium content richness.

- <0.03 -> Low content richness; consider adding depth.

- Redundancy Score:

- >0.65 -> High overlap; sections should be merged or restructured.

- 0.40–0.65 -> Moderate overlap; review for improvement.

- <0.40 -> Low overlap; sections are mostly unique.
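The threshold summary above can be encoded as a small lookup for use in audits. This is only a sketch: the names THRESHOLDS, bin_score, and interpret are hypothetical, and treating a value exactly on a cutoff as belonging to the higher band is an assumption, since the summary does not define boundary handling consistently.

```python
# (medium_cutoff, high_cutoff) per metric, taken from the summary above.
THRESHOLDS = {
    "query_relevance": (0.40, 0.60),
    "semantic_density": (0.03, 0.05),
    "redundancy": (0.40, 0.65),
}

def bin_score(value, medium_cutoff, high_cutoff):
    """Place a score into Low / Medium / High using two cutoffs."""
    if value >= high_cutoff:  # assumption: cutoffs are inclusive upward
        return "High"
    if value >= medium_cutoff:
        return "Medium"
    return "Low"

def interpret(metric, value):
    """Look up the cutoffs for a metric and bin the value."""
    medium_cutoff, high_cutoff = THRESHOLDS[metric]
    return bin_score(value, medium_cutoff, high_cutoff)

examples = {
    "strong_query_match": interpret("query_relevance", 0.7057),
    "weak_query_match": interpret("query_relevance", 0.2553),
    "page_mean_density": interpret("semantic_density", 0.0312),
    "page_mean_redundancy": interpret("redundancy", 0.4589),
}
```

Keeping the cutoffs in one dictionary makes it straightforward to tune them per site or content type without touching the binning logic.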

Practical Recommendations

- Optimize Low-density sections with potential query alignment to improve coverage.

- Merge or differentiate high-redundancy sections to strengthen content uniqueness.

- Prioritize High-density and High-weight sections for linking, updates, and SEO emphasis.

- Use query relevance heatmaps to identify missing coverage for target queries and adjust content strategy accordingly.

- Maintain a balance between content richness, query relevance, and section uniqueness to maximize SEO effectiveness.

This analysis provides a complete understanding of page performance, section quality, redundancy, and query alignment across multiple pages. It equips teams to make informed decisions about content updates, SEO optimizations, and strategic improvements.


Q&A: Understanding Results and Actionable Insights

What do the semantic density and weight scores indicate for our content?

Semantic density measures how conceptually rich a section is, while semantic weight captures its contribution to the overall page content. Sections with high density and weight are the most valuable for SEO, as they contain concentrated information aligned with target topics. Low-density sections indicate thin content, which may not fully address user queries.

Actionable Insight:

- Prioritize updates on Low-density sections to add depth.

- Maintain and further optimize High-density sections to strengthen authority.

Benefit: Enhances page comprehensiveness and improves the likelihood of ranking higher for relevant queries.

How should we interpret the query relevance scores?

Query relevance scores reflect how well each section aligns with specific search queries. Higher scores indicate sections are directly addressing the query intent, while lower scores highlight gaps or peripheral content.

Actionable Insight:

- Review sections with low relevance for critical queries and update them with more targeted content.

- Ensure that top-performing sections are internally linked to improve visibility and SEO impact.

Benefit: Improves content alignment with user intent, increasing the probability of higher rankings and better engagement.

What does the label distribution tell us about the page quality?

Sections are labeled High, Medium, or Low based on semantic density and weight. A higher proportion of High-labeled sections signals stronger content quality, while a predominance of Low-labeled sections indicates areas needing improvement.

Actionable Insight:

- Enhance Low-labeled sections by adding context, examples, or detailed explanations.

- Reinforce Medium-labeled sections to elevate them to High.

Benefit: Increases overall page value and ensures content richness across the entire page, supporting better SEO performance.

How should redundancy scores guide content optimization?

Redundancy measures semantic overlap between sections. High redundancy indicates repeated information, which can dilute page effectiveness and confuse search engines.

Actionable Insight:

- Merge or restructure redundant sections to eliminate repetition.

- Focus on unique content creation to fill gaps rather than duplicating information.

Benefit: Improves content clarity and efficiency, enhancing user experience and page authority.

How do visualizations help interpret page performance?

Visualizations provide a high-level view of content quality, query alignment, and redundancy across multiple pages:

- Label Distribution: Quickly identifies which pages have more High, Medium, or Low sections.

- Density vs. Weight Scatter: Highlights strong sections and identifies low-density/low-weight areas for improvement.

- Redundancy and Content Balance Plot: Shows how evenly content value is distributed and highlights overlapping sections.

- Query Relevance Heatmap: Reveals which sections perform best for each query, helping prioritize content updates.

Actionable Insight:

- Use heatmaps to target query gaps.

- Focus on high-density, high-weight, low-redundancy sections for SEO enhancements.

Benefit: Provides a visual roadmap for prioritizing content updates and improving overall SEO strategy.

How can we use these results for multiple pages and queries?

The analysis highlights which pages perform well across multiple queries and which require optimization. Pages with high relevance for multiple queries should be leveraged as key SEO assets, while pages with low query alignment should be updated or consolidated.

Actionable Insight:

- Identify underperforming pages and improve query coverage.

- Repurpose or link high-performing content to amplify impact across multiple queries.

Benefit: Ensures a consistent and comprehensive content strategy, maximizing SEO return on effort.

What is the overall benefit of applying these insights?

By analyzing semantic density, query relevance, and redundancy, you can systematically enhance content quality, align it with target queries, and reduce inefficiencies. This structured approach ensures pages are more comprehensive, relevant, and valuable for both users and search engines.

Actionable Insight:

- Integrate these metrics into routine content audits.

- Prioritize content updates based on semantic value and query alignment.

Benefit: Optimizes content strategy, improves search engine visibility, enhances user engagement, and strengthens overall SEO performance.

Final Thoughts

The Content Density and Semantic Weight Analyzer provides a clear, structured assessment of the informational strength across all sections of a webpage. By quantifying semantic density, semantic weight, and redundancy, the analysis highlights which sections carry the most value, which are moderately informative, and which are less significant.

Key takeaways include:

- Section-Level Insights: High-density sections have been identified, indicating areas that contribute most to the page’s overall informational strength. Medium- and low-density sections offer opportunities for content enhancement or restructuring.

- Query Relevance Assessment: Average and top-section relevance scores reveal which content segments align best with specific queries, enabling targeted optimization for search intent.

- Content Balance and Distribution: Metrics like content balance, top section share, and dominance scores provide a holistic view of content allocation, making it easy to see whether a single section disproportionately dominates the page.

- Redundancy Awareness: Redundancy scores highlight sections that repeat information found elsewhere on the page, supporting decisions to merge or refine content and improve clarity.

- Visual Interpretability: The visualizations—label distribution, density vs. weight scatter plots, redundancy summaries, and query relevance heatmaps—offer an intuitive understanding of content structure, making it easy to prioritize optimization efforts.

Overall, the analysis demonstrates how each page’s content is structured and valued, empowering informed decisions to strengthen informational impact, optimize content strategy, and enhance content relevance for target queries.