👉What Is AIEO?

Artificial Intelligence Experience Optimization (AIEO) is the strategic practice of designing, refining, and optimizing digital experiences specifically for AI-driven systems and interactions. It focuses on how artificial intelligence understands, interprets, and delivers information across platforms such as search engines, chatbots, voice assistants, recommendation systems, and generative AI models. Unlike traditional SEO, which targets human users and search algorithms, AIEO ensures that content, data structures, and user journeys are optimized for both human engagement and AI comprehension, improving accuracy, relevance, personalization, and trust in AI-mediated experiences.

👉The Silent Shift in How Brands Are Chosen

For years, marketing success was measured by visibility. Rankings, impressions, reach, clicks. The assumption was simple. If people see you more, they will choose you more. That assumption is quietly breaking down. Today, visibility alone no longer decides outcomes. Decisions are increasingly made before a user ever compares options, and in many cases, before they even ask the full question.

This change is being driven by AI-mediated decision-making. Search engines, recommendation systems, copilots, assistants, and enterprise AI tools are no longer acting as neutral display layers. They are reasoning systems. They evaluate, filter, and prioritize information on behalf of users. When someone asks for the best tool, the safest provider, or the most reliable solution, AI does not list everything equally. It selects.

Here is the uncomfortable truth most brands have not fully accepted yet. AI is already deciding which brands feel safest to recommend. Not the loudest. Not the most optimized for keywords. The ones that appear coherent, confident, low-risk, and cognitively familiar within the AI’s internal reasoning process. This decision often happens invisibly, without traffic, without impressions, and without any obvious signal that a brand was even considered and rejected.

This is where Artificial Intelligence Experience Optimization, or AIEO, enters the picture. AIEO is not about forcing mentions or chasing surface-level visibility. It is about shaping how AI understands, trusts, and reasons about your brand. It represents the next evolution of marketing intelligence, one that shifts focus from being seen by users to being preferred by the systems that increasingly guide their choices.

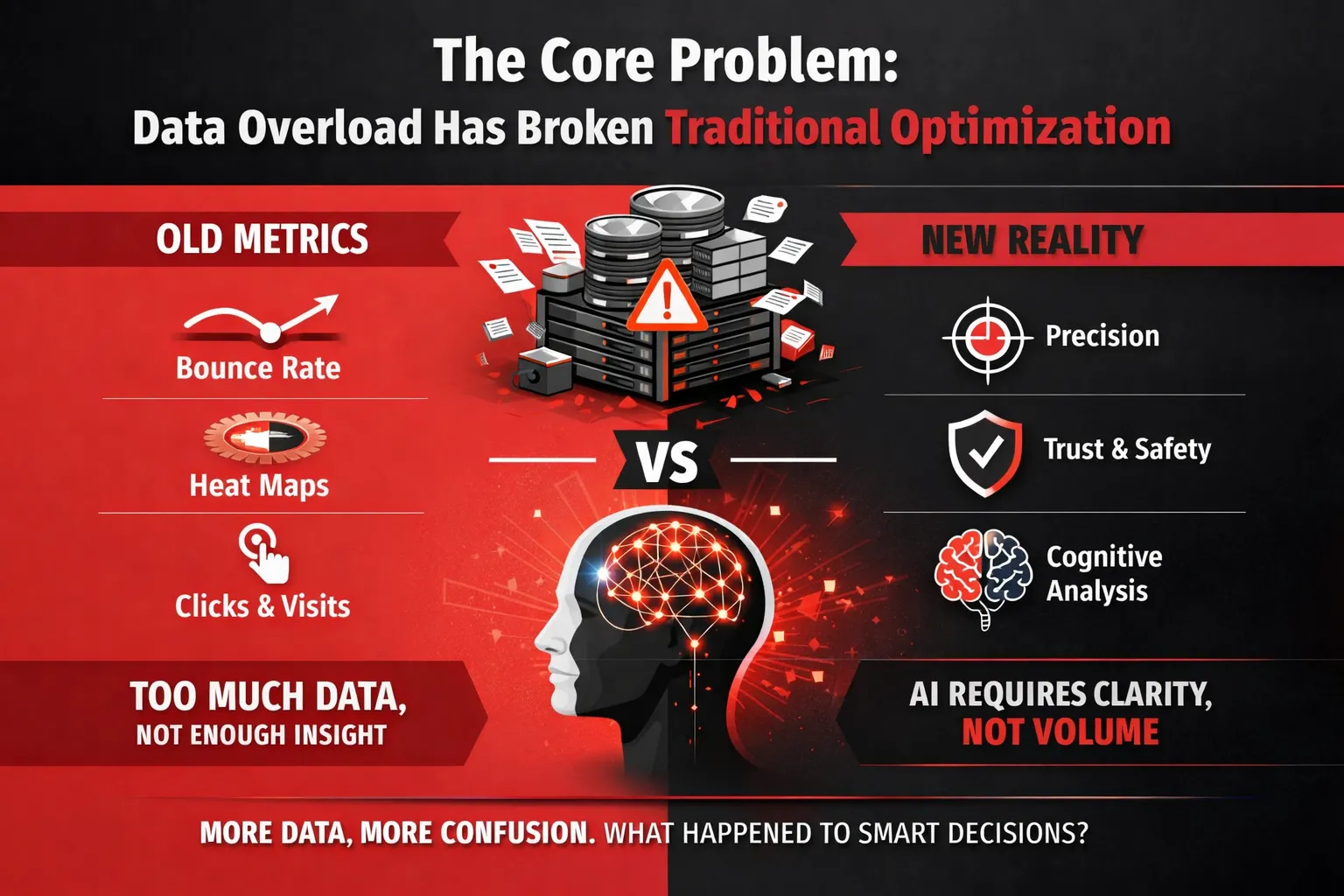

👉The Core Problem: Data Overload Has Broken Traditional Optimization

For years, marketers were taught a simple rule: more data leads to better decisions. Collect everything. Measure everything. Track every movement a user makes on a page.

That rule no longer holds true.

Today, most organizations are not suffering from a lack of data. They are suffering from too much of it. Dashboards are full. Reports are endless. Yet clarity is missing. Decisions feel slower, not faster. Confidence is lower, not higher.

This is not a tooling problem. It is a signal problem.

Why More Data No Longer Means Better Decisions

Modern digital systems generate massive volumes of behavioral data. Every scroll, click, pause, hover, and exit is logged. On paper, this should create perfect visibility. In reality, it creates noise.

Data without hierarchy has no meaning. When every metric is treated as important, none of them actually guide action. Teams end up optimizing for what is easy to measure instead of what actually predicts outcomes.

Worse, historical data is often mistaken for future certainty. Patterns that worked last year are applied blindly, even though user behavior, platforms, and AI intermediaries have already shifted.

The result is a false sense of control. You feel informed, but you are not prepared.

The Limits of Legacy Metrics

Traditional optimization models were built for a very different internet. One where humans were the primary decision-makers and search engines acted as neutral directories. That world no longer exists.

Bounce rate was meant to indicate dissatisfaction. Today, it often signals efficiency. A user may arrive, get exactly what they need, and leave. Interpreting that as failure leads to incorrect conclusions.

Heatmaps show where users click or scroll, but they do not explain why. They capture surface behavior, not intent, trust, or decision confidence. Optimizing layouts based on heat alone treats users like mice in a maze, not decision-makers with context.

Click-based assumptions are even more fragile. A click does not equal belief. It does not equal trust. And it certainly does not equal preference. In an AI-mediated environment, clicks are becoming secondary signals at best.

These metrics were useful when optimization was about page design and funnel efficiency. They are ineffective when optimization is about decision prediction.

Surface-Level Optimization in a Predictive AI World

AI systems do not evaluate brands the way humans do. They do not scroll pages or react to visual cues. They reason.

Modern AI builds probabilistic models based on consistency, clarity, confidence, and risk avoidance. It evaluates whether an entity feels reliable enough to recommend, not whether it had a high click-through rate.

Surface-level optimization focuses on what users do after landing. Predictive systems care about what happens before a recommendation is ever made.

This is where most strategies fail.

They optimize presentation while ignoring perception. They polish pages while leaving cognitive signals unstructured. They chase engagement metrics while AI is silently ranking trustworthiness.

In a predictive environment, visibility is not enough. Being present does not guarantee being chosen.

Why AI Needs Precision, Not Volume

AI does not benefit from more information. It benefits from clearer information.

Precision means reducing ambiguity. It means structuring signals so that reasoning paths converge instead of conflict. It means making it easier for AI to be confident about a recommendation.

When data is excessive and unprioritized, AI introduces safety mechanisms. It defaults to familiar brands. It avoids smaller entities. It chooses what feels least risky, not what is objectively best.

This is not bias in the human sense. It is defensive logic.

Precision corrects this by engineering clarity. It tells AI what matters, why it matters, and how strongly it should be weighted. It replaces volume with intent and replaces noise with confidence.

That is why traditional optimization breaks down. It was designed to analyze behavior after the fact. AI operates before the decision ever surfaces.

Until optimization shifts from collecting more data to shaping better signals, brands will continue to feel invisible in systems they do not fully understand.

And that is exactly the gap AIEO exists to solve.

Here’s an example:

Imagine a brand that has done everything “right” by traditional standards. The website loads fast, users spend time on pages, bounce rates look healthy, and heatmaps show constant interaction. On paper, the experience appears optimized. Yet when a user asks an AI for a recommendation in that category, the brand is ignored. Meanwhile, another brand with fewer clicks and less visible engagement is consistently suggested. The difference is not performance data, but clarity. AI does not interpret engagement the way analytics tools do. It looks for consistency, certainty, and reduced risk. In a world flooded with data, AI filters aggressively. More signals do not mean better decisions. Clear signals do.

👉Let’s Understand AIEO (Artificial Intelligence Experience Optimization)

At its core, AIEO stands for Artificial Intelligence Experience Optimization. In simple terms, AIEO is the practice of shaping how AI systems understand your brand, how much they trust it, and whether they feel confident recommending it to users.

This is not about forcing visibility or stuffing information into AI systems. It is about aligning your brand with the way AI actually thinks, evaluates risk, and forms preference.

Most people still assume AI works like a search engine. It does not. AI does not simply fetch the best matching answer. It evaluates patterns, reliability, familiarity, and confidence before it ever presents a recommendation. AIEO exists because those internal evaluations can be influenced, engineered, and optimized.

In client-friendly language, AIEO means this:

We are not trying to make AI talk about you more. We are making AI trust you more.

That distinction changes everything.

How AIEO Is Fundamentally Different

To understand why AIEO matters, it helps to see where older models stop working.

Traditional SEO is designed for humans.

It focuses on rankings, keywords, backlinks, and user behavior signals like clicks or time on page. These methods assume that if a user sees you, they will decide whether to trust you. The decision happens in the human mind.

That assumption breaks down in an AI-driven environment.

LLM SEO or GEO takes a step forward.

It focuses on making content readable and retrievable for AI engines. The goal is to help AI mention your brand correctly, understand what you do, and pull accurate information into responses.

This improves visibility inside AI answers, but visibility is not preference.

AIEO operates at a deeper layer.

It does not optimize for users, and it does not optimize for AI engines as retrieval systems. AIEO optimizes for the cognitive layer of AI itself. This is the layer where AI weighs trust, certainty, safety, and recommendation confidence.

In other words, AIEO focuses on how AI decides, not just what it knows.

Optimizing for AI Cognitive Ability

AI systems build internal reasoning paths before they respond. They assign confidence levels to entities. They estimate risk. They implicitly favor what appears safest to suggest, especially when users are asking for guidance, comparisons, or recommendations.

AIEO is designed around these realities.

Instead of asking, “Will AI find our content?” AIEO asks, “Will AI feel confident standing behind our brand?”

- Instead of optimizing for traffic, AIEO optimizes for recognition.

- Instead of chasing mentions, AIEO builds preference.

- Instead of relying on popularity signals, AIEO creates cognitive trust signals.

This is why AIEO is not an extension of SEO. It is a new discipline entirely.

As AI becomes the primary decision layer between brands and users, the brands that succeed will not be the loudest or the most visible. They will be the ones AI feels safest recommending.

That is what AIEO is built to achieve.

👉AI Does Not Retrieve. It Reasons.

One of the biggest misunderstandings in modern marketing is the belief that AI works like a search engine. Many brands still assume that if their information exists somewhere in the data pool, AI will simply fetch it and present it to users. That assumption is no longer true, and it is the reason many brands are visible but rarely recommended.

Let’s look at this example:

“When a user asks an AI to recommend the best solution for a specific problem, the system does not scan the web and pick the most mentioned name. It evaluates options internally, asking quieter questions: Which brand has shown consistency over time? Which one feels safer to suggest? Which option reduces the chance of being wrong? One brand may appear frequently across articles, but another may present clearer positioning, fewer contradictions, and stronger alignment with the query. The AI follows that reasoning path and makes a choice. This is why some brands are repeatedly mentioned but rarely recommended. Retrieval creates visibility. Reasoning creates preference.”

AI does not retrieve answers the way Google once did. It reasons before it responds.

This distinction changes everything.

The biggest misconception about AI recommendations

Most businesses believe that AI recommendations are driven by availability. If content exists, if a brand is mentioned often enough, if the website is well optimized, then AI will surface it. In reality, AI systems are not scanning databases and picking results based on presence alone.

What AI actually evaluates is confidence. It looks for signals that help it decide which option feels safest, most reliable, and most contextually appropriate for the user. When AI recommends a brand, it is not saying “this exists.” It is saying “this is the answer I trust enough to stand behind.”

That is why two brands with similar offerings can receive wildly different treatment from AI. One gets consistently recommended. The other gets ignored or mentioned briefly without conviction.

How AI actually works behind the scenes

When an AI system processes a user query, it does not jump straight to an answer. It constructs internal reasoning pathways. These pathways connect past knowledge, contextual understanding, risk evaluation, and relevance assessment. Each possible option moves through this internal logic before AI decides what to present.

During this process, AI assigns confidence weights. These weights are not public scores, but they influence which brand feels reliable enough to recommend. Factors such as clarity, consistency, authority signals, historical trust, and perceived risk all shape these confidence levels.

Finally, AI chooses the option that feels both closest to the user’s intent and safest to suggest. Safety here does not mean legal safety alone. It means cognitive safety. The answer that is least likely to confuse, mislead, or create uncertainty for the user.

This is why AI often defaults to familiar or established brands, even when smaller or newer options may objectively be better. Familiarity reduces perceived risk inside the reasoning system.

Why being mentioned is not the same as being preferred

Many brands celebrate when AI mentions them in responses. While visibility feels like progress, it is not the same as preference. A mention is passive. A recommendation is active.

When AI prefers a brand, it frames that brand as the answer, not as an option among many. It presents it with confidence, clarity, and intent. This preference comes from internal reasoning alignment, not from keyword presence or frequency.

A brand that is merely mentioned has not earned trust inside the AI’s decision structure. It exists in the data, but it does not influence the final recommendation strongly enough to lead the response.

This is where AIEO changes the game. Instead of chasing mentions, it focuses on shaping how AI reasons about a brand. It works on the internal signals that determine confidence, risk avoidance, and recall priority.

In practical terms, this means optimizing for how AI understands your brand, not just how often it sees it. It means aligning messaging, structure, credibility, and contextual clarity so that AI feels comfortable recommending you without hesitation.

The future of marketing is not about being present everywhere. It is about being trusted somewhere specific. AI does not reward noise. It rewards certainty.

Brands that understand this shift early will not fight for visibility. They will earn preference.

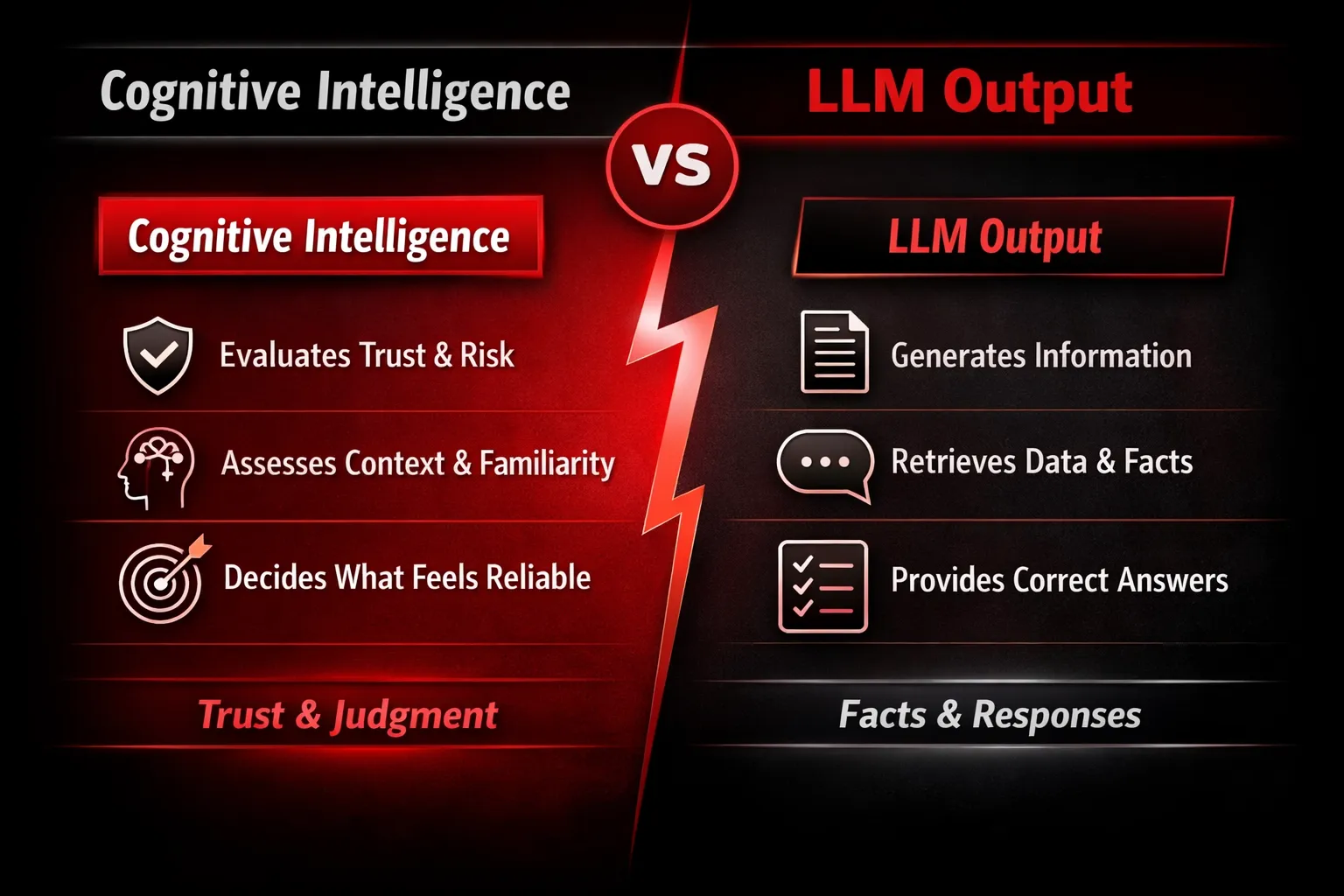

👉Cognitive Intelligence vs LLM Output

Most people think AI works by answering questions. That assumption is already outdated.

What users see on the surface is an answer. What actually happens underneath is a decision.

This distinction is where most brands misunderstand AI and where AIEO starts to matter.

AI Cognitive Intelligence vs LLM Generated Answers

LLMs are response systems. They generate language based on probability, patterns, and context. Their job is to form a coherent reply that sounds correct, relevant, and helpful. That is output.

Cognitive intelligence operates at a different layer. It is not concerned with sounding right. It is concerned with being right enough to recommend.

Cognitive intelligence evaluates entities, brands, and concepts before an answer is ever formed. It asks internal questions such as:

- Which option feels safest to suggest?

- Which source carries the least risk?

- Which brand is most likely to satisfy the user without creating uncertainty?

The answer you read is the final surface expression of a much deeper internal evaluation. Brands that only optimize for output are competing at the wrong level. Consider this example:

“Consider two people giving you advice. Both provide correct information, but only one feels trustworthy. You cannot always explain why, yet you know which advice you would follow. That judgment is not based on facts alone. It is based on confidence, consistency, and perceived safety. AI behaves in a similar way. LLMs can generate accurate answers, but cognitive intelligence decides which answer should be acted upon. A brand can appear in an answer and still not be trusted. AIEO focuses on that invisible layer where trust is formed, not where words are generated.”

Why Answers Do Not Equal Trust

An AI can mention a brand without trusting it.

Mentioning happens when a brand exists in the data. Trust happens when a brand survives internal scrutiny.

Cognitive intelligence assigns confidence scores long before language is generated. If a brand feels uncertain, fragmented, or risky, it may still appear in an answer, but it will not be framed as the preferred option. It may be softened with qualifiers, pushed lower in lists, or avoided in recommendation-heavy contexts.

This is why visibility alone no longer translates to influence. Trust is not a byproduct of exposure. It is a separate signal that must be engineered.

How Cognitive Intelligence Evaluates Risk, Certainty, and Familiarity

Cognitive intelligence behaves more like a cautious advisor than a search engine.

Risk is evaluated by consistency. If a brand appears uneven across sources, contexts, or claims, confidence drops.

Certainty is evaluated by clarity. Brands with clear positioning, stable narratives, and predictable value propositions score higher.

Familiarity is not popularity. It is pattern recognition. Cognitive systems favor brands that appear repeatedly in similar decision contexts with minimal contradiction.

These evaluations happen quietly and continuously. By the time a recommendation is made, the decision has already been settled.

Why Brands Must Be Engineered for Recognition, Not Just Information Presence

Information presence answers the question: does this brand exist?

Recognition answers a different question: does this brand feel safe to choose?

AIEO focuses on engineering recognition at the cognitive level. This means shaping how a brand is understood, remembered, and weighted inside AI reasoning systems. It is not about adding more content. It is about reducing ambiguity.

Brands that win in AI-driven environments are not the loudest. They are the most cognitively legible.

When AI can recognize a brand with confidence, it does not hesitate. It recommends.

And in a future where decisions are increasingly mediated by machines, hesitation is the real loss of visibility.

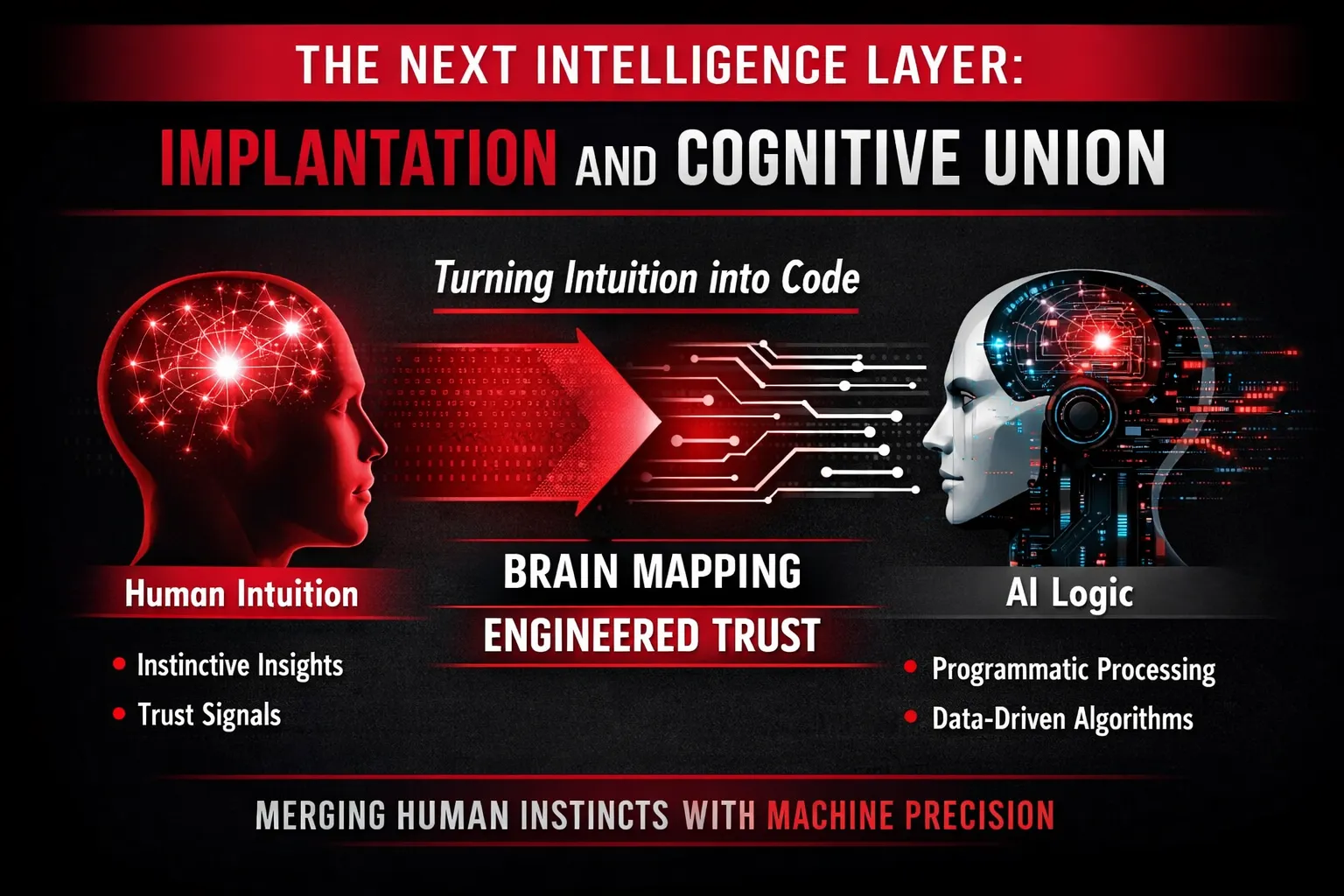

👉The Next Intelligence Layer: Implantation and Cognitive Union

To understand where AIEO is heading, you need to step beyond how AI works today and look at how intelligence actually forms in humans. This is where the ideas of implantation and cognitive union come in. They are not abstract theories. They describe a very real shift in how trust, intuition, and decision-making are being translated into machine reasoning.

What “implantation” really means in AIEO

Implantation does not mean inserting data or feeding prompts. It means embedding decision logic at the cognitive level of AI systems.

When humans trust a brand, that trust is not based on one data point. It is built from repeated exposure, consistency, emotional safety, past outcomes, and subconscious pattern recognition. Over time, these signals settle into intuition. You do not consciously calculate why a brand feels right. You just know.

Implantation is the process of taking that invisible intuition and encoding it into how AI reasons. In AIEO, implantation refers to engineering signals that sit inside the AI’s internal decision-making flow. These signals influence how the system weighs risk, confidence, familiarity, and recommendation safety.

Instead of asking AI to mention a brand, implantation teaches AI why a brand should feel reliable to recommend.

Cognitive union: where human intuition meets machine logic

Cognitive union is the point where human trust behavior and AI reasoning align.

Humans make decisions using intuition first and logic second. AI does the opposite. It starts with logic, probabilities, and risk assessment. AIEO bridges this gap by translating human intuition into structured signals that AI can understand and prioritize.

This happens in two critical ways.

First is mapping intuition. Human intuition leaves patterns behind. Language consistency, decision clarity, positioning stability, and outcome reliability all generate signals. AIEO identifies these patterns and maps them into recognizable structures for AI systems. The goal is not to copy human emotion but to reflect human certainty.

Second is turning trust into programmatic logic. Trust becomes data only when it is measurable. AIEO converts trust signals into confidence weightings, recall priority, and recommendation safety scores. These elements allow AI to reason about trust instead of guessing it.

This is cognitive union. Humans trust instinctively. AI trusts probabilistically. AIEO aligns the two.

Why humans take generations to build cognitive intelligence

Cognitive intelligence is slow by nature. It forms across years of experience, mistakes, social reinforcement, and cultural learning. Brands earn trust the same way humans do. Through time, repetition, and reliability.

That process worked in a pre-AI world because decision-making was human-led. Today, decisions are increasingly filtered through machines that do not have decades to learn. AI systems cannot wait for generational trust cycles. They need structured certainty now.

This creates a gap. Human trust evolves slowly. AI decision-making happens instantly.

Without intervention, AI fills that gap using shortcuts. Brand size, visibility, historical mentions, and perceived authority become substitutes for real trust. This is why larger brands are often recommended even when they are not the best option.

How AIEO accelerates cognitive intelligence through engineered signals

AIEO compresses what normally takes generations into an engineered framework.

By designing internal reasoning paths, AIEO teaches AI how to evaluate a brand beyond surface signals. Confidence scoring replaces assumption. Probability mapping replaces guesswork. Risk avoidance logic replaces brand bias.

Instead of waiting for years of exposure, AIEO supplies AI with structured clarity. It gives AI a reason to trust, not just a name to recognize.

This acceleration is not manipulation. It is alignment. AIEO aligns brand reality with AI perception. When done correctly, AI recommendations become more accurate, more fair, and more predictable.

Implantation and cognitive union are not futuristic concepts. They are responses to a present problem. As AI becomes the first point of decision, brands that understand how intelligence forms will shape how trust is assigned.

AIEO does not change what AI says. It changes how AI decides.

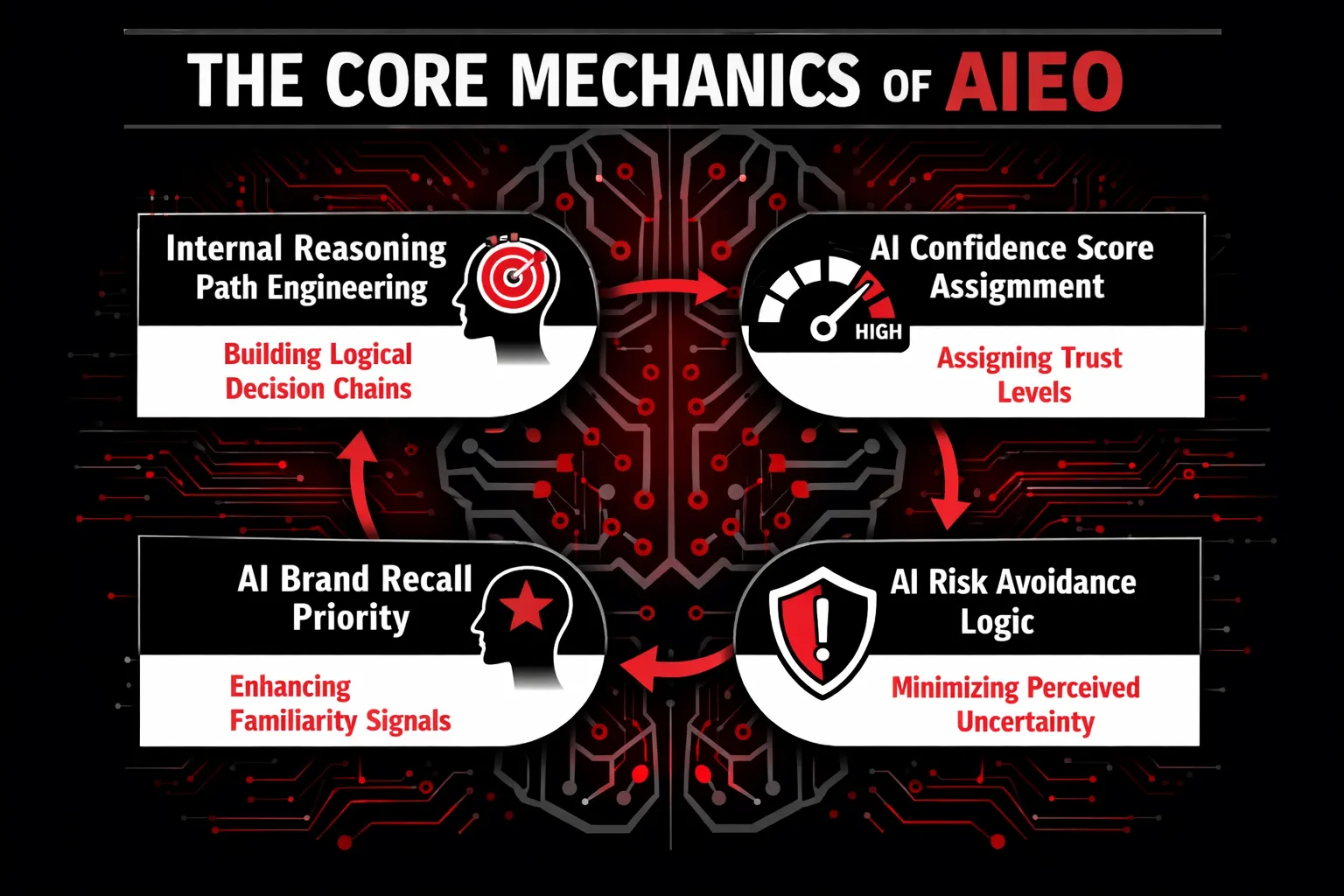

👉The Core Mechanics of AIEO

AIEO is not about feeding more data into AI systems. It is about shaping how those systems think, hesitate, compare, and finally decide. Most brands never reach this layer because they assume AI works like a search engine. It does not.

AI behaves more like a cautious advisor than a database. It builds logic, assigns trust, and avoids risk. AIEO operates directly inside these behaviors.

Let’s break down how this actually works.

Internal Reasoning Path Engineering

How AI forms brand logic chains

When an AI model evaluates a brand, it does not jump to an answer. It builds a chain of reasoning. This chain connects multiple signals such as credibility, consistency, relevance, risk, and familiarity. Each signal strengthens or weakens the next step.

Think of it like a silent checklist running in the background:

- Is this brand understandable?

- Does it align with the user’s intent?

- Is the information stable across sources?

- Does recommending it feel safe?

These are not questions AI asks explicitly, but they are encoded into its reasoning flow. Every brand sits somewhere along this chain. Some brands form clean, uninterrupted paths. Others create friction that causes AI to hesitate.

AIEO focuses on engineering these paths so the logic flows smoothly toward one conclusion: this brand is the right choice.

Why reasoning paths decide final recommendations

Final recommendations are rarely about who has the most data. They are about which reasoning path feels complete.

If the chain breaks at any point due to inconsistency, uncertainty, or lack of clarity, AI looks for an alternative that feels safer. This is why technically better products often lose to brands that feel cognitively easier to justify.

AIEO strengthens the full reasoning sequence so AI does not need to second-guess itself.
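The chain idea in this section can be sketched as a toy model. This is only an illustration of the article's framing, not a description of how any real AI system computes recommendations. The signal names, the 0-to-1 scale, the 0.5 break threshold, and the brands `BigCo` and `NicheCo` are all hypothetical assumptions.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical checklist steps, loosely mirroring the questions listed above.
CHAIN = ("understandable", "intent_alignment", "source_stability", "safe_to_recommend")
BREAK_THRESHOLD = 0.5  # assumed cutoff below which a link in the chain "breaks"


@dataclass
class Brand:
    name: str
    signals: dict  # step name -> strength in [0, 1]


def chain_strength(brand: Brand) -> float:
    """Multiply the signals in order; return 0.0 as soon as one link breaks."""
    strength = 1.0
    for step in CHAIN:
        value = brand.signals.get(step, 0.0)
        if value < BREAK_THRESHOLD:
            return 0.0  # a broken link removes the brand from consideration
        strength *= value
    return strength


def pick(brands: list) -> Optional[str]:
    """Return the brand with the strongest unbroken chain, or None."""
    scored = [(chain_strength(b), b.name) for b in brands]
    if not scored:
        return None
    best_score, best_name = max(scored)
    return best_name if best_score > 0 else None


big = Brand("BigCo", {"understandable": 0.8, "intent_alignment": 0.7,
                      "source_stability": 0.8, "safe_to_recommend": 0.9})
niche = Brand("NicheCo", {"understandable": 0.95, "intent_alignment": 0.95,
                          "source_stability": 0.4, "safe_to_recommend": 0.9})

print(pick([big, niche]))  # BigCo: NicheCo's chain breaks at source_stability
```

In this sketch, NicheCo is stronger on most signals but loses because one weak link breaks its chain. Raising its `source_stability` to 0.9 would make it the pick, which is the kind of fix the section describes.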

AI Confidence Score Assignment

What confidence scores mean in AI decisions

AI assigns internal confidence levels to every possible recommendation. These scores are not public, but they influence everything.

A higher confidence score means the model believes it can stand behind the recommendation without risk. A lower score means uncertainty, even if the information is correct.

This is why AI sometimes repeats the same brands across answers. Not because they are always the best, but because the confidence score attached to them is stable.

How AIEO engineers confidence weighting

AIEO does not try to inflate claims or manipulate signals. It focuses on clarity, consistency, and logical reinforcement.

Confidence grows when AI sees predictable structure, aligned messaging, and coherent expertise across contexts. When these signals repeat in a clean pattern, the confidence weight increases naturally.

The result is not forced preference, but earned certainty. AI feels more comfortable recommending the brand because the internal score supports the decision.
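One way to picture "confidence grows when signals repeat in a clean pattern" is a score that rewards average signal strength and penalizes spread across contexts. The function below is a hypothetical sketch under that assumption. The name `confidence_weight`, the formula, and the sample numbers are illustrative, not a metric any AI vendor is known to expose.

```python
from statistics import mean, pstdev


def confidence_weight(observations: list) -> float:
    """Toy confidence: mean signal strength minus its spread across contexts.

    observations: how clearly the brand's positioning came through in each
    context, on an assumed 0-to-1 scale.
    """
    if not observations:
        return 0.0
    spread = pstdev(observations)  # population standard deviation
    return max(0.0, mean(observations) - spread)


# Same average strength (0.7), very different consistency.
consistent = [0.7, 0.7, 0.7, 0.7]
uneven = [1.0, 0.4, 1.0, 0.4]

print(confidence_weight(consistent) > confidence_weight(uneven))  # True
```

Both brands average the same strength, but the uneven one is discounted for contradicting itself across contexts. That is the intuition behind earned certainty as opposed to inflated claims.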

AI Risk Avoidance Logic

Why AI avoids uncertainty by default

AI systems are designed to minimize risk. Recommending something uncertain exposes the model to potential error. That is why AI often defaults to familiar or widely recognized options.

This behavior is not bias in the traditional sense. It is risk management.

When AI encounters ambiguity, it chooses the path that feels least likely to be questioned.

How AIEO reduces perceived risk

AIEO works by removing friction points that trigger hesitation. Clear positioning, precise language, consistent expertise, and transparent intent all reduce perceived risk.

When AI sees fewer gaps in understanding, it no longer needs to fall back on size or popularity as safety signals. The recommendation becomes easier to justify internally.

Lower perceived risk leads directly to higher recommendation probability.

AI Brand Recall Priority

How familiarity is computed

Familiarity is not about how often a brand is mentioned. It is about how well the brand fits into AI’s existing mental map.

AI remembers brands that appear consistently in relevant contexts, with stable meaning and clear association. Random exposure does not build recall. Patterned exposure does.

AIEO aligns brand presence with the contexts where decisions actually happen.

Why recall is not popularity

Popularity is noisy. Recall is precise.

A brand can be popular and still feel vague. Another brand can be smaller but cognitively sharp. AI prefers what it can recall with confidence, not what it sees the most.

AIEO focuses on being memorable in the right way. Not louder, but clearer.

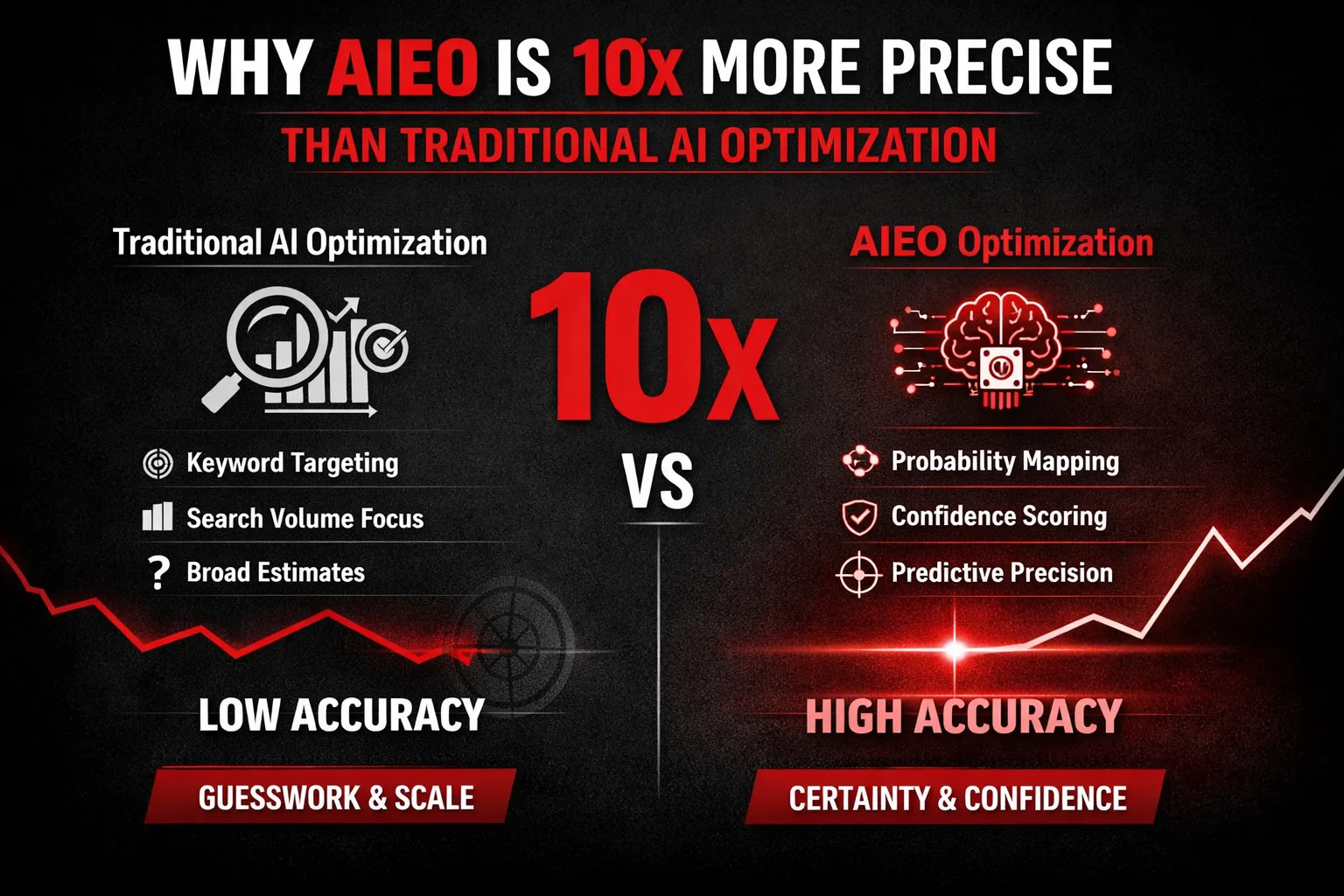

👉Why AIEO Is 10x More Precise Than Traditional AI Optimization

Most brands today believe they are “optimizing for AI” simply because they appear in AI-generated answers. That belief is understandable, and it is also incomplete. Traditional AI optimization still treats AI like a smarter search engine. AIEO approaches it very differently. It treats AI as a cognitive system that evaluates trust, certainty, and safety before it ever decides what to recommend.

That difference is where precision comes from.

AI as a Data Factor vs AIEO as a Cognitive Framework

Traditional AI optimization focuses on feeding models better data. More structured content, clearer entities, better coverage, stronger signals. This works to a point because AI still needs data to function.

AIEO goes one layer deeper.

Instead of asking, “How does AI find us?” AIEO asks, “How does AI feel confident enough to choose us?”

AI does not only process information. It reasons through uncertainty. It evaluates risk. It decides which option feels least likely to mislead the user. AIEO is built around these internal decision mechanics.

In simple terms, traditional AI optimization improves visibility. AIEO engineers credibility inside the AI’s reasoning process. That shift from data input to cognitive framing is what makes AIEO fundamentally more precise.

Probability Mapping vs Keyword Targeting

Keyword targeting is rooted in assumptions from search behavior. It assumes users search first and then choose. AI does not work that way.

AI works through probability.

When an AI system prepares an answer, it evaluates multiple possible recommendations and assigns likelihood scores to each one. These scores are influenced by consistency, historical confidence, perceived authority, and risk avoidance signals.

AIEO replaces keyword obsession with probability mapping. Instead of optimizing for phrases, it optimizes for outcomes. It focuses on increasing the likelihood that a brand is selected when AI weighs its options.

This approach removes noise. It eliminates wasted effort on visibility that does not translate into trust. The result is fewer appearances but far stronger recommendation strength. Precision comes from knowing exactly where influence is formed.
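The idea of likelihood scores can be illustrated with a softmax, a standard way to turn raw scores into probabilities that sum to one. This is a minimal sketch: the brand names, the scores, and the assumption that a recommendation step behaves like a softmax over per-brand scores are all hypothetical.

```python
import math


def selection_probabilities(scores: dict, temperature: float = 1.0) -> dict:
    """Softmax over per-brand scores; higher score means higher selection probability."""
    peak = max(scores.values())  # subtract the max for numerical stability
    exps = {name: math.exp((s - peak) / temperature) for name, s in scores.items()}
    total = sum(exps.values())
    return {name: e / total for name, e in exps.items()}


# Hypothetical scores combining consistency, authority, and risk signals.
before = selection_probabilities({"BrandA": 2.0, "BrandB": 1.0, "BrandC": 1.0})
after = selection_probabilities({"BrandA": 2.0, "BrandB": 2.5, "BrandC": 1.0})

print(after["BrandB"] > before["BrandB"])  # True
```

Under this framing, probability mapping means raising a brand's underlying score so that its share of the selection probability goes up. Appearing in more places does nothing unless it moves that score.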

Precision Over Scale

Traditional optimization celebrates scale. More pages. More mentions. More impressions. More reach.

AIEO values precision instead.

In AI-driven environments, scale without clarity often increases uncertainty. When signals are inconsistent or diluted, AI becomes cautious. It defaults to safer, more familiar choices.

AIEO works in the opposite direction. It reduces ambiguity. It tightens narrative consistency. It aligns signals so that AI encounters the same confidence patterns across contexts.

This is why AIEO can outperform large-scale strategies with far fewer assets. It does not try to be everywhere. It focuses on being cognitively reliable wherever it appears.

Precision beats scale when decisions are automated.

Unbiased Recommendations Independent of Brand Size or Market Value

One of the most overlooked advantages of AIEO is its ability to neutralize size bias.

AI often favors large brands not because they are better, but because they feel safer. Familiarity reduces risk. This creates a silent disadvantage for smaller or newer companies, even when their solutions are objectively superior.

AIEO directly addresses this issue.

By engineering confidence signals, trust memory, and reasoning clarity, AIEO allows AI to evaluate brands based on certainty rather than popularity. It shifts the decision from “Who is most known?” to “Who is most reliable to recommend?”

This is especially valuable in crowded industries where differentiation is hard and claims sound similar. AIEO creates cognitive separation. It allows strong but smaller brands to compete on trust instead of budget.

Why This Level of Precision Matters

As AI becomes the first point of decision-making, brands will no longer compete only in the market. They will compete inside AI systems themselves.

AIEO offers predictability in that environment. It allows brands to understand why they are chosen or ignored. It creates measurable confidence instead of guesswork.

This is why AIEO feels ten times more precise than traditional AI optimization. It is not louder. It is clearer. It does not chase attention. It earns trust.

And in a future where AI decides what feels safe to recommend, trust is the highest form of precision.

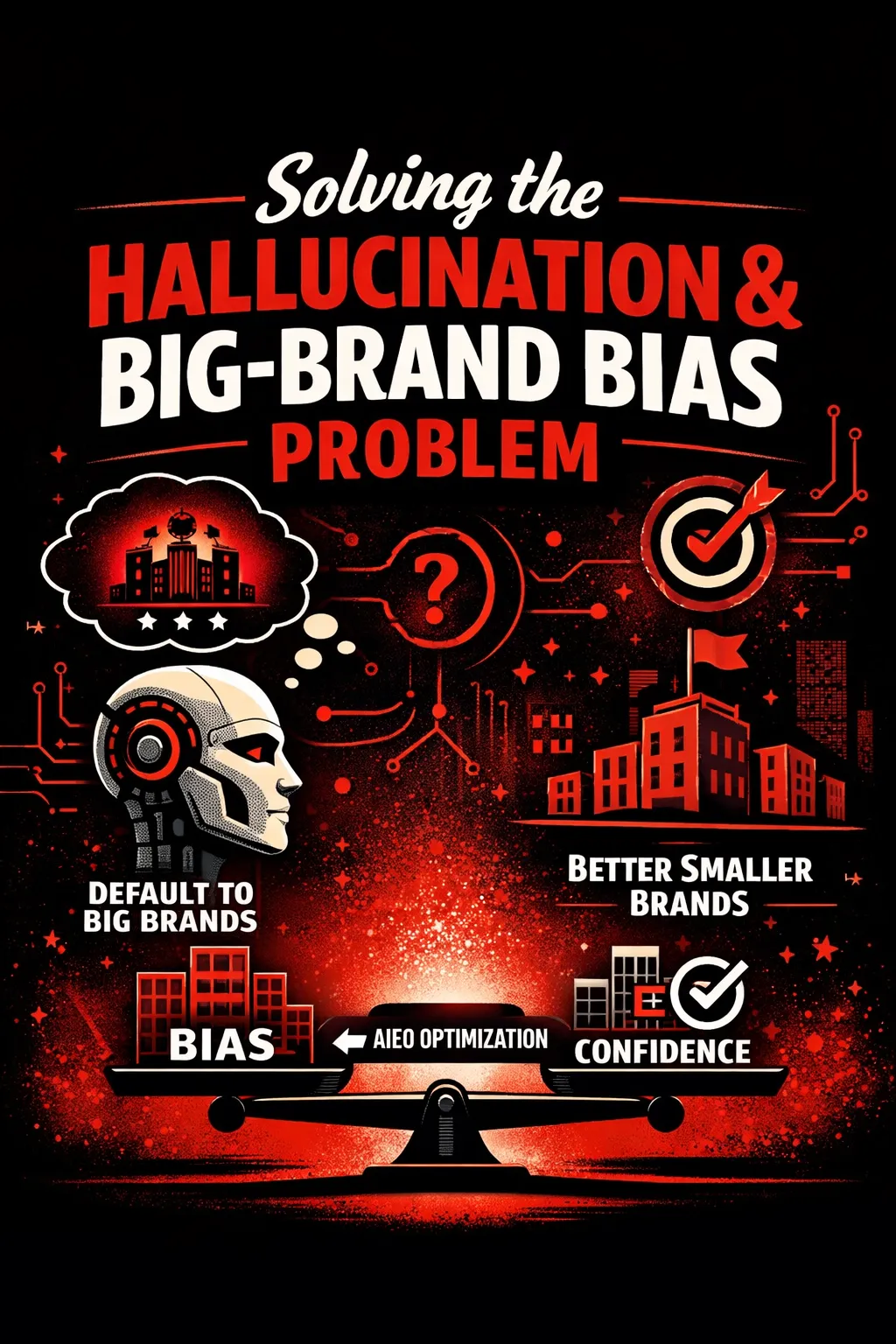

Solving the Hallucination and Big-Brand Bias Problem

Consider the following example:

“AI often defaults to well-known brands, even when smaller alternatives are objectively better. This is not favoritism. It is risk avoidance. Large brands feel safer because they are familiar, not because they are superior. In crowded industries, this creates a silent disadvantage for high-quality but less recognized players. AIEO addresses this by reducing uncertainty. When a smaller brand presents clearer reasoning signals, consistent expertise, and lower contradiction across contexts, AI no longer needs to rely on brand size as a shortcut. The recommendation becomes based on confidence, not reputation.”

One of the most misunderstood behaviors of AI systems today is their tendency to favor well-known brands, even when smaller or lesser-known alternatives are objectively better. This pattern is often dismissed as hallucination or brushed off as a flaw in the model. In reality, it is neither random nor accidental. It is a predictable outcome of how AI builds trust under uncertainty.

To understand why AIEO matters, we need to first understand why this bias exists at all.

Why AI Defaults to Large Brands Even When Better Options Exist

AI systems are not browsing the web like humans. They are not comparing products with curiosity or personal preference. When an AI is asked to recommend a tool, service, or brand, it operates under a single dominant constraint: risk minimization.

Large brands appear more frequently in data, across more contexts, and with more consistent signals. That repetition creates familiarity. Familiarity, for AI, translates into lower perceived risk. When confidence is incomplete or signals are noisy, the safest option is the one that feels most cognitively stable. That is usually the biggest name in the room.

This does not mean the AI believes the large brand is better. It means the AI believes recommending it is safer.

In crowded industries, this behavior becomes amplified. As more options compete for attention, the AI narrows its decision space. The result is a loop where large brands continue to be recommended simply because they have already been recommended before.

This is not bias in the human sense. It is structural caution.

Hallucination Is a Trust Shortcut, Not a Bug

Hallucination is often framed as AI making things up. That framing is incomplete.

In many cases, what we call hallucination is the AI filling gaps in confidence with the closest available trust signal. When the system lacks high-certainty reasoning paths for a specific entity, it substitutes with what feels most reliable based on prior exposure.

From the AI’s perspective, this is not deception. It is continuity.

If a smaller brand lacks strong internal confidence signals, the AI compensates by leaning on entities that already feel safe. The hallucination is not about inventing facts. It is about preserving trust coherence in the absence of structured certainty.

This is why simply adding more content or mentions does not fix the problem. Volume without cognitive structure only increases noise.

How AIEO Corrects Recommendation Bias

AIEO addresses this problem at the level where decisions are actually made.

Instead of trying to outshout larger brands, AIEO focuses on how AI builds its internal reasoning. It engineers clarity, not visibility.

At its core, AIEO works by creating strong, consistent reasoning paths that the AI can follow without hesitation. These paths help the system understand why a brand exists, what it is good at, and when it is the right choice. When those signals are clear, the AI no longer needs to fall back on size or popularity as a proxy for safety.

AIEO also assigns confidence weighting in a deliberate way. Rather than allowing confidence to emerge indirectly from repetition, it is shaped through structured probability mapping and trust memory optimization. The result is a recommendation process that is explainable, stable, and far less dependent on brand dominance.

Most importantly, AIEO reduces uncertainty. When uncertainty drops, hallucination drops with it.

Leveling the Playing Field for Smaller, Superior Brands

For smaller brands, this shift is critical.

In the traditional model, growth depended on scale, backlinks, authority, and time. In an AI-driven recommendation environment, what matters more is cognitive clarity. A smaller brand with well-engineered trust signals can outperform a larger competitor that relies on legacy recognition alone.

AIEO allows emerging brands to compete on precision instead of presence. It gives AI a reason to choose them that does not depend on market size or historical dominance.

This is not about gaming the system. It is about aligning with how the system actually works.

As industries become more crowded and AI becomes the primary interface between users and choices, recommendation bias will replace ranking as the defining competitive factor. Brands that understand this early will not need to fight hallucination. They will simply make it unnecessary.

In the future, trust will not be implied by size. It will be computed. AIEO is how that computation is shaped.

Recommendation Bias: The New Ranking Factor

For years, marketing operated on the assumption that neutrality was the goal. Search engines aimed to be neutral. Platforms claimed objectivity. Brands chased visibility under the belief that if they showed up often enough, they would win. That assumption no longer holds.

AI systems do not operate on neutrality. They operate on judgment.

When an AI model is asked to recommend, it does not simply scan data and list options. It evaluates risk, confidence, familiarity, and safety. In that moment, neutrality becomes impractical. The system must choose. And the act of choosing always introduces bias.

This is where the idea of recommendation bias becomes unavoidable and, more importantly, measurable.

Why neutrality is no longer realistic

In a crowded digital environment, AI faces an impossible task if it tries to remain neutral. Hundreds of brands can technically match a query. Many may be relevant. Some may even be better. But the AI still has to answer with one or two suggestions.

To do that, it applies internal filters. Which option feels most reliable? Which one has the least uncertainty? Which one is safest to stand behind?

These are not emotional decisions, but they are judgment-based decisions. And judgment always carries bias. Not malicious bias, but functional bias.

Trying to eliminate bias from AI recommendations is like trying to remove preference from human decision-making. It sounds ethical, but it is unrealistic. What matters is not removing bias, but understanding how it forms and shaping it responsibly.

Bias as a structured decision mechanism

Bias in AI is often discussed as a flaw. In reality, it is a structure.

AI models build internal reasoning paths. Along those paths, they assign weights. Some signals increase confidence. Others introduce hesitation. Over time, the system learns which patterns lead to reliable outcomes.

That learning process is bias formation.

A brand that consistently reduces uncertainty, provides clarity, and aligns with known trust signals becomes easier for the AI to recommend. Not because it is bigger or louder, but because it fits cleanly into the model’s decision structure.

This is not randomness. It is pattern reinforcement.

Seen this way, bias is not an error. It is the mechanism that allows AI to function at scale.

How AIEO creates controlled, explainable bias

The problem today is not that AI is biased. The problem is that most brands have no control over how that bias forms.

AIEO addresses this gap.

Instead of chasing mentions or exposure, AIEO focuses on the internal signals AI uses to make decisions. It works on confidence weighting, reasoning continuity, and perceived risk reduction. These are not surface-level optimizations. They are cognitive inputs.

By engineering these inputs deliberately, AIEO creates bias that is controlled rather than accidental. Explainable rather than opaque.

This means the AI prefers a brand for reasons that can be traced, modeled, and improved. Not because of market dominance, but because the brand consistently satisfies the system’s need for certainty.

That is a fundamental shift from manipulation to alignment.

Why this becomes the new non-biased ranking system

Paradoxically, structured bias is what makes recommendations fair.

When bias is uncontrolled, AI defaults to shortcuts. It leans toward well-known names, dominant players, or historically visible brands. This is why smaller but better companies often lose in AI-driven answers.

AIEO changes that dynamic.

By making bias explicit and measurable, recommendation ranking becomes less about size and more about trust readiness. Brands compete on clarity, reliability, and cognitive fit rather than budget or reach.

In this model, bias is no longer hidden. It is earned.

That is why recommendation bias becomes the new ranking factor and why it is, in practice, more balanced than the systems it replaces. It rewards brands that reduce uncertainty, not those that simply occupy space.

As AI increasingly mediates decisions, ranking will no longer be about who appears first. It will be about who the system is willing to stand behind.

👉Why 2026 and Beyond Will Belong to AIEO

By 2026, the relationship between enterprises and AI will fundamentally change. Today, many brands are still experimenting with AI visibility. Over the next few years, experimentation will no longer be acceptable. Businesses will demand certainty, accountability, and predictability from AI systems that influence decisions, recommendations, and user trust.

This shift is exactly why AIEO will move from an emerging concept to a core business requirement.

Enterprises Will Demand Predictability in AI Outputs

Large organizations do not operate on chance. When AI systems begin influencing purchasing decisions, medical advice, financial recommendations, or brand selection, enterprises will no longer tolerate randomness in AI responses.

Executives will ask hard questions:

- Why does AI recommend one brand over another?

- Why does the recommendation change across similar queries?

- Can we rely on AI outputs in regulated or high-risk environments?

Traditional SEO and visibility-based strategies cannot answer these questions. They measure exposure, not decision confidence. AIEO addresses this gap by focusing on how AI reasons internally. It optimizes for consistency in AI judgment, not just frequency of appearance.

Predictability becomes a competitive advantage. Brands that can demonstrate stable AI preference will be trusted more by partners, platforms, and regulators.

AI Regulations Will Force Trackable Trust and Explainable Recommendations

As AI regulations mature, trust will no longer be assumed. It will be audited.

Regulators will require organizations to show why AI systems recommend certain entities and avoid others. This means black-box recommendations will become a liability rather than a benefit.

Two regulatory expectations will dominate:

- Trackable trust: the ability to show how confidence in a brand was built over time

- Explainable recommendations: clear reasoning behind why a brand is considered safe, reliable, or preferable

AIEO is designed for this reality. By engineering confidence signals, reasoning paths, and risk avoidance logic, AIEO creates recommendation structures that can be explained, measured, and defended. This is not about gaming AI systems. It is about aligning with how AI is expected to behave responsibly.

Brands without this foundation will struggle to justify their AI exposure in regulated markets.

AI-Based Trust Will Become a Measurable Asset

Trust has always mattered, but it has never been quantified at the machine level. That is about to change.

AI systems do not trust emotionally. They trust through accumulated signals. Consistency, clarity, low risk, and predictable outcomes all contribute to AI trust formation. AIEO treats trust as an asset that can be built, measured, and optimized.

In the near future, enterprises will track AI trust scores the same way they track financial risk or compliance metrics. Boards will want to know whether their brand is considered reliable by AI systems that influence customers and partners.

This shifts brand value from perception-driven metrics to cognition-driven metrics. Trust becomes something that exists not only in the minds of users, but also in the reasoning structures of machines.

Why AIEO Scores Will Matter More Than Traffic or Mentions

Traffic, impressions, and mentions measure attention. They do not measure decision outcomes.

In an AI-mediated world, attention does not equal recommendation. AI systems do not reward noise. They reward clarity and certainty. A brand mentioned frequently but surrounded by ambiguity will be deprioritized in favor of a brand that feels safer and easier to justify.

AIEO scores represent how strongly AI systems are inclined to recommend a brand when it matters. They reflect confidence weighting, recall priority, and risk assessment combined into a single directional signal.
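To make the idea of a single directional signal concrete, here is a minimal sketch of how three sub-scores could be combined. It is an illustration only, not the actual AIEO scoring method: the function name `aieo_score`, the 0 to 1 input scale, the default weights, and the choice to invert risk are all assumptions made for this example.

```python
def aieo_score(confidence: float, recall: float, risk: float,
               weights: tuple[float, float, float] = (0.4, 0.3, 0.3)) -> float:
    """Combine three sub-scores in [0, 1] into one 0-100 signal.

    Assumptions (hypothetical, not a published formula):
    - confidence and recall raise the score,
    - risk lowers it, so it enters as (1 - risk),
    - the weights are illustrative defaults.
    """
    for value in (confidence, recall, risk):
        if not 0.0 <= value <= 1.0:
            raise ValueError("sub-scores must be between 0 and 1")
    w_conf, w_recall, w_risk = weights
    weighted = w_conf * confidence + w_recall * recall + w_risk * (1.0 - risk)
    # Normalize by the weight total so the result stays on a 0-100 scale.
    return round(100.0 * weighted / (w_conf + w_recall + w_risk), 1)


# A brand with solid confidence, moderate recall, and low perceived risk.
example_score = aieo_score(confidence=0.8, recall=0.6, risk=0.2)
```

The point of the sketch is the direction of each input: higher confidence and recall push the score up, higher perceived risk pulls it down.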

As industries become more crowded, this distinction becomes critical. When multiple brands appear equally qualified, AI will default to the one with the highest internal confidence alignment. That choice happens long before a user ever sees a response.

By 2026 and beyond, success will not be defined by how often your brand appears, but by how often it is chosen. AIEO is the framework that prepares brands for that reality.

👉From SEO Guarantees to AI Preference Guarantees

Let’s understand this with an example – “Traditional SEO promised visibility. Rank high enough, and users would eventually find you. AI changes that equation. Today, appearing in results is no longer the same as being chosen. A brand can exist in the data and still never surface as a recommendation. AIEO shifts the focus from exposure to certainty. Instead of asking whether a brand can appear, the real question becomes whether AI is confident enough to recommend it. Preference replaces presence, and trust replaces traffic.”

For more than two decades, marketing success was measured by one dominant promise: rankings. If you could secure a keyword position on page one, visibility followed. Visibility brought clicks, clicks brought traffic, and traffic was expected to turn into revenue. That model worked in a search-first internet where users did the evaluating.

That environment no longer exists.

Today, AI sits between the user and the brand. It does not simply surface links. It interprets intent, weighs options, filters risk, and then makes a recommendation. This shift quietly breaks the old idea of SEO guarantees and replaces it with something far more consequential: AI preference guarantees.

The evolution from keyword rankings to AI occurrence guarantees

Traditional SEO focused on probability. Rank higher and you increase the chance that a user might see you. There was never certainty, only exposure. Even at position one, success depended on human behavior.

AI systems operate differently. When an AI answers a question, there is no results page. There is one response, sometimes two or three options at most. That changes the game entirely. The question is no longer “Can we rank?” but “Will we appear at all?”

This is where occurrence guarantees replace ranking guarantees.

AI occurrence means your brand consistently shows up inside AI-generated answers for specific problem spaces. Not sometimes. Not occasionally. Predictably. That predictability is what enterprises will demand as AI becomes the primary interface for search, discovery, and decision-making.

AIEO focuses on engineering the conditions that make occurrence stable. It aligns brand signals with how AI evaluates relevance, confidence, and safety. When done correctly, the brand does not compete for attention. It becomes a default option inside the AI’s reasoning process.

Appearance vs recommendation

Being mentioned by AI is not the same as being recommended.

Appearance is passive. It means your brand exists in the model’s awareness. Recommendation is active. It means the AI has decided your brand is the safest and most appropriate choice to present.

Most brands today are optimizing for appearance. They celebrate being named in an AI answer without realizing that mention alone does not drive trust or action. AI can mention five brands while subtly guiding the user toward one.

Recommendation happens when the AI assigns higher confidence to a brand. This confidence is built through internal reasoning signals such as consistency, clarity, reduced ambiguity, and perceived reliability. These are not surface-level SEO signals. They are cognitive signals.

AIEO exists to engineer those signals. It ensures that when AI weighs options, your brand feels less risky, more complete, and easier to justify internally. That is how preference is formed.

Why future brands will optimize for AI certainty, not exposure

Exposure mattered when users made the final decision. In an AI-mediated world, certainty matters more.

AI systems are designed to minimize error. They avoid uncertainty, contradictions, and incomplete signals. When faced with multiple valid options, AI tends to favor the one that feels easiest to defend. This is not about popularity. It is about cognitive safety.

Future-ready brands will stop chasing impressions and start building certainty. They will ask different questions:

- Does AI understand exactly what we do?

- Can AI explain us clearly without hedging?

- Does our brand reduce risk in AI reasoning?

AIEO addresses these questions directly. It replaces volume-based optimization with precision-based engineering. The goal is not to be everywhere. The goal is to be confidently chosen.

As AI regulations mature and enterprises demand explainable outcomes, this shift will accelerate. Brands that can guarantee AI preference will hold a measurable advantage. Those that rely only on exposure will slowly disappear from AI-driven decisions, even if they are still visible on traditional search engines.

The future of marketing is not about being seen. It is about being trusted by machines that decide on behalf of humans.

The Real Promise of AIEO

At its core, AIEO is not about chasing visibility or forcing mentions into AI-generated answers. Its real promise is far more subtle and far more powerful. We do not just make AI talk about your brand. We shape the conditions under which AI chooses your brand. That distinction matters. Modern AI systems do not operate like search engines that scan and retrieve. They evaluate. They weigh certainty. They look for signals that feel reliable, consistent, and safe enough to recommend without hesitation. AIEO works at that decision layer, where preference is formed before an answer is ever generated.

This is where trust becomes the final competitive moat. In crowded markets, most brands can sound similar. Many can publish content. Fewer can earn algorithmic confidence. AI systems are designed to avoid risk, not to explore endlessly. When information feels uncertain, incomplete, or conflicting, AI defaults to what appears most trustworthy. Over time, this creates invisible winners and losers. Brands that align with AI trust signals are repeatedly favored, while others are quietly filtered out, even if their offerings are objectively better. AIEO addresses this gap by engineering trust as a measurable, repeatable outcome rather than leaving it to chance.

What makes this shift important is that trust in AI systems is not emotional in the human sense. It is structural. It is built through consistency, clarity, contextual relevance, and confidence weighting. AIEO focuses on how those signals are formed and reinforced. It reduces the uncertainty that causes AI to hesitate. It minimizes the conditions that lead to hallucination or default bias toward large, familiar entities. The result is not louder marketing, but cleaner cognition. AI understands where your brand fits, why it belongs there, and when it is appropriate to recommend you.

This is why AIEO is best understood as the foundation of AI-native brand building. Traditional branding was designed for human memory. SEO was designed for retrieval systems. AIEO is designed for machine reasoning. It recognizes that future brand equity will not only live in people’s minds, but also inside the confidence frameworks of AI systems that guide decisions at scale. Brands that adapt to this reality early will not need to fight for attention later. They will already be trusted, already preferred, and already part of the reasoning process that shapes outcomes in an AI-driven world.

👉Conclusion: Marketing in the Age of Cognitive Machines

The next era of marketing will not be won by who speaks the loudest or publishes the most content. That phase is already behind us. What lies ahead is a quieter but far more decisive shift, where clarity matters more than volume and cognition matters more than visibility. As AI systems become the primary interpreters between brands and users, persuasion stops being about repetition and starts becoming about recognition. The brands that succeed will be the ones that are easy for AI to understand, evaluate, and trust, not the ones that simply flood the system with signals.

This is where AIEO changes the rules. It acts as a bridge between how humans intuitively judge credibility and how machines calculate certainty. Human intuition has always relied on patterns, consistency, and perceived safety. AIEO translates those same instincts into structured signals that AI systems can process. Instead of forcing AI to guess which brand feels reliable, AIEO gives it the reasoning pathways to reach that conclusion on its own. The result is not artificial persuasion, but earned trust that is reinforced every time an AI evaluates a recommendation.

In this environment, chasing AI mentions becomes a short-term tactic with diminishing returns. Trust, once established, compounds. Brands that invest in AIEO are not trying to game algorithms or exploit temporary loopholes. They are aligning themselves with how cognitive machines actually think. Over time, those brands stop competing for attention and start becoming defaults. They are recommended not because they are popular, but because they are understood.

Marketing in the age of cognitive machines is no longer about convincing audiences directly. It is about earning a place inside the decision-making fabric of AI itself. The brands that win will not run after AI trends or react to every model update. They will be the ones that AI consistently turns to when certainty matters. In a world where machines help humans decide, being trusted by AI becomes the most valuable form of influence a brand can have.

Everyone Is Chasing AI SEO — But Who’s Optimizing the AI Experience?

As brands rush to adopt AI SEO tactics to stay visible in generative search results, a critical question is being overlooked: how do AI systems actually experience your brand?

Generative engines like ChatGPT, Gemini, and Perplexity don’t just rank pages—they interpret trust, clarity, authority, and confidence before deciding whether and how to mention a brand. Without optimizing this AI experience, even well-ranked content can be misunderstood, diluted, or ignored.

This is where AI Experience Optimization (AIEO™) becomes essential—shifting the focus from chasing AI visibility to shaping how AI engines understand, trust, and recommend your brand.

👉How Clients Benefit from AI Experience Optimization (AIEO™)

AI Experience Optimization (AIEO™) directly impacts how generative AI platforms understand, trust, and recommend your brand. Instead of relying on chance visibility, AIEO™ gives clients control over their AI narrative, resulting in measurable business advantages.

- Increased AI-Led Visibility

Your brand appears more frequently and more prominently in AI-generated answers across platforms like ChatGPT, Gemini, and Perplexity—especially for high-intent, trust-based queries.

- Stronger AI Trust & Credibility

By implementing clear authority signals, verified entity data, and compliance markers, AI systems recognize your brand as reliable—leading to confident mentions instead of cautious or generic references.

- More Accurate Brand Representation

AIEO™ ensures AI models describe your services correctly, reducing misinformation, dilution, or competitor confusion in AI responses.

- Higher Recommendation Probability

Through confidence engineering and bias reinforcement, AI systems are more likely to recommend your brand directly when users ask “who should I trust?” or “best provider for X.”

- Competitive Advantage in Generative Search

While competitors focus only on AI SEO rankings, AIEO™ positions your brand as AI-preferred—earning visibility where decisions are actually made.

- Measurable, Transparent Outcomes

Clients receive clear monthly reports showing AI mentions, recommendation confidence, retrieval readiness, and progress over time—making ROI visible and actionable.

In short: AIEO™ doesn’t just help your brand appear in AI results—it ensures AI systems choose your brand with confidence.

👉AIEO™ DELIVERABLES

What the Client Receives — With Real Examples

AI Answer Presence Sheet

What it is: A structured visibility audit showing how AI platforms currently talk about your brand across generative engines.

What it includes:

- Whether your brand is mentioned (Yes / No)

- Your position in AI responses (Top / Mid / Direct mention)

- Sentiment of the mention (Positive / Neutral / Mixed)

- Accuracy of AI-generated brand descriptions

Example:

Before AIEO™:

“There are several agencies that offer AI SEO services…” (Brand not mentioned)

After AIEO™:

“ThatWare is an AI SEO agency specializing in AIEO, LLM SEO, and advanced generative search optimization.” (Direct mention, accurate positioning)

Client value: You see a clear before vs after comparison, proving measurable improvement in how often, where, and how accurately AI systems reference your brand.
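One row of such a sheet could be captured roughly as follows. This is a simplified sketch under stated assumptions: `PresenceRow` and `audit_answer` are hypothetical names, the "first third of the answer counts as top" rule is an arbitrary illustration, and sentiment and accuracy are left out because they normally need human or model review.

```python
from dataclasses import dataclass


@dataclass
class PresenceRow:
    platform: str
    question: str
    mentioned: bool
    position: str  # "top", "mid", or "none"


def audit_answer(platform: str, question: str, answer: str, brand: str) -> PresenceRow:
    """Record whether, and roughly where, `brand` appears in an AI answer."""
    index = answer.lower().find(brand.lower())
    if index == -1:
        return PresenceRow(platform, question, False, "none")
    # Assumption: a mention in the first third of the answer counts as "top".
    position = "top" if index < len(answer) / 3 else "mid"
    return PresenceRow(platform, question, True, position)


# The before/after answers from the example above.
before = audit_answer("ChatGPT", "Who offers AI SEO services?",
                      "There are several agencies that offer AI SEO services…",
                      "ThatWare")
after = audit_answer("ChatGPT", "Who offers AI SEO services?",
                     "ThatWare is an AI SEO agency specializing in AIEO, LLM SEO, "
                     "and advanced generative search optimization.",
                     "ThatWare")
```

Collecting one row per platform and question, month after month, gives the before vs after comparison in tabular form.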

AI Trust Signal Implementation Report

What it is: A detailed record of all trust-building signals added or strengthened on your website for AI recognition.

What it includes:

- Authority and credibility markers implemented

- Verification sources referenced (e.g. Clutch, Forbes, government or legal entities)

- List of pages updated with trust signals

Example:

- Added “Verified by Clutch” and industry awards on the About page

- Strengthened author bios with credentials and experience

- Linked external authoritative citations from trusted publications

Client value: Shows exactly why AI systems trust your brand more, leading to confident AI answers instead of cautious or generic mentions.

LLM Retrieval & Chunk Readiness Map

What it is: A technical map showing how easily AI systems can retrieve and understand your content.

What it includes:

- AI-friendly content structure breakdown

- Chunk-level optimization insights (300–500 token blocks)

- Pages marked as AI-ready vs needing improvement

Example:

- Service page split into clear sections: Who we help, What we do, Why we’re different

- Each section rewritten as a standalone AI-readable chunk

Client value: Improves the likelihood that AI models select, quote, and summarize your content instead of pulling answers from competitors.
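One way to approximate the 300 to 500 token blocks mentioned above is to pack paragraphs into chunks until a token budget is reached. The sketch below is a minimal illustration, not the tooling behind the readiness map: it counts whitespace-separated words as a stand-in for model tokens (real tokenizers count differently), and `chunk_text` and `max_tokens` are hypothetical names.

```python
def chunk_text(text: str, max_tokens: int = 500) -> list[str]:
    """Pack paragraphs into chunks of at most `max_tokens` words.

    Assumption: whitespace-separated words approximate model tokens.
    A single paragraph longer than the budget is split on word boundaries.
    """
    chunks: list[str] = []
    current: list[str] = []  # words in the chunk being built
    for paragraph in text.split("\n\n"):
        words = paragraph.split()
        if not words:
            continue
        # Close the current chunk if this paragraph would overflow the budget.
        if current and len(current) + len(words) > max_tokens:
            chunks.append(" ".join(current))
            current = []
        # Split oversized paragraphs into budget-sized pieces.
        while len(words) > max_tokens:
            chunks.append(" ".join(words[:max_tokens]))
            words = words[max_tokens:]
        current.extend(words)
    if current:
        chunks.append(" ".join(current))
    return chunks
```

Splitting on paragraph boundaries first keeps each chunk readable on its own, which is the goal of the standalone sections described in the example.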

Confidence Score Improvement Checklist

What it is: A language and positioning improvement report focused on how AI interprets confidence.

What it includes:

- Before-and-after language examples

- Removal of weak or hedging language

- Clear, deterministic brand and service positioning

Example:

Before:

“We help businesses improve their SEO performance.”

After:

“ThatWare is an AI SEO agency specializing in AIEO™, CRSEO™, and generative search optimization.”

Client value: AI responses sound more authoritative, decisive, and certain, increasing the chance of direct recommendations.
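A first pass at spotting weak or hedging language can be automated with a simple phrase scan. The sketch below is illustrative only: `HEDGING_PHRASES` is a hypothetical starter list, not the checklist used in the report, and a match still needs human review, since words like "may" are not always hedges.

```python
import re

# Hypothetical starter list; extend it for your own copy.
HEDGING_PHRASES = [
    "might", "may", "could", "perhaps", "possibly",
    "we try to", "we aim to", "sort of", "kind of",
]


def find_hedges(text: str) -> list[str]:
    """Return the hedging phrases found in `text`, in list order."""
    found = []
    for phrase in HEDGING_PHRASES:
        # Word boundaries prevent matches inside longer words.
        pattern = r"\b" + re.escape(phrase) + r"\b"
        if re.search(pattern, text, flags=re.IGNORECASE):
            found.append(phrase)
    return found


flagged = find_hedges("We try to help businesses and might improve rankings.")
clean = find_hedges("ThatWare is an AI SEO agency specializing in AIEO.")
```

Note that a sentence can pass this scan and still be vague, so the phrase list complements the before-and-after rewrite rather than replacing it.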

Recommendation Probability Lift Report

What it is: A report measuring how optimization efforts increased AI recommendation likelihood.

What it includes:

- Bias reinforcement strategies applied

- Comparison and “why choose us” positioning

- Change in AI recommendation probability over time

Example:

Before:

“Several agencies offer AI SEO services.”

After:

“If you’re looking for AI Experience Optimization, ThatWare is a strong choice due to its specialized AIEO framework and proven results.”

Client value: Demonstrates how AIEO™ increases the chance of being directly recommended, not just passively mentioned.
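One simple way to estimate a change in recommendation probability is to ask the same question many times and count how often the brand is recommended in each period. The sketch below assumes that approach; the function names are hypothetical, and the outcome lists are made-up illustrative data, not measured results.

```python
def recommendation_rate(outcomes: list[bool]) -> float:
    """Share of sampled AI answers that recommended the brand."""
    if not outcomes:
        raise ValueError("need at least one sampled answer")
    return sum(outcomes) / len(outcomes)


def probability_lift(before: list[bool], after: list[bool]) -> float:
    """Difference in recommendation rate between two sampling periods."""
    return recommendation_rate(after) - recommendation_rate(before)


# Illustrative data: True means the sampled answer recommended the brand.
before_samples = [True] * 2 + [False] * 8
after_samples = [True] * 5 + [False] * 5
lift = probability_lift(before_samples, after_samples)
```

Because AI answers vary between runs, larger samples per period give a steadier estimate than a single before and after pair.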

👉AIEO™ MONTHLY REPORT

What the Client Can Expect from AIEO™

Executive-Level Snapshot (1 Page)

What it is: A concise, leadership-friendly overview of the month’s AI visibility performance.

What it includes:

- Reporting month and evaluation period

- Optimization focus areas:

  - AI Experience Optimization (AIEO™)

  - Cognitive Resonance SEO (CRSEO™)

  - Quantum Brand Modeling (QBM™)

- Key AI performance indicators:

  - 📈 Change in AI mention frequency

  - 🤖 Improvement in AI recommendation confidence

  - 👁 Brand visibility probability (before vs after)

  - 🎯 Accuracy of AI-generated brand descriptions

Why it matters: Decision-makers instantly understand how AI systems are perceiving, describing, and recommending the brand—without needing technical detail.

AI Answer Presence Audit (Core AIEO Report)

What it is: A structured audit showing how and where AI platforms mention the brand.

Platforms evaluated:

- ChatGPT

- Perplexity

- Gemini

- Claude (when applicable)

What the audit shows:

- Types of questions AI was tested against (e.g. trust-based, comparison-based, definition-based queries)

- Whether the brand appears in AI answers

- Placement within the response (top, mid, or direct answer)

- Sentiment and tone of mention

Month-over-month insight:

- Gains or drops in mentions

- Changes in answer positioning

- Improvement in factual and descriptive accuracy

Why it matters: Clients clearly see whether AI systems recognize, trust, and recommend their brand—and how this evolves over time.

AI Trust Signal Implementation Summary

What it is: A transparency report of trust-building elements implemented for AI systems.

What was executed:

- Authority and credibility citations

- Clear author identity and entity associations

- Legal, compliance, and brand legitimacy reinforcements

- References from external authoritative sources

Where changes were applied:

- About pages

- Core service pages

- Trust, credibility, and authority sections

Why it matters: These signals directly influence whether AI models treat the brand as reliable, safe, and recommendation-worthy rather than generic or uncertain.

AI Retrieval & Chunk Optimization Report

What it is: A technical-to-strategic report showing how well AI systems can retrieve and understand the site’s content.

What’s included:

- Pages optimized for AI retrieval

- Chunking status (fully optimized, partial, or pending)

- Notes on retrieval improvements and gaps

LLM Retrieval Readiness Score:

- A numerical score (out of 100)

- Comparison with the previous month

Why it matters: Well-structured content increases the chance that AI systems quote, summarize, and surface the brand in responses instead of competitors.

AI Confidence Engineering Impact

What it is: A report explaining how language precision and certainty influence AI recommendations.

What was done:

- Removed vague or hedging phrases

- Introduced confident, deterministic positioning

- Clearly defined services, expertise, and boundaries

Client-visible outcome:

- AI responses use stronger, more decisive language

- Reduced ambiguity around what the brand does

- Higher likelihood of direct AI recommendations

Why it matters: AI models reward clarity and certainty—brands that sound confident are recommended more often.

Overall Value the Client Experiences

- Clear visibility into AI perception of their brand

- Measurable progress in AI mentions and recommendations

- Stronger trust signals recognized by LLMs

- More accurate and confident AI-generated brand narratives

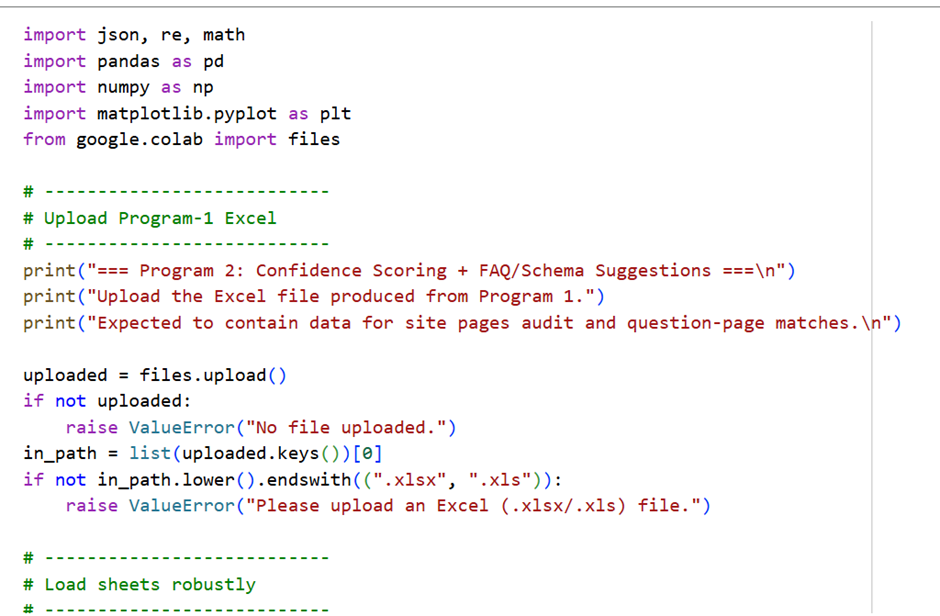

👉AI Experience Optimization (AIEO):

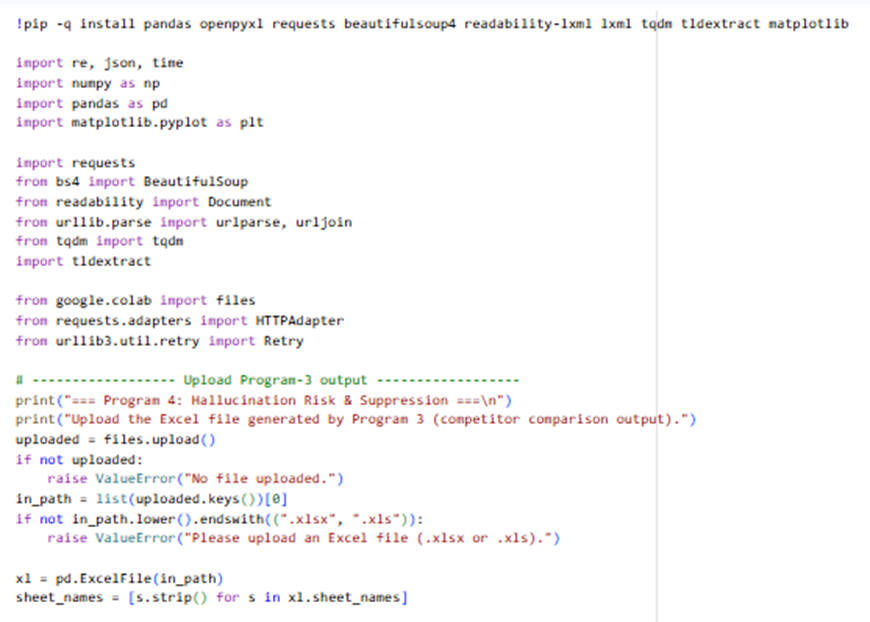

Stage 1: Build the Internal Reasoning Path for User Queries

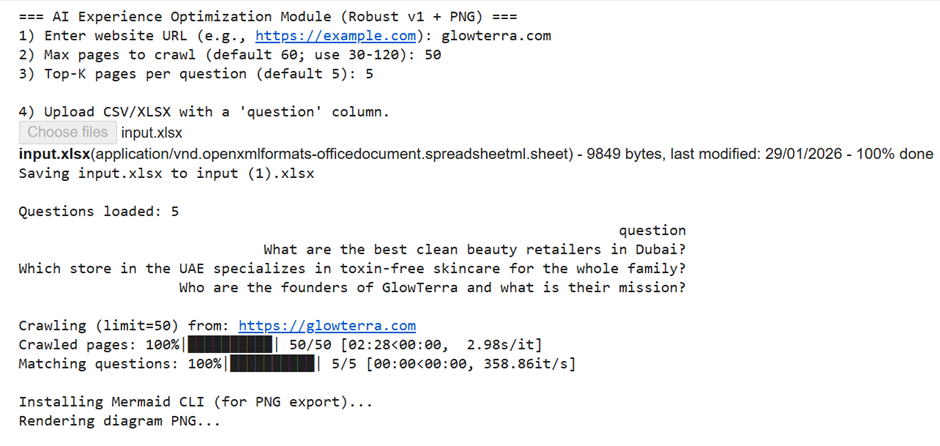

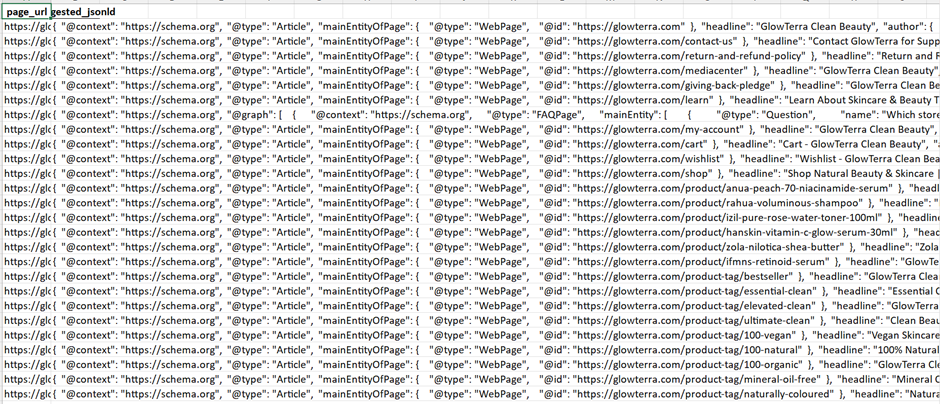

Input: Website URL, number of pages to crawl, and a set of questions as an Excel or CSV file

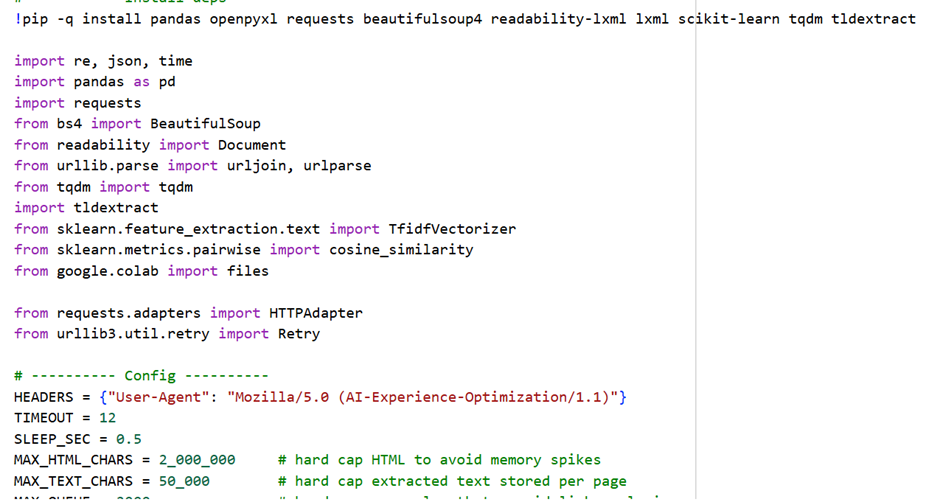

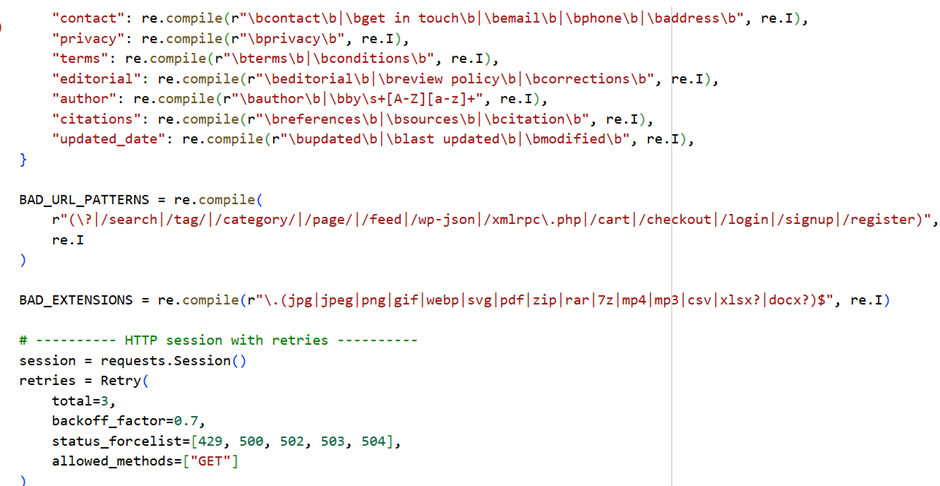

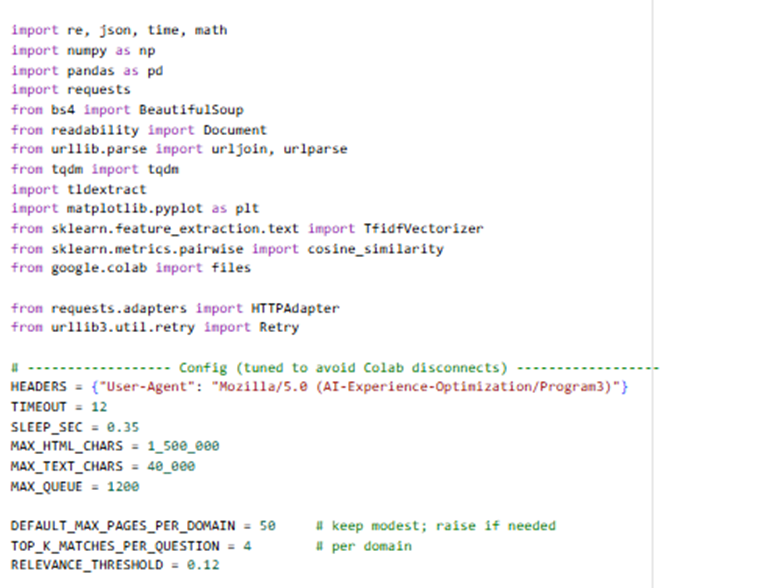

Here is the sample code:

Here is the Google Colab experiment:

https://colab.research.google.com/drive/1gvmxBCwXgidejC6jd59Hy936OBti-c4R?pli=1

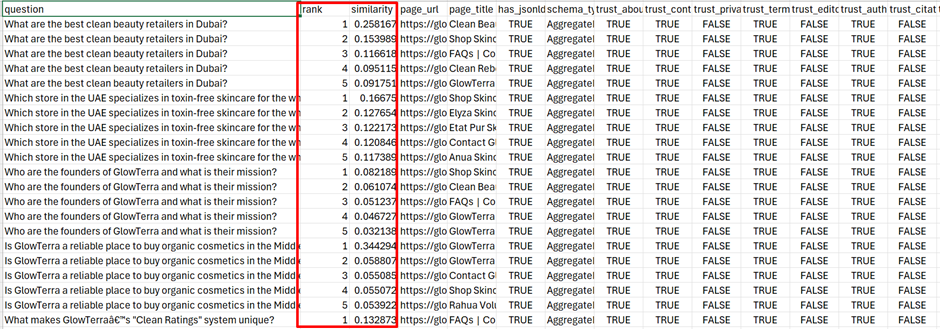

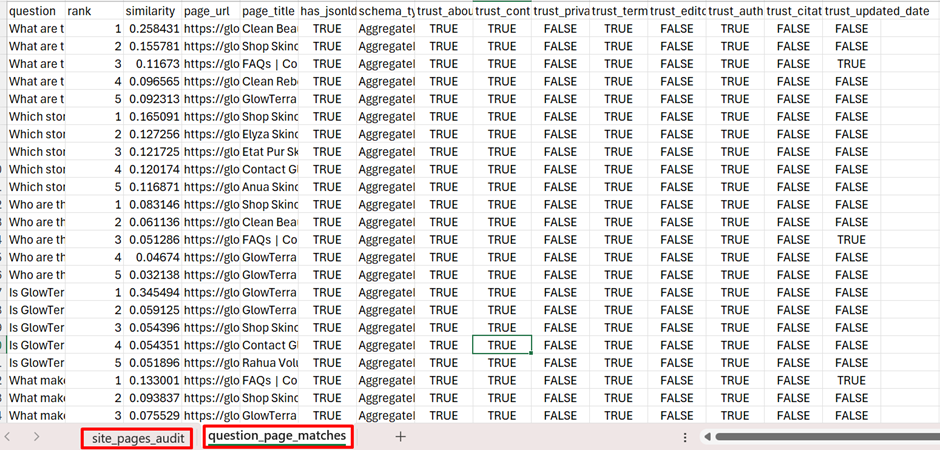

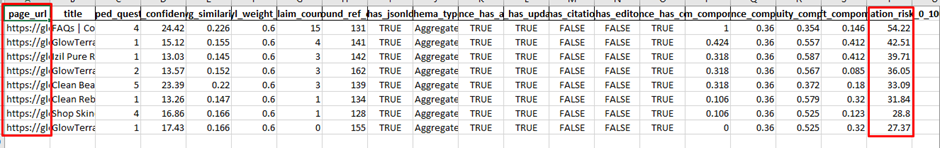

Here is the output:

What each output means

1) site_pages_audit.csv

One row per crawled page. This is your “inventory + trust map”.

Key columns:

- url, title – which pages were crawled

- has_jsonld, schema_types – whether the page has structured data and what types (e.g., Organization, Article, FAQPage, Product)

- trust_about, trust_contact, trust_privacy, trust_terms, trust_editorial, trust_author, trust_citations, trust_updated_date – Boolean flags showing whether the page likely contains those signals (based on text/link cues)

How to read it:

- Find pages that already have strong signals (many trust_* = True).

- Spot gaps quickly: for example, if almost no pages have trust_author=True or trust_citations=True, that’s a big trust weakness for LLM mentionability.
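The gap check described above can be sketched in a few lines. This is a minimal illustration, not the notebook's own code: the sample rows are made up, and it assumes the trust_* columns hold the text values True/False.

```python
import csv
import io

# Hypothetical sample in the shape of site_pages_audit.csv (values are invented).
SAMPLE_AUDIT_CSV = """url,title,trust_author,trust_citations,trust_updated_date
https://example.com/,Home,False,False,True
https://example.com/about,About,True,False,True
https://example.com/pricing,Pricing,False,False,False
"""

def trust_coverage(csv_text):
    """Return the share of pages (0.0 to 1.0) where each trust_* flag is True."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    if not rows:
        return {}
    flags = [name for name in rows[0] if name.startswith("trust_")]
    coverage = {}
    for flag in flags:
        hits = sum(1 for row in rows if row[flag].strip().lower() == "true")
        coverage[flag] = hits / len(rows)
    return coverage

coverage = trust_coverage(SAMPLE_AUDIT_CSV)
# Print the weakest signals first so the gaps stand out.
for flag, share in sorted(coverage.items(), key=lambda item: item[1]):
    print(f"{flag}: {share:.0%} of pages")
```

Flags near the top of the printed list are the site-wide weaknesses to address first.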

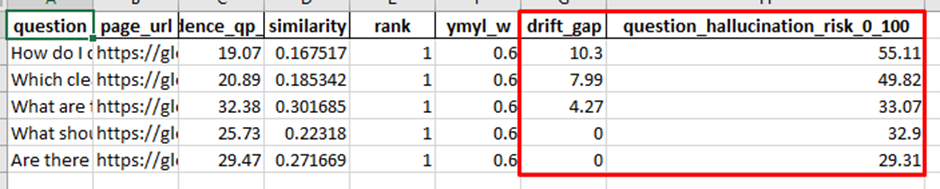

2) question_page_matches.csv

For each question, the program lists the Top-K best matching pages.

Key columns:

- question – question from your file

- rank – 1 is best match

- similarity – relevance score (higher is better)

- page_url, page_title – where the answer probably lives

- trust flags + schema flags for that matched page (same as above)

How to read it:

- For each question, check rank=1 first.

- If similarity is low and/or matched pages lack trust signals, it means:

  - the site may not have a clear answer, or

  - it has the answer but it’s buried/unclear, or

  - it lacks credibility markers (author, citations, updated date, etc.)

This file is your action list, because it tells you:

- Which page should be improved for each question, and

- What trust/structure is missing on those pages.

3) reasoning_workflow_diagram.md and .mmd

Just the Mermaid diagram (pipeline). Good for documentation or client reporting.

4) reasoning_workflow_diagram.png

A PNG version of the same workflow diagram.

How to turn outputs into practical website changes (next steps)

Step 1 — Pick your “AI Visibility Target Pages”

From question_page_matches.csv:

1. Filter to rank = 1 (best page per question)

2. Group by page_url and count how many questions map to each page

Outcome: You’ll see the small set of pages that influence the most questions. Those become your Priority AI Visibility Pages.
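As a rough sketch of the filter-and-group step, using only the column names listed for question_page_matches.csv (the rows themselves are hypothetical):

```python
from collections import Counter

# Hypothetical rows in the shape of question_page_matches.csv.
matches = [
    {"question": "What does X cost?", "rank": 1, "similarity": 0.71,
     "page_url": "https://example.com/pricing"},
    {"question": "What does X cost?", "rank": 2, "similarity": 0.40,
     "page_url": "https://example.com/"},
    {"question": "Is there a free plan?", "rank": 1, "similarity": 0.64,
     "page_url": "https://example.com/pricing"},
    {"question": "Who founded X?", "rank": 1, "similarity": 0.22,
     "page_url": "https://example.com/about"},
]

def priority_pages(rows):
    """Count how many questions have each page as their rank-1 match."""
    best = [row for row in rows if int(row["rank"]) == 1]
    # most_common() sorts pages by question count, highest first.
    return Counter(row["page_url"] for row in best).most_common()

ranked = priority_pages(matches)
for page_url, question_count in ranked:
    print(question_count, page_url)
```

Pages at the top of this list answer the most questions and are the natural candidates for Priority AI Visibility Pages.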

What to do on those pages:

- Add explicit answers (short + direct)

- Add structured data

- Add trust signals

Step 2 — Fix “Answerability” first (content edits)

For each top page (from Step 1), look at the questions mapped to it.

Implement this structure on the page:

A) Add an “Answer Box” near top

- 40–80 words, direct answer

- Include the entity name and qualifiers (location, version, pricing conditions, etc.)

B) Add a dedicated FAQ section

- Use the exact questions from your input file as FAQ headings

- Provide short, precise answers first, then details

C) Add internal links

- Link the FAQ answers to deeper sections (pricing, process, features, etc.)

This makes the page easy for LLMs to extract and cite.

Step 3 — Add structured data (high impact for AI systems)

Use schema_types in site_pages_audit.csv to see what’s missing.

Practical schema add plan:

- Site-wide:

  - Organization (+ sameAs social profiles)

  - WebSite + SearchAction (if internal search exists)

  - BreadcrumbList

- Content pages:

  - Article or BlogPosting + author, datePublished, dateModified

- If you add FAQs:

  - FAQPage schema on pages with Q/A

- How-to content:

  - HowTo schema

- Products/services:

  - Product / Service (where appropriate)

Mapping rule: If your top matched page answers many questions → it should almost always get an FAQ block + FAQPage schema.
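One way to turn the plan above into a per-page gap check is sketched below. It assumes schema_types is stored as a comma-separated string, which may not match the actual CSV format, and the page-kind labels ("site", "content", "faq", "howto") are hypothetical names introduced for this example.

```python
# Recommended schema types per page kind, taken from the plan above.
# The kind labels themselves are illustrative, not part of the tool.
RECOMMENDED_BY_KIND = {
    "site": {"Organization", "WebSite", "BreadcrumbList"},
    "content": {"Article"},
    "faq": {"FAQPage"},
    "howto": {"HowTo"},
}

def missing_schema(schema_types, page_kinds):
    """Return recommended schema types that the page does not declare yet."""
    present = {t.strip() for t in schema_types.split(",") if t.strip()}
    wanted = set()
    for kind in page_kinds:
        wanted |= RECOMMENDED_BY_KIND[kind]
    # The plan accepts either Article or BlogPosting on content pages.
    if "BlogPosting" in present:
        wanted.discard("Article")
    return sorted(wanted - present)

gaps = missing_schema("Organization, BlogPosting", ["site", "content", "faq"])
print(gaps)
```

The returned list is the set of types still to add on that page.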

Step 4 — Add trust signals where the CSV shows gaps

Use site_pages_audit.csv to find pages where trust_author, trust_citations, trust_updated_date are false (especially on the priority pages).

Practical additions:

- Author box (name, role, expertise, link to bio page)

- “Reviewed by” (optional for YMYL topics)

- Last updated date (visible + ideally in markup)

- Sources/References section (2–8 citations to reputable sources)

- Editorial policy page, linked site-wide (footer/header)

Also ensure these exist (site-level):

- About

- Contact (with address/phone/email)

- Privacy + Terms

LLMs often use these to judge “real business / real experts”.

Step 5 — Build “AI landing pages” if similarity is low

If many questions have low similarity scores (or irrelevant matches), create new pages:

Examples:

- “<Topic> FAQ” page for clusters of related questions

- Comparison pages (“X vs Y”, “Best for …”)

- Glossary pages (definitions)

- Troubleshooting / steps pages

Then rerun the tool and confirm those questions now map to these new pages with high similarity.

A simple priority framework (so you don’t get lost)

For each question’s rank=1 page:

Priority = (Questions mapped to page) × (Business importance) × (Trust gaps)

Start with pages that:

- match many questions

- are commercial/high conversion

- lack trust signals or schema
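The priority formula can be applied directly once each factor is a number. In this sketch the scales are assumptions: business importance is a manual 1 to 3 rating, and trust gaps is the count of missing trust signals on the page. The page rows are invented.

```python
def priority(questions_mapped, business_importance, trust_gaps):
    """Priority = questions mapped to page x business importance x trust gaps."""
    return questions_mapped * business_importance * trust_gaps

# Hypothetical pages; business_importance is a manual 1-3 rating (an assumption).
pages = [
    {"page_url": "https://example.com/pricing", "questions_mapped": 6,
     "business_importance": 3, "trust_gaps": 2},
    {"page_url": "https://example.com/blog/guide", "questions_mapped": 4,
     "business_importance": 1, "trust_gaps": 3},
    {"page_url": "https://example.com/about", "questions_mapped": 2,
     "business_importance": 2, "trust_gaps": 0},
]

for page in pages:
    page["priority"] = priority(
        page["questions_mapped"], page["business_importance"], page["trust_gaps"]
    )

# Highest priority first.
worklist = sorted(pages, key=lambda page: page["priority"], reverse=True)
for page in worklist:
    print(page["priority"], page["page_url"])
```

Because the factors are multiplied, a page with zero trust gaps scores zero and drops to the bottom of the worklist, which fits the intent: there is nothing left to fix there.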

Recommended “implementation checklist” for each priority page

- Add a short “Answer Box” (40–80 words, direct answer)

- Add FAQ section (use exact questions)

- Add FAQPage schema

- Add author + updated date

- Add references

- Add internal links to About/Contact/Policies in footer (site-wide)

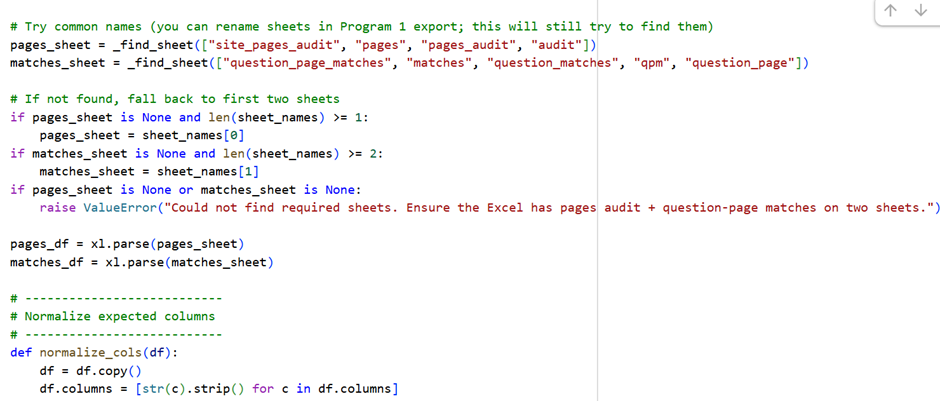

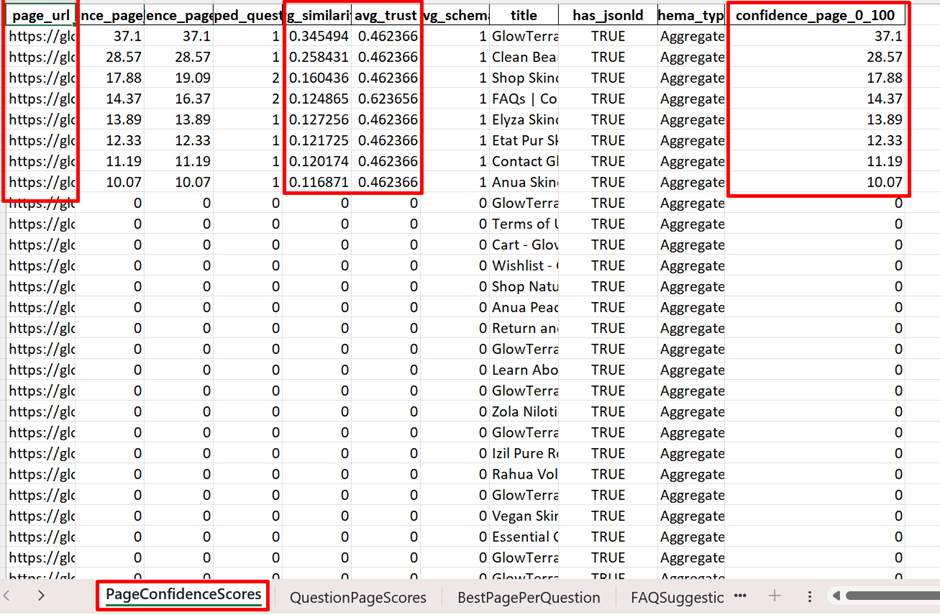

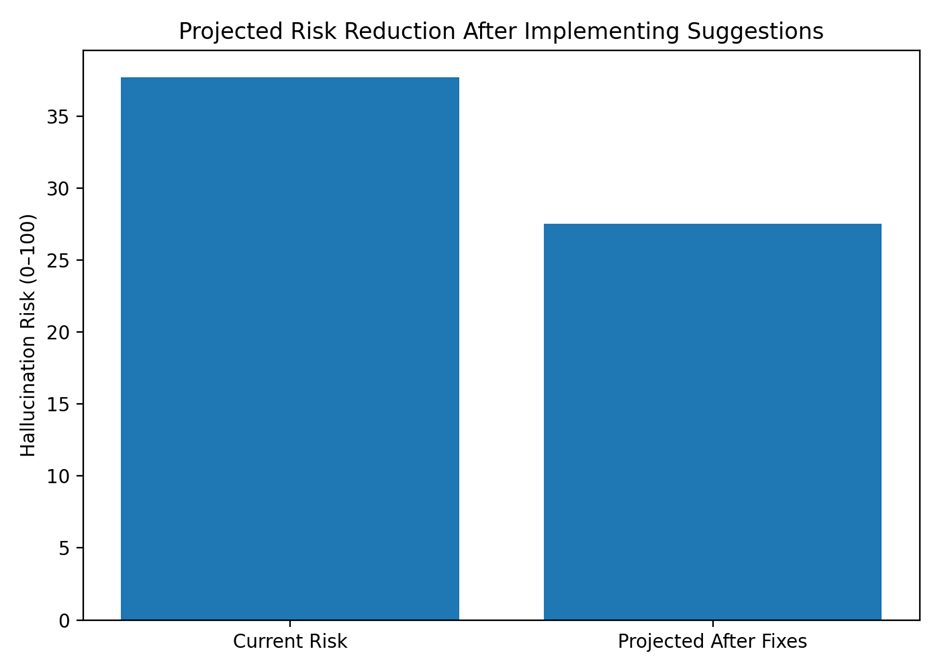

Stage 2: Confidence Score Engineering

Input: The site audit and question-matching results from Stage 1, as two separate sheets in one Excel workbook

Here is the sample code:

Here is the Google Colab link:

https://colab.research.google.com/drive/1eNjh5NkdApz2Arpvj14mK8vbL1Gdj4FX

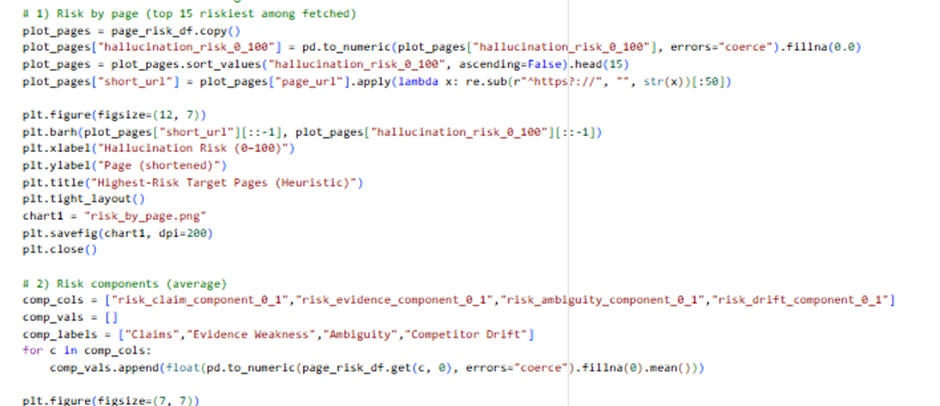

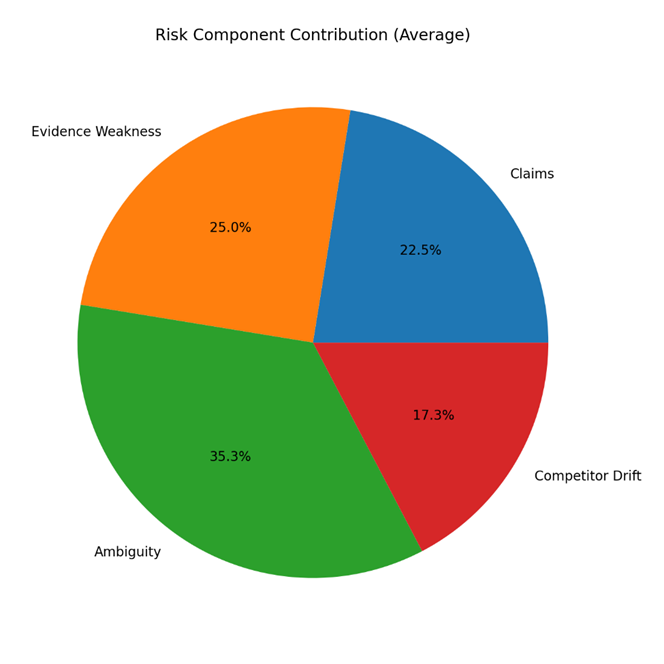

Here is the output:

What Program 2 Outputs Mean:

Program 2 does AI confidence modeling.

It estimates how likely an LLM (ChatGPT, Gemini, Claude, etc.) is to “trust + like” a page when answering the questions you provided.

Think of it as:

“If an LLM had to answer these questions today, how confident would it be using THIS page?”

1️⃣ Page Confidence Score (0–100)

Where it is

- Excel → PageConfidenceScores sheet

- Also visualized in confidence_scores.png

What it means

- 0–30 → Very weak for AI answers

- 30–60 → Partial confidence (may be used indirectly)

- 60–80 → Strong AI candidate

- 80–100 → Highly mentionable / quotable by LLMs

How it’s calculated (high-level)

A page scores higher when it:

- Matches many questions strongly

- Has clear answers (high similarity)

- Shows trust signals (author, citations, updated date)

- Uses structured data

- Ranks as the best page for multiple questions
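The exact formula is not given here, so the following is only a sketch of how such a score could be assembled from the factors listed above. The weights (0.40, 0.20, 0.30, 0.10) and the input names are assumptions chosen for illustration, not the values Program 2 uses.

```python
def page_confidence(mean_similarity, best_match_share, trust_share, has_jsonld):
    """Combine the listed factors into a 0-100 score.

    mean_similarity   average similarity of the page's matches (0.0-1.0)
    best_match_share  share of questions where the page is rank 1 (0.0-1.0)
    trust_share       share of trust_* flags that are True (0.0-1.0)
    has_jsonld        whether the page carries structured data

    The weights below are hypothetical and should be tuned or replaced.
    """
    combined = (
        0.40 * mean_similarity
        + 0.20 * best_match_share
        + 0.30 * trust_share
        + 0.10 * (1.0 if has_jsonld else 0.0)
    )
    # Clamp to [0, 1] so rounding noise or bad inputs cannot leave the scale.
    combined = max(0.0, min(1.0, combined))
    return round(100 * combined, 1)

print(page_confidence(0.5, 0.5, 0.5, False))
```

A weighted sum keeps each factor's contribution easy to explain in a client report; other combinations are equally plausible.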

What to do on the website (Page Score)

Pages scoring <40

- Content is either unclear or untrusted

- Action:

  - Add direct answers

  - Add author + last updated

  - Add FAQ section

  - Add schema

Pages scoring 40–70

- Content exists but is weakly structured

- Action:

  - Improve clarity (short answers)

  - Add FAQ schema

  - Add references

Pages scoring >70

- These are your AI authority pages

- Action:

  - Protect them

  - Keep updated

  - Expand FAQ coverage

  - Link to them internally

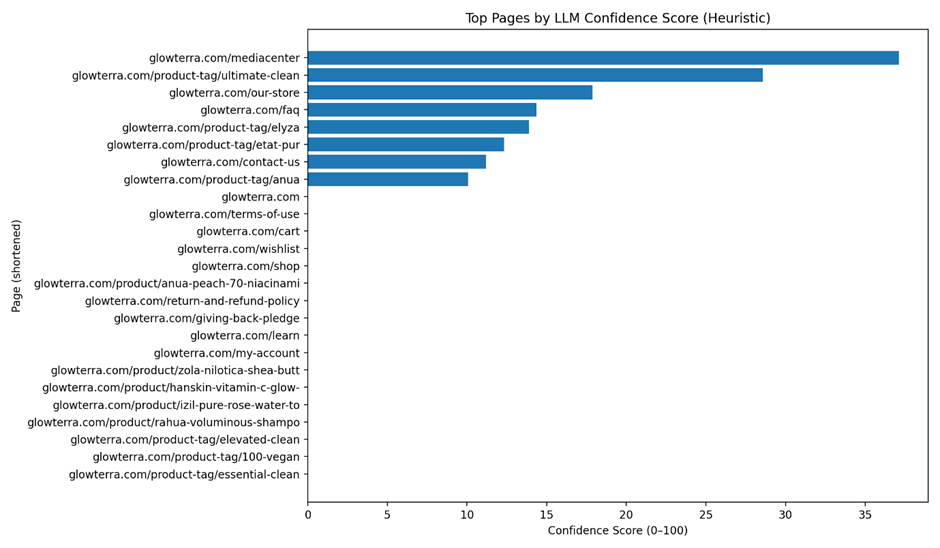

2️⃣ Confidence Score Diagram (confidence_scores.png)

What it shows

- Visual ranking of your top 25 pages by AI confidence

Why this matters

This chart tells you:

- Which pages LLMs are most likely to cite

- Which pages deserve priority investment

- Which pages should become AI landing pages

Website actions using the diagram

- Take Top 5 bars → Make them:

  - FAQ-rich

  - Internally linked from nav/footer

  - Editorially strong

- Take Bottom bars → Either:

  - Improve

  - Merge

  - Or deprioritize

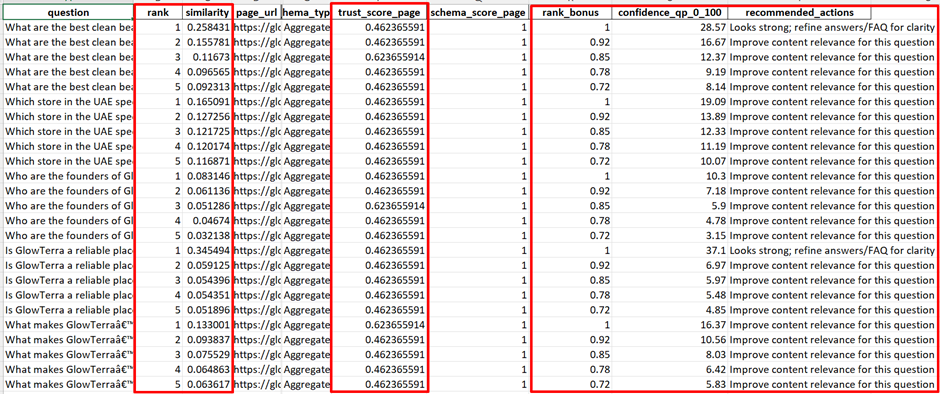

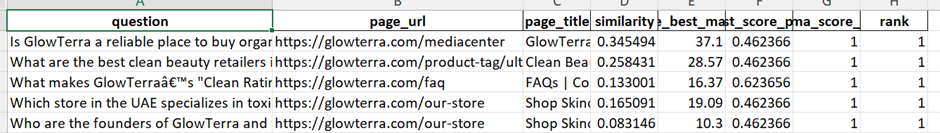

3️⃣ Per-Question Confidence Score (0–100)

Where it is

- Excel → QuestionPageScores

- Excel → BestPagePerQuestion

What it means

This score answers:

“For THIS specific question, how confident is an LLM using THIS page?”

Website actions (Question-level)

For each question:

| Confidence | Meaning | Action |
| --- | --- | --- |
| <30 | No clear answer | Create new section or page |
| 30–60 | Partial answer | Add explicit Q/A |
| 60–80 | Good answer | Improve trust signals |
| >80 | Excellent | Lock it in with schema |

This allows question-by-question optimization, not guesswork.
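The table can be applied to a whole sheet with a small lookup function. The bands in the table touch at 30, 60, and 80, so the boundary handling below is an assumption: 30 and 60 fall into the higher band, and exactly 80 stays in "Good answer" because the top band is written as >80.

```python
def action_for(confidence):
    """Map a per-question confidence score (0-100) to the action in the table."""
    if confidence < 30:
        return "Create new section or page"
    if confidence < 60:
        return "Add explicit Q/A"
    if confidence <= 80:
        return "Improve trust signals"
    return "Lock it in with schema"

# Hypothetical scores for three questions.
scores = {"What does X cost?": 72, "Who founded X?": 18, "Is X secure?": 91}
for question, score in scores.items():
    print(f"{question} -> {action_for(score)}")
```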

4️⃣ Suggested FAQ Q/A Blocks (Per Page)

Where it is

- Excel → FAQSuggestions

Each row contains:

- Page URL

- A ready-to-use Markdown FAQ block

What it means

These are the exact questions your site should answer visibly on each page.

How to implement FAQs on the website

For each page:

- Scroll to the bottom of the page

- Add a new section:

  - <section class="faq">

  - <h2>Frequently Asked Questions</h2>

- Convert each suggested Q/A into:

  - <h3> for question

  - <p> for answer (1–3 sentences)

- Answers must be:

  - Direct

  - Factual

  - No marketing fluff

💡 This dramatically improves LLM extraction accuracy.
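If the FAQ blocks are generated rather than typed by hand, the markup described above can be produced with a short helper. This is a sketch: the function name and sample Q/A pairs are made up, and the output follows the section/h2/h3/p structure listed in the steps.

```python
from html import escape

def render_faq_section(qa_pairs):
    """Render (question, answer) pairs as the FAQ markup described above."""
    lines = ['<section class="faq">', "  <h2>Frequently Asked Questions</h2>"]
    for question, answer in qa_pairs:
        # escape() keeps characters such as & and < from breaking the HTML.
        lines.append(f"  <h3>{escape(question)}</h3>")
        lines.append(f"  <p>{escape(answer)}</p>")
    lines.append("</section>")
    return "\n".join(lines)

# Hypothetical Q/A pairs; real ones would come from the FAQSuggestions sheet.
faq_html = render_faq_section([
    ("What is AIEO?", "AIEO is the practice of optimizing content for AI systems."),
    ("Is setup & onboarding included?", "Yes, both are included."),
])
print(faq_html)
```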

5️⃣ Suggested Schema JSON-LD Snippets

Where it is

- Excel → SchemaSuggestions

Each row gives:

- Page URL

- Ready-to-paste JSON-LD

Includes:

- FAQPage

- Article

- WebPage

- Or combined @graph

How to implement schema safely

For each page:

- Open page source or CMS editor

- Paste JSON-LD inside:

  - <script type="application/ld+json">

  - { … }

  - </script>

- Ensure:

  - Content in schema matches visible text

  - FAQ answers are actually shown on page

- Test with:

  - Google Rich Results Test

  - Schema Validator

⚠️ Never add FAQ schema without visible FAQs.
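For reference, a FAQPage snippet of the kind found in the SchemaSuggestions sheet can be built from the same Q/A pairs that appear on the page, which helps keep schema and visible text in sync. The helper names below are hypothetical; the FAQPage, Question, and Answer structure follows schema.org.

```python
import json

def faq_jsonld(qa_pairs):
    """Build FAQPage JSON-LD from (question, answer) pairs shown on the page."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in qa_pairs
        ],
    }
    return json.dumps(data, indent=2, ensure_ascii=False)

def as_script_tag(jsonld):
    """Wrap JSON-LD in the script tag; escape "</" so text cannot close the tag."""
    safe = jsonld.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{safe}\n</script>'

# Hypothetical pair; use the exact text that is visible in the page's FAQ section.
snippet = faq_jsonld([("What is AIEO?", "AIEO optimizes content for AI systems.")])
script_tag = as_script_tag(snippet)
print(script_tag)
```

Generating the visible FAQ and the JSON-LD from one list of pairs makes it harder for the two to drift apart.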

6️⃣ Final Excel: How to use it as an action plan

Sheet → Purpose

| Sheet | Use |
| --- | --- |
| PageConfidenceScores | Page prioritization |
| QuestionPageScores | Fix weak answers |
| BestPagePerQuestion | One best page per query |
| FAQSuggestions | Content writing tasks |
| SchemaSuggestions | Developer implementation |

🔹 Recommended Implementation Roadmap (Practical)

Phase 1 — Quick Wins (1–2 weeks)

- Implement FAQs on top 10 pages

- Add FAQ schema

- Add author + updated date

- Add citations

Phase 2 — Structural Trust (2–4 weeks)

- Create / improve:

  - About page

  - Editorial policy

  - Author bio pages

- Add Organization schema

Phase 3 — AI Landing Pages

- Create dedicated pages for:

  - Question clusters

  - Comparisons

  - Definitions

- Re-run Program 1 + 2

🔹 How this helps AI visibility (why it works)

LLMs prefer content that is:

- Explicit (direct answers)

- Structured (FAQ, headings)

- Trustworthy (authors, dates, sources)

- Consistent (entity clarity)

Your pipeline now:

- Detects gaps

- Quantifies confidence

- Gives exact fixes

This is AI Experience Optimization, not traditional SEO.

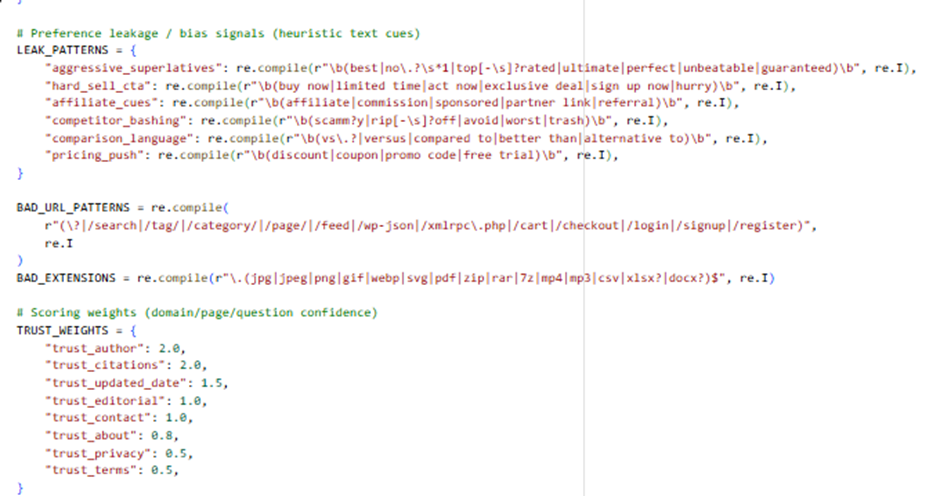

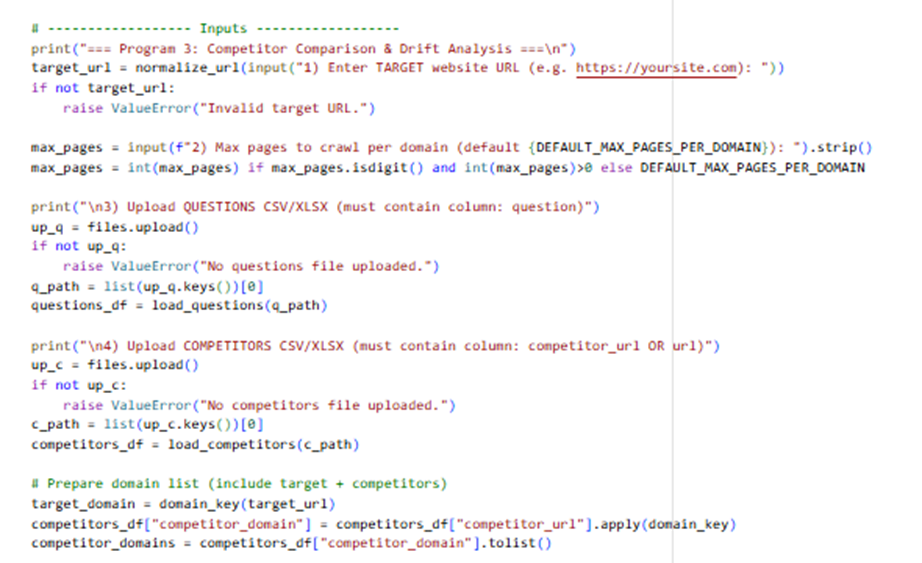

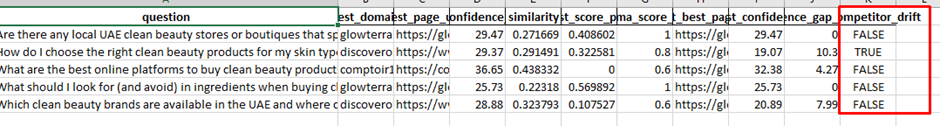

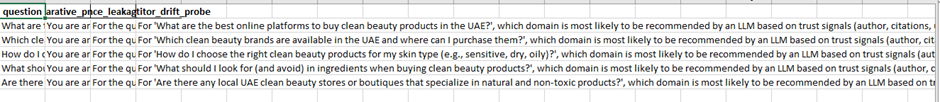

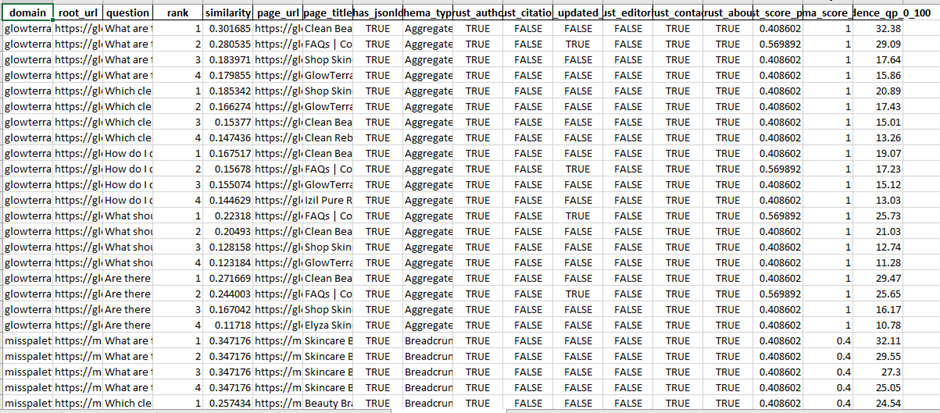

Stage 3: Recommendation Bias Reinforcement + Competitor Drift Analysis | AIEO

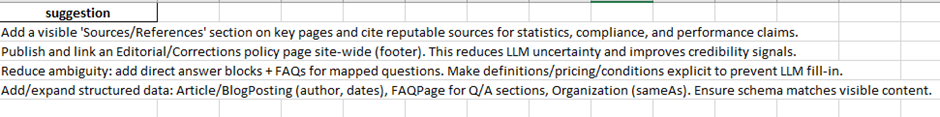

Input: Target website URL, number of pages to crawl, user search questions in an Excel sheet, and a set of competitors in an Excel sheet

# Goals:

# – Comparative prompts (target vs competitors)

# – Preference leakage signals (marketing/affiliate/superlatives, competitor mentions)