SUPERCHARGE YOUR ONLINE VISIBILITY! CONTACT US AND LET’S ACHIEVE EXCELLENCE TOGETHER!

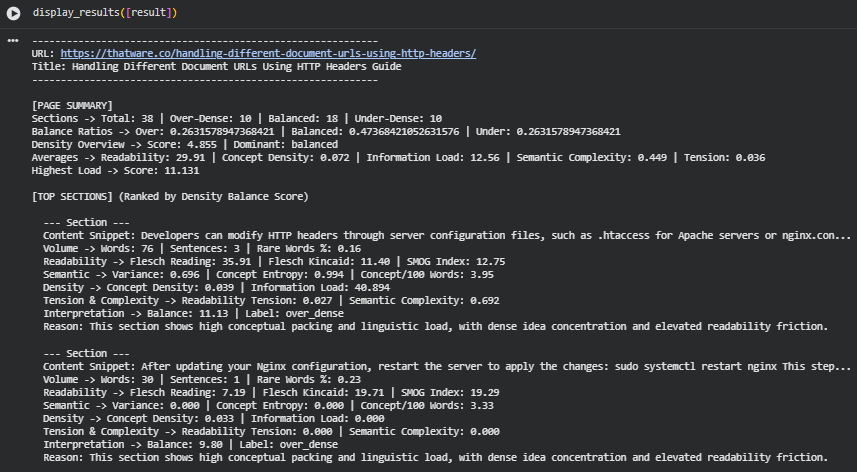

This project delivers a structured analytical system designed to evaluate how well a page balances information depth, conceptual richness, and readability flow. The analysis operates at both the section level and page level, using advanced NLP, linguistic metrics, and semantic modelling to detect areas where content is either overloaded with information or lacking sufficient depth.

The system builds a detailed content profile by extracting webpage text, segmenting it into meaningful sections, and computing multiple density- and readability-related features. These include concept compression, information load, semantic complexity, long-sentence ratios, passive voice prevalence, readability friction, and overall linguistic weight. Through these measurements, each section is assigned a balance score that classifies it as over-dense, balanced, or under-dense.

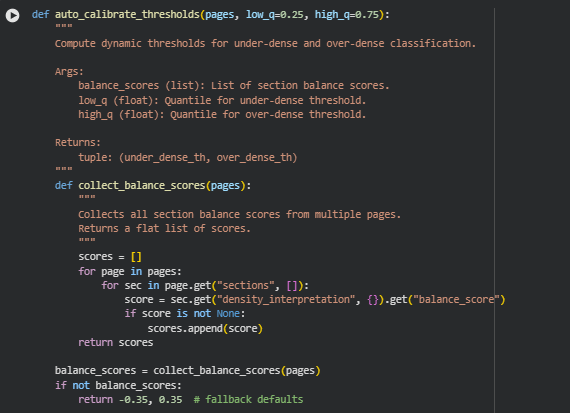

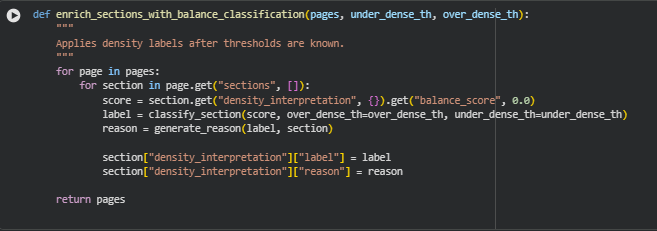

A calibrated thresholding approach ensures that density classification adapts to the global characteristics of each page set. This reduces false positives and enables more meaningful interpretation across varied content styles.

The resulting insights highlight how information is distributed, whether conceptual load is consistent, and which areas may require structural, linguistic, or semantic improvement. The system also includes multiple visualization modules—such as distribution charts, section-level trend analysis, and page-level radar plots—to make patterns easily interpretable.

Overall, the Content Density Equilibrium Analyzer provides a comprehensive, data-driven understanding of how content density, clarity, and reading effort interact across a page. This helps ensure that content maintains both richness and accessibility, supporting stronger engagement, clarity of communication, and improved content performance across search and user experience contexts.

Project Purpose

The primary purpose of the Content Density Equilibrium Analyzer is to provide a structured, data-driven framework for understanding whether page content maintains an effective balance between conceptual depth and readability ease. In practical terms, the system identifies how efficiently information is delivered, how evenly conceptual load is distributed, and where linguistic friction increases or decreases reader comprehension.

Modern SEO and content workflows frequently suffer from two opposing issues:

- Over-dense sections that compress too many ideas, increasing cognitive load and reducing clarity.

- Under-dense sections that contain insufficient semantic depth, lowering informational value and topical strength.

This project addresses both issues by integrating semantic modelling, linguistic measurement, and readability evaluation into a unified diagnostic process. The system quantifies density and clarity through multiple perspectives—concept coverage, information packaging, sentence structure, vocabulary diversity, and semantic variation.

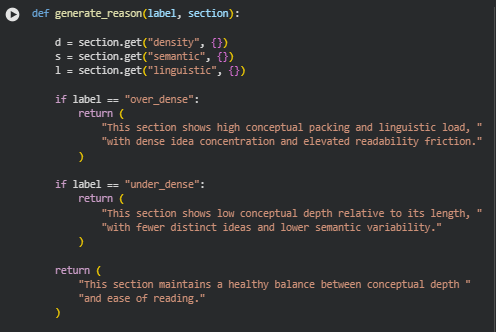

The purpose is not only to measure density but to explain why certain sections deviate from the ideal balance. Each section receives an interpretable balance score, classification label, and a reasoning summary that explains its conceptual or linguistic issues in a way that supports actionable refinement.

The ultimate goal is to enable systematic improvement of content structure, clarity, and topical delivery. By revealing over-loaded or under-developed areas, the tool helps create content that is both rich in information and smooth to read, promoting stronger user engagement, better comprehension, and improved alignment with modern quality-driven search evaluation standards.

Project’s Key Topics Explanation and Understanding

A balanced content experience depends on how effectively a page distributes information, concepts, and linguistic complexity. The Content Density Equilibrium Analyzer evaluates this balance by examining the interplay between semantic depth and readability. The following key topics outline the foundational concepts assessed throughout the project.

Content Density

Content density describes how much meaningful information is packed into a given span of text. High density typically indicates a large concentration of concepts, data, or complex statements within a small section. Low density, on the other hand, reflects minimal conceptual presence despite occupying space.

Density is influenced by three primary elements:

- Concept volume (how many distinct ideas appear)

- Information packaging style (how tightly ideas are grouped)

- Sentence structure complexity (how hard text is to digest)

Sections that compress too many ideas become cognitively demanding, while sections with too few ideas feel shallow or redundant. This project measures density with multiple features to capture both conditions accurately.

Information Depth

Information depth reflects how substantively a section contributes to the overall theme or topic. Depth is not merely about word count; it is about the richness, clarity, and distinctiveness of the ideas provided.

Depth-related measurements include:

- Concept diversity

- Concept entropy

- Semantic variance

- How effectively ideas build on one another

When information depth is too low, a section may appear vague or superficial. When too high, it may overwhelm readers. The project quantifies this depth to understand whether the content meaningfully advances topic understanding.

Readability

Readability describes how easily a human reader can process the language used in a section. Even highly informative content can fail if readability drops below a certain threshold.

Several linguistic signals influence readability:

- Frequency of long or complex sentences

- Passive voice usage

- Vocabulary variation

- Readability indices such as SMOG

The project integrates these signals to assess how smoothly a reader can absorb information. Readability is interpreted alongside semantic features to understand whether linguistic load contributes to over-density or under-density.

Balance Between Depth and Readability

The central principle of this project is the equilibrium point between semantic richness and linguistic simplicity. A balanced section:

- Provides enough conceptual depth to be meaningful

- Distributes ideas without compressing them excessively

- Maintains a reading flow that does not create friction

- Avoids padding or filler content that reduces informational value

The system evaluates balance using a unified score that merges density features, semantic depth indicators, and readability load. This equilibrium score is the foundation for classifying each section as over-dense, balanced, or under-dense.

Over-Dense Sections

An over-dense section concentrates too many ideas, technical elements, or complex structures into too little space. This creates cognitive strain and disrupts clarity.

Typical characteristics include:

- High conceptual compression

- Dense semantic clusters

- Elevated complexity and readability friction

- Heavy informational load

Over-dense sections often require restructuring to improve clarity and pacing without losing informational value.

Under-Dense Sections

An under-dense section contributes limited conceptual or informational value relative to its length. Such sections weaken topic delivery and reduce the perceived depth of a page.

Signals commonly associated with under-density include:

- Low concept diversity

- Minimal semantic variation

- Repetitive or generic statements

- Unused space where more actionable information could exist

Under-dense areas usually require enrichment through clearer ideas, deeper explanation, or more practical insights.

Conceptual Load vs. Linguistic Load

Two different types of “load” influence density:

ceptual Load

The amount and complexity of ideas presented. High conceptual load increases cognitive effort, especially when concepts are tightly packed.

guistic Load

The difficulty imposed by language itself. Text may be grammatically correct yet still hard to read due to long sentences, vocabulary density, or structural complexity.

The analyzer evaluates both loads independently and jointly, allowing precise identification of whether a section is dense due to the content itself, the writing style, or a combination of both.

Semantic Variation and Concept Distribution

Healthy content demonstrates variation in ideas and terminology without redundancy. Semantic variation indicates how diversified and contextually relevant the concepts are across a section.

Key semantic aspects include:

- Distribution of concepts throughout the section

- Diversity of meaningful terms

- Relationships among concepts

- Degree of repetition versus new information

These metrics help determine whether a section advances the topic or merely rephrases the same points.

Balance Score and Classification Framework

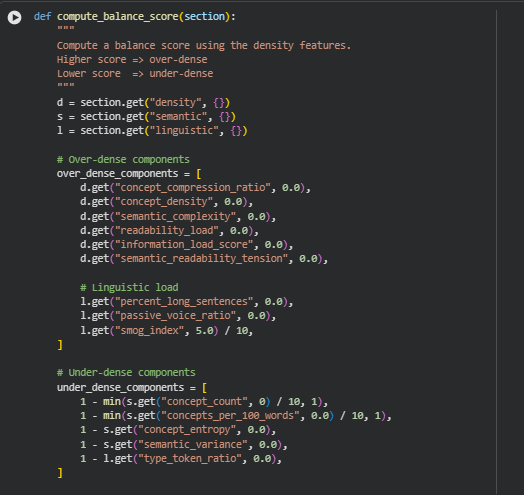

At the core of the analyzer is a balance score that combines multiple categories of features:

- Over-density indicators

- Under-density indicators

- Readability signals

- Semantic depth metrics

- Information load

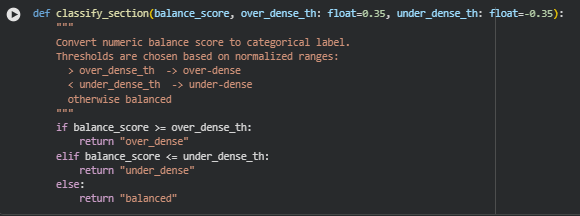

The resulting score is interpreted through calibrated thresholds to classify each section as:

- Over-dense

- Balanced

- Under-dense

Each classification is paired with an interpretation that explains the underlying causes in practical terms.

keyboard_arrow_down

Q&A: Understanding Project Value and Importance

What specific problem does the Content Density Equilibrium Analyzer solve?

Webpages often suffer from imbalance—either by overloading readers with dense, complex information or by presenting content that is shallow and lacks substantive value. This imbalance weakens comprehension, disrupts reading flow, and reduces topical satisfaction. The analyzer identifies these imbalances in a structured, measurable way by evaluating how ideas, complexity, and readability interact within each section. Instead of relying on surface-level metrics like word count, the system assesses semantic depth, information load, and linguistic friction simultaneously. This comprehensive approach makes the resulting insights far more actionable than traditional content audits.

Why is balancing semantic depth and readability important for high-performing content?

High-performing content must communicate meaningful ideas while remaining accessible. When semantic depth is high but readability is poor, readers experience cognitive overload and disengage. When readability is smooth but semantic depth is low, the page appears vague or unhelpful. Balancing both ensures that a page delivers high informational value without making users work too hard to understand it. This equilibrium supports stronger engagement signals, better user experience, and more persuasive content pathways.

How does this system add value beyond regular SEO content audits?

Traditional audits typically focus on keyword placement, visibility factors, and structural elements. However, they rarely analyze the quality of idea distribution within the content itself. This analyzer evaluates the internal balance of meaning across a page. It highlights:

- Where ideas are compressed too tightly

- Where content lacks depth

- How complexity affects readability

- How well semantic variety supports topical coverage

This type of analysis fills a long-standing gap in content quality evaluation, supporting more strategic optimization decisions that go beyond keywords and formatting.

What makes the analyzer different from generic readability checkers or NLP scoring tools?

Generic readability tools assess surface-level linguistic difficulty but ignore meaning. General NLP scoring tools may detect sentiment or topic presence but do not evaluate how well ideas are structured within a section. This analyzer combines both worlds by interpreting conceptual load, semantic diversity, information packaging, and linguistic friction together. Instead of rating readability alone, it determines whether the meaning and the structure reinforce or conflict with each other—something traditional tools cannot measure.

How can content teams benefit from detecting over-dense sections?

Over-dense sections often contain valuable insights but present them in a way that overwhelms readers. By isolating these sections, the analyzer reveals precisely where clarity can be improved without sacrificing depth. Rewriting guidance becomes easier because the system shows whether complexity is driven by excessive ideas, difficult language, or both. This enables more strategic content refinement, improves user engagement, and helps retain readers through information-heavy segments.

How does identifying under-dense sections strengthen topic authority?

Under-dense sections dilute topical depth and reduce perceived expertise. By highlighting these areas, the analyzer reveals where additional explanation, examples, or conceptual expansion will strengthen the page. Rather than guessing which parts feel shallow, teams receive a data-backed indication of where depth is currently insufficient and needs enhancement to boost completeness and authority.

Why is section-level analysis more practical than page-level scoring alone?

A page-level score provides an overall impression, but it hides structural weaknesses inside the content. Pages with strong overall performance can still contain problematic sections that disrupt flow or weaken topic delivery. Section-level analysis pinpoints these areas with precision. This empowers content teams to make targeted improvements, reducing the time and effort required to diagnose issues manually.

How does the analyzer ensure that findings remain aligned with real reading experiences?

The system evaluates both semantic and linguistic elements, reflecting how real readers interpret content. High conceptual load, dense clusters of ideas, or difficult sentence structures produce friction that readers immediately feel. By modeling these signals, the analyzer mirrors genuine user experience rather than relying on superficial indicators. This alignment makes its insights relevant for optimizing readability, depth, and clarity in ways that directly affect audience perception.

Can the analyzer help maintain consistency across multi-section or long-form content?

Yes. Long-form content often varies in density as topics shift, contributors differ, or sections evolve over time. The analyzer provides a unified density baseline against which all sections can be compared. This helps maintain consistent pacing, conceptual depth, and readability throughout the page. The resulting uniformity strengthens narrative flow, improves comprehension, and enhances the professional feel of the content.

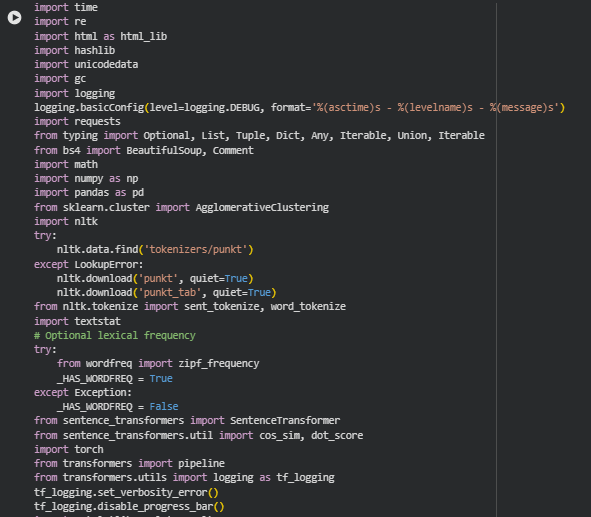

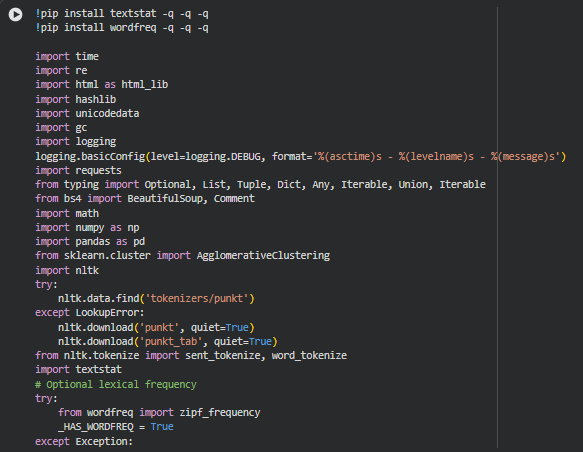

Libraries Used

time

The time library provides basic time-handling utilities such as timestamps and sleep functions, commonly used for performance measurement or pacing operations. It is a lightweight standard library module frequently applied in automation and data processing workflows.

Within this project, time is used during certain stages of data processing to track procedural duration, manage pacing when handling multiple URLs, and assist in debugging performance bottlenecks. This helps ensure stable execution during large-scale content evaluation.

re

The re module implements regular expressions, enabling structured text search, pattern-based extraction, and flexible string manipulation. It is a core tool for any natural language or web data processing task where precise matching or cleaning is required.

In this project, re supports HTML cleanup, text normalization, label trimming for visualizations, and structural extraction of content segments. It ensures consistency in preprocessing and enables accurate parsing of noisy or variable webpage content.

html (html_lib)

The built-in html module provides utilities to handle HTML entities, escape characters, and perform conversions between encoded and decoded text representations. It is particularly relevant when processing raw text extracted from web pages.

Here, html_lib assists in unescaping HTML entities from webpage content, ensuring that the analyzed text reflects human-readable content rather than encoded artifacts. This improves accuracy in linguistic and semantic feature computation.

hashlib

hashlib offers hashing algorithms used to generate unique, reproducible identifiers based on input content. It is widely used for cryptographic hashing, deduplication, and lightweight identity generation.

In this project, it generates unique, stable section identifiers based on section text. While these identifiers are not shown to end users, they provide reliable internal references for tracking sections across processing stages.

unicodedata

The unicodedata module supports Unicode text normalization, character category lookups, and consistent processing of multilingual content. This is essential for text-intensive systems that rely on character-level consistency.

Here, it ensures normalized text representation before tokenization and semantic analysis. By removing inconsistencies such as mixed encodings or combined characters, the project maintains accurate and uniform linguistic measurement.

gc (garbage collector)

The gc module controls Python’s garbage collection, allowing explicit memory cleanup and inspection. It is particularly helpful in large-scale NLP pipelines where memory spikes may occur.

This project uses gc to release unused objects during multi-URL processing, preventing memory buildup when generating embeddings or handling large HTML payloads. This maintains stable performance across long-running sessions.

logging

The logging library provides configurable logging utilities for debugging and monitoring code execution. It is preferred over print statements for production-grade systems due to its flexibility and structured formatting.

Logging is integrated into this project to record processing steps, detect anomalies in data extraction, and support traceability during multi-stage NLP operations. This supports reliability and debugging without interrupting the analytic flow.

requests

The requests library is the industry-standard HTTP client for fetching web content in Python. It simplifies sending requests and managing responses across the web.

This project uses requests to fetch webpage HTML content directly from URLs. It ensures stable retrieval, handles network errors gracefully, and provides a reliable interface for feeding page content into the content-density pipeline.

typing

The typing module provides type annotations that improve readability, reduce errors, and support modern development tools and IDEs.

In the project, typing clarifies expected data structures such as lists of sections, dictionaries of metrics, or optional arguments. This maintains code quality and structural consistency across the entire pipeline.

BeautifulSoup (bs4)

BeautifulSoup is a widely used HTML parsing library that allows structured navigation, cleaning, and extraction of content from web pages.

Here, it extracts readable text from HTML by removing scripts, styles, comments, and structural noise. This ensures that semantic and linguistic analysis focuses solely on meaningful content rather than irrelevant markup.

math

The math library provides mathematical functions and constants used in calculations such as logarithms, trigonometry, and rounding.

This project uses math for geometric and statistical computations, including radar chart angles and score normalization. It enables precise numerical handling within the visualization and density scoring logic.

numpy (np)

NumPy is a foundational numerical computing library offering efficient array operations, mathematical functions, and linear algebra routines.

In this project, NumPy powers vector-based computations, such as embedding norms, density ratios, and matrix transformations. It ensures fast processing when generating semantic metrics and producing aggregated section statistics.

pandas (pd)

Pandas is the standard library for structured data analysis in Python. It supports dataframes, indexing, aggregation, and tabular manipulation.

The project converts section-level and page-level metrics into pandas dataframes for visualization, aggregation, and intermediate organization. This enables efficient plotting, correlation evaluation, and cross-page comparison.

sklearn.cluster.AgglomerativeClustering

AgglomerativeClustering is a hierarchical clustering method used to group items based on similarity. It is often used in NLP workflows to cluster sentences, topics, or embeddings.

Here, it groups semantically similar sentences to support redundancy detection, semantic mass distribution, or topic compactness evaluation. This contributes to downstream density scoring and improves interpretation of conceptual structure.

nltk + punkt tokenizers

NLTK is a classical natural language processing library that provides tokenization, stemming, corpora access, and sentence segmentation.

In this project, NLTK supplies sentence and word tokenizers essential for linguistic metrics such as sentence count, word count, sentence-length distribution, and readability estimates. These foundational metrics feed directly into density scoring.

textstat

Textstat provides a suite of readability formulas such as Flesch Reading Ease, SMOG, and Gunning Fog. These models estimate reading difficulty based on linguistic features.

Here, it computes readability load, long-sentence ratios, and linguistic friction indicators. These scores help determine whether textual complexity contributes to over-density or supports balanced content structure.

wordfreq (optional)

The wordfreq library estimates word frequency using large multilingual corpora. It helps evaluate lexical rarity.

In this project, it optionally enhances lexical richness scoring when available. Rare word usage can signal conceptual depth but may introduce unnecessary friction, influencing overall density classification.

sentence_transformers (SentenceTransformer, cos_sim, dot_score)

sentence_transformers provides state-of-the-art transformer models for embedding text into high-dimensional semantic vectors.

This project uses the model to encode section text for calculating semantic mass, conceptual differentiation, embedding-based complexity metrics, and inter-sentence similarity. These embeddings form the backbone of semantic density scoring.

torch

PyTorch is a deep learning framework used to run transformer models, tensor computations, and GPU-accelerated operations.

It supports the SentenceTransformer encoding process, enabling efficient embedding computation even at scale. Without PyTorch, transformer-based semantic scoring would be significantly slower.

transformers (pipeline)

Hugging Face’s transformers library provides pretrained NLP models for summarization, sentiment, classification, and more.

Here, the sentiment pipeline is used to detect subtle tonal or structural shifts when required. It complements semantic indicators and contributes to the tension score used in density interpretation.

matplotlib.pyplot (plt)

Matplotlib is the foundational plotting library in Python that supports fine-grained, customizable visualizations.

In the project, it renders all major plots—including density distribution, section-level performance, and radar charts. It provides layout control and ensures professional-quality presentation of project results.

seaborn (sns)

Seaborn builds on Matplotlib and provides modern statistical visualizations with enhanced aesthetics and simplicity.

It enables clearer, publication-ready representations of density metrics, comparative distributions, and multi-page trends. Styled plots support intuitive interpretation and make complex data more accessible.

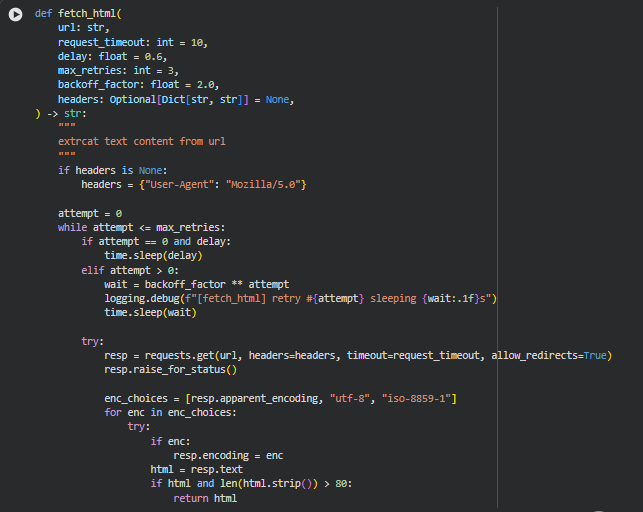

Function: fetch_html

Summary

This function is responsible for retrieving the raw HTML content from a given webpage URL in a stable, fault-tolerant manner. Real-world websites frequently introduce challenges such as slow response times, temporary network drops, redirects, inconsistent character encodings, or incomplete responses. This function is designed to address those challenges through controlled retry logic, flexible encoding handling, adjustable waiting periods, and safety checks that ensure only meaningful HTML content is returned.

Within the project, this function serves as the foundational step of the entire analysis pipeline. Every downstream process—content extraction, structuring, sentence segmentation, embedding generation, and density evaluation—depends on reliable and consistent HTML input. By ensuring robust fetching with retry and backoff mechanisms, the function minimizes disruption during multi-URL analysis and ensures smooth operation even when target sites behave unpredictably.

Key Code Explanations

if headers is None: headers = {“User-Agent”: “Mozilla/5.0”}

A default user agent is applied when none is provided. Many websites block requests that do not resemble standard browser traffic. Using a browser-like user agent helps reduce the chance of being flagged or denied by servers.

Retry Loop:

while attempt <= max_retries:

The function attempts the request multiple times before giving up. This prevents temporary network issues or server delays from causing the entire workflow to fail.

Exponential Backoff:

wait = backoff_factor ** attempt

time.sleep(wait)

When a retry is needed, the waiting time increases exponentially. This mirrors real-world resilient network design, reducing the load on the server and increasing the chance of a successful response after temporary failures.

Encoding Handling:

enc_choices = [resp.apparent_encoding, “utf-8”, “iso-8859-1”]

Webpages frequently use non-standard or incorrectly reported encodings. Trying multiple encoding candidates ensures the returned HTML is readable and not garbled.

HTML Quality Check:

if html and len(html.strip()) > 80:

return html

Very short or empty responses typically indicate errors, blocked access, or partial loads. This check ensures only meaningful HTML content is accepted for analysis.

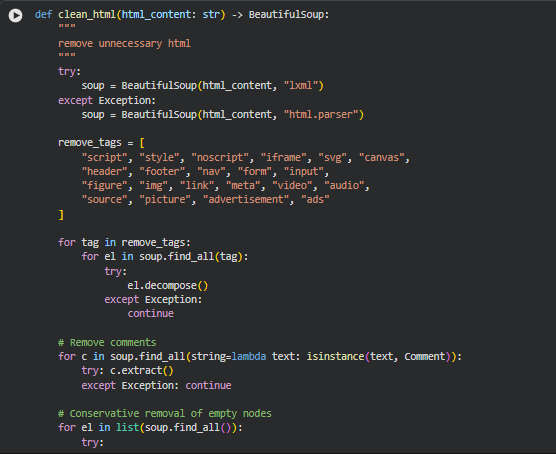

Function: clean_html

Summary

This function transforms the raw HTML into a cleaner and more analysis-ready structure. Webpages often contain large amounts of non-content elements—scripts, styles, navigation panels, ads, tracking tags, media containers, invisible nodes, and comment blocks. These elements provide no semantic value for density assessment, yet they introduce noise that can distort text extraction and downstream computations such as chunking, embedding, and readability scoring.

The function uses BeautifulSoup to parse the HTML and systematically remove irrelevant or disruptive tags. It then clears out HTML comments and prunes empty or placeholder nodes. The result is a purified document tree containing only meaningful textual and structural elements. This cleaned representation ensures that section boundaries, semantic density measures, and content segmentation genuinely reflect the substance of the page rather than clutter or interface artifacts.

Because content density relies heavily on accurate text representation, this cleaning stage directly influences the quality of the final density balancing insights. A well-cleaned HTML structure prevents artificially inflated depth measurements and maintains reliable readability evaluation.

Key Code Explanations

Parser Selection:

soup = BeautifulSoup(html_content, “lxml”)

The function attempts to parse HTML using lxml—a high-performance parser known for speed and accuracy. If unavailable or if parsing fails, it falls back to Python’s built-in “html.parser”, ensuring reliability regardless of environment constraints.

Tag Removal List:

remove_tags = [“script”, “style”, “noscript”, “iframe”, …]

This predefined set includes elements that do not contribute meaningful textual information. Removing them eliminates noise from layouts, advertisements, embedded media, and scripts that may otherwise pollute extracted content.

Iterative Tag Cleanup:

for tag in remove_tags:

for el in soup.find_all(tag):

el.decompose()

Each unwanted tag is identified and removed from the document tree. decompose() ensures both the tag and its children are fully discarded, resulting in a lighter, content-centric structure.

Comment Removal:

for c in soup.find_all(string=lambda text: isinstance(text, Comment)):

c.extract()

HTML comments often contain tracking code, metadata, or developer notes. Removing them ensures only visible and meaningful text remains.

Pruning Empty Nodes:

if not el.get_text(strip=True) and not el.attrs:

el.decompose()

After major tags are cleared, some nodes become empty shells. This condition removes nodes that have no text and no attributes, preventing clutter during content extraction and section segmentation.

Function: _is_boilerplate

Summary

This helper function identifies and filters out boilerplate text that typically appears on webpages but carries no semantic or analytical value. Examples include footer disclaimers, copyright notices, cookie banners, or generic platform credits. These elements can distort content density calculations by adding low-value text, so removing them ensures the analysis focuses only on meaningful sections of the page.

The function performs lightweight pattern matching on lowercase text and checks whether the size of the text block is small enough to qualify as boilerplate. This avoids accidental removal of large, meaningful sections that happen to contain one of the boilerplate terms.

Key Code Explanations

Boilerplate Set Construction:

bps = set(_REDUCED_BOILERPLATE + (boilerplate_terms or []))

This merges a predefined list of boilerplate fragments with any custom terms provided. Using a set improves lookup efficiency during repeated checks.

Boilerplate Detection Logic:

if bp in lower and len(lower.split()) < max_words_for_drop:

return True

The text is classified as boilerplate only if it contains a boilerplate phrase and is short enough to be considered non-content. This prevents legitimate long sections from being accidentally removed.

Function: _safe_normalize

Summary

This function ensures all text segments are normalized, clean, and consistent before any linguistic processing occurs. HTML pages often contain irregular spacing, escaped characters, Unicode inconsistencies, and formatting artifacts. The normalization step converts text into a standardized form that improves the reliability of tokenization, semantic embedding, readability calculation, and density measurement.

By performing Unicode normalization, HTML entity decoding, and whitespace cleanup, this function prepares the text so that downstream functions operate on clean and predictable inputs.

Key Code Explanations

Unicode + HTML Cleanup Pipeline:

txt = html_lib.unescape(text)

txt = unicodedata.normalize(“NFKC”, txt

txt = re.sub(r”[\r\n\t]+”, ” “, txt)

txt = re.sub(r”\s+”, ” “, txt).strip()

This sequence performs three essential cleanup steps:

- Converts HTML entities into readable characters.

- Standardizes Unicode formatting for consistency.

- Removes line breaks, tabs, and excess spaces to ensure clean word boundaries.

Function: preprocess_section_text

Summary

This function performs the final cleaning step for each extracted text section before it is analyzed. It applies normalization, removes inline clutter such as URLs and reference markers, filters out boilerplate fragments, and enforces a minimum word count. The goal is to ensure each section fed into the density computation pipeline contains meaningful, interpretable text with minimal noise.

By strengthening the signal-to-noise ratio, this function ensures that semantic embeddings, concept extraction, readability assessment, and density balancing reflect the actual content quality rather than structural residue or irrelevant text blocks.

Key Code Explanations

URL Removal:

text = re.sub(r”https?://\S+|www\.\S+”, ” “, text)

Inline URLs often inflate word counts and introduce irrelevant tokens. This line safely removes them to prevent distortion in readability and density metrics.

Inline Reference Removal:

text = re.sub(r”\[\d+\]|\(\d+\)”, ” “, text)

Academic-style inline references provide no value to density assessment and can interfere with token distribution, so they are stripped.

Boilerplate Filtering:

if _is_boilerplate(text, boilerplate_terms=boilerplate_terms):

return “”

By integrating the earlier helper function, this step ensures generic non-informational text does not enter the analysis pipeline.

Minimum Length Enforcement:

if len(text.split()) < min_word_count:

return “”

Very short fragments lack sufficient semantic structure for density evaluation. This condition prevents them from being misinterpreted as meaningful content.

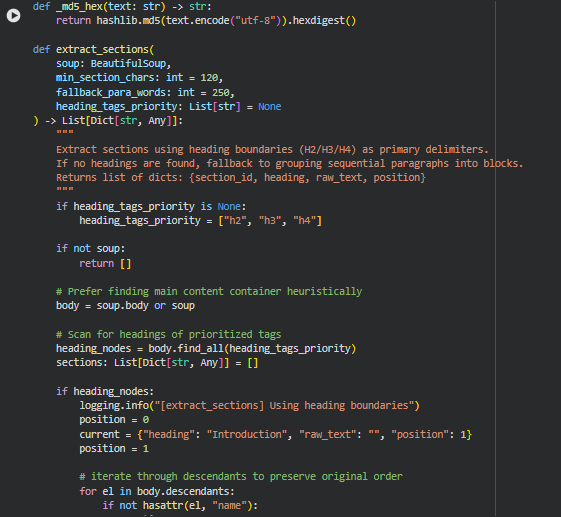

Function: _md5_hex

Summary

This function generates a deterministic and compact hash string for any given text using the MD5 hashing algorithm. The purpose is to create stable identifiers for extracted sections. Webpage content often contains many elements with similar headings or similar text fragments. Instead of relying on raw text or numeric counters—which can collide or change depending on parsing order—this hash-based identifier guarantees uniqueness while keeping the ID short, consistent, and reproducible across runs.

Since section integrity is important for density scoring, semantic embedding, and later result mapping, the use of a stable MD5 hash ensures that each extracted section can be reliably tracked throughout the pipeline.

Key Code Explanations

MD5 Hash Generation:

return hashlib.md5(text.encode(“utf-8”)).hexdigest()

The input text is encoded to UTF-8 bytes, hashed, and converted to a hexadecimal string. This ensures cross-platform stability and avoids issues caused by special characters in raw IDs.

Function: extract_sections

Summary

This function performs structured extraction of meaningful content sections from the cleaned HTML. It uses heading tags as primary boundaries because headings represent natural semantic segmentation created by the page author. When headings are present, the function walks through the document in reading order and constructs section blocks under each heading, merging associated paragraphs and list items.

In cases where a webpage lacks proper headings—common in poorly structured content or dynamically generated pages—the function falls back to grouping sequential paragraphs into blocks of similar size. This ensures robust section extraction even in non-standard HTML layouts.

The function also ensures each section is long enough to be meaningful, assigns a unique ID to each block using the _md5_hex helper, records the heading, raw text, and position, and outputs a list of structured, analyzable sections ready for semantic and density computations.

Key Code Explanations

Heading-Based Section Detection:

heading_nodes = body.find_all(heading_tags_priority)

This detects the presence of any H2, H3, or H4 tags (or any custom hierarchy supplied). If headings exist, the function will use them as natural section dividers.

Iterating Through Document in Reading Order:

for el in body.descendants:

Using descendants preserves the original DOM order, ensuring text flows naturally as the reader would experience it. This prevents fragmentation or misordering of paragraphs.

Heading Boundary Trigger:

if name in heading_tags_priority:

if len(current[“raw_text”].strip()) >= min_section_chars:

sec_id_src = f”{current[‘heading’]}_{current[‘position’]}”

current[“section_id”] = _md5_hex(sec_id_src)

sections.append(current)

When a new heading is encountered, the previous section is finalized—but only if it has enough text to be meaningful—before starting a new block. This avoids creating empty or trivial sections.

Paragraph Accumulation:

if name in (“p”, “li”):

txt = _safe_normalize(el.get_text())

if txt:

current[“raw_text”] += ” ” + txt

Paragraphs and list items are the main carriers of human-readable text. Each one is cleaned and appended to the current section to keep content coherent.

Fallback Paragraph Grouping:

if len(buffer_words) >= fallback_para_words:

heading = f”Section {position}”

If no headings exist, the function groups paragraphs until they reach a target word count (default 250 words), then emits a section block. This ensures even poorly structured pages still produce well-formed analytical sections.

Section ID Generation:

sec_id_src = f”{heading}_{position}”

“section_id”: _md5_hex(sec_id_src)

Combining heading text and its order ensures each section receives a unique and stable ID for later analysis.

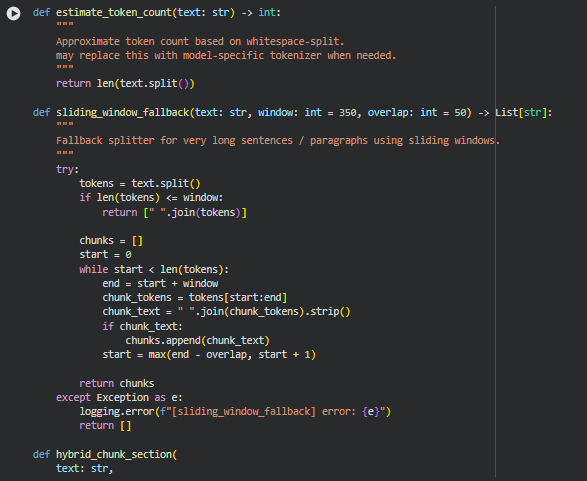

Function: estimate_token_count

Summary

This utility function provides a lightweight approximation of token count by splitting text based on whitespace. Although true tokenization depends on the model in use, this approximation serves as a practical method for chunk sizing, windowing, and sentence grouping without imposing the overhead of loading model-specific tokenizers. It is fast, deterministic, and works consistently across all sections of the pipeline that need quick length estimation.

Because chunk formation, fallback splitting, and size thresholds depend on approximate token lengths rather than exact model tokens, this approach ensures predictable performance and avoids unnecessary complexity.

Function: sliding_window_fallback

Summary

This function generates controlled, overlapping text segments when sentences or paragraph blocks exceed a predefined maximum window size. Very long sentences can break standard semantic chunking workflows, and this fallback ensures that such content is still processed without truncation. It operates by sliding a window across the tokenized text with a configurable overlap, creating coherent chunks that maintain context continuity.

This fallback is essential for pages containing highly compressed or technical text, where extremely long sentences are common. Its behavior ensures that downstream embedding models receive manageable and context-rich inputs.

Key Code Explanations

Sliding window loop:

while start < len(tokens):

end = start + window

chunk_tokens = tokens[start:end]

The window moves across the token sequence, capturing fixed-size segments until the end of the text is reached.

Controlled overlap logic:

start = max(end – overlap, start + 1)

This ensures chunks overlap by a defined number of tokens, preserving context between segments and preventing abrupt semantic breaks.

Function: hybrid_chunk_section

Summary

This function performs multi-stage chunking designed specifically for semantic analysis. It first divides the section into sentences, then accumulates them until a threshold is reached, producing balanced, semantically coherent chunks. If a single sentence exceeds the maximum allowed size, the function switches to the sliding window fallback to avoid skipping or breaking the content.

After chunk formation, any chunk that falls below the minimum token threshold is removed to maintain analysis quality. This layered approach ensures that every processed section yields manageable, meaningful units of text suitable for embedding models and density scoring.

Key Code Explanations

Sentence accumulation logic:

current_chunk.append(sent)

token_est = estimate_token_count(” “.join(current_chunk))

Each sentence is added to the current chunk, and its size is estimated. This ensures semantic grouping by respecting sentence boundaries.

Oversized sentence fallback:

if len(current_chunk) == 1:

chunks.extend(

sliding_window_fallback(

current_chunk[0],

window=max_tokens,

overlap=sliding_overlap

)

)

When a single sentence is too long, the fallback prevents pipeline failure and preserves content richness through controlled segmentation.

Chunk emission before overflow:

final_chunk = ” “.join(current_chunk[:-1]).strip()

If adding a sentence causes the chunk to exceed the token limit, the previous sentences are finalized as one chunk, and the oversized sentence begins a new block.

Final chunk handling:

if estimate_token_count(final_text) > max_tokens:

chunks.extend(

sliding_window_fallback(final_text, window=max_tokens, overlap=sliding_overlap)

)

Any leftover text after sentence traversal is evaluated independently to ensure it fits size constraints, using sliding windows if necessary.

Final filtration:

cleaned = [c.strip() for c in chunks if estimate_token_count(c.strip()) >= min_tokens]

Chunks that are too short to provide meaningful semantic signals are removed to maintain analysis fidelity.

Function: extract_preprocess_and_chunk_page

Summary

This function acts as the full pipeline controller for a single webpage: it fetches the raw HTML from the URL, cleans the HTML structure, extracts meaningful content sections, preprocesses the text, and finally chunks the cleaned text into model-ready units. It is the unifying layer that brings together all lower-level utilities such as HTML fetching, cleaning, section extraction, text preprocessing, and hybrid chunking. Because this is the function clients indirectly benefit from when running the tool, it ensures that even if individual steps fail, the output remains structured, predictable, and useful for downstream ranking analysis, semantic evaluation, or any NLP processing.

The function’s workflow starts by making a robust request to fetch the webpage. If that succeeds, it parses and sanitizes the HTML to remove noise such as scripts, styling, or boilerplate UI components. It then identifies section-level content, cleans the textual data by removing unnecessary or non-informative segments, checks for minimum quality thresholds, and prepares the text for chunking. Once the sections are validated, the chunking system breaks long text into manageable token-bounded segments so that LLM-based models can efficiently process them without losing semantic coherence. Finally, all chunks are organized with metadata such as section position, estimated tokens, and text statistics, resulting in a well-structured and analyzable dataset.

This function is critical because it guarantees consistent structure regardless of HTML variations, content length, or preprocessing edge cases. It centralizes all logic into a clean, deterministic output format essential for the project’s downstream semantic analysis tasks.

The function returns a clean, predictable dictionary object that downstream pipeline components rely upon. The note field only populates in case of errors, making debugging more straightforward.

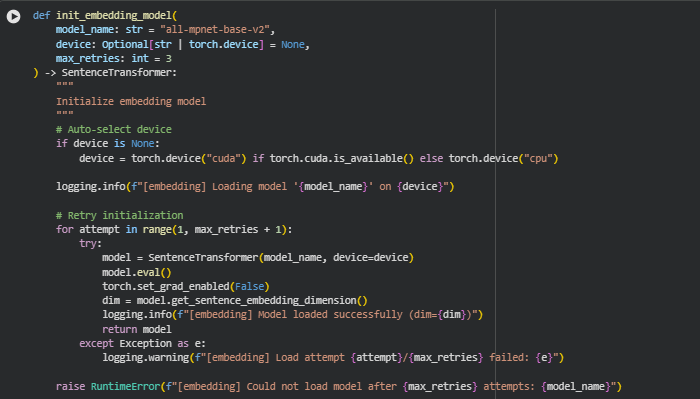

Function: init_embedding_model

Summary

This function loads and initializes the sentence-embedding model used throughout the project. It selects the appropriate compute device (GPU if available, otherwise CPU), loads the specified SentenceTransformer model, and configures it for inference so that no gradients are tracked. A retry mechanism safeguards against temporary model download failures, network instability, or hardware-related initialization errors. The output is a fully ready, inference-optimized embedding model that can quickly transform text chunks into high-quality vector embeddings.

Because embedding generation is central to all downstream semantic tasks—ranking, alignment analysis, depth evaluation—this function ensures the model is always correctly loaded and stable before any text processing begins. Even though the function is short, it plays a crucial role in project robustness.

Key Code Explanations

- Device Auto-Selection

if device is None:

device = torch.device(“cuda”) if torch.cuda.is_available() else torch.device(“cpu”)

This logic automatically chooses the best computation device. If a GPU is available, embeddings are computed far faster; otherwise, the CPU is used. This ensures the function adapts seamlessly to the environment without requiring manual configuration.

- Model Loading with Retries

for attempt in range(1, max_retries + 1):

try:

model = SentenceTransformer(model_name, device=device)

…

return model

A retry loop increases reliability. If the first attempt fails—due to transient network issues, download corruption, or device locking—the function tries again. This prevents the entire pipeline from failing prematurely and makes the system resilient for real-world usage.

- Setting the Model for Inference-Only Mode

model.eval()

torch.set_grad_enabled(False)

These two lines disable gradient computation globally for this model. Since the model is only used to generate embeddings (not to train), disabling gradients saves memory, reduces overhead, and increases speed during inference.

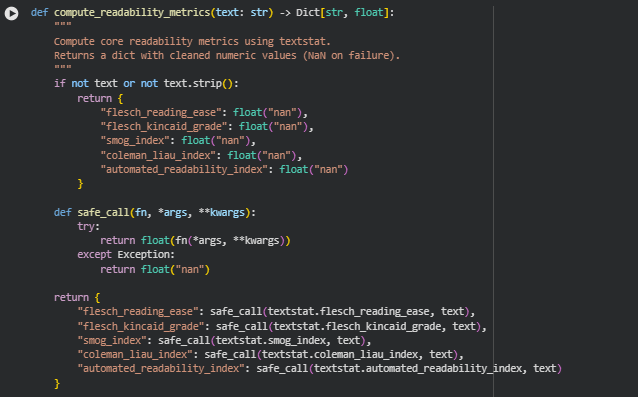

Function: compute_readability_metrics

Summary

This function calculates core readability scores for a given text using the textstat library. The purpose is to quantify how easy or difficult a section of content is to read, which directly relates to the “readability” aspect of the Semantic Content Density Balancer project. By computing multiple standardized readability metrics, the function provides a multi-dimensional view of text complexity, sentence structure, and cognitive load. Each metric is returned in a dictionary with float values, while handling any errors gracefully by returning NaN for invalid or empty text.

These metrics are later used to compute section-level linguistic load, overall balance, and to support interpretability in section density assessment.

Key Code Explanations

- Safe Metric Computation

def safe_call(fn, *args, **kwargs):

try:

return float(fn(*args, **kwargs))

except Exception:

return float(“nan”)

The safe_call helper wraps each textstat function call to catch errors caused by unusual input (e.g., very short text, special characters). This guarantees robust metric calculation across diverse web content.

- Computing Multiple Readability Metrics

return {

“flesch_reading_ease”: safe_call(textstat.flesch_reading_ease, text),

“flesch_kincaid_grade”: safe_call(textstat.flesch_kincaid_grade, text),

“smog_index”: safe_call(textstat.smog_index, text),

“coleman_liau_index”: safe_call(textstat.coleman_liau_index, text),

“automated_readability_index”: safe_call(textstat.automated_readability_index, text)

}

Here, five widely recognized readability formulas are applied:

- Flesch Reading Ease: Higher values indicate easier text.

- Flesch-Kincaid Grade Level: Represents U.S. school grade level needed to comprehend the text.

- SMOG Index: Estimates years of education required for understanding.

- Coleman-Liau Index: Uses characters per word and sentence length to evaluate readability.

- Automated Readability Index (ARI): Provides another grade-level approximation for text complexity.

By computing multiple metrics, the function gives a balanced assessment of readability that considers both word and sentence complexity.

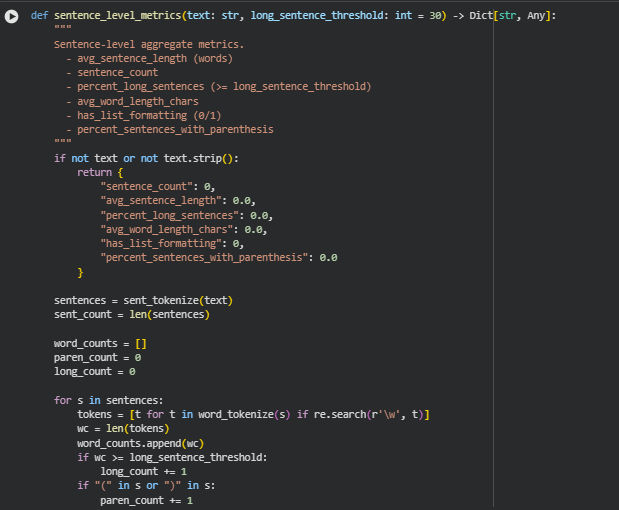

Function: sentence_level_metrics

Summary

This function calculates sentence-level linguistic metrics to quantify the structural characteristics of a given text. It provides insights into sentence length, complexity, and formatting patterns, which are crucial for understanding readability and cognitive load in content. Metrics include the total number of sentences, average sentence length, the proportion of long sentences, average word length, presence of list-style formatting, and the percentage of sentences containing parentheses. These measurements are important for the Semantic Content Density Balancer project because they directly feed into over-dense and under-dense analysis of sections, complementing semantic and conceptual density calculations.

The results help in assessing linguistic load, sentence complexity, and readability friction, providing a detailed layer of interpretability for each content section.

Key Code Explanations

- Sentence Tokenization and Counting Long Sentences

sentences = sent_tokenize(text)

sent_count = len(sentences)

…

for s in sentences:

tokens = [t for t in word_tokenize(s) if re.search(r’\w’, t)]

…

if “(” in s or “)” in s:

paren_count += 1

This block performs several critical operations: it splits the text into sentences, counts the words per sentence, identifies sentences exceeding the long_sentence_threshold, and counts sentences containing parentheses. These computations provide direct measures of sentence complexity and structural load.

Average Word Length Calculation

“ words_all = [w for w in word_tokenize(text) if re.search(r’\w’, w)] if words_all: avg_word_len = float(np.mean([len(w) for w in words_all])) `

Here, the function calculates the average number of characters per word, offering insight into lexical density and the potential reading difficulty of the text.

Detection of List Formatting

has_list = 1 if re.search(r'(^|\n)\s*([-*•]|\d+\.)\s+’, text) else 0

This regex detects the presence of common list formats such as bullets (-, *, •) or numbered lists (1., 2.), providing an indication of content organization and visual chunking.

Percentages of Long Sentences and Parentheses

percent_long = (long_count / sent_count) if sent_count else 0.0

percent_paren = (paren_count / sent_count) if sent_count else 0.0

These lines normalize the counts of long sentences and sentences with parentheses to percentages, enabling consistent comparison across sections of varying lengths.

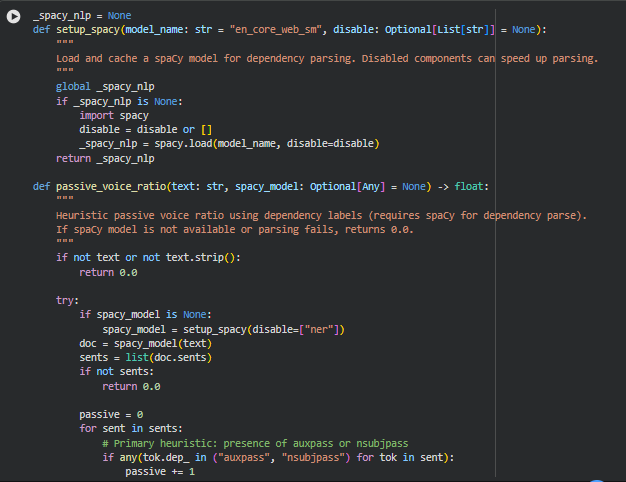

Function: setup_spacy

Summary

This function initializes and caches a spaCy NLP model for dependency parsing. It allows selective disabling of pipeline components to speed up processing when full NLP functionality is not required. The cached model ensures that repeated calls to spaCy do not reload the model, improving efficiency for multi-section or multi-page analysis. Dependency parsing is a core step for identifying grammatical structures, particularly for linguistic metrics like passive voice detection.

Function: passive_voice_ratio

Summary

The passive_voice_ratio function calculates the proportion of sentences in a given text that are written in the passive voice. Passive voice sentences tend to increase cognitive load and affect readability, making this metric valuable for assessing linguistic density. The function relies on a spaCy dependency parse to identify passive constructions using both primary and secondary heuristics.

Key Code Explanations

Handling Missing or Empty Text

if not text or not text.strip():

return 0.0

This ensures robustness by returning a safe default when the input text is empty or only contains whitespace.

Loading or Using Cached spaCy Model

if spacy_model is None:

spacy_model = setup_spacy(disable=[“ner”])

doc = spacy_model(text)

sents = list(doc.sents)

The function either uses the provided spaCy model or calls setup_spacy to load a cached model with the Named Entity Recognition component disabled for efficiency. The text is then parsed into sentences for further analysis.

Primary Passive Voice Detection

if any(tok.dep_ in (“auxpass”, “nsubjpass”) for tok in sent):

passive += 1

continue

This checks for dependency labels auxpass or nsubjpass, which are canonical indicators of passive voice in English, and counts such sentences as passive.

Secondary Heuristic: Be-Aux + Past Participle

for i, tok in enumerate(sent):

if tok.lemma_.lower() in (“be”, “is”, “was”, “were”, “been”, “being”) and i + 1 < len(sent):

nxt = sent[i + 1]

if getattr(nxt, “tag_”, None) == “VBN”:

passive += 1

break

This pattern identifies sentences where a form of “be” is immediately followed by a past participle (VBN), which is a common passive voice construction. It serves as a fallback for cases where the dependency parse may not explicitly label a token as passive.

Safe Ratio Calculation

return passive / len(sents)

The function returns the fraction of passive sentences over total sentences, providing a normalized ratio that can be used in density scoring. If spaCy fails or is missing, the function safely returns 0.0 to avoid breaking the pipeline.

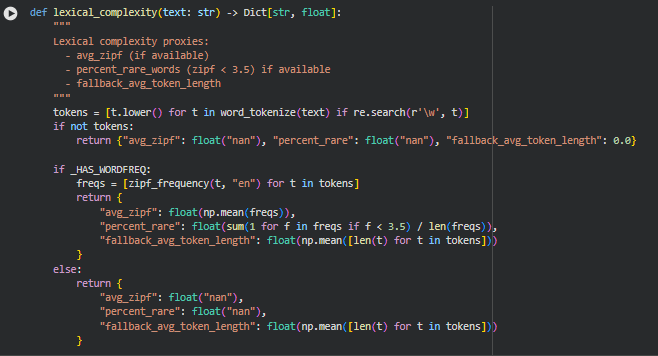

Function: lexical_complexity

Summary

The lexical_complexity function estimates the lexical richness and difficulty of a text. It uses word-level metrics to quantify how complex or rare the vocabulary is, which directly affects readability and semantic density. When the optional wordfreq library is available, the function computes average Zipf frequency and the percentage of rare words. As a fallback, it calculates average token length to provide a basic approximation of lexical difficulty. These metrics are useful in understanding how challenging a section might be for readers and contribute to the overall density interpretation.

Key Code Explanations

Tokenization and Filtering

tokens = [t.lower() for t in word_tokenize(text) if re.search(r’\w’, t)]

This line splits the text into individual word tokens while filtering out punctuation or non-word symbols. All tokens are converted to lowercase to ensure consistent lexical analysis.

Handling Empty Text

if not tokens:

return {“avg_zipf”: float(“nan”), “percent_rare”: float(“nan”), “fallback_avg_token_length”: 0.0}

If no valid word tokens are found, the function returns safe default values to avoid downstream errors.

Lexical Metrics Using Word Frequency

if _HAS_WORDFREQ:

freqs = [zipf_frequency(t, “en”) for t in tokens]

return {

“avg_zipf”: float(np.mean(freqs)),

“percent_rare”: float(sum(1 for f in freqs if f < 3.5) / len(freqs)),

“fallback_avg_token_length”: float(np.mean([len(t) for t in tokens]))

}

If the wordfreq library is installed, this block computes the Zipf frequency for each token. Average Zipf frequency reflects overall word commonality, and percent_rare calculates the proportion of rare words (Zipf < 3.5). The fallback average token length is still computed for reference.

Fallback Without Word Frequency

else:

return {

“avg_zipf”: float(“nan”),

“percent_rare”: float(“nan”),

“fallback_avg_token_length”: float(np.mean([len(t) for t in tokens]))

}

If wordfreq is unavailable, the function provides NaN for Zipf-based metrics but still returns the average token length, ensuring minimal lexical complexity insight is retained.

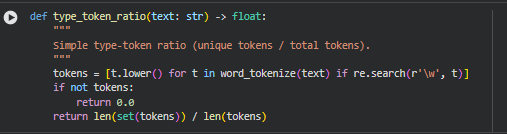

Function type_token_ratio

Summary

This function calculates the type-token ratio (TTR) of a given text, which measures lexical diversity. It is computed as the number of unique tokens (types) divided by the total number of tokens. Higher values indicate more varied vocabulary, while lower values suggest repetition. The function tokenizes the text using NLTK, filters non-alphanumeric tokens, and converts all tokens to lowercase to ensure consistency. This metric helps assess content richness and semantic complexity.

Key Code Explanations

Tokenization and normalization

tokens = [t.lower() for t in word_tokenize(text) if re.search(r’\w’, t)]

The text is split into individual word tokens. Tokens that contain no word characters (like punctuation or symbols) are removed. All tokens are converted to lowercase to avoid counting the same word with different cases multiple times. This ensures an accurate count of unique words.

Type-token ratio computation

return len(set(tokens)) / len(tokens)

The unique tokens are identified using set(tokens). Dividing the number of unique tokens by the total token count gives a ratio between 0 and 1, representing the lexical diversity of the text. For empty or whitespace-only text, the function safely returns 0.0.

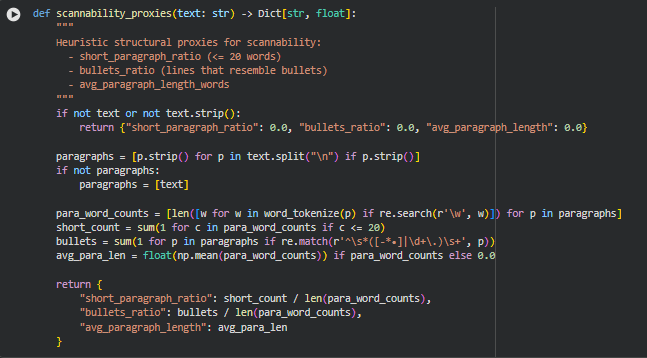

Function scannability_proxies

Summary

This function calculates heuristic structural metrics to estimate the scannability of a text. Scannability reflects how easily a reader can skim through the content. The function considers short paragraphs, bullet-like lines, and average paragraph length. These proxies help determine if the content layout supports quick comprehension, which is critical for web readability and user engagement. For empty or whitespace-only input, it safely returns zeros.

Key Code Explanations

Paragraph extraction and fallback

paragraphs = [p.strip() for p in text.split(“\n”) if p.strip()]

if not paragraphs:

paragraphs = [text]

The text is split on newline characters to identify paragraphs. Leading and trailing spaces are removed. If no paragraph breaks are detected, the entire text is treated as a single paragraph, ensuring the function can handle unformatted text.

Word count per paragraph

para_word_counts = [len([w for w in word_tokenize(p) if re.search(r’\w’, w)]) for p in paragraphs]

Each paragraph is tokenized using NLTK, counting only alphanumeric tokens. This yields an accurate word count per paragraph, which is used to calculate metrics like short paragraph ratio and average paragraph length.

Short paragraph ratio and bullet detection

short_count = sum(1 for c in para_word_counts if c <= 20)

bullets = sum(1 for p in paragraphs if re.match(r’^\s*([-*•]|\d+\.)\s+’, p))

short_count tracks paragraphs with 20 or fewer words. bullets counts lines resembling lists using a regular expression that matches common bullet symbols or numbered patterns. These proxies reflect quick readability and structured content.

Average paragraph length and final metric computation

avg_para_len = float(np.mean(para_word_counts)) if para_word_counts else 0.0

return {

“short_paragraph_ratio”: short_count / len(para_word_counts),

“bullets_ratio”: bullets / len(para_word_counts),

“avg_paragraph_length”: avg_para_len

}

The mean word count per paragraph is calculated for overall content density. Ratios for short paragraphs and bullets are normalized by the number of paragraphs. These metrics collectively quantify scannability in a concise and interpretable way.

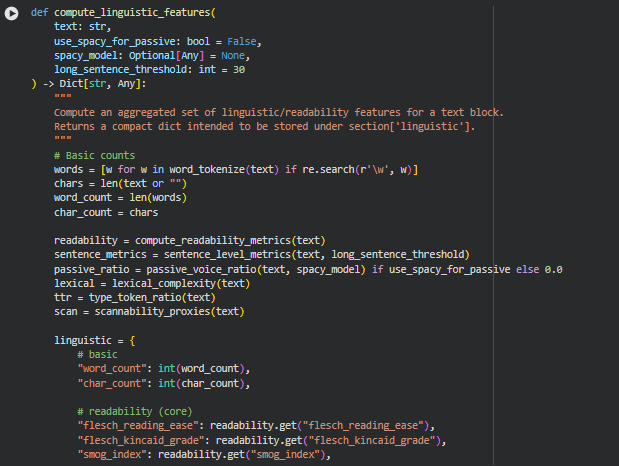

Function compute_linguistic_features

Summary

This function aggregates a comprehensive set of linguistic and readability metrics for a given text block. It is designed to produce a compact dictionary that can be stored under section[‘linguistic’] in the page analysis pipeline. The metrics cover multiple dimensions including basic counts (words, characters), readability (Flesch, SMOG, etc.), sentence-level properties (average length, long sentences), lexical complexity, type-token ratio, scannability, and optionally passive voice ratio. Collectively, these features provide a holistic view of the text’s linguistic profile, which is critical for evaluating content density, readability, and user experience.

Key Code Explanations

Basic word and character counts

words = [w for w in word_tokenize(text) if re.search(r’\w’, w)]

chars = len(text or “”)

word_count = len(words)

char_count = chars

The function tokenizes the text using NLTK and filters for alphanumeric tokens. This ensures accurate word counts. Character counts are computed directly from the text. These basic counts provide a foundation for other derived metrics such as average sentence or paragraph length.

Conditional passive voice computation

passive_ratio = passive_voice_ratio(text, spacy_model) if use_spacy_for_passive else 0.0

If use_spacy_for_passive is enabled, the function calls passive_voice_ratio to estimate the proportion of sentences written in passive voice using dependency parsing. If disabled, it defaults to 0.0. This allows optional analysis depending on performance or dependency constraints.

Aggregation of multiple feature groups

linguistic = {

# basic

“word_count”: int(word_count),

“char_count”: int(char_count),

# readability (core)

“flesch_reading_ease”: readability.get(“flesch_reading_ease”),

…

This block consolidates features from multiple sub-functions (compute_readability_metrics, sentence_level_metrics, lexical_complexity, type_token_ratio, scannability_proxies) into a single dictionary. Each metric is converted to the appropriate numeric type for consistency and downstream processing. By combining these metrics, the function provides a unified view of linguistic characteristics, making it easier to evaluate content density, readability, and structural clarity at the section level.

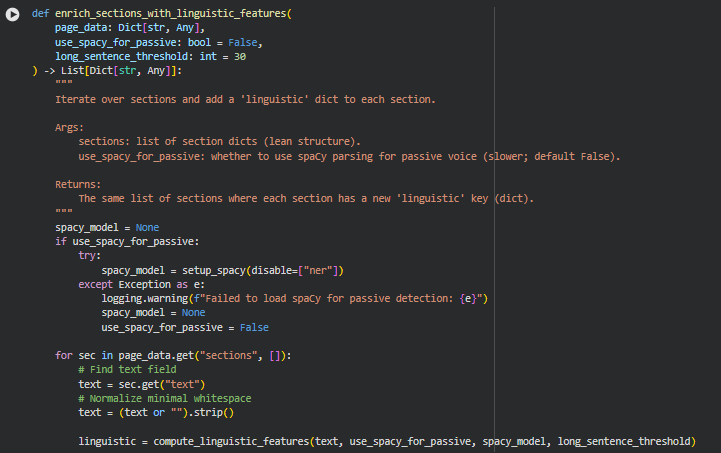

Function enrich_sections_with_linguistic_features

Summary

This function iterates over all sections in a page and enriches each section with a detailed linguistic profile. The linguistic profile is stored under the ‘linguistic’ key in each section dictionary. The function leverages compute_linguistic_features to calculate a wide range of metrics including readability, sentence-level statistics, lexical complexity, type-token ratio, scannability proxies, and optional passive voice ratio. It supports optional spaCy-based passive voice detection, which can improve the accuracy of syntactic analysis but may increase processing time. By adding these features, every section gains a standardized representation of its linguistic and readability characteristics, which is crucial for evaluating content density and clarity systematically across a page.

Key Code Explanations

Conditional spaCy model initialization for passive voice detection

spacy_model = None

if use_spacy_for_passive:

try:

spacy_model = setup_spacy(disable=[“ner”])

except Exception as e:

logging.warning(f”Failed to load spaCy for passive detection: {e}”)

spacy_model = None

use_spacy_for_passive = False

This block attempts to load a spaCy model if use_spacy_for_passive is enabled. By disabling unnecessary components like Named Entity Recognition (ner), parsing is faster. If loading fails, it logs a warning and disables passive voice analysis for safety. This ensures that the function remains robust even if the optional NLP dependency is unavailable.

Iterating through sections and adding linguistic metrics

for sec in page_data.get(“sections”, []):

text = sec.get(“text”)

text = (text or “”).strip()

linguistic = compute_linguistic_features(text, use_spacy_for_passive, spacy_model, long_sentence_threshold)

sec[“linguistic”] = linguistic

The function loops through each section, retrieves its text content, and normalizes it minimally by stripping whitespace. It then calls compute_linguistic_features to generate a full set of linguistic metrics. These metrics are added back into the section dictionary under the ‘linguistic’ key. This approach ensures that every section consistently contains both raw content and computed linguistic attributes, forming a complete dataset for downstream analysis of content density and readability.

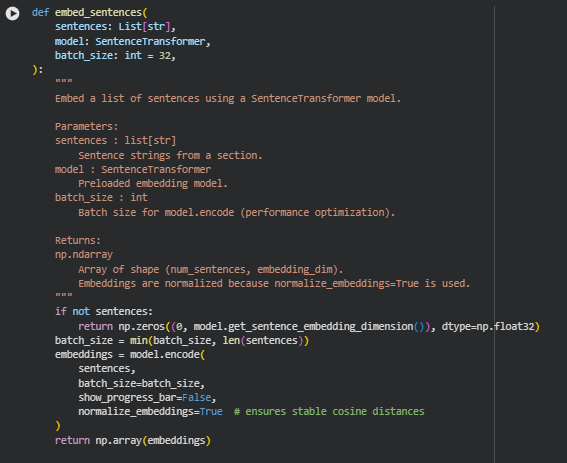

Function embed_sentences

Summary

This function converts a list of sentences into dense vector representations using a SentenceTransformer model. Sentence embeddings allow capturing semantic meaning in a numerical form, which is essential for comparing sections or sentences based on their semantic similarity, clustering related content, or downstream analysis like density evaluation. The function supports batching to optimize memory usage and processing time, and it normalizes embeddings to ensure that subsequent similarity calculations using cosine distance are stable and consistent.

Key Code Explanations

Handling empty sentence lists

if not sentences:

return np.zeros((0, model.get_sentence_embedding_dimension()), dtype=np.float32)

This line checks if the input list of sentences is empty. If so, it returns an empty NumPy array with the correct embedding dimension. This prevents downstream errors when attempting to embed an empty section, ensuring robustness.

Embedding sentences in batches with normalization

embeddings = model.encode(

sentences,

batch_size=batch_size,

show_progress_bar=False,

normalize_embeddings=True

)

Here, the SentenceTransformer’s encode method is called to generate embeddings. The batch size is set dynamically for efficiency. The normalize_embeddings=True argument scales all vectors to unit length, which stabilizes cosine similarity calculations later on. This ensures that distances between sentence vectors accurately reflect semantic differences without being affected by vector magnitude.

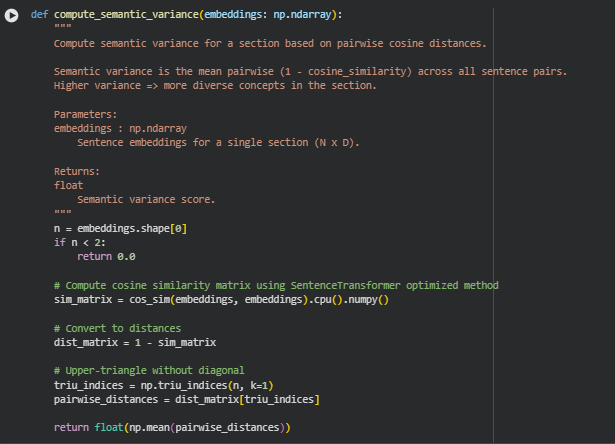

Function compute_semantic_variance

Summary

This function calculates the semantic variance of a section by measuring how diverse the sentences are in terms of meaning. It uses the pairwise cosine distances between sentence embeddings. A high semantic variance indicates that a section contains a wide range of ideas or concepts, while a low variance suggests semantic redundancy. This metric is critical for evaluating information density and conceptual richness in content, which are key aspects of this project.

Key Code Explanations

Check for minimum number of sentences

n = embeddings.shape[0]

if n < 2:

return 0.0

This line ensures that variance is only computed when the section has at least two sentences. A single sentence cannot provide pairwise diversity, so the function safely returns 0.0 for such cases.

Compute cosine similarity matrix

sim_matrix = cos_sim(embeddings, embeddings).cpu().numpy()

The function uses cos_sim from sentence-transformers to efficiently compute pairwise cosine similarities for all sentence embeddings. The result is converted to a NumPy array for further manipulation. Cosine similarity measures semantic closeness, where 1.0 is identical meaning and 0.0 indicates orthogonal semantics.

Convert similarity to distance

dist_matrix = 1 – sim_matrix

Cosine similarity is converted into a distance metric. Distance values close to 1 indicate sentences are semantically very different, and values near 0 indicate semantic similarity. This conversion aligns with the notion of “variance” as semantic spread.

Extract upper-triangle distances

triu_indices = np.triu_indices(n, k=1)

pairwise_distances = dist_matrix[triu_indices]

Only the upper-triangle of the distance matrix (excluding the diagonal) is used to avoid redundant pair comparisons, since the distance matrix is symmetric.

Compute mean semantic variance

return float(np.mean(pairwise_distances))

Finally, the function averages all pairwise distances to produce a single semantic variance score. This score quantifies the overall diversity of concepts within the section, providing a key input for density and richness evaluation.

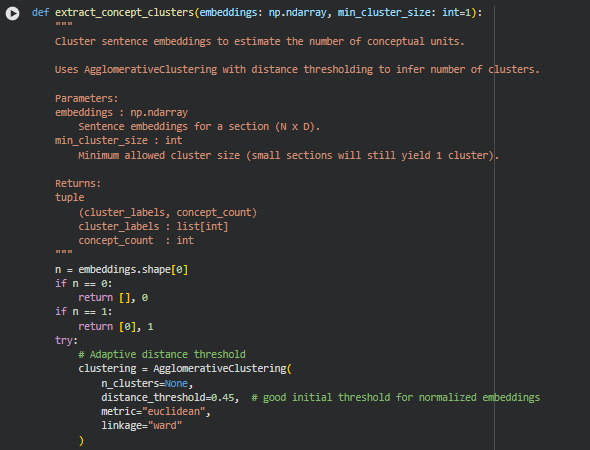

Function extract_concept_clusters

Summary

This function estimates the number of distinct conceptual units within a section by clustering its sentence embeddings. Using agglomerative hierarchical clustering, it groups semantically similar sentences into clusters, where each cluster represents a coherent concept. The resulting concept count helps quantify conceptual density and supports the assessment of information richness in content sections.

Key Code Explanations

Handle small sections

n = embeddings.shape[0]

if n == 0:

return [], 0

if n == 1:

return [0], 1

This ensures that edge cases with no sentences or a single sentence return meaningful defaults. An empty section has no clusters, and a single-sentence section is treated as one concept.

Agglomerative clustering setup

clustering = AgglomerativeClustering(

n_clusters=None,

distance_threshold=0.45, # good initial threshold for normalized embeddings

metric=”euclidean”,

linkage=”ward”

)

labels = clustering.fit_predict(embeddings)

AgglomerativeClustering is configured with distance thresholding rather than a fixed number of clusters. Sentences closer than the threshold in embedding space are merged, while more distant sentences form separate clusters. This approach automatically adapts the cluster count based on the semantic diversity of the section.

Filter valid clusters

unique, counts = np.unique(labels, return_counts=True)

valid_clusters = [u for u, c in zip(unique, counts) if c >= min_cluster_size]

concept_count = max(1, len(valid_clusters))

Clusters smaller than min_cluster_size are ignored, avoiding noise from very small groups. The concept count is at least 1, ensuring that each section has at least one conceptual unit.

Fallback mechanism

except Exception as e:

logging.warning(f”[extract_concepts] clustering failed: {e}”)

return np.array([-1] * n), 1

If clustering fails for any reason, the function safely treats the entire section as a single concept, maintaining robustness in the pipeline. This ensures downstream processes relying on concept counts continue without interruption.

Function compute_concepts_per_100_words

Summary

This function calculates the conceptual density of a text section by measuring the number of identified concepts per 100 words. It provides a normalized metric to compare sections of different lengths, helping to understand how densely information is packed. A higher value indicates more concepts per word, reflecting higher informational richness or complexity, while a lower value suggests sparse conceptual coverage.

Key Code Explanations

Handle division by zero

if word_count == 0:

return 0.0

This prevents errors in sections with zero words, ensuring the function always returns a valid numeric value.

Compute normalized concept ratio

return (concept_count / word_count) * 100

The ratio scales the raw concept count to a per-100-words basis, creating a standardized measure of semantic density that can be compared across sections regardless of length. This metric is a core feature in evaluating content balance and readability.

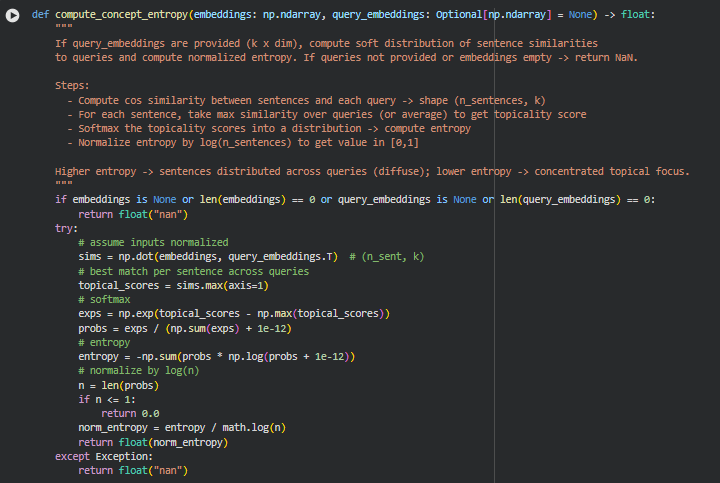

Function compute_concept_entropy

Summary

This function calculates a normalized entropy score to quantify how evenly a section’s sentences align with a set of query embeddings. If query embeddings are provided, it measures the topical distribution of content. A low entropy indicates sentences are focused around a few key topics, while high entropy suggests content is more diffuse and spread across multiple topics. This metric is useful to evaluate content coherence and alignment with target queries.

Key Code Explanations

Cosine similarity calculation

sims = np.dot(embeddings, query_embeddings.T) # (n_sent, k)

topical_scores = sims.max(axis=1)

This computes sentence-to-query similarity and extracts the highest match per sentence, producing a topical relevance score for each sentence.

Softmax and probability distribution

exps = np.exp(topical_scores – np.max(topical_scores))

probs = exps / (np.sum(exps) + 1e-12)

The softmax converts raw topical scores into a probability distribution over sentences, ensuring they sum to 1 while stabilizing against numerical overflow.

Entropy calculation and normalization

entropy = -np.sum(probs * np.log(probs + 1e-12))

norm_entropy = entropy / math.log(n)

Entropy quantifies the spread of topicality across sentences. Dividing by log(n) normalizes the value to [0, 1], allowing comparison between sections of different lengths. A higher normalized entropy indicates a less focused, more diverse topical distribution.

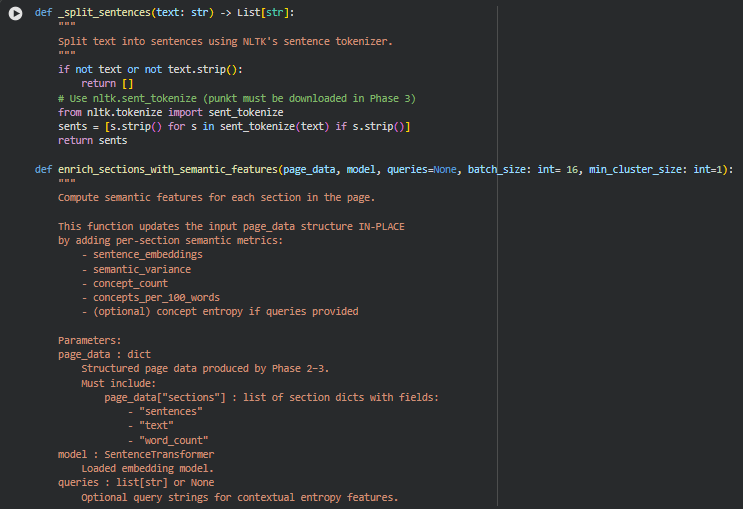

Function _split_sentences

Summary

This helper function splits a block of text into individual sentences using NLTK’s sentence tokenizer. It ensures that empty strings or whitespace-only segments are removed. The output is a clean list of sentences, which is essential for downstream semantic embedding and analysis.

Function enrich_sections_with_semantic_features

Summary

This function computes semantic-level metrics for each section in a page. It updates the page_data dictionary in-place and adds a semantic field to each section, including:

- sentence_embeddings (optional, stored internally)

- semantic_variance – measures diversity of sentence meanings

- concept_count – number of conceptual clusters

- concepts_per_100_words – conceptual compression ratio

- concept_entropy – topical focus relative to optional queries

This phase captures the conceptual richness and coherence of the content, complementing linguistic features.

Key Code Explanations

Embedding queries for entropy calculation

if queries: query_emb = embed_sentences(queries, model)

When query strings are provided, they are converted to embeddings. These are later used in compute_concept_entropy to measure how sentences distribute across query topics.

Sentence embeddings per section

embeddings = embed_sentences(sentences, model, batch_size)

All sentences in a section are embedded in batches. Normalized embeddings allow consistent cosine similarity calculations for semantic variance and clustering.

Semantic variance computation

sv = compute_semantic_variance(embeddings)

Measures the average pairwise cosine distance between sentence embeddings. A higher variance indicates more semantic diversity within the section.

Concept clustering and compression

labels, concept_count = extract_concept_clusters(embeddings, min_cluster_size)

concepts_per_100 = compute_concepts_per_100_words(concept_count, word_count)

Sentences are clustered to identify distinct conceptual units. The number of concepts relative to word count provides a conceptual density metric, helping evaluate if content is information-dense or verbose.

Concept entropy (if queries provided)

if query_emb is not None:

entropy = compute_concept_entropy(embeddings, query_emb)

Entropy quantifies how sentences are topically distributed across provided queries. Low entropy signals focused content; high entropy suggests dispersed coverage.

Default handling for empty sections

if not sentences:

section[“semantic”] = {

“semantic_variance”: 0.0,

“concept_count”: 0,

“concepts_per_100_words”: 0.0,

“concept_entropy”: float(“nan”)

}

continue

Ensures that sections without text or sentences still have a consistent semantic structure, preventing errors in downstream aggregation.

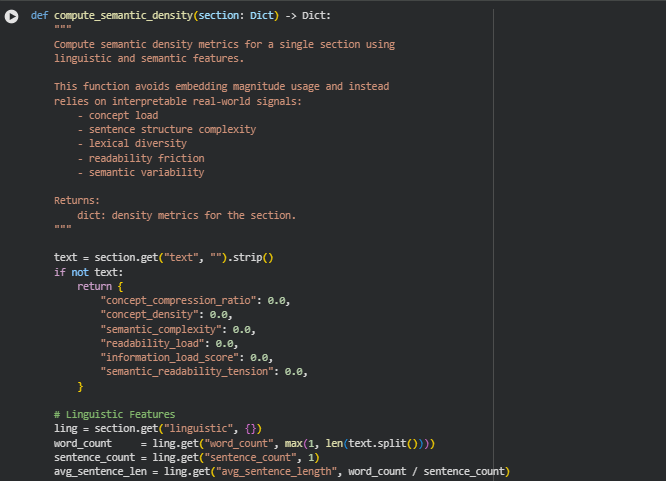

Function compute_semantic_density

Summary

This function computes semantic density metrics for a single content section by combining linguistic and semantic features. It avoids using raw embedding magnitudes and instead relies on interpretable proxies such as:

- Concept load and distribution (concept_count, concepts_per_100_words)

- Sentence structure complexity (avg_sentence_length, long_sentence_ratio)

- Lexical diversity (percent_rare, type_token_ratio)

- Readability friction (linguistic complexity)

- Semantic variability (semantic_variance, concept_entropy)

The function returns a structured dictionary capturing concept compression, semantic complexity, readability load, and combined information load, providing a practical measure of how dense and conceptually rich a section is.

Key Code Explanations

Linguistic complexity calculation

linguistic_complexity = (

avg_sentence_len * (1 + rare_ratio + ttr + passive_ratio + long_sentence_ratio)

)

This line combines multiple linguistic signals into a single normalized complexity metric. The average sentence length is amplified by lexical rarity, type-token ratio, passive voice usage, and proportion of long sentences. It represents the effort needed to parse and understand the text.

Semantic density metrics

concept_compression_ratio = concept_count / max(sentence_count, 1)

concept_density = concept_count / word_count

semantic_complexity = semantic_variance * concept_entropy

- concept_compression_ratio measures how many concepts are packed per sentence.

- concept_density measures concepts relative to overall word count, providing a conceptual concentration measure.

- semantic_complexity multiplies the variability of sentence meanings by the distribution across topics (concept_entropy), capturing diversity and topical dispersion.

Combined information load

readability_load = linguistic_complexity

information_load_score = semantic_complexity * readability_load

semantic_readability_tension = semantic_complexity / max(avg_sentence_len, 1)

- readability_load represents the text’s inherent parsing difficulty.

- information_load_score combines semantic complexity and readability, giving an overall information density metric.

- semantic_readability_tension highlights sections where semantic load may conflict with readability, serving as a diagnostic for dense or hard-to-read content.

Concepts per 100 words

“ concepts_per_100 = sem.get(“concepts_per_100_words”, (concept_count / max(word_count, 1)) * 100) `

Provides a stable, interpretable measure of conceptual compression that is normalized to a standard text length, useful for comparing sections or pages.

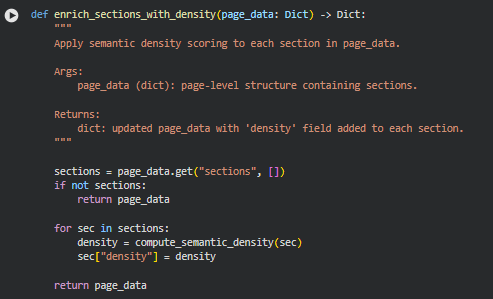

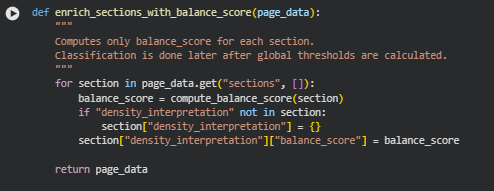

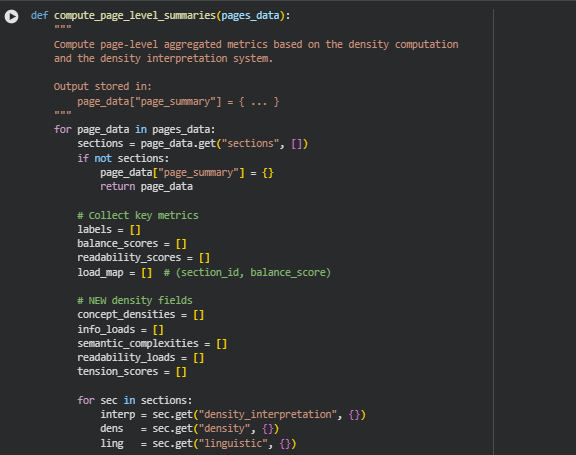

Function enrich_sections_with_density

Summary